Internet Protocol

From Citizendium - Reading time: 13 min

The Internet Protocol (IP) is the highly resilient protocol that carries messages across the internet: each message is first broken into smaller packets (each with the endpoint address attached), the packets then move among many mid-points by unpredictable routes, and finally they are reassembled into the original message at the endpoint. IP version 4 (IPv4) dates from 1980 but lacked enough addresses for the entire world; its designated successor, IP version 6 (IPv6), was first specified in 1995 and revised in 1998.

This article puts the major versions and sub-versions of the basic protocol into a historical context; see Internet Protocol version 4 and Internet Protocol version 6 for the details of design. Presented here are the common aspects of designing a protocol for the internetworking layer of the Internet Protocol Suite.

- See also: Internet Protocol version 4

- See also: Internet Protocol version 6

While there were earlier laboratory versions, the first deployed version, Internet Protocol version 4 (IPv4), was specified in January 1980. For reasons dealing with locators, discussed below, that first specification proved inadequate in under two years.[1] A slight variation, published in 1981, has been the standard for many years; it bought about a decade of utility before serious limitations became obvious.[2] Internet Protocol version 6 (IPv6)[3] is the newer standard.

Architectural goals

It was a basic Internet architectural assumption that the intelligence should be at the edges of the network, while the internal forwarding elements should have no memory of previous packets or connections, and should use the IP level simply to decide how to forward a packet one hop closer to the destination. The lack of memory, or statelessness, is very different from the assumption of classic telephone networks, which are more concerned with committing resources to a call, and verifying that an end-to-end path exists, than with forwarding information on that path.

Experience showed that the ideal lies somewhere in the middle. IP can remain stateless, and the decision of whether an end-to-end service is stateful or not is a decision for each application. IP, however, is a hop-by-hop, not an end-to-end, protocol.

Address structure

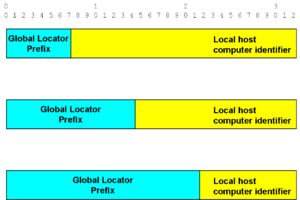

There are two fundamental parts of an address: the locator and the identifier. While these concepts were used informally, the results of discussions in the early nineties were published in a hazy yet provocative view of the future. [4]

Addresses are 32 bits long in IPv4 and 128 bits long in IPv6. Computers process them as strings of bits, but the human-readable notation for the two versions is different. The only common characteristic is that the address alone is not enough for most purposes; there also must be a second value that specifies how many bits of the address, starting from the leftmost or most significant bit, should be treated as prefix. At various times in the life of a packet, relatively more or fewer bits will be evaluated as prefix, just as a different amount of physical information is needed to fly from city to city than is needed to drive to a specific building.

While the details of address representation are specific to the version, current practice is to write in the form:

address /prefix length

An IPv4 address might, then, be written 128.0.2.1/24, while an IPv6 address might be written 2001:0db8:0001:1001:0000:0000:0000:0001/64.
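As a hedged illustration, the notation above can be parsed with Python's standard `ipaddress` module. The variable names are chosen only for this sketch; the addresses are the two examples from the text.

```python
import ipaddress

# Parse the two example addresses, each written as address/prefix length.
v4 = ipaddress.ip_interface("128.0.2.1/24")
v6 = ipaddress.ip_interface("2001:0db8:0001:1001:0000:0000:0000:0001/64")

# The network is what remains when only the prefix bits are kept.
v4_prefix = str(v4.network)
v6_prefix = str(v6.network)

# Total address length in bits for each version.
v4_bits = v4.ip.max_prefixlen
v6_bits = v6.ip.max_prefixlen

for iface in (v4, v6):
    print(f"IPv{iface.version}: address {iface.ip}, prefix {iface.network}")
```

The module prints IPv6 addresses in compressed form, so the long example appears with its leading zeros and the run of zero groups shortened.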

Every IP packet has a source address and a destination address. Within the routing domain in which these addresses are used, the addresses must be unique. In the global Internet, blocks of addresses are delegated from the Internet Corporation for Assigned Names and Numbers, which further delegates blocks of addresses to Regional Internet Registries at a roughly continental level.

Locator

By analogy, a locator tells how to get somewhere, such as a street name in geography, or country and area code in telephony. Routers make decisions based on the locator, until they are on the final "street" and need to look for the "house number".

In the very first implementations of the Internet Protocol, on the research ARPANET, it was assumed that there would be a very small number of networks, each of which had a big mainframe computer that would manage lots and lots of individual computers. No one thought there would ever be more than the few tens of computers in the early ARPANET, so a locator space that seemed generous was provided: it would allow for more than 200 interconnected computers!

That was indeed the assumption of the first IP standard, [1] in which a fixed 8-bit locator field was assigned.

For purposes of understanding, let us consider some slightly different concerns that came up very soon after the original specification. Assume that there were a very few intercontinental links, and the intercontinental routers really only needed to look at the first three bits of the prefix to know which line to use for an outgoing packet. Three bits give 8 possible values, which is more than the number of continents — and the penguins were not likely to be on the ARPANET (Linux had not yet been invented), so the Antarctica code might be assigned to North America, which had most of the computers, and the 8th code could go to whatever continent first had more than 32 computers.

Further, breaking up the prefix by content allowed delegation of the assignment of the computer number within the continental block. Let us call the continental-level administrators Regional Internet Registries.
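The thought experiment above can be sketched in a few lines. This is purely illustrative: the function names and the split of the 8-bit locator into a 3-bit continental code and a 5-bit computer number are assumptions taken from the example, not from any deployed protocol.

```python
def continent_code(locator: int) -> int:
    """Return the three high-order bits of a hypothetical 8-bit locator."""
    if not 0 <= locator <= 0xFF:
        raise ValueError("locator must fit in 8 bits")
    return locator >> 5


def computer_number(locator: int) -> int:
    """Return the five low-order bits, assigned within the continental block."""
    if not 0 <= locator <= 0xFF:
        raise ValueError("locator must fit in 8 bits")
    return locator & 0b11111


# An intercontinental router would look only at continent_code();
# the continental administrator would assign computer_number().
example = 0b10100110
print(continent_code(example), computer_number(example))
```

Five remaining bits give 32 values per continental code, which is where the threshold of 32 computers in the example comes from.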

Before long, however, it became obvious that there would be many more computers, even per continent, than 255. Unfortunately, the address length was already fixed at 32 bits. It was possible to stay with the existing length, at first, by playing some number assignment tricks, the details of which are not important here.

What is important is that there could be three classes of fixed prefix length: one for the small number of institutions with very large quantities of computers (i.e., needing 24 identifier bits), one for the larger number of organizations that did not have as many computers and could get by with a 16-bit identifier field, and one for the even more common case where 8 identifier bits were enough. We now use a convention to refer to the length of the prefix by suffixing /prefixlength (decimal) to the address, so these were, in effect, /8, /16, and /24 address classes.[2] This technique, classful addressing and routing, is now considered obsolete.
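As a minimal sketch of the "number assignment tricks", the historical class of an IPv4 address was inferred from its leading bits (0 for the /8 class, 10 for /16, 110 for /24). The function name is hypothetical, and the code is shown only to illustrate the obsolete classful rule.

```python
import ipaddress


def classful_prefix_length(address: str) -> int:
    """Infer the obsolete classful prefix length from the leading address bits."""
    first_octet = int(ipaddress.IPv4Address(address)) >> 24
    if (first_octet & 0b10000000) == 0b00000000:   # leading bit 0: class A
        return 8
    if (first_octet & 0b11000000) == 0b10000000:   # leading bits 10: class B
        return 16
    if (first_octet & 0b11100000) == 0b11000000:   # leading bits 110: class C
        return 24
    raise ValueError("not a class A, B, or C address")


for addr in ("10.1.2.3", "128.0.2.1", "192.0.2.1"):
    print(addr, "/", classful_prefix_length(addr))
```

Because the length is derived from the address value itself, a router needed no extra field to learn it, which is also why the scheme could not express any other prefix length.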

Local versus remote principle

One of the original architectural principles of the Internet, the local versus remote problem, comes up in this context. If the host to which you want to send has the same prefix, you are on the same layer 2 (L2) link and can communicate with it directly, once you know its L2 address. If the destination has a different prefix, you must use a router to reach it.
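The local versus remote decision amounts to a prefix comparison. The following is a minimal sketch using Python's standard `ipaddress` module; the function name `is_local` and the sample addresses are assumptions for illustration.

```python
import ipaddress


def is_local(own_interface: str, destination: str) -> bool:
    """Return True if the destination shares the sender's prefix (same link)."""
    iface = ipaddress.ip_interface(own_interface)
    return ipaddress.ip_address(destination) in iface.network


# Same /24 prefix: deliver directly on the link.
print(is_local("128.0.2.1/24", "128.0.2.77"))
# Different prefix: hand the packet to a router.
print(is_local("128.0.2.1/24", "128.0.3.77"))
```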

L2 addressing is trivial on point-to-point links; there is only the "other end". On broadcast-capable local area networks, the Address Resolution Protocol (ARP) provides the solution in IPv4 networks: the client broadcasts the IP address of the desired destination, in a frame with its own L2 address as the source; the destination hears it, and sends back an ARP reply with its MAC address.

There is no absolutely general mechanism for a nonbroadcast multiaccess (NBMA) medium or transmission system, such as frame relay and Asynchronous Transfer Mode (ATM). Such a system may be set up in a hub-and-spoke topology, with only the hub able to broadcast (or multicast), if broadcast is available at all. A common method is to configure a manual L2-L3 mapping, although there are inverse ARP protocols for some NBMA media.[5]

IPv6 takes a different approach, neighbor discovery, where a host can learn passively from the announcements of all hosts sharing the link.

Prefix aggregation

Remember that "prefix" is another name for "locator". Think of international telephone calls, where, as soon as the local telephone switch recognizes the international prefix, it will look no farther than the number of digits needed to reach that country. In the North American Numbering Plan for telephones, the basic address is 10-digit:

- 3 area code (between a state and a part of a city, based on user population)

- 3 exchange (a locality within a reasonably small area, such as a city); a physical telephone switching office will rarely contain the switches for more than five exchanges. Exchanges are unique within area codes, but each area code can have a 945 exchange.

- 4 line, unique within the exchange. Every exchange can have a line 1212.

When telephone switches look at only the international part of a number, they are aggregating all exchange and line numbers, or national equivalent. Different national numbering plans have different structures, but the international switch does not need to understand it.

In an analogous manner, major Internet routers do a great deal of prefix aggregation. They try to make decisions on a relatively few bits starting at the left, which might identify a large service provider or enterprise. Once the Internet routers deliver packets to the destination indicated by the aggregate, the routers in that location now look at more bits of the prefix, just as once an international telephone call reaches a country, the national switch looks at the next level of detail, area code in North America. Only when the call reaches the area code level of switch does the telephone routing process look for the additional information pointing to an exchange. Only at the final exchange does the switch look for the specific line.

Had the actual implementation used the continental split, the example at the very beginning of the locator section, with its fixed-length /8 prefix field, could have aggregated addresses: the continental link could be selected on just the three high-order bits of the address. Looking only at the high-order bits is called aggregation; it collapses the "phone number" toward the left. Aggregation, especially in the set of IPv4 techniques called classless inter-domain routing (CIDR), conserves space in routing tables and the processing required to maintain them. For CIDR to work, there had to be a way to convey between routers the prefix length to be evaluated on a specific address, a length that could vary with context. Since this could not be done in the address itself, routing protocols were modified to be classless, such that they carry length information along with every address. Because the length information was put into the routing protocol rather than into every address, the overhead was not unreasonable.

CIDR is more than the technical transmission of prefix lengths; it also includes an administrative doctrine that allocates sufficient, but not excessive, address space to organizations. [6]

The true length information had to be sent through the Internet, so one of the major changes in the current version 4 of the Border Gateway Protocol was CIDR support; all addressing information transported by BGP is coupled with context-dependent prefix length information.
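A small sketch of aggregation, assuming Python's standard `ipaddress` module: four contiguous /24 prefixes collapse into one /22, so a distant router needs one table entry instead of four. The specific prefixes are arbitrary examples from private address space.

```python
import ipaddress

# Four contiguous /24 prefixes plus one that is not adjacent to them.
specifics = [ipaddress.ip_network(f"10.0.{i}.0/24") for i in range(4)]
specifics.append(ipaddress.ip_network("10.0.8.0/24"))

# collapse_addresses merges prefixes that together form a shorter prefix.
aggregates = list(ipaddress.collapse_addresses(specifics))

# Each route is an (address, prefix length) pair, as a classless
# routing protocol would carry it.
routes = [(str(n.network_address), n.prefixlen) for n in aggregates]
print(routes)
```

The non-adjacent /24 stays a separate entry, which illustrates why address allocation policy matters as much as the protocol mechanics: only prefixes assigned contiguously can be aggregated.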

Prefix deaggregation

Originally, the assumption was that any given site or organization would be happy with a single large "flat" address space, managed by a single large mainframe computer. This assumption was shattered by several innovations: personal computers and servers needing at least enterprise-unique addresses, local area networks needing prefixes that could be part of the prefix seen by the rest of the public Internet but needing uniqueness within the enterprises, and organizations having multiple sites with direct connections (i.e., not accessible via the public Internet) between them.

For simplicity, assume that the institutions that needed to create extended prefixes, called subnets, all had an /8 prefix. Further, assume that their needs all could be met with 8 bits of extended prefix (i.e., giving them 255 separate media seen only inside the organization), and that none of those media needed more than 8 bits of identifier. That is a simplification of the technique actually adopted, called fixed length subnetting. Since addresses have no way to carry the length of the subnet field, it was necessary to have it statically defined within routers; the early classful routing protocols had no way to carry the subnet length to other routers.

Originally, the number of bits in the subnet extension was fixed, just as the number of prefix bits in the three classes was fixed. This led to inefficiencies, the most extreme being that if the identifier field were 8 bits, allowing 254 hosts (the all-zeroes and all-ones values have special purposes), 252 addresses would be wasted on every point-to-point line that needed a unique prefix.

Analogously to CIDR, techniques were developed so that different subnet lengths could be used within the same routing domain. Static definition was not enough; interior routing protocols, not just BGP, were modified to carry lengths.
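The arithmetic behind the waste, and what variable subnet lengths recover, can be checked with a short sketch. The 10.1.2.0/24 prefix is an arbitrary example, and the choice of /30 for point-to-point links is an assumption of this illustration (it leaves exactly two usable host addresses).

```python
import ipaddress

# One fixed-length subnet with an 8-bit identifier field.
fixed = ipaddress.ip_network("10.1.2.0/24")
usable_fixed = fixed.num_addresses - 2   # minus all-zeroes and all-ones
wasted_on_p2p = usable_fixed - 2         # a point-to-point line has two ends

# With variable-length subnets, the same /24 can be cut into /30 links.
p2p_links = list(fixed.subnets(new_prefix=30))
usable_per_link = p2p_links[0].num_addresses - 2

print(usable_fixed, wasted_on_p2p, len(p2p_links), usable_per_link)
```

Under these assumptions a single /24 serves dozens of point-to-point lines instead of one, provided the routing protocol carries each subnet's length.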

Identifiers

Think of a basic locator address as a street, and the identifier as the house number on it. In IPv4, the numbering may be static (i.e., administrator defined) or dynamic (i.e., next available number from a pool). IPv6 also has static and dynamic identifier assignment, but its much longer identifier field length allows autoconfiguration using a physical identifier defined as unique. This identifier, on local area networks, is the 48-bit medium access control address.
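One common way to turn a 48-bit MAC address into a 64-bit IPv6 identifier is the modified EUI-64 format: the bytes `ff:fe` are inserted in the middle and the universal/local bit of the first byte is inverted. The sketch below assumes a /64 prefix; the function names and the sample MAC address are hypothetical, and many current hosts use randomized identifiers instead.

```python
import ipaddress


def eui64_interface_id(mac: str) -> bytes:
    """Build a modified EUI-64 identifier from a colon-separated 48-bit MAC."""
    octets = bytearray(int(part, 16) for part in mac.split(":"))
    if len(octets) != 6:
        raise ValueError("expected a 48-bit MAC address")
    octets[0] ^= 0x02  # invert the universal/local bit
    return bytes(octets[:3]) + b"\xff\xfe" + bytes(octets[3:])


def autoconfigured_address(prefix: str, mac: str) -> ipaddress.IPv6Address:
    """Combine a /64 locator with the identifier derived from the MAC."""
    network = ipaddress.IPv6Network(prefix)
    if network.prefixlen != 64:
        raise ValueError("this sketch assumes a /64 prefix")
    identifier = int.from_bytes(eui64_interface_id(mac), "big")
    return ipaddress.IPv6Address(int(network.network_address) | identifier)


print(autoconfigured_address("2001:db8:1:1001::/64", "00:1a:2b:3c:4d:5e"))
```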

Rethinking requirements

One of the problems of Internet Protocol development was that it started as a research project, when there were no local area networks and no personal computers. The first version of the Internet Protocol could interconnect 255 sites, more than anyone thought would be necessary. Large computers at the sites were expected to know the locations of thousands of computers.

The need for larger addresses

Since the original IP had no way to indicate the prefix length, various administrative conventions were used to infer the length from the value of the first few bits, but, by the early 1990s, this relatively inflexible structure was wasting a great amount of address space. Allocations tended to be of two sizes: too large and too small. In particular, the Internet was running out of /16 prefixes.

An alternative was to give out more /24 prefixes, and give organizations as many as they needed as long as they could not justify a /16, but this strained what was then a precious resource: memory space in the routing table of routers. Each unique global prefix needed its own "slot" in the table. There was a race between running out of /16 prefixes to assign, and assigning more /24 prefixes than the existing routers could carry in their tables. The great mass of /24 addresses, which took up about half of the global routing table, was informally called the "Swamp".

It also was observed that about half of the prefixes in the Swamp significantly underutilized their identifier space, having 16 or fewer computers. That part of the Swamp became known as the "Toxic Waste Dump".

A number of "just in time" extensions managed to make address allocation more efficient,[7] but more and more limits appeared, due to the 32-bit total size of IPv4 addresses. While a great many computers could be counted serially within a 32-bit number, the need to split the address into locator and identifier meant that some of the potential numbers were not usable. More and more elaborate workarounds were put into service, but only so much could be done with 32 bits. The management of the address space became especially challenging for Internet Service Providers.[8]

Need for a new protocol?

It was not, at first, a given that an entirely new Internet Protocol was needed. After various user communities expressed their needs, four main proposals emerged, with two combining into what became the new Internet starship, IP version 6.

When the problem was put out to the Internet engineering community, the Internet Engineering Task Force solicited proposals for "IP, the Next Generation", in conscious imitation of the second Star Trek series.[9]

One of the proposals to deal with the problem was relatively modest: IPv4 with larger addresses, called TUBA (TCP and UDP with Bigger Addresses).[10] The design finally accepted, however, merged two of the four proposals, removed some obsolete IPv4 features, and added others needed for more modern networking.

By 1993, it was clear that IPv4 was reaching its limits, and not only because of the address size. Aspects of the header were harder and harder to process at increasing line speeds. The first IPv6 specification was published in 1995, but the Internet research and engineering communities continued to refine it, and as of 2009 it was only in limited deployment, with some outstanding issues.[11] The cited first specification has been superseded; see Internet Protocol version 6 for more current work.

The much larger 128 bit addresses, in IPv6, are not so large because we expect to have 128 bits' worth of unique computer addresses. IPv6 addresses are so long because lengthening the address greatly simplifies the work of separating the locator from the identifier, and having a hierarchy of levels of locators/prefixes.

Address scope

- See also: Locality of networks

The scope of an address describes the area in which it must be unique.

Intranets have no need to have addresses unique with respect to the global Internet, and, indeed, there are blocks in IPv4 and IPv6 that are defined not to be routable in the global system. One way to extend the lifetime of the increasingly scarce IPv4 address space is to use "registered" IPv4 addresses only on the Internet-facing side of network address translators, and use private space in the enterprise side.
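Whether an address falls in one of the non-globally-routable blocks can be checked with Python's standard `ipaddress` module. This is a hedged sketch: the function name `scope` is hypothetical, and the library's `is_private` test also flags some other special-purpose ranges, not only the blocks intended for intranets.

```python
import ipaddress


def scope(address: str) -> str:
    """Classify an address as 'private', 'global', or 'other' (rough sketch)."""
    ip = ipaddress.ip_address(address)
    if ip.is_private:
        return "private"
    if ip.is_global:
        return "global"
    return "other"


for addr in ("10.1.2.3", "192.168.0.10", "fd00::1", "8.8.8.8"):
    print(addr, scope(addr))
```

An enterprise behind a network address translator would number its hosts from the private blocks and expose only registered, global addresses on the Internet-facing side.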

Extranets may use private space if the address administration does not become overwhelming; extranets of the size of U.S. military networks such as NIPRNET, SIPRNET and JWICS have unique address space that is delegated by military administrators. In practice, there is no conflict even if every address on the public Internet were duplicated, because the secure networks have an "air gap" to the Internet; there is no direct connectivity at the IP level.

IP address management

IP address management (IPAM) has become a commercial term for a problem that large networks, both IPv4 and IPv6, have faced for years. The problem covers the limited set of cases where manual assignment of addresses remains necessary, the most common scalable method using the Dynamic Host Configuration Protocol, and a new technique peculiar to Internet Protocol version 6, stateless address autoconfiguration (SLAAC).

IPAM can be viewed at several levels: Internet-wide resource management, deployment, and operational support. It is not always practical to keep a given address, even at the address block level; renumbering is often a problem. One of the first industry documents to formalize renumbering, in an Internet Protocol version 4 context, was RFC 2071, "Network Renumbering Overview: Why would I want it and what is it anyway?"[12] The immediate driver for this guide was an interim shortage of IPv4 address space through inefficient usage, as opposed to the true exhaustion of new address space expected around the year 2011.

Different renumbering concepts and techniques apply at the administrative, application server, infrastructure server, end-user host, router, and security levels. RFC 2072 dealt with the specific case of IPv4 router renumbering and things directly affected by it; it is primarily concerned with manual methods.[13] RFC 4192, which updates RFC 2072, focused on IPv6, described more automated methods, and gave more consideration to devices besides routers.[14]

There are a number of commercial products to assist in IPAM, although their use is most common in medium to large enterprises. Service providers tend to have sufficiently large and specialized requirements that they are apt to build their own,[8] and small enterprises and end users may be able to get by with manual management. In the IPv6 environment, assignment may become easier, but troubleshooting can be harder without the use of techniques such as Domain Name System dynamic update.

IP is transmission medium agnostic

IP architects call it "agnostic" as to the underlying medium access control that manages shared access to the medium, the physical layer protocol managing the access of single devices to the medium, and to the transmission medium. It commonly runs over multimegabit or gigabit links, but has been demonstrated to operate, in conjunction with the Transmission Control Protocol, over avian media (i.e., carrier pigeons). [15][16][17] IP provides computers with communicable addresses that are globally unique.

IP is a connectionless protocol and provides best-effort delivery for its data payload, making no guarantees with respect to reliability. Without notification to either the sender or receiver, packets may become corrupted, lost, reordered, or duplicated. This design reduces the complexity of Internet routers. When reliable delivery is needed, the Internet Protocol Suite has mechanisms at the end-to-end (e.g., Transmission Control Protocol) or application (e.g., Hypertext Transfer Protocol) levels.

References

- [1] Postel, J. (January 1980), DoD Standard Internet Protocol, Internet Engineering Task Force, RFC 760

- [2] Postel, J. (September 1981), Internet Protocol: DARPA Internet program protocol specification, Internet Engineering Task Force, RFC 791

- [3] Deering, S. & Hinden, R. (December 1998), Internet Protocol, Version 6 (IPv6) Specification, Internet Engineering Task Force, RFC 2460

- [4] I. Castineyra, N. Chiappa, M. Steenstrup (August 1996), The Nimrod Routing Architecture, RFC 1992

- [5] T. Bradley, C. Brown, A. Malis (September 1998), Inverse Address Resolution Protocol, RFC 2390

- [6] Hubbard, K. et al. (November 1996), Internet Registry IP Allocation Guidelines, Internet Engineering Task Force, RFC 2050

- [7] V. Fuller, T. Li, J. Yu, K. Varadhan (September 1993), Classless Inter-Domain Routing (CIDR): an Address Assignment and Aggregation Strategy, RFC 1519

- [8] Berkowitz, Howard C. (November 1998), "Good ISPs Have No Class: Addressing Nuances and Nuisances", North American Network Operators Group

- [9] S. Bradner, A. Mankin (December 1993), IP: Next Generation (IPng) White Paper Solicitation, RFC 1550

- [10] R. Callon (June 1992), TCP and UDP with Bigger Addresses (TUBA), A Simple Proposal for Internet Addressing and Routing, RFC 1347

- [11] S. Deering, R. Hinden (December 1995), Internet Protocol, Version 6 (IPv6) Specification, RFC 1883

- [12] Paul Ferguson and Howard C. Berkowitz (January 1997), Network Renumbering Overview: Why would I want it and what is it anyway?, Procedures for Internet and Enterprise Renumbering Working Group (PIER), Internet Engineering Task Force, RFC 2071

- [13] Howard C. Berkowitz (January 1997), Router Renumbering Guide, Procedures for Internet and Enterprise Renumbering Working Group (PIER), Internet Engineering Task Force, RFC 2072

- [14] F. Baker, E. Lear, R. Droms (September 2005), Procedures for Renumbering an IPv6 Network without a Flag Day, Internet Engineering Task Force, RFC 4192

- [15] Waitzman, D. (April 1 1990), Standard for the transmission of IP datagrams on avian carriers, Internet Engineering Task Force, RFC 1149

- [16] Waitzman, D. (April 1 1999), IP over Avian Carriers with Quality of Service, Internet Engineering Task Force, RFC 2549

- [17] Bergen Linux Users Group (April 28 2001, 12:00), The highly unofficial CPIP WG
