Bayesian optimization

From HandWiki - Reading time: 11 min

Bayesian optimization is a sequential design strategy for global optimization of black-box functions[1][2][3] that does not assume any functional form. It is usually employed to optimize expensive-to-evaluate functions. With the rise of artificial intelligence in the 21st century, Bayesian optimization algorithms have found prominent use in machine learning problems for optimizing hyperparameter values.[4][5]

History

The term is generally attributed to Jonas Mockus, who coined it in a series of publications on global optimization in the 1970s and 1980s.[6][7][1]

Early mathematical foundations

From the 1960s to the 1980s

The earliest idea of Bayesian optimization[8] emerged in 1964 in a paper by the American applied mathematician Harold J. Kushner,[9] “A New Method of Locating the Maximum Point of an Arbitrary Multipeak Curve in the Presence of Noise”. Although the paper did not propose Bayesian optimization as such, it introduced a method for locating the maximum point of an arbitrary multipeak curve in a noisy environment, which provided an important theoretical foundation for later Bayesian optimization.

By the 1980s, the framework now used for Bayesian optimization had been explicitly established. In 1978, the Lithuanian scientist Jonas Mockus,[10] in his paper “The Application of Bayesian Methods for Seeking the Extremum”, discussed how to use Bayesian methods to find the extreme value of a function under various uncertain conditions. In this paper, Mockus first proposed the Expected Improvement (EI) principle, which is one of the core sampling strategies of Bayesian optimization. The criterion balances exploration and exploitation by choosing the next point that maximizes the expected improvement over the best value observed so far. Because of the usefulness and profound impact of this principle, Jonas Mockus is widely regarded as the founder of Bayesian optimization. Although EI is one of the earliest core sampling strategies for Bayesian optimization, it is not the only one; others include Probability of Improvement (PI) and Upper Confidence Bound (UCB).[11]

From theory to practice

In the 1990s, Bayesian optimization began to transition gradually from pure theory to real-world applications. In 1998, Donald R. Jones[12] and his coworkers published a paper titled “Efficient Global Optimization of Expensive Black-Box Functions”.[13] In this paper, they combined a Gaussian process (GP) surrogate model with the Expected Improvement (EI) criterion proposed by Jonas Mockus in 1978, an approach known as Efficient Global Optimization (EGO). Through the work of Jones and his colleagues, Bayesian optimization came into wider use in fields such as computer science and engineering. However, its computational cost relative to the computing power available at the time still limited its development to a large extent.

In the 21st century, with the gradual rise of artificial intelligence and robotics, Bayesian optimization has been widely used in machine learning and deep learning, and has become an important tool for hyperparameter tuning.[14] Companies such as Google, Facebook and OpenAI have added Bayesian optimization to their deep learning frameworks to improve search efficiency. However, Bayesian optimization still faces many challenges. For example, because it typically uses a Gaussian process[15] as its surrogate model, training becomes slow and computationally expensive when there is a lot of data, since exact Gaussian-process inference scales cubically with the number of observations. This makes it difficult for the method to work well in more complex settings such as drug development and medical experiments.

Strategy

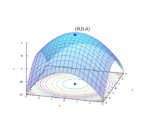

Bayesian optimization is used on problems of the form $\max_{x \in A} f(x)$, with $A$ being the set of all possible parameters $x$, typically with less than or equal to 20 dimensions for optimal usage ($A \subseteq \mathbb{R}^d$, $d \le 20$), and whose membership can easily be evaluated. Bayesian optimization is particularly advantageous for problems where $f(x)$ is difficult to evaluate due to its computational cost. The objective function, $f$, is continuous and takes the form of some unknown structure, referred to as a "black box". Upon its evaluation, only $f(x)$ is observed and its derivatives are not evaluated.[17]

Since the objective function is unknown, the Bayesian strategy is to treat it as a random function and place a prior over it. The prior captures beliefs about the behavior of the function. After gathering the function evaluations, which are treated as data, the prior is updated to form the posterior distribution over the objective function. The posterior distribution, in turn, is used to construct an acquisition function (often also referred to as infill sampling criteria) that determines the next query point.
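
As an illustration of this prior-to-posterior loop, here is a minimal sketch in Python with NumPy, not a reference implementation. It assumes a zero-mean Gaussian-process prior with a squared-exponential kernel whose length scale is fixed by hand (practical implementations usually fit kernel hyperparameters to the data), a one-dimensional search space discretized into a grid, and the upper confidence bound as the acquisition function. The names `run_bayes_opt`, `gp_posterior` and `toy_objective` are hypothetical, and the objective is a cheap stand-in for an expensive black box.

```python
import numpy as np

LENGTH_SCALE = 0.1  # assumed kernel length scale, fixed by hand rather than fitted
JITTER = 1e-6       # small diagonal term for numerical stability (near noise-free data)


def rbf_kernel(a, b):
    """Squared-exponential covariance with unit prior variance, for 1-D inputs."""
    return np.exp(-0.5 * (a[:, None] - b[None, :]) ** 2 / LENGTH_SCALE**2)


def gp_posterior(x_obs, y_obs, x_query):
    """Condition a zero-mean GP prior on the data; return posterior mean and std at x_query."""
    chol = np.linalg.cholesky(rbf_kernel(x_obs, x_obs) + JITTER * np.eye(len(x_obs)))
    k_cross = rbf_kernel(x_obs, x_query)
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, y_obs))
    mean = k_cross.T @ alpha
    v = np.linalg.solve(chol, k_cross)
    var = 1.0 - np.sum(v**2, axis=0)  # prior variance 1 minus the variance explained by data
    return mean, np.sqrt(np.maximum(var, 0.0))


def run_bayes_opt(objective, n_iter=25, kappa=2.0):
    """Sequential loop: update the posterior, maximize UCB on a grid, query the objective."""
    candidates = np.linspace(0.0, 1.0, 501)  # discretized search space [0, 1]
    x_obs = np.array([0.1, 0.5, 0.9])        # small initial design
    y_obs = objective(x_obs)
    for _ in range(n_iter):
        mean, std = gp_posterior(x_obs, y_obs, candidates)  # prior -> posterior
        x_next = candidates[np.argmax(mean + kappa * std)]  # upper confidence bound
        x_obs = np.append(x_obs, x_next)
        y_obs = np.append(y_obs, objective(x_next))         # one expensive evaluation
    return x_obs, y_obs


def toy_objective(x):
    """Hypothetical black box: tall peak near x = 0.7, smaller peak near x = 0.2."""
    return np.exp(-((x - 0.7) ** 2) / 0.02) + 0.5 * np.exp(-((x - 0.2) ** 2) / 0.01)


x_obs, y_obs = run_bayes_opt(toy_objective)
print(x_obs[np.argmax(y_obs)], y_obs.max())
```

Each pass through the loop spends exactly one call to the objective; the posterior and the acquisition function are computed only from the stored observations.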

There are several methods used to define the prior/posterior distribution over the objective function. The most common approach uses Gaussian processes, in a method called kriging. Another, less expensive method uses the Tree-structured Parzen Estimator (TPE) to construct two distributions for 'high' and 'low' points, and then finds the location that maximizes the expected improvement.[18]
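
The Parzen-estimator idea can be sketched in a few lines of Python with NumPy. This is a simplified one-dimensional illustration, not the algorithm of Bergstra et al.: it assumes a fixed quantile `GAMMA` for splitting observations into 'high' and 'low' sets, Gaussian kernels of one fixed `BANDWIDTH`, and that larger objective values are better. In the TPE derivation,[18] maximizing expected improvement reduces to maximizing the density ratio l(x)/g(x), so the sketch draws candidates from l(x) and keeps the one with the largest ratio. All function names and constants are hypothetical.

```python
import numpy as np

GAMMA = 0.25      # assumed fraction of observations labelled "high"
BANDWIDTH = 0.05  # assumed fixed kernel width; real TPE adapts bandwidths


def parzen_density(x, centers):
    """1-D Parzen (kernel density) estimate: mean of Gaussian bumps on the centers."""
    z = (x[:, None] - centers[None, :]) / BANDWIDTH
    return np.exp(-0.5 * z**2).mean(axis=1) / (BANDWIDTH * np.sqrt(2.0 * np.pi))


def tpe_suggest(x_obs, y_obs, rng, n_candidates=64, low=0.0, high=1.0):
    """Propose the next point by maximizing l(x) / g(x), treating larger y as better."""
    threshold = np.quantile(y_obs, 1.0 - GAMMA)
    good = x_obs[y_obs >= threshold]  # 'high' points, modelled by l(x)
    bad = x_obs[y_obs < threshold]    # 'low' points, modelled by g(x)
    if bad.size == 0:                 # degenerate case: all values tied
        return rng.uniform(low, high)
    # Draw candidates from l(x): pick a good point, then perturb it by the bandwidth.
    candidates = rng.choice(good, size=n_candidates) + BANDWIDTH * rng.standard_normal(n_candidates)
    candidates = np.clip(candidates, low, high)
    ratio = parzen_density(candidates, good) / (parzen_density(candidates, bad) + 1e-12)
    return candidates[np.argmax(ratio)]


def toy_objective(x):
    """Hypothetical black box with a tall peak near 0.7 and a smaller one near 0.2."""
    return np.exp(-((x - 0.7) ** 2) / 0.02) + 0.5 * np.exp(-((x - 0.2) ** 2) / 0.01)


rng = np.random.default_rng(0)
x_obs = rng.uniform(0.0, 1.0, size=10)  # random initial design
y_obs = toy_objective(x_obs)
for _ in range(30):
    x_next = tpe_suggest(x_obs, y_obs, rng)
    x_obs = np.append(x_obs, x_next)
    y_obs = np.append(y_obs, toy_objective(x_next))
print(x_obs[np.argmax(y_obs)], y_obs.max())
```

Unlike the Gaussian-process approach, no matrix has to be factorized, so the cost of each suggestion grows only linearly with the number of observations.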

Standard Bayesian optimization relies upon each $x \in A$ being easy to evaluate, and problems that deviate from this assumption are known as exotic Bayesian optimization problems. Optimization problems can become exotic if the evaluations are known to be noisy, are performed in parallel, trade off difficulty against accuracy, depend on random environmental conditions, or involve derivatives.[17]

Acquisition functions

Examples of acquisition functions include

- probability of improvement

- expected improvement

- Bayesian expected losses

- Upper Confidence Bound (UCB) or lower confidence bounds

- Thompson sampling

and hybrids of these.[19] They all trade off exploration and exploitation so as to minimize the number of function queries. As such, Bayesian optimization is well suited for functions that are expensive to evaluate.
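
When the posterior at a candidate point is Gaussian with mean μ(x) and standard deviation σ(x), several of these acquisition functions have simple closed forms. The Python sketch below (NumPy and SciPy) assumes maximization and writes `best` for the best value observed so far; the margin `xi` and weight `kappa` are common conventions, not values fixed by the sources cited here. Thompson sampling is shown with a caller-supplied posterior covariance, and Bayesian expected losses and hybrids are not covered. The function names are illustrative.

```python
import numpy as np
from scipy.stats import norm


def probability_of_improvement(mean, std, best, xi=0.0):
    """PI: P(f(x) > best + xi) when the posterior at x is N(mean, std**2)."""
    std = np.maximum(std, 1e-12)  # guard against a zero posterior std
    return norm.cdf((mean - best - xi) / std)


def expected_improvement(mean, std, best, xi=0.0):
    """EI: E[max(f(x) - best - xi, 0)] under the same Gaussian posterior."""
    std = np.maximum(std, 1e-12)
    improvement = mean - best - xi
    z = improvement / std
    return improvement * norm.cdf(z) + std * norm.pdf(z)


def upper_confidence_bound(mean, std, kappa=2.0):
    """UCB: optimistic score mean + kappa * std; a larger kappa explores more."""
    return mean + kappa * std


def thompson_sampling_choice(mean, cov, rng):
    """Thompson sampling: draw one joint posterior sample, return the index of its maximum."""
    sample = rng.multivariate_normal(mean, cov)
    return int(np.argmax(sample))


# Posterior summaries at three hypothetical candidate points.
mean = np.array([0.2, 0.5, 0.9])
std = np.array([1.0, 0.3, 0.05])
best = 0.8  # best objective value observed so far
print(probability_of_improvement(mean, std, best))
print(expected_improvement(mean, std, best))
print(upper_confidence_bound(mean, std))
# A diagonal covariance ignores correlations between candidates; it is used here only for brevity.
print(thompson_sampling_choice(mean, np.diag(std**2), np.random.default_rng(0)))
```

PI only asks how likely an improvement is and ignores its size, which tends to make it more exploitative than EI; UCB exposes the exploration weight directly through `kappa`.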

Solution methods

The maximum of the acquisition function is typically found by discretizing the search space or by means of an auxiliary optimizer, that is, a numerical optimization technique such as Newton's method or a quasi-Newton method like the Broyden–Fletcher–Goldfarb–Shanno (BFGS) algorithm. Because the acquisition function is cheap to evaluate compared with the objective function, this inner optimization can afford many evaluations.
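
The two strategies are often combined. The Python sketch below first evaluates the acquisition function on random samples of a box-constrained domain (a crude discretization) and then refines the best few with `scipy.optimize.minimize` using `method="L-BFGS-B"`, SciPy's limited-memory, bound-constrained variant of BFGS. Gradients are approximated by finite differences because none are supplied. The seeding heuristic, the sample counts, the function name `maximize_acquisition` and the toy acquisition surface are assumptions for illustration, not part of any cited method.

```python
import numpy as np
from scipy.optimize import minimize


def maximize_acquisition(acquisition, bounds, rng, n_raw=1000, n_starts=5):
    """Discretize with random samples, then refine the best ones with L-BFGS-B."""
    box = np.asarray(bounds, dtype=float)
    lower, upper = box[:, 0], box[:, 1]
    # Step 1: coarse search over random samples of the domain.
    raw = rng.uniform(lower, upper, size=(n_raw, len(lower)))
    raw_values = np.array([acquisition(x) for x in raw])
    starts = raw[np.argsort(raw_values)[-n_starts:]]  # best raw samples seed local searches
    # Step 2: quasi-Newton refinement; SciPy minimizes, so negate the acquisition.
    best_x, best_value = None, -np.inf
    for x0 in starts:
        result = minimize(lambda x: -acquisition(x), x0, method="L-BFGS-B", bounds=bounds)
        if -result.fun > best_value:
            best_x, best_value = result.x, float(-result.fun)
    return best_x, best_value


def toy_acquisition(x):
    """Hypothetical surface on [0, 1]^2: global peak near (0.3, 0.6), local peak near (0.8, 0.2)."""
    return (np.exp(-np.sum((x - np.array([0.3, 0.6])) ** 2) / 0.1)
            + 0.5 * np.exp(-np.sum((x - np.array([0.8, 0.2])) ** 2) / 0.1))


rng = np.random.default_rng(0)
x_best, value = maximize_acquisition(toy_acquisition, [(0.0, 1.0), (0.0, 1.0)], rng)
print(x_best, value)
```

Seeding the local searches from the best discretized points makes it less likely that the gradient-based step stalls in the smaller local peak.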

Applications

The approach has been applied to solve a wide range of problems,[20] including learning to rank,[21] computer graphics and visual design,[22][23][24] robotics,[25][26][27][28] sensor networks,[29][30] automatic algorithm configuration,[31][32] automatic machine learning toolboxes,[33][34][35] reinforcement learning,[36] planning, visual attention, architecture configuration in deep learning, static program analysis, experimental particle physics,[37][38] quality-diversity optimization,[39][40][41] chemistry, material design, and drug development.[17][42][43][44]

Bayesian optimization has been applied in the field of facial recognition.[45] The performance of the Histogram of Oriented Gradients (HOG) algorithm, a popular feature extraction method, heavily relies on its parameter settings. Optimizing these parameters can be challenging but crucial for achieving high accuracy.[45] A novel approach to optimize the HOG algorithm parameters and image size for facial recognition using a Tree-structured Parzen Estimator (TPE) based Bayesian optimization technique has been proposed.[45] This optimized approach has the potential to be adapted for other computer vision applications and contributes to the ongoing development of hand-crafted parameter-based feature extraction algorithms in computer vision.[45]

See also

- Multi-armed bandit

- Kriging

- Thompson sampling

- Global optimization

- Bayesian experimental design

- Probabilistic numerics

- Pareto optimum

- Active learning (machine learning)

- Multi-objective optimization

References

- ↑ 1.0 1.1 Močkus, J. (1989). Bayesian Approach to Global Optimization. Dordrecht: Kluwer Academic. ISBN 0-7923-0115-3.

- ↑ Garnett, Roman (2023). Bayesian Optimization. Cambridge University Press. ISBN 978-1-108-42578-0. https://bayesoptbook.com.

- ↑ Hennig, P.; Osborne, M. A.; Kersting, H. P. (2022). Probabilistic Numerics. Cambridge University Press. pp. 243–278. ISBN 978-1107163447. https://www.probabilistic-numerics.org/assets/ProbabilisticNumerics.pdf.

- ↑ Snoek, Jasper (2012). "Practical Bayesian Optimization of Machine Learning Algorithms". Advances in Neural Information Processing Systems 25 (NIPS 2012) 25. https://proceedings.neurips.cc/paper/2012/hash/05311655a15b75fab86956663e1819cd-Abstract.html.

- ↑ Klein, Aaron (2017). "Fast bayesian optimization of machine learning hyperparameters on large datasets". Proceedings of the 20th International Conference on Artificial Intelligence and Statistics, PMLR: 528–536. https://proceedings.mlr.press/v54/klein17a.html.

- ↑ Močkus, Jonas (1975). "On bayesian methods for seeking the extremum". Optimization Techniques IFIP Technical Conference Novosibirsk, July 1–7, 1974. Lecture Notes in Computer Science. 27. pp. 400–404. doi:10.1007/3-540-07165-2_55. ISBN 978-3-540-07165-5.

- ↑ Močkus, Jonas (1977). "On Bayesian Methods for Seeking the Extremum and their Application". IFIP Congress: 195–200.

- ↑ Garnett, Roman (2023). Bayesian Optimization (1st ed.). Cambridge University Press. ISBN 978-1-108-42578-0.

- ↑ "Kushner, Harold". https://vivo.brown.edu/display/hkushner.

- ↑ "Jonas Mockus". https://en.ktu.edu/people/jonas-mockus/.

- ↑ Kaufmann, Emilie; Cappe, Olivier; Garivier, Aurelien (2012-03-21). "On Bayesian Upper Confidence Bounds for Bandit Problems". Proceedings of the Fifteenth International Conference on Artificial Intelligence and Statistics (PMLR): 592–600. https://proceedings.mlr.press/v22/kaufmann12.html.

- ↑ "Donald R. Jones". https://scholar.google.com/citations?user=CZhZ4MYAAAAJ&hl=en.

- ↑ Jones, Donald R.; Schonlau, Matthias; Welch, William J. (1998). "Efficient Global Optimization of Expensive Black-Box Functions". Journal of Global Optimization 13 (4): 455–492. doi:10.1023/A:1008306431147. https://link.springer.com/article/10.1023/A:1008306431147.

- ↑ Joy, T. T.; Rana, S.; Gupta, S.; Venkatesh, S. (2016). "Hyperparameter tuning for big data using Bayesian optimisation". 2016 23rd International Conference on Pattern Recognition (ICPR), Cancun, Mexico. pp. 2574–2579. doi:10.1109/ICPR.2016.7900023.

- ↑ Mackay, D. J. C. (1998). "Introduction to Gaussian processes". in Bishop, C. M.. Neural Networks and Machine Learning. NATO ASI Series. 168. pp. 133–165. https://citeseerx.ist.psu.edu/document?repid=rep1&type=pdf&doi=e045b76dc5daf9f4656ac10b456c5d1d9de5bc84. Retrieved 2025-03-06.

- ↑ Wilson, Samuel (2019-11-22), ParBayesianOptimization R package, https://github.com/AnotherSamWilson/ParBayesianOptimization, retrieved 2019-12-12

- ↑ 17.0 17.1 17.2 Frazier, Peter I. (2018-07-08). "A Tutorial on Bayesian Optimization". arXiv:1807.02811 [stat.ML].

- ↑ J. S. Bergstra, R. Bardenet, Y. Bengio, B. Kégl: Algorithms for Hyper-Parameter Optimization. Advances in Neural Information Processing Systems: 2546–2554 (2011)

- ↑ Matthew W. Hoffman, Eric Brochu, Nando de Freitas: Portfolio Allocation for Bayesian Optimization. Uncertainty in Artificial Intelligence: 327–336 (2011)

- ↑ Eric Brochu, Vlad M. Cora, Nando de Freitas: A Tutorial on Bayesian Optimization of Expensive Cost Functions, with Application to Active User Modeling and Hierarchical Reinforcement Learning. CoRR abs/1012.2599 (2010)

- ↑ Eric Brochu, Nando de Freitas, Abhijeet Ghosh: Active Preference Learning with Discrete Choice Data. Advances in Neural Information Processing Systems: 409-416 (2007)

- ↑ Eric Brochu, Tyson Brochu, Nando de Freitas: A Bayesian Interactive Optimization Approach to Procedural Animation Design. Symposium on Computer Animation 2010: 103–112

- ↑ Yuki Koyama, Issei Sato, Daisuke Sakamoto, Takeo Igarashi: Sequential Line Search for Efficient Visual Design Optimization by Crowds. ACM Transactions on Graphics, Volume 36, Issue 4, pp.48:1–48:11 (2017). DOI: https://doi.org/10.1145/3072959.3073598

- ↑ Yuki Koyama, Issei Sato, Masataka Goto: Sequential Gallery for Interactive Visual Design Optimization. ACM Transactions on Graphics, Volume 39, Issue 4, pp.88:1–88:12 (2020). DOI: https://doi.org/10.1145/3386569.3392444

- ↑ Daniel J. Lizotte, Tao Wang, Michael H. Bowling, Dale Schuurmans: Automatic Gait Optimization with Gaussian Process Regression . International Joint Conference on Artificial Intelligence: 944–949 (2007)

- ↑ Ruben Martinez-Cantin, Nando de Freitas, Eric Brochu, Jose Castellanos and Arnaud Doucet. A Bayesian exploration-exploitation approach for optimal online sensing and planning with a visually guided mobile robot. Autonomous Robots. Volume 27, Issue 2, pp 93–103 (2009)

- ↑ Scott Kuindersma, Roderic Grupen, and Andrew Barto. Variable Risk Control via Stochastic Optimization. International Journal of Robotics Research, volume 32, number 7, pp 806–825 (2013)

- ↑ Roberto Calandra, André Seyfarth, Jan Peters, and Marc P. Deisenroth Bayesian optimization for learning gaits under uncertainty. Ann. Math. Artif. Intell. Volume 76, Issue 1, pp 5-23 (2016) DOI:10.1007/s10472-015-9463-9

- ↑ Niranjan Srinivas, Andreas Krause, Sham M. Kakade, Matthias W. Seeger: Information-Theoretic Regret Bounds for Gaussian Process Optimization in the Bandit Setting. IEEE Transactions on Information Theory 58(5):3250–3265 (2012)

- ↑ Garnett, Roman; Osborne, Michael A.; Roberts, Stephen J. (2010). "Proceedings of the 9th International Conference on Information Processing in Sensor Networks, IPSN 2010, April 12–16, 2010, Stockholm, Sweden". in Abdelzaher, Tarek F.; Voigt, Thiemo; Wolisz, Adam. ACM. pp. 209–219. doi:10.1145/1791212.1791238. ISBN 978-1-60558-988-6.

- ↑ Frank Hutter, Holger Hoos, and Kevin Leyton-Brown (2011). Sequential model-based optimization for general algorithm configuration, Learning and Intelligent Optimization

- ↑ J. Snoek, H. Larochelle, R. P. Adams Practical Bayesian Optimization of Machine Learning Algorithms. Advances in Neural Information Processing Systems: 2951-2959 (2012)

- ↑ J. Bergstra, D. Yamins, D. D. Cox (2013). Hyperopt: A Python Library for Optimizing the Hyperparameters of Machine Learning Algorithms. Proc. SciPy 2013.

- ↑ Chris Thornton, Frank Hutter, Holger H. Hoos, Kevin Leyton-Brown: Auto-WEKA: combined selection and hyperparameter optimization of classification algorithms. KDD 2013: 847–855

- ↑ Jasper Snoek, Hugo Larochelle and Ryan Prescott Adams. Practical Bayesian Optimization of Machine Learning Algorithms. Advances in Neural Information Processing Systems, 2012

- ↑ Berkenkamp, Felix (2019). Safe Exploration in Reinforcement Learning: Theory and Applications in Robotics (Doctoral Thesis thesis). ETH Zurich. doi:10.3929/ethz-b-000370833. hdl:20.500.11850/370833.

- ↑ Philip Ilten, Mike Williams, Yunjie Yang. Event generator tuning using Bayesian optimization. 2017 JINST 12 P04028. DOI: 10.1088/1748-0221/12/04/P04028

- ↑ Evaristo Cisbani et al. AI-optimized detector design for the future Electron-Ion Collider: the dual-radiator RICH case 2020 JINST 15 P05009. DOI: 10.1088/1748-0221/15/05/P05009

- ↑ Kent, Paul; Gaier, Adam; Mouret, Jean-Baptiste; Branke, Juergen (2023-07-19). "BOP-Elites, a Bayesian Optimisation Approach to Quality Diversity Search with Black-Box descriptor functions". arXiv:2307.09326 [math.OC].

- ↑ Kent, Paul; Branke, Juergen (2023-07-12). "Bayesian Quality Diversity Search with Interactive Illumination". Proceedings of the Genetic and Evolutionary Computation Conference. GECCO '23. New York, NY, USA: Association for Computing Machinery. pp. 1019–1026. doi:10.1145/3583131.3590486. ISBN 979-8-4007-0119-1. https://dl.acm.org/doi/10.1145/3583131.3590486.

- ↑ Gaier, Adam; Asteroth, Alexander; Mouret, Jean-Baptiste (2018-09-01). "Data-Efficient Design Exploration through Surrogate-Assisted Illumination". Evolutionary Computation 26 (3): 381–410. doi:10.1162/evco_a_00231. ISSN 1063-6560. PMID 29883202.

- ↑ Gomez-Bombarelli et al. Automatic Chemical Design using a Data-Driven Continuous Representation of Molecules. ACS Central Science, Volume 4, Issue 2, 268-276 (2018)

- ↑ Griffiths et al. Constrained Bayesian Optimization for Automatic Chemical Design using Variational Autoencoders Chemical Science: 11, 577-586 (2020)

- ↑ Serles et al. Ultrahigh Specific Strength by Bayesian Optimization of Carbon Nanolattices Advanced Materials, 37 (14) 2410651 (2025)

- ↑ 45.0 45.1 45.2 45.3 Mohammed Mehdi Bouchene: Bayesian Optimization of Histogram of Oriented Gradients (Hog) Parameters for Facial Recognition. SSRN (2023)
