Chebyshev polynomials

From HandWiki - Reading time: 29 min

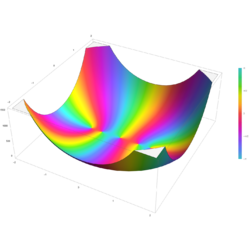

The Chebyshev polynomials are two sequences of polynomials related to the cosine and sine functions, notated as Tn(x) and Un(x).

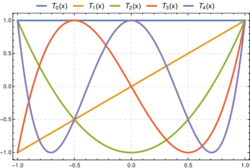

The Chebyshev polynomials of the first kind Tn are defined by:

$$T_n(\cos\theta) = \cos(n\theta).$$

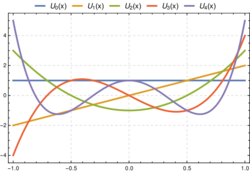

Similarly, the Chebyshev polynomials of the second kind Un are defined by:

$$U_n(\cos\theta)\,\sin\theta = \sin\big((n+1)\theta\big).$$

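That these expressions define polynomials in cos θ may not be obvious at first sight, but it follows from de Moivre's formula, as discussed under the trigonometric definition.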

An important and convenient property of the Tn(x) is that they are orthogonal with respect to the inner product:

$$\langle f, g\rangle = \int_{-1}^{1} f(x)\,g(x)\,\frac{dx}{\sqrt{1-x^2}},$$

and Un(x) are orthogonal with respect to another, analogous inner product, given below.

The Chebyshev polynomials Tn are polynomials with the largest possible leading coefficient whose absolute value on the interval [−1, 1] is bounded by 1. They are also the "extremal" polynomials for many other properties.[1]

In 1952, Cornelius Lanczos showed that the Chebyshev polynomials are important in approximation theory for the solution of linear systems;[2] the roots of Tn(x), which are also called Chebyshev nodes, are used as matching points for optimizing polynomial interpolation. The resulting interpolation polynomial minimizes the problem of Runge's phenomenon and provides an approximation that is close to the best polynomial approximation to a continuous function under the maximum norm, also called the "minimax" criterion. This approximation leads directly to the method of Clenshaw–Curtis quadrature.

These polynomials were named after Pafnuty Chebyshev.[3] The letter T is used because of the alternative transliterations of the name Chebyshev as Tchebycheff, Tchebyshev (French) or Tschebyschow (German).

Definitions

Recurrence definition

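The Chebyshev polynomials of the first kind are obtained from the recurrence relation:

$$\begin{aligned} T_0(x) &= 1, \\ T_1(x) &= x, \\ T_{n+1}(x) &= 2x\,T_n(x) - T_{n-1}(x). \end{aligned}$$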

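The recurrence also allows them to be represented explicitly as the determinant of a tridiagonal matrix of size k × k:

$$T_k(x) = \det\begin{bmatrix} x & 1 & 0 & \cdots & 0 \\ 1 & 2x & 1 & \ddots & \vdots \\ 0 & 1 & 2x & \ddots & 0 \\ \vdots & \ddots & \ddots & \ddots & 1 \\ 0 & \cdots & 0 & 1 & 2x \end{bmatrix}.$$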

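The ordinary generating function for Tn is:

$$\sum_{n=0}^{\infty} T_n(x)\,t^n = \frac{1 - tx}{1 - 2tx + t^2}.$$

There are several other generating functions for the Chebyshev polynomials; the exponential generating function is:

$$\sum_{n=0}^{\infty} T_n(x)\,\frac{t^n}{n!} = \tfrac12\left(e^{t\left(x - \sqrt{x^2-1}\right)} + e^{t\left(x + \sqrt{x^2-1}\right)}\right) = e^{tx}\cosh\!\left(t\sqrt{x^2-1}\right).$$

The generating function relevant for 2-dimensional potential theory and multipole expansion is:

$$\sum_{n=1}^{\infty} T_n(x)\,\frac{t^n}{n} = \ln\!\left(\frac{1}{\sqrt{1 - 2tx + t^2}}\right).$$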

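The Chebyshev polynomials of the second kind are defined by the recurrence relation:

$$\begin{aligned} U_0(x) &= 1, \\ U_1(x) &= 2x, \\ U_{n+1}(x) &= 2x\,U_n(x) - U_{n-1}(x). \end{aligned}$$

Notice that the two sets of recurrence relations are identical, except for T1(x) = x versus U1(x) = 2x. The ordinary generating function for Un is:

$$\sum_{n=0}^{\infty} U_n(x)\,t^n = \frac{1}{1 - 2tx + t^2},$$

and the exponential generating function is:

$$\sum_{n=0}^{\infty} U_n(x)\,\frac{t^n}{n!} = e^{tx}\left(\cosh\!\left(t\sqrt{x^2-1}\right) + \frac{x}{\sqrt{x^2-1}}\,\sinh\!\left(t\sqrt{x^2-1}\right)\right).$$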
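The three-term recurrences translate directly into a short evaluation loop. The following is a minimal sketch, assuming the standard starting values T0 = 1, T1 = x and U0 = 1, U1 = 2x; the function names `chebyshev_t` and `chebyshev_u` are illustrative and not taken from any library.

```python
import math


def chebyshev_t(n: int, x: float) -> float:
    """Evaluate T_n(x) with the recurrence T_{k+1} = 2x T_k - T_{k-1}."""
    if n == 0:
        return 1.0
    prev, curr = 1.0, x  # T_0, T_1
    for _ in range(n - 1):
        prev, curr = curr, 2.0 * x * curr - prev
    return curr


def chebyshev_u(n: int, x: float) -> float:
    """Evaluate U_n(x); same recurrence, but starting from U_1(x) = 2x."""
    if n == 0:
        return 1.0
    prev, curr = 1.0, 2.0 * x  # U_0, U_1
    for _ in range(n - 1):
        prev, curr = curr, 2.0 * x * curr - prev
    return curr
```

Inside [−1, 1] the results can be cross-checked against cos(n arccos x); outside that interval the recurrence still applies, for instance `chebyshev_t(3, 2.0)` evaluates 4·2³ − 3·2.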

Trigonometric definition

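As described in the introduction, the Chebyshev polynomials of the first kind can be defined as the unique polynomials satisfying:

$$T_n(x) = \begin{cases} \cos(n\arccos x) & \text{if } |x| \le 1, \\ \cosh(n\operatorname{arcosh} x) & \text{if } x \ge 1, \\ (-1)^n\cosh\big(n\operatorname{arcosh}(-x)\big) & \text{if } x \le -1, \end{cases}$$

or, in other words, as the unique polynomials satisfying:

$$T_n(\cos\theta) = \cos(n\theta)$$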

for n = 0, 1, 2, 3, ….

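The polynomials of the second kind satisfy:

$$U_{n-1}(\cos\theta)\,\sin\theta = \sin(n\theta),$$

or

$$U_n(\cos\theta) = \frac{\sin\big((n+1)\theta\big)}{\sin\theta},$$

which is structurally quite similar to the Dirichlet kernel Dn(x):

$$D_n(x) = \frac{\sin\big((2n+1)\tfrac{x}{2}\big)}{\sin\tfrac{x}{2}} = U_{2n}\!\left(\cos\tfrac{x}{2}\right).$$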

(The Dirichlet kernel, in fact, coincides with what is now known as the Chebyshev polynomial of the fourth kind.)

An equivalent way to state this is via exponentiation of a complex number: given a complex number z = a + bi with absolute value of one:

$$z^n = T_n(a) + i\,b\,U_{n-1}(a).$$

Chebyshev polynomials can be defined in this form when studying trigonometric polynomials.[4]

That cos nx is an nth-degree polynomial in cos x can be seen by observing that cos nx is the real part of one side of de Moivre's formula:

$$\cos nx + i\,\sin nx = (\cos x + i\,\sin x)^n.$$

The real part of the other side is a polynomial in cos x and sin x, in which all powers of sin x are even and thus replaceable through the identity cos² x + sin² x = 1. By the same reasoning, sin nx is the imaginary part of the polynomial, in which all powers of sin x are odd and thus, if one factor of sin x is factored out, the remaining factors can be replaced to create an (n − 1)st-degree polynomial in cos x.
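As a small worked instance of this argument, consider n = 3, writing c = cos x and s = sin x (shorthand introduced here only for this illustration):

$$\begin{aligned} (c + i s)^3 &= c^3 + 3i\,c^2 s - 3\,c\,s^2 - i\,s^3, \\ \operatorname{Re}: \quad c^3 - 3c\,s^2 &= c^3 - 3c\,(1 - c^2) = 4c^3 - 3c, \\ \operatorname{Im}: \quad 3c^2 s - s^3 &= s\,\big(3c^2 - (1 - c^2)\big) = s\,(4c^2 - 1). \end{aligned}$$

Hence cos 3x = 4 cos³ x − 3 cos x and sin 3x = sin x (4 cos² x − 1), which corresponds to T3(t) = 4t³ − 3t and U2(t) = 4t² − 1.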

Commuting polynomials definition

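Chebyshev polynomials can also be characterized by the following theorem:[5]

If F = {fn(x)} is a family of monic polynomials with coefficients in a field of characteristic 0 such that deg fn = n and fm(fn(x)) = fn(fm(x)) for all m and n, then, up to a simple change of variables, either fn(x) = x^n for all n or fn(x) = 2·Tn(x/2) for all n.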

Pell equation definition

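The Chebyshev polynomials can also be defined as the solutions to the Pell equation:

$$T_n(x)^2 - \left(x^2 - 1\right) U_{n-1}(x)^2 = 1$$

in a ring R[x].[6] Thus, they can be generated by the standard technique for Pell equations of taking powers of a fundamental solution:

$$T_n(x) + U_{n-1}(x)\,\sqrt{x^2-1} = \left(x + \sqrt{x^2-1}\right)^n.$$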
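The Pell identity Tn(x)² − (x² − 1)·Un−1(x)² = 1 can be checked with exact integer polynomial arithmetic. The sketch below stores a polynomial as a list of integer coefficients, lowest degree first; the helper names (`poly_mul`, `poly_sub`, `cheb_coeffs`, `pell_residual`) are hypothetical and chosen only for this illustration.

```python
def poly_mul(p, q):
    """Multiply two coefficient lists (lowest degree first)."""
    out = [0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out


def poly_sub(p, q):
    """Subtract q from p, padding the shorter list with zeros."""
    n = max(len(p), len(q))
    p = p + [0] * (n - len(p))
    q = q + [0] * (n - len(q))
    return [a - b for a, b in zip(p, q)]


def cheb_coeffs(n, first_kind=True):
    """Coefficients of T_n (or U_n) built from the three-term recurrence."""
    prev = [1]                                # T_0 = U_0 = 1
    curr = [0, 1] if first_kind else [0, 2]   # T_1 = x, U_1 = 2x
    if n == 0:
        return prev
    for _ in range(n - 1):
        # next = 2x * curr - prev
        nxt = poly_sub([0] + [2 * c for c in curr], prev)
        prev, curr = curr, nxt
    return curr


def pell_residual(n):
    """Coefficients of T_n^2 - (x^2 - 1) U_{n-1}^2 for n >= 1."""
    t = cheb_coeffs(n)
    u = cheb_coeffs(n - 1, first_kind=False)
    return poly_sub(poly_mul(t, t), poly_mul([-1, 0, 1], poly_mul(u, u)))
```

For each n ≥ 1, `pell_residual(n)` should come out as the constant polynomial 1, that is, a leading 1 followed by zeros.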

Relations between the two kinds of Chebyshev polynomials

The Chebyshev polynomials of the first and second kinds correspond to a complementary pair of Lucas sequences Ṽn(P, Q) and Ũn(P, Q) with parameters P = 2x and Q = 1:

$$\tilde U_n(2x, 1) = U_{n-1}(x), \qquad \tilde V_n(2x, 1) = 2\,T_n(x).$$

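It follows that they also satisfy a pair of mutual recurrence equations:[7]

$$\begin{aligned} T_{n+1}(x) &= x\,T_n(x) - \left(1 - x^2\right) U_{n-1}(x), \\ U_{n+1}(x) &= x\,U_n(x) + T_{n+1}(x). \end{aligned}$$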

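The second of these may be rearranged using the recurrence definition for the Chebyshev polynomials of the second kind to give:

$$T_n(x) = \tfrac12\big(U_n(x) - U_{n-2}(x)\big).$$

Using this formula iteratively gives the sum formula:

$$U_n(x) = \begin{cases} 2\displaystyle\sum_{\text{odd } j>0}^{n} T_j(x) & \text{for odd } n, \\ 2\displaystyle\sum_{\text{even } j\ge 0}^{n} T_j(x) - 1 & \text{for even } n, \end{cases}$$

while replacing Un(x) and Un−2(x) using the derivative formula for Tn(x) gives the recurrence relationship for the derivative of Tn:

$$2\,T_n(x) = \frac{1}{n+1}\,\frac{d}{dx}T_{n+1}(x) - \frac{1}{n-1}\,\frac{d}{dx}T_{n-1}(x), \qquad n = 2, 3, \ldots$$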

This relationship is used in the Chebyshev spectral method of solving differential equations.

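Turán's inequalities for the Chebyshev polynomials are:[8]

$$\begin{aligned} T_n(x)^2 - T_{n-1}(x)\,T_{n+1}(x) &= 1 - x^2 > 0 \quad \text{for } -1 < x < 1, \\ U_n(x)^2 - U_{n-1}(x)\,U_{n+1}(x) &= 1 > 0. \end{aligned}$$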

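The integral relations are[7]: 187 (47)(48) [9]

$$\begin{aligned} \int_{-1}^{1} \frac{T_n(y)}{(y - x)\,\sqrt{1-y^2}}\,dy &= \pi\,U_{n-1}(x), \\ \int_{-1}^{1} \frac{\sqrt{1-y^2}\;U_{n-1}(y)}{y - x}\,dy &= -\pi\,T_n(x), \end{aligned}$$

where the integrals are taken as principal values.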

Explicit expressions

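Different approaches to defining Chebyshev polynomials lead to different explicit expressions. The trigonometric definition gives an explicit formula as follows:

$$T_n(x) = \begin{cases} \cos(n\arccos x) & \text{for } -1 \le x \le 1, \\ \cosh(n\operatorname{arcosh} x) & \text{for } 1 \le x, \\ (-1)^n\cosh\big(n\operatorname{arcosh}(-x)\big) & \text{for } x \le -1. \end{cases}$$

From this trigonometric form, the recurrence definition can be recovered by computing directly that the base cases hold:

$$T_0(\cos\theta) = \cos(0\,\theta) = 1$$

and

$$T_1(\cos\theta) = \cos\theta,$$

and that the product-to-sum identity holds:

$$2\cos n\theta\,\cos\theta = \cos\big((n+1)\theta\big) + \cos\big((n-1)\theta\big).$$

Using the complex number exponentiation definition of the Chebyshev polynomial, one can derive the following expression:

$$T_n(x) = \tfrac12\left(\left(x - \sqrt{x^2-1}\right)^n + \left(x + \sqrt{x^2-1}\right)^n\right) \qquad \text{for } |x| \ge 1.$$

The two are equivalent because

$$\left(x + \sqrt{x^2-1}\right)\left(x - \sqrt{x^2-1}\right) = 1.$$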

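An explicit form of the Chebyshev polynomial in terms of monomials x^k follows from de Moivre's formula:

$$T_n(\cos\theta) = \operatorname{Re}(\cos n\theta + i\,\sin n\theta) = \operatorname{Re}\big((\cos\theta + i\,\sin\theta)^n\big),$$

where Re denotes the real part of a complex number. Expanding the formula, one gets:

$$(\cos\theta + i\,\sin\theta)^n = \sum_{j=0}^{n} \binom{n}{j}\, i^j \sin^j\theta\,\cos^{n-j}\theta.$$

The real part of the expression is obtained from summands corresponding to even indices. Noting that i^{2j} = (−1)^j and sin^{2j} θ = (1 − cos² θ)^j, one gets the explicit formula:

$$\cos n\theta = \sum_{j=0}^{\lfloor n/2\rfloor} \binom{n}{2j} \left(\cos^2\theta - 1\right)^j \cos^{n-2j}\theta,$$

which in turn means that:

$$T_n(x) = \sum_{j=0}^{\lfloor n/2\rfloor} \binom{n}{2j} \left(x^2 - 1\right)^j x^{n-2j}.$$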

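This can be written as a 2F1 hypergeometric function:

$$T_n(x) = {}_2F_1\!\left(-n,\, n;\, \tfrac12;\, \tfrac12(1 - x)\right),$$

with inverse:

$$x^n = 2^{1-n} \mathop{{\sum}'}_{\substack{j=0 \\ j \,\equiv\, n \pmod 2}}^{n} \binom{n}{\tfrac{n-j}{2}}\, T_j(x),$$

where the prime at the summation symbol indicates that the contribution of j = 0 needs to be halved if it appears.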

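A related expression for Tn (n ≥ 1) as a sum of monomials with binomial coefficients and powers of two is

$$T_n(x) = \sum_{m=0}^{\lfloor n/2\rfloor} (-1)^m \left(\binom{n-m}{m} + \binom{n-m-1}{n-2m}\right) 2^{\,n-2m-1}\, x^{\,n-2m}.$$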

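Similarly, Un can be expressed in terms of hypergeometric functions:

$$U_n(x) = \frac{\left(x + \sqrt{x^2-1}\right)^{n+1} - \left(x - \sqrt{x^2-1}\right)^{n+1}}{2\sqrt{x^2-1}} = (n+1)\;{}_2F_1\!\left(-n,\, n+2;\, \tfrac32;\, \tfrac12(1 - x)\right).$$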

Properties

Symmetry

$$T_n(-x) = (-1)^n\,T_n(x), \qquad U_n(-x) = (-1)^n\,U_n(x).$$

That is, Chebyshev polynomials of even order have even symmetry and therefore contain only even powers of x. Chebyshev polynomials of odd order have odd symmetry and therefore contain only odd powers of x.

Roots and extrema

A Chebyshev polynomial of either kind with degree n has n different simple roots, called Chebyshev roots, in the interval [−1, 1]. The roots of the Chebyshev polynomial of the first kind are sometimes called Chebyshev nodes because they are used as nodes in polynomial interpolation. Using the trigonometric definition and the fact that:

$$\cos\!\left((2k+1)\,\frac{\pi}{2}\right) = 0$$

one can show that the roots of Tn are:

$$x_k = \cos\!\left(\frac{\pi\,(k + 1/2)}{n}\right), \qquad k = 0, \ldots, n-1.$$

Similarly, the roots of Un are:

$$x_k = \cos\!\left(\frac{k\,\pi}{n+1}\right), \qquad k = 1, \ldots, n.$$

The extrema of Tn on the interval −1 ≤ x ≤ 1 are located at:

$$x_k = \cos\!\left(\frac{k\,\pi}{n}\right), \qquad k = 0, \ldots, n.$$

One unique property of the Chebyshev polynomials of the first kind is that on the interval −1 ≤ x ≤ 1 all of the extrema have values that are either −1 or 1. Thus these polynomials have only two finite critical values, the defining property of Shabat polynomials. Both the first and second kinds of Chebyshev polynomial have extrema at the endpoints, given by:

$$\begin{aligned} T_n(1) &= 1, & T_n(-1) &= (-1)^n, \\ U_n(1) &= n + 1, & U_n(-1) &= (-1)^n\,(n+1). \end{aligned}$$
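The root and extremum locations are easy to tabulate numerically. The following sketch assumes the standard closed forms cos(π(k + 1/2)/n) for the roots of Tn and cos(πk/n) for its extrema; the function names are illustrative only.

```python
import math


def chebyshev_roots(n: int) -> list:
    """Roots of T_n: x_k = cos(pi (k + 1/2) / n), k = 0..n-1."""
    return [math.cos(math.pi * (k + 0.5) / n) for k in range(n)]


def chebyshev_extrema(n: int) -> list:
    """Extrema of T_n on [-1, 1]: x_k = cos(pi k / n), k = 0..n."""
    return [math.cos(math.pi * k / n) for k in range(n + 1)]


def t_trig(n: int, x: float) -> float:
    """T_n(x) for |x| <= 1 via cos(n arccos x); x is clamped against rounding."""
    return math.cos(n * math.acos(max(-1.0, min(1.0, x))))


n = 6
root_values = [t_trig(n, x) for x in chebyshev_roots(n)]        # all close to 0
extreme_values = [t_trig(n, x) for x in chebyshev_extrema(n)]   # alternate +1, -1
```

The values in `extreme_values` alternate in sign starting from +1 at x = 1, which is the equioscillation behaviour used in the minimax arguments elsewhere in the article.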

The extrema of

Specifically,[12][13] when

When

This result has been generalized to solutions of

Differentiation and integration

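The derivatives of the polynomials can be less than straightforward. By differentiating the polynomials in their trigonometric forms, it can be shown that:

$$\begin{aligned} \frac{dT_n}{dx} &= n\,U_{n-1}, \\ \frac{dU_n}{dx} &= \frac{(n+1)\,T_{n+1} - x\,U_n}{x^2 - 1}, \\ \frac{d^2T_n}{dx^2} &= n\,\frac{n\,T_n - x\,U_{n-1}}{x^2 - 1} = n\,\frac{(n+1)\,T_n - U_n}{x^2 - 1}. \end{aligned}$$

The last two formulas can be numerically troublesome due to the division by zero (0/0 indeterminate form, specifically) at x = 1 and x = −1. By L'Hôpital's rule:

$$\left.\frac{d^2T_n}{dx^2}\right|_{x=1} = \frac{n^4 - n^2}{3}, \qquad \left.\frac{d^2T_n}{dx^2}\right|_{x=-1} = (-1)^n\,\frac{n^4 - n^2}{3}.$$

More generally,

$$\left.\frac{d^pT_n}{dx^p}\right|_{x=\pm 1} = (\pm 1)^{n+p} \prod_{k=0}^{p-1} \frac{n^2 - k^2}{2k + 1},$$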

which is of great use in the numerical solution of eigenvalue problems.
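A quick numerical sanity check of two of these facts, the identity dTn/dx = n·Un−1 and the endpoint value (n⁴ − n²)/3 of the second derivative at x = 1, can be sketched as follows. The helper names are hypothetical, and the recurrence-based evaluator is an assumption of this example rather than a prescribed method.

```python
def _recur(n: int, x: float, second_kind: bool) -> float:
    """Shared three-term recurrence for T_n (second_kind=False) or U_n."""
    if n == 0:
        return 1.0
    prev, curr = 1.0, (2.0 * x if second_kind else x)
    for _ in range(n - 1):
        prev, curr = curr, 2.0 * x * curr - prev
    return curr


def cheb_t(n: int, x: float) -> float:
    return _recur(n, x, second_kind=False)


def cheb_u(n: int, x: float) -> float:
    return _recur(n, x, second_kind=True)


def dcheb_t(n: int, x: float) -> float:
    """First derivative of T_n via T_n'(x) = n U_{n-1}(x)."""
    return 0.0 if n == 0 else n * cheb_u(n - 1, x)


def d2cheb_t_at_one(n: int) -> float:
    """Second derivative of T_n at x = 1, from the L'Hopital limit."""
    return (n ** 4 - n ** 2) / 3
```

For example, T4(x) = 8x⁴ − 8x² + 1 has second derivative 96x² − 16, which equals 80 at x = 1, in agreement with `d2cheb_t_at_one(4)`.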

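Also, we have:

$$\frac{d^p}{dx^p}\,T_n(x) = 2^p\,n \mathop{{\sum}'}_{\substack{0 \le k \le n-p \\ k \,\equiv\, n-p \pmod 2}} \binom{\frac{n+p-k}{2} - 1}{\frac{n-p-k}{2}} \frac{\left(\frac{n+p+k}{2} - 1\right)!}{\left(\frac{n-p+k}{2}\right)!}\; T_k(x), \qquad p \ge 1,$$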

where the prime at the summation symbols means that the term contributed by k = 0 is to be halved, if it appears.

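Concerning integration, the first derivative of the Tn implies that:

$$\int U_n\,dx = \frac{T_{n+1}}{n+1},$$

and the recurrence relation for the first kind polynomials involving derivatives establishes that for n ≥ 2:

$$\int T_n\,dx = \frac12\left(\frac{T_{n+1}}{n+1} - \frac{T_{n-1}}{n-1}\right) = \frac{n\,T_{n+1}}{n^2 - 1} - \frac{x\,T_n}{n - 1}.$$

The last formula can be further manipulated to express the integral of Tn as a function of Chebyshev polynomials of the first kind only:

$$\begin{aligned} \int T_n\,dx &= \frac{n}{n^2-1}\,T_{n+1} - \frac{1}{n-1}\,T_1\,T_n \\ &= \frac{n}{n^2-1}\,T_{n+1} - \frac{1}{2(n-1)}\,(T_{n+1} + T_{n-1}) \\ &= \frac{1}{2(n+1)}\,T_{n+1} - \frac{1}{2(n-1)}\,T_{n-1}. \end{aligned}$$

Furthermore, we have:

$$\int_{-1}^{1} T_n(x)\,dx = \begin{cases} \dfrac{(-1)^n + 1}{1 - n^2} & \text{if } n \ne 1, \\ 0 & \text{if } n = 1. \end{cases}$$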

Products of Chebyshev polynomials

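The Chebyshev polynomials of the first kind satisfy the relation:

$$T_m(x)\,T_n(x) = \tfrac12\big(T_{m+n}(x) + T_{|m-n|}(x)\big), \qquad \forall\, m, n \ge 0,$$

which is easily proved from the product-to-sum formula for the cosine:

$$2\cos\alpha\,\cos\beta = \cos(\alpha + \beta) + \cos(\alpha - \beta).$$

For n = 1 this results in the already known recurrence formula, just arranged differently, and with n = 2 it forms the recurrence relation for all even or all odd indexed Chebyshev polynomials (depending on the parity of the lowest m) which implies the evenness or oddness of these polynomials. Three more useful formulas for evaluating Chebyshev polynomials can be concluded from this product expansion:

$$\begin{aligned} T_{2n}(x) &= 2\,T_n^2(x) - T_0(x) = 2\,T_n^2(x) - 1, \\ T_{2n+1}(x) &= 2\,T_{n+1}(x)\,T_n(x) - T_1(x) = 2\,T_{n+1}(x)\,T_n(x) - x, \\ T_{2n-1}(x) &= 2\,T_{n-1}(x)\,T_n(x) - T_1(x) = 2\,T_{n-1}(x)\,T_n(x) - x. \end{aligned}$$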

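The polynomials of the second kind satisfy the similar relation:

$$T_m(x)\,U_n(x) = \begin{cases} \tfrac12\big(U_{m+n}(x) + U_{n-m}(x)\big), & \text{if } n \ge m - 1, \\ \tfrac12\big(U_{m+n}(x) - U_{m-n-2}(x)\big), & \text{if } n \le m - 2 \end{cases}$$

(with the definition U−1 ≡ 0 by convention). They also satisfy:

$$U_m(x)\,U_n(x) = \sum_{k=0}^{n} U_{m-n+2k}(x) = \sum_{\substack{p = m-n \\ \text{step } 2}}^{m+n} U_p(x)$$

for m ≥ n. For n = 2 this recurrence reduces to:

$$U_{m+2}(x) = U_2(x)\,U_m(x) - U_m(x) - U_{m-2}(x) = U_m(x)\,\big(U_2(x) - 1\big) - U_{m-2}(x),$$

which establishes the evenness or oddness of the even or odd indexed Chebyshev polynomials of the second kind depending on whether m starts with 2 or 3.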

Composition and divisibility properties

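The trigonometric definitions of Tn and Un imply the composition or nesting properties:[15]

$$\begin{aligned} T_{mn}(x) &= T_m\big(T_n(x)\big), \\ U_{mn-1}(x) &= U_{m-1}\big(T_n(x)\big)\,U_{n-1}(x). \end{aligned}$$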

For Tmn the order of composition may be reversed, making the family of polynomial functions Tn a commutative semigroup under composition.
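The nesting property Tm(Tn(x)) = Tmn(x) and its commutativity can be observed numerically. This is a small sketch using a recurrence-based evaluator; `cheb_t` is a name chosen for this example.

```python
def cheb_t(n: int, x: float) -> float:
    """Evaluate T_n(x) with the recurrence T_{k+1} = 2x T_k - T_{k-1}."""
    if n == 0:
        return 1.0
    prev, curr = 1.0, x
    for _ in range(n - 1):
        prev, curr = curr, 2.0 * x * curr - prev
    return curr


x = 0.37
nested_34 = cheb_t(3, cheb_t(4, x))   # T_3(T_4(x))
nested_43 = cheb_t(4, cheb_t(3, x))   # T_4(T_3(x))
direct_12 = cheb_t(12, x)             # T_12(x)
```

Up to floating-point rounding, all three quantities agree, which reflects the fact that both compositions reduce to cos(12θ) when x = cos θ.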

Since Tm(x) is divisible by x if m is odd, it follows that Tmn(x) is divisible by Tn(x) if m is odd. Furthermore, Umn−1(x) is divisible by Un−1(x), and in the case that m is even, divisible by Tn(x)Un−1(x).

Orthogonality

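Both Tn and Un form a sequence of orthogonal polynomials. The polynomials of the first kind Tn are orthogonal with respect to the weight:

$$\frac{1}{\sqrt{1 - x^2}}$$

on the interval [−1, 1], i.e. we have:

$$\int_{-1}^{1} T_n(x)\,T_m(x)\,\frac{dx}{\sqrt{1-x^2}} = \begin{cases} 0 & \text{if } n \ne m, \\ \pi & \text{if } n = m = 0, \\ \dfrac{\pi}{2} & \text{if } n = m \ne 0. \end{cases}$$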

This can be proven by letting x = cos θ and using the defining identity Tn(cos θ) = cos(nθ).

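Similarly, the polynomials of the second kind Un are orthogonal with respect to the weight:

$$\sqrt{1 - x^2}$$

on the interval [−1, 1], i.e. we have:

$$\int_{-1}^{1} U_n(x)\,U_m(x)\,\sqrt{1-x^2}\,dx = \begin{cases} 0 & \text{if } n \ne m, \\ \dfrac{\pi}{2} & \text{if } n = m. \end{cases}$$

(The measure √(1 − x²) dx is, to within a normalizing constant, the Wigner semicircle distribution.)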

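These orthogonality properties follow from the fact that the Chebyshev polynomials solve the Chebyshev differential equations:

$$\begin{aligned} \left(1 - x^2\right) T_n'' - x\,T_n' + n^2\,T_n &= 0, \\ \left(1 - x^2\right) U_n'' - 3x\,U_n' + n(n+2)\,U_n &= 0, \end{aligned}$$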

which are Sturm–Liouville differential equations. It is a general feature of such differential equations that there is a distinguished orthonormal set of solutions. (Another way to define the Chebyshev polynomials is as the solutions to those equations.)

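The Tn also satisfy a discrete orthogonality condition:

$$\sum_{k=0}^{N-1} T_i(x_k)\,T_j(x_k) = \begin{cases} 0 & \text{if } i \ne j, \\ N & \text{if } i = j = 0, \\ \dfrac{N}{2} & \text{if } i = j \ne 0, \end{cases}$$

where N is any integer greater than max(i, j),[9] and the xk are the N Chebyshev nodes (see above) of TN(x):

$$x_k = \cos\!\left(\pi\,\frac{2k+1}{2N}\right) \qquad \text{for } k = 0, 1, \ldots, N-1.$$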
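The discrete orthogonality sums can be evaluated directly, since Tm(cos θ) = cos(mθ) at the nodes. The sketch below assumes the node angles θk = π(2k + 1)/(2N); the function name `discrete_inner_product` is illustrative.

```python
import math


def discrete_inner_product(i: int, j: int, N: int) -> float:
    """Sum of T_i(x_k) T_j(x_k) over the N roots x_k = cos(theta_k) of T_N."""
    total = 0.0
    for k in range(N):
        theta = math.pi * (2 * k + 1) / (2 * N)
        # T_m(cos theta) = cos(m theta), so no polynomial evaluation is needed.
        total += math.cos(i * theta) * math.cos(j * theta)
    return total
```

With N = 8, the sum for (i, j) = (2, 5) vanishes up to rounding, while (0, 0) gives N and (3, 3) gives N/2.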

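For the polynomials of the second kind and any integer N > i + j with the same Chebyshev nodes xk, there are similar sums:

$$\sum_{k=0}^{N-1} U_i(x_k)\,U_j(x_k)\left(1 - x_k^2\right) = \begin{cases} 0 & \text{if } i \ne j, \\ \dfrac{N}{2} & \text{if } i = j, \end{cases}$$

and without the weight function:

$$\sum_{k=0}^{N-1} U_i(x_k)\,U_j(x_k) = \begin{cases} 0 & \text{if } i \not\equiv j \pmod 2, \\ N\,\big(1 + \min\{i, j\}\big) & \text{if } i \equiv j \pmod 2. \end{cases}$$

For any integer N > i + j, based on the N zeros of UN(x):

$$y_k = \cos\!\left(\pi\,\frac{k+1}{N+1}\right) \qquad \text{for } k = 0, 1, \ldots, N-1,$$

one can get the sum:

$$\sum_{k=0}^{N-1} U_i(y_k)\,U_j(y_k)\left(1 - y_k^2\right) = \begin{cases} 0 & \text{if } i \ne j, \\ \dfrac{N+1}{2} & \text{if } i = j, \end{cases}$$

and again without the weight function:

$$\sum_{k=0}^{N-1} U_i(y_k)\,U_j(y_k) = \begin{cases} 0 & \text{if } i \not\equiv j \pmod 2, \\ \big(\min\{i, j\} + 1\big)\big(N - \max\{i, j\}\big) & \text{if } i \equiv j \pmod 2. \end{cases}$$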

Minimal ∞-norm

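For any given n ≥ 1, among the polynomials of degree n with leading coefficient 1 (monic polynomials):

$$f(x) = \frac{1}{2^{n-1}}\,T_n(x)$$

is the one of which the maximal absolute value on the interval [−1, 1] is minimal.

This maximal absolute value is:

$$\frac{1}{2^{n-1}}$$

and |f(x)| reaches this maximum exactly n + 1 times at:

$$x = \cos\frac{k\pi}{n} \qquad \text{for } 0 \le k \le n.$$

To see this, assume that wn(x) is a polynomial of degree n with leading coefficient 1 whose maximal absolute value on the interval [−1, 1] is less than $1/2^{n-1}$.

Define

$$f_n(x) = \frac{1}{2^{n-1}}\,T_n(x) - w_n(x).$$

Because at extreme points of Tn we have

$$|w_n(x)| < \left|\frac{1}{2^{n-1}}\,T_n(x)\right|,$$

it follows that

$$\begin{aligned} f_n(x) &> 0 \quad \text{for } x = \cos\frac{2k\pi}{n}, & 0 \le 2k \le n, \\ f_n(x) &< 0 \quad \text{for } x = \cos\frac{(2k+1)\pi}{n}, & 0 \le 2k+1 \le n. \end{aligned}$$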

From the intermediate value theorem, fn(x) has at least n roots. However, this is impossible, as fn(x) is a polynomial of degree n − 1, so the fundamental theorem of algebra implies it has at most n − 1 roots.
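The extremal property can be illustrated numerically by comparing the sup norm of the monic Chebyshev polynomial with that of other monic polynomials of the same degree. The sketch below samples each polynomial on a uniform grid, so the computed values are grid estimates of the true maxima; the two comparison polynomials (x^n and a monic polynomial with equally spaced roots) are arbitrary choices made for this example.

```python
import math


def sup_norm(f, samples: int = 2001) -> float:
    """Estimate max |f(x)| on [-1, 1] from a uniform grid that includes both endpoints."""
    return max(abs(f(-1.0 + 2.0 * k / (samples - 1))) for k in range(samples))


n = 5


def monic_chebyshev(x: float) -> float:
    """T_n(x) / 2^(n-1), evaluated through cos(n arccos x) for |x| <= 1."""
    return math.cos(n * math.acos(max(-1.0, min(1.0, x)))) / 2 ** (n - 1)


def monic_power(x: float) -> float:
    """The monic polynomial x^n."""
    return x ** n


def monic_equispaced(x: float) -> float:
    """Monic polynomial whose n roots are equally spaced on [-1, 1]."""
    prod = 1.0
    for k in range(n):
        prod *= x - (-1.0 + 2.0 * k / (n - 1))
    return prod


norms = {
    "chebyshev": sup_norm(monic_chebyshev),
    "power": sup_norm(monic_power),
    "equispaced": sup_norm(monic_equispaced),
}
```

For n = 5 the Chebyshev entry equals 2^(1−n) = 1/16, and both comparison polynomials have a larger estimated maximum.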

Remark

By the equioscillation theorem, among all the polynomials of degree ≤ n, the polynomial f minimizes ‖f‖∞ on [−1, 1] if and only if there are n + 2 points −1 ≤ x0 < x1 < ⋯ < xn+1 ≤ 1 such that |f(xi)| = ‖f‖∞.

Of course, the null polynomial on the interval [−1, 1] can be approximated by itself and minimizes the ∞-norm.

Above, however, |f| reaches its maximum only n + 1 times because we are searching for the best polynomial of degree n ≥ 1 (therefore the theorem invoked previously cannot be used).

Chebyshev polynomials as special cases of more general polynomial families

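The Chebyshev polynomials are a special case of the ultraspherical or Gegenbauer polynomials $C_n^{(\lambda)}(x)$, which themselves are a special case of the Jacobi polynomials $P_n^{(\alpha,\beta)}(x)$:

$$T_n(x) = \frac{n}{2}\,\lim_{q \to 0} \frac{1}{q}\,C_n^{(q)}(x) \quad (n \ge 1), \qquad U_n(x) = C_n^{(1)}(x).$$

Chebyshev polynomials are also a special case of Dickson polynomials:

$$D_n(2x\alpha,\, \alpha^2) = 2\alpha^n\,T_n(x), \qquad E_n(2x\alpha,\, \alpha^2) = \alpha^n\,U_n(x).$$

In particular, when α = 1/2, they are related by $D_n(x, \tfrac14) = 2^{1-n}\,T_n(x)$ and $E_n(x, \tfrac14) = 2^{-n}\,U_n(x)$.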

Other properties

The curves given by y = Tn(x), or equivalently, by the parametric equations y = Tn(cos θ) = cos nθ, x = cos θ, are a special case of Lissajous curves with frequency ratio equal to n.

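Similar to the formula:

$$T_n(\cos\theta) = \cos(n\theta),$$

we have the analogous formula:

$$T_{2n+1}(\sin\theta) = (-1)^n \sin\big((2n+1)\theta\big).$$

For x ≠ 0:

$$T_n\!\left(\frac{x + x^{-1}}{2}\right) = \frac{x^n + x^{-n}}{2}$$

and:

$$x^n = T_n\!\left(\frac{x + x^{-1}}{2}\right) + \frac{x - x^{-1}}{2}\;U_{n-1}\!\left(\frac{x + x^{-1}}{2}\right),$$

which follows from the fact that this holds by definition for x = e^{iθ}.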

Examples

First kind

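The first few Chebyshev polynomials of the first kind are OEIS: A028297

$$\begin{aligned} T_0(x) &= 1 \\ T_1(x) &= x \\ T_2(x) &= 2x^2 - 1 \\ T_3(x) &= 4x^3 - 3x \\ T_4(x) &= 8x^4 - 8x^2 + 1 \\ T_5(x) &= 16x^5 - 20x^3 + 5x \\ T_6(x) &= 32x^6 - 48x^4 + 18x^2 - 1 \end{aligned}$$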

Second kind

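The first few Chebyshev polynomials of the second kind are OEIS: A053117

$$\begin{aligned} U_0(x) &= 1 \\ U_1(x) &= 2x \\ U_2(x) &= 4x^2 - 1 \\ U_3(x) &= 8x^3 - 4x \\ U_4(x) &= 16x^4 - 12x^2 + 1 \\ U_5(x) &= 32x^5 - 32x^3 + 6x \\ U_6(x) &= 64x^6 - 80x^4 + 24x^2 - 1 \end{aligned}$$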

As a basis set

In the appropriate Sobolev space, the set of Chebyshev polynomials forms an orthonormal basis, so that a function in the same space can, on −1 ≤ x ≤ 1, be expressed via the expansion:[16]

$$f(x) = \sum_{n=0}^{\infty} a_n\,T_n(x).$$

Furthermore, as mentioned previously, the Chebyshev polynomials form an orthogonal basis which (among other things) implies that the coefficients an can be determined easily through the application of an inner product. This sum is called a Chebyshev series or a Chebyshev expansion.

Since a Chebyshev series is related to a Fourier cosine series through a change of variables, all of the theorems, identities, etc. that apply to Fourier series have a Chebyshev counterpart.[16] These attributes include:

- The Chebyshev polynomials form a complete orthogonal system.

- The Chebyshev series converges to f(x) if the function is piecewise smooth and continuous. The smoothness requirement can be relaxed in most cases – as long as there are a finite number of discontinuities in f(x) and its derivatives.

- At a discontinuity, the series will converge to the average of the right and left limits.

The abundance of the theorems and identities inherited from Fourier series make the Chebyshev polynomials important tools in numeric analysis; for example they are the most popular general purpose basis functions used in the spectral method,[16] often in favor of trigonometric series due to generally faster convergence for continuous functions (Gibbs' phenomenon is still a problem).

Example 1

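Consider the Chebyshev expansion of log(1 + x). One can express:

$$\log(1 + x) = \sum_{n=0}^{\infty} a_n\,T_n(x).$$

One can find the coefficients an either through the application of an inner product or by the discrete orthogonality condition. For the inner product:

$$\int_{-1}^{+1} \frac{T_m(x)\,\log(1+x)}{\sqrt{1-x^2}}\,dx = \sum_{n=0}^{\infty} a_n \int_{-1}^{+1} \frac{T_m(x)\,T_n(x)}{\sqrt{1-x^2}}\,dx,$$

which gives:

$$a_n = \begin{cases} -\log 2 & \text{for } n = 0, \\ \dfrac{-2\,(-1)^n}{n} & \text{for } n > 0. \end{cases}$$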

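Alternatively, when the inner product of the function being approximated cannot be evaluated, the discrete orthogonality condition gives an often useful result for approximate coefficients:

$$a_n \approx \frac{2 - \delta_{0n}}{N} \sum_{k=0}^{N-1} T_n(x_k)\,\log(1 + x_k),$$

where δij is the Kronecker delta function and the xk are the N Gauss–Chebyshev zeros of TN(x):

$$x_k = \cos\!\left(\frac{\pi\,(k + \tfrac12)}{N}\right).$$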

For any N, these approximate coefficients provide an exact approximation to the function at xk with a controlled error between those points. The exact coefficients are obtained with N = ∞, thus representing the function exactly at all points in [−1,1]. The rate of convergence depends on the function and its smoothness.

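This allows us to compute the approximate coefficients an very efficiently through the discrete cosine transform:

$$a_n \approx \frac{2 - \delta_{0n}}{N} \sum_{k=0}^{N-1} \cos\!\left(\frac{n\,\pi\,(k + \tfrac12)}{N}\right) \log(1 + x_k).$$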
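The discrete-orthogonality estimate for log(1 + x) can be sketched with a direct O(N·count) summation rather than a fast cosine transform, which keeps the example dependency-free. The function names are hypothetical; the closed-form coefficients a0 = −log 2 and an = −2(−1)^n/n (n > 0) are used for comparison. Because log(1 + x) is singular at x = −1, the approximate coefficients converge only slowly as N grows.

```python
import math


def approx_chebyshev_coeffs(f, N: int, count: int) -> list:
    """Approximate a_0..a_{count-1} from N samples of f at the roots of T_N."""
    thetas = [math.pi * (k + 0.5) / N for k in range(N)]
    samples = [f(math.cos(t)) for t in thetas]
    coeffs = []
    for n in range(count):
        # T_n(x_k) = cos(n * theta_k) at the Gauss-Chebyshev nodes.
        s = sum(fx * math.cos(n * t) for fx, t in zip(samples, thetas))
        coeffs.append((1.0 if n == 0 else 2.0) * s / N)
    return coeffs


def exact_log1p_coeff(n: int) -> float:
    """Closed-form Chebyshev coefficient of log(1 + x)."""
    return -math.log(2.0) if n == 0 else -2.0 * (-1) ** n / n


# math.log1p(x) computes log(1 + x); the nodes never reach x = -1.
approx = approx_chebyshev_coeffs(math.log1p, N=200, count=6)
errors = [abs(a - exact_log1p_coeff(n)) for n, a in enumerate(approx)]
```

With N = 200 the first few coefficients agree with the closed form to roughly two decimal places; a smoother function would show much faster agreement.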

Example 2

To provide another example:

Partial sums

The partial sums of:

$$f(x) = \sum_{n=0}^{\infty} a_n\,T_n(x)$$

are very useful in the approximation of various functions and in the solution of differential equations (see spectral method). Two common methods for determining the coefficients an are through the use of the inner product as in Galerkin's method and through the use of collocation which is related to interpolation.

As an interpolant, the N coefficients of the (N − 1)st partial sum are usually obtained on the Chebyshev–Gauss–Lobatto[17] points (or Lobatto grid), which results in minimum error and avoids Runge's phenomenon associated with a uniform grid. This collection of points corresponds to the extrema of the highest order polynomial in the sum, plus the endpoints and is given by:

$$x_k = -\cos\!\left(\frac{k\,\pi}{N-1}\right); \qquad k = 0, 1, \ldots, N-1.$$

Polynomial in Chebyshev form

An arbitrary polynomial of degree N can be written in terms of the Chebyshev polynomials of the first kind.[9] Such a polynomial p(x) is of the form:

$$p(x) = \sum_{n=0}^{N} a_n\,T_n(x).$$

Polynomials in Chebyshev form can be evaluated using the Clenshaw algorithm.
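A minimal sketch of the Clenshaw recurrence for a first-kind Chebyshev sum p(x) = a0·T0(x) + … + aN·TN(x) follows. It uses the backward recurrence b_k = a_k + 2x·b_{k+1} − b_{k+2} and the final combination a0 + x·b1 − b2; the function name `clenshaw` and the list-based coefficient layout are assumptions of this example.

```python
def clenshaw(coeffs, x: float) -> float:
    """Evaluate sum(coeffs[n] * T_n(x)) without forming any T_n explicitly."""
    if not coeffs:
        return 0.0
    b1 = b2 = 0.0  # b_{k+1} and b_{k+2}, both zero beyond the last coefficient
    for a in reversed(coeffs[1:]):
        # Right-hand side uses the old b1 and b2 before they are reassigned.
        b1, b2 = a + 2.0 * x * b1 - b2, b1
    return coeffs[0] + x * b1 - b2
```

For instance, `clenshaw([1.0, 2.0, 3.0], 0.5)` evaluates 1 + 2·T1(0.5) + 3·T2(0.5) = 1 + 1 − 1.5.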

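Polynomials denoted Cn(x) and Sn(x), closely related to the Chebyshev polynomials, are sometimes used. They are defined by:[18]

$$C_n(x) = 2\,T_n\!\left(\frac{x}{2}\right), \qquad S_n(x) = U_n\!\left(\frac{x}{2}\right),$$

and satisfy:

$$C_n(x) = S_n(x) - S_{n-2}(x).$$

A. F. Horadam called the polynomials Cn(x) Vieta–Lucas polynomials and the polynomials Sn(x) Vieta–Fibonacci polynomials.[19]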

Shifted Chebyshev polynomials of the first and second kinds are related to the Chebyshev polynomials by:[18]

$$T_n^*(x) = T_n(2x - 1), \qquad U_n^*(x) = U_n(2x - 1).$$

When the argument of the Chebyshev polynomial satisfies 2x − 1 ∈ [−1, 1] the argument of the shifted Chebyshev polynomial satisfies x ∈ [0, 1]. Similarly, one can define shifted polynomials for generic intervals [a, b].
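For a generic interval [a, b], one common choice is to compose Tn with the affine map that sends [a, b] onto [−1, 1]. The sketch below follows that convention; the function name `shifted_cheb_t` and its default interval [0, 1] are assumptions made for illustration.

```python
def cheb_t(n: int, x: float) -> float:
    """Evaluate T_n(x) with the recurrence T_{k+1} = 2x T_k - T_{k-1}."""
    if n == 0:
        return 1.0
    prev, curr = 1.0, x
    for _ in range(n - 1):
        prev, curr = curr, 2.0 * x * curr - prev
    return curr


def shifted_cheb_t(n: int, x: float, a: float = 0.0, b: float = 1.0) -> float:
    """T_n evaluated at the affine image of x under the map [a, b] -> [-1, 1]."""
    if a >= b:
        raise ValueError("interval must satisfy a < b")
    return cheb_t(n, (2.0 * x - a - b) / (b - a))
```

With the default interval this reduces to Tn(2x − 1), so `shifted_cheb_t(3, 0.25)` equals T3(−0.5).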

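Around 1990 the terms "third-kind" and "fourth-kind" came into use in connection with Chebyshev polynomials, although the polynomials denoted by these terms had an earlier development under the name airfoil polynomials. According to J. C. Mason and G. H. Elliott, the terminology "third-kind" and "fourth-kind" is due to Walter Gautschi, "in consultation with colleagues in the field of orthogonal polynomials."[21] The Chebyshev polynomials of the third kind are defined as:

$$V_n(x) = \frac{\cos\!\big((n + \tfrac12)\,\theta\big)}{\cos\!\big(\tfrac{\theta}{2}\big)} = \sqrt{\frac{2}{1+x}}\;T_{2n+1}\!\left(\sqrt{\frac{x+1}{2}}\right)$$

and the Chebyshev polynomials of the fourth kind are defined as:

$$W_n(x) = \frac{\sin\!\big((n + \tfrac12)\,\theta\big)}{\sin\!\big(\tfrac{\theta}{2}\big)} = U_{2n}\!\left(\sqrt{\frac{x+1}{2}}\right),$$

where θ = arccos x. The polynomial families Tn(x), Un(x), Vn(x), and Wn(x) are orthogonal with respect to the weights:

$$\left(1 - x^2\right)^{-1/2}, \quad \left(1 - x^2\right)^{1/2}, \quad (1 - x)^{-1/2}(1 + x)^{1/2}, \quad (1 + x)^{-1/2}(1 - x)^{1/2}$$

and are proportional to Jacobi polynomials $P_n^{(\alpha,\beta)}(x)$ with:

$$(\alpha, \beta) = \left(-\tfrac12, -\tfrac12\right), \quad \left(\tfrac12, \tfrac12\right), \quad \left(-\tfrac12, \tfrac12\right), \quad \left(\tfrac12, -\tfrac12\right).$$

All four families satisfy the recurrence $p_n(x) = 2x\,p_{n-1}(x) - p_{n-2}(x)$ with $p_0(x) = 1$, where $p_n = T_n, U_n, V_n,$ or $W_n$, but they differ according to whether $p_1(x)$ equals $x$, $2x$, $2x - 1$, or $2x + 1$.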

See also

- Chebyshev filter

- Chebyshev cube root

- Dickson polynomials

- Legendre polynomials

- Laguerre polynomials

- Hermite polynomials

- Minimal polynomial of 2cos(2pi/n)

- Romanovski polynomials

- Chebyshev rational functions

- Approximation theory

- The Chebfun system

- Discrete Chebyshev transform

- Markov brothers' inequality

- Clenshaw algorithm

References

- ↑ Rivlin, Theodore J. (1974). "Chapter 2, Extremal properties". The Chebyshev Polynomials. Pure and Applied Mathematics (1st ed.). New York-London-Sydney: Wiley-Interscience [John Wiley & Sons]. pp. 56–123. ISBN 978-047172470-4.

- ↑ Lanczos, C. (1952). "Solution of systems of linear equations by minimized iterations". Journal of Research of the National Bureau of Standards 49 (1): 33. doi:10.6028/jres.049.006.

- ↑ Chebyshev polynomials were first presented in Chebyshev, P. L. (1854). "Théorie des mécanismes connus sous le nom de parallélogrammes" (in fr). Mémoires des Savants étrangers présentés à l'Académie de Saint-Pétersbourg 7: 539–586.

- ↑ Schaeffer, A. C. (1941). "Inequalities of A. Markoff and S. Bernstein for polynomials and related functions". Bulletin of the American Mathematical Society 47 (8): 565–579. doi:10.1090/S0002-9904-1941-07510-5. ISSN 0002-9904. https://projecteuclid.org/journals/bulletin-of-the-american-mathematical-society/volume-47/issue-8/Inequalities-of-A-Markoff-and-S-Bernstein-for-polynomials-and/bams/1183503783.full.

- ↑ Ritt, J. F. (1922). "Prime and Composite Polynomials". Trans. Amer. Math. Soc. 23: 51–66. doi:10.1090/S0002-9947-1922-1501189-9. https://www.ams.org/journals/tran/1922-023-01/S0002-9947-1922-1501189-9.

- ↑ Demeyer, Jeroen (2007). Diophantine Sets over Polynomial Rings and Hilbert's Tenth Problem for Function Fields (PDF) (Ph.D. thesis). p. 70. Archived from the original (PDF) on 2007-07-02.

- ↑ 7.0 7.1 Erdélyi, Arthur, ed (1953). Higher Transcendental Functions - Volume II - Based, in part, on notes left by Harry Bateman.. Bateman Manuscript Project. II (1 ed.). New York / Toronto / London: McGraw-Hill Book Company, Inc.. p. 184:(3),(4). Contract No. N6onr-244 Task Order XIV. Project Designation Number: NR 043-045. Order No. 19546. http://apps.nrbook.com/bateman/Vol2.pdf. Retrieved 2020-07-23. [1][2] (xvii+1 errata page+396 pages, red cloth hardcover) (NB. Copyright was renewed by California Institute of Technology in 1981.); Reprint: Robert E. Krieger Publishing Co., Inc., Melbourne, Florida, USA. 1981. ISBN:0-89874-069-X; Planned Dover reprint: ISBN:0-486-44615-8.

- ↑ Beckenbach, E. F.; Seidel, W.; Szász, Otto (1951), "Recurrent determinants of Legendre and of ultraspherical polynomials", Duke Math. J. 18: 1–10, doi:10.1215/S0012-7094-51-01801-7

- ↑ 9.0 9.1 9.2 Mason & Handscomb 2002.

- ↑ Cody, W. J. (1970). "A survey of practical rational and polynomial approximation of functions". SIAM Review 12 (3): 400–423. doi:10.1137/1012082.

- ↑ Mathar, R. J. (2006). "Chebyshev series expansion of inverse polynomials". J. Comput. Appl. Math. 196 (2): 596–607. doi:10.1016/j.cam.2005.10.013. Bibcode: 2006JCoAM.196..596M.

- ↑ Gürtaş, Y. Z. (2017). "Chebyshev Polynomials and the minimal polynomial of

- ↑ 13.0 13.1 Wolfram, D. A. (2022). "Factoring Chebyshev polynomials of the first and second kinds with minimal polynomials of

- ↑ Wolfram, D. A. (2022). "Factoring Chebyshev polynomials with minimal polynomials of

- ↑ Rayes, M. O.; Trevisan, V.; Wang, P. S. (2005), "Factorization properties of chebyshev polynomials", Computers & Mathematics with Applications 50 (8–9): 1231–1240, doi:10.1016/j.camwa.2005.07.003

- ↑ 16.0 16.1 16.2 Boyd, John P. (2001). Chebyshev and Fourier Spectral Methods (second ed.). Dover. ISBN 0-486-41183-4. http://www-personal.umich.edu/~jpboyd/aaabook_9500may00.pdf. Retrieved 2009-03-19.

- ↑ "Chebyshev Interpolation: An Interactive Tour". http://www.scottsarra.org/chebyApprox/chebyshevApprox.html.

- ↑ 18.0 18.1 Abramowitz, Milton; Stegun, Irene Ann, eds (1983). "Chapter 22". Handbook of Mathematical Functions with Formulas, Graphs, and Mathematical Tables. Applied Mathematics Series. 55 (Ninth reprint with additional corrections of tenth original printing with corrections (December 1972); first ed.). Washington D.C.; New York: United States Department of Commerce, National Bureau of Standards; Dover Publications. pp. 778. LCCN 65-12253. ISBN 978-0-486-61272-0. http://www.math.sfu.ca/~cbm/aands/page_778.htm.

- ↑ Horadam, A. F. (2002), "Vieta polynomials", Fibonacci Quarterly 40 (3): 223–232, https://www.fq.math.ca/Scanned/40-3/horadam2.pdf

- ↑ Viète, François (1646). Francisci Vietae Opera mathematica : in unum volumen congesta ac recognita / opera atque studio Francisci a Schooten. Bibliothèque nationale de France. https://gallica.bnf.fr/ark:/12148/bpt6k107597d.pdf.

- ↑ 21.0 21.1 21.2 Mason, J. C.; Elliott, G. H. (1993), "Near-minimax complex approximation by four kinds of Chebyshev polynomial expansion", J. Comput. Appl. Math. 46 (1–2): 291–300, doi:10.1016/0377-0427(93)90303-S

- ↑ 22.0 22.1 Desmarais, Robert N.; Bland, Samuel R. (1995), "Tables of properties of airfoil polynomials", NASA Reference Publication 1343 (National Aeronautics and Space Administration), https://ntrs.nasa.gov/citations/19960001864

Sources

- Abramowitz, Milton; Stegun, Irene Ann, eds (1983). "Chapter 22". Handbook of Mathematical Functions with Formulas, Graphs, and Mathematical Tables. Applied Mathematics Series. 55 (Ninth reprint with additional corrections of tenth original printing with corrections (December 1972); first ed.). Washington D.C.; New York: United States Department of Commerce, National Bureau of Standards; Dover Publications. pp. 773. LCCN 65-12253. ISBN 978-0-486-61272-0. http://www.math.sfu.ca/~cbm/aands/page_773.htm.

- Dette, Holger (1995). "A note on some peculiar nonlinear extremal phenomena of the Chebyshev polynomials". Proceedings of the Edinburgh Mathematical Society 38 (2): 343–355. doi:10.1017/S001309150001912X.

- Elliott, David (1964). "The evaluation and estimation of the coefficients in the Chebyshev Series expansion of a function". Math. Comp. 18 (86): 274–284. doi:10.1090/S0025-5718-1964-0166903-7.

- Eremenko, A.; Lempert, L. (1994). "An Extremal Problem For Polynomials". Proceedings of the American Mathematical Society 122 (1): 191–193. doi:10.1090/S0002-9939-1994-1207536-1. http://www.math.purdue.edu/~eremenko/dvi/lempert.pdf.

- Hernandez, M. A. (2001). "Chebyshev's approximation algorithms and applications". Computers & Mathematics with Applications 41 (3–4): 433–445. doi:10.1016/s0898-1221(00)00286-8.

- Mason, J. C. (1984). "Some properties and applications of Chebyshev polynomial and rational approximation". Rational Approximation and Interpolation. Lecture Notes in Mathematics. 1105. pp. 27–48. doi:10.1007/BFb0072398. ISBN 978-3-540-13899-0.

- Mason, J. C.; Handscomb, D.C. (2002). Chebyshev Polynomials. Chapman and Hall/CRC. doi:10.1201/9781420036114. ISBN 978-1-4200-3611-4. https://books.google.com/books?id=8FHf0P3to0UC.

- Mathar, Richard J. (2006). "Chebyshev series expansion of inverse polynomials". Journal of Computational and Applied Mathematics 196 (2): 596–607. doi:10.1016/j.cam.2005.10.013. Bibcode: 2006JCoAM.196..596M.

- Koornwinder, Tom H.; Wong, Roderick S. C.; Koekoek, Roelof; Swarttouw, René F. (2010), "Orthogonal Polynomials", in Olver, Frank W. J.; Lozier, Daniel M.; Boisvert, Ronald F. et al., NIST Handbook of Mathematical Functions, Cambridge University Press, ISBN 978-0-521-19225-5, http://dlmf.nist.gov/18

- Remes, Eugene. "On an Extremal Property of Chebyshev Polynomials". https://www.math.technion.ac.il/hat/fpapers/remeztrans.pdf.

- Salzer, Herbert E. (1976). "Converting interpolation series into Chebyshev series by recurrence formulas". Mathematics of Computation 30 (134): 295–302. doi:10.1090/S0025-5718-1976-0395159-3.

- Scraton, R.E. (1969). "The Solution of integral equations in Chebyshev series". Mathematics of Computation 23 (108): 837–844. doi:10.1090/S0025-5718-1969-0260224-4.

- Smith, Lyle B. (1966). "Computation of Chebyshev series coefficients". Comm. ACM 9 (2): 86–87. doi:10.1145/365170.365195. Algorithm 277.

- Hazewinkel, Michiel, ed. (2001), "Chebyshev polynomials", Encyclopedia of Mathematics, Springer Science+Business Media B.V. / Kluwer Academic Publishers, ISBN 978-1-55608-010-4, https://www.encyclopediaofmath.org/index.php?title=Main_Page

External links

- Weisstein, Eric W. "Chebyshev polynomial of the first kind". http://mathworld.wolfram.com/ChebyshevPolynomialoftheFirstKind.html.

- Mathews, John H. (2003). "Module for Chebyshev polynomials". California State University. http://math.fullerton.edu/mathews/n2003/ChebyshevPolyMod.html.

- "Chebyshev interpolation: An interactive tour". Mathematical Association of America (MAA). http://www.maa.org/sites/default/files/images/upload_library/4/vol6/Sarra/Chebyshev.htmlnone – includes illustrative Java applet.

- "Numerical computing with functions". http://www.chebfun.org.

- "Is there an intuitive explanation for an extremal property of Chebyshev polynomials?". https://mathoverflow.net/q/25534.

- "Chebyshev polynomial evaluation and the Chebyshev transform". https://www.boost.org/doc/libs/release/libs/math/doc/html/math_toolkit/sf_poly/chebyshev.html.
