Continuous integration

From HandWiki - Reading time: 12 min

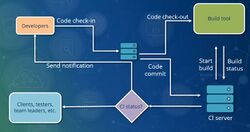

In software engineering, continuous integration (CI) is the practice of merging all developers' working copies to a shared mainline several times a day.[1] Today it is typically implemented so that each merge triggers an automated build and test run. Grady Booch first proposed the term CI in his 1991 method,[2] although he did not advocate integrating several times a day. Extreme programming (XP) adopted the concept of CI and did advocate integrating more than once per day, perhaps as many as tens of times per day.[3]

Rationale

When embarking on a change, a developer takes a copy of the current code base on which to work. As other developers submit changed code to the source code repository, this copy gradually ceases to reflect the repository code. Existing code can change, and new code, new libraries, and other resources can be added, all of which create dependencies and potential conflicts.

The longer development continues on a branch without merging back to the mainline, the greater the risk of multiple integration conflicts[4] and failures when the developer branch is eventually merged back. When developers submit code to the repository they must first update their code to reflect the changes in the repository since they took their copy. The more changes the repository contains, the more work developers must do before submitting their own changes.

Eventually, the repository may become so different from the developers' baselines that they enter what is sometimes referred to as "merge hell", or "integration hell",[5] where the time it takes to integrate exceeds the time it took to make their original changes.[6]

Workflows

Run tests locally

CI should be used in combination with automated unit tests written through the practices of test-driven development. All unit tests should be run in the developer's local environment, and should pass, before the developer commits to the mainline. This helps prevent one developer's work-in-progress from breaking another developer's copy. Where necessary, incomplete features can be disabled before committing, for instance by using feature toggles.
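A feature toggle can be as simple as a guarded branch. The following is a minimal sketch, assuming toggles are read from environment variables; the `FEATURE_` prefix, the `feature_enabled` helper, and the `checkout_total` function are hypothetical names chosen for illustration.

```python
import os


def feature_enabled(name, env=os.environ):
    """Return True when the (hypothetical) toggle FEATURE_<NAME> is switched on."""
    value = env.get(f"FEATURE_{name.upper()}", "")
    return value.strip().lower() in {"1", "true", "on", "yes"}


def checkout_total(prices_in_cents, env=os.environ):
    """Sum the prices; apply the unfinished discount only if its toggle is on."""
    total = sum(prices_in_cents)
    if feature_enabled("bulk_discount", env):
        # Work in progress: this branch is committed to the mainline but stays
        # inactive until the toggle is switched on.
        total -= total // 10
    return total
```

With the toggle absent, `checkout_total` behaves exactly as before, so the incomplete branch can be committed without affecting other developers.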

Compile the mainline periodically; run tests of the mainline and/or use continuous quality control

A build server compiles the code periodically. The build server may automatically run tests and/or implement other continuous quality control processes. Such processes aim to improve software quality and delivery time by periodically running additional static analyses, measuring performance, extracting documentation from the source code, and facilitating manual QA processes.

Use CI as part of continuous delivery or continuous deployment

CI is often intertwined with continuous delivery or continuous deployment in what is called a CI/CD pipeline. "Continuous delivery" ensures the software checked in on the mainline is always in a state that can be deployed to users, while "continuous deployment" fully automates the deployment process.

History

The earliest known work on continuous integration was the Infuse environment developed by G. E. Kaiser, D. E. Perry, and W. M. Schell.[7]

In 1994, Grady Booch used the phrase continuous integration in Object-Oriented Analysis and Design with Applications (2nd edition)[8] to explain how, when developing using micro processes, "internal releases represent a sort of continuous integration of the system, and exist to force closure of the micro process".

In 1997, Kent Beck and Ron Jeffries invented extreme programming (XP), which included continuous integration, while working on the Chrysler Comprehensive Compensation System project.[1] Beck published about continuous integration in 1998, emphasising the importance of face-to-face communication over technological support.[9] In 1999, Beck elaborated further in his first full book on Extreme Programming.[10] CruiseControl, one of the first open-source CI tools,[11] was released in 2001.

In 2010, Timothy Fitz published an article detailing how IMVU's engineering team had built and been using the first practical CI system. While his post was originally met with scepticism, it quickly caught on and found widespread adoption[12] as part of the Lean software development methodology, also based on IMVU.

Common practices

This section lists best practices suggested by various authors on how to achieve continuous integration, and how to automate this practice. Build automation is a best practice itself.[13][14]

Continuous integration, the practice of frequently integrating one's new or changed code with the existing code repository, should occur frequently enough that no intervening window remains between commit and build, and such that no errors can arise without developers noticing them and correcting them immediately.[1] Normal practice is to trigger a build on every commit to a repository, rather than on a periodic schedule. In a multi-developer environment with rapid commits, it is usual to start a short timer after each commit and to begin a build either when that timer expires or when a rather longer interval has passed since the last build. Because each new commit resets the short timer, this is the same technique used in many button debouncing algorithms.[15] In this way, the commit events are "debounced" to prevent unnecessary builds between a series of rapid-fire commits. Many automated tools offer this scheduling automatically.
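The debounced trigger described above can be sketched as a small state machine. This is an illustration only, not how any particular CI tool implements it; the class name `DebouncedTrigger` and the parameters `quiet_period` and `max_interval` are assumptions, and timestamps are passed in explicitly so the logic can be exercised without waiting.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DebouncedTrigger:
    quiet_period: float   # short timer restarted by every commit (seconds)
    max_interval: float   # longer limit measured from the last build (seconds)
    last_build: float = 0.0
    last_commit: Optional[float] = None  # None means no commit is waiting

    def on_commit(self, now: float) -> None:
        # Each new commit resets the short timer.
        self.last_commit = now

    def should_build(self, now: float) -> bool:
        if self.last_commit is None:
            return False  # nothing new to build
        timer_expired = now - self.last_commit >= self.quiet_period
        overdue = now - self.last_build >= self.max_interval
        if timer_expired or overdue:
            self.last_commit = None
            self.last_build = now
            return True
        return False
```

A scheduler would call `on_commit` from a repository hook and poll `should_build` at a fixed cadence. The `max_interval` check keeps a steady stream of commits from postponing the build indefinitely.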

Another factor is the need for a version control system that supports atomic commits; i.e., all of a developer's changes may be seen as a single commit operation. There is no point in trying to build from only half of the changed files.

To achieve these objectives, continuous integration relies on the following principles.

Maintain a code repository

This practice advocates the use of a revision control system for the project's source code. All artifacts required to build the project should be placed in the repository. In this practice, and in the revision control community generally, the convention is that the system should be buildable from a fresh checkout and should not require additional dependencies. Extreme Programming advocate Martin Fowler also mentions that where branching is supported by tools, its use should be minimised.[16] Instead, it is preferred for changes to be integrated rather than for multiple versions of the software to be maintained simultaneously. The mainline (or trunk) should be the place for the working version of the software.

Automate the build

A single command should be able to build the system. Long-established build tools such as make, as well as more recent tools, are frequently used in continuous integration environments to automate building. (For example, a Makefile, which contains a set of rules for building software, can be used to automate the make build process.) Automation of the build should include automating the integration, which often includes deployment into a production-like environment. In many cases, the build script not only compiles binaries but also generates documentation, website pages, statistics and distribution media (such as Debian DEB, Red Hat RPM or Windows MSI files).
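As a rough illustration of the single-command idea, the sketch below chains build steps behind one entry point and stops at the first failure. The step names and the placeholder commands are hypothetical; a real project would invoke its own compiler, test runner, and packager here.

```python
import subprocess
import sys


def run_build(steps):
    """Run each (name, argv) step in order; return the first non-zero exit code."""
    for name, argv in steps:
        print(f"[build] {name}")
        result = subprocess.run(argv)
        if result.returncode != 0:
            print(f"[build] step '{name}' failed with exit code {result.returncode}")
            return result.returncode
    return 0


# Placeholder steps (assumptions for illustration): each one just prints a message.
STEPS = [
    ("compile", [sys.executable, "-c", "print('compiling')"]),
    ("test", [sys.executable, "-c", "print('running tests')"]),
    ("package", [sys.executable, "-c", "print('packaging')"]),
]
```

Used as a script, the entry point would be `sys.exit(run_build(STEPS))`, so that the exit status tells the CI server whether the build succeeded.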

Make the build self-testing

Once the code is built, all tests should run to confirm that it behaves as the developers expect it to behave.[17]

Everyone commits to the baseline every day

By committing regularly, every committer can reduce the number of conflicting changes. Checking in a week's worth of work runs the risk of conflicting with other features, and the resulting conflicts can be very difficult to resolve. Early, small conflicts in an area of the system cause team members to communicate about the change they are making.[18] Committing all changes at least once a day (once per feature built) is generally considered part of the definition of continuous integration. In addition, performing a nightly build is generally recommended.[citation needed] These are lower bounds; the typical frequency is expected to be much higher.

Every commit (to baseline) should be built

The system should build commits to the current working version to verify that they integrate correctly. A common practice is to use automated continuous integration, although this may be done manually. Automated continuous integration employs a continuous integration server or daemon that monitors the revision control system for changes and then automatically runs the build process.
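The monitoring loop of such a server can be sketched as follows. This is a simplified illustration under stated assumptions: `get_head` and `build` are hypothetical callables standing in for a query to the revision control system and for the build process, and only the newest revision seen at each poll is built.

```python
from typing import Callable, Optional


def poll_once(get_head: Callable[[], str],
              build: Callable[[str], object],
              last_built: Optional[str]) -> Optional[str]:
    """Build the current head revision if it differs from the last one built."""
    head = get_head()
    if head != last_built:
        build(head)
        return head
    return last_built


def watch(get_head, build, polls: int, sleep=lambda: None) -> Optional[str]:
    """Poll a fixed number of times; a real daemon would loop until stopped."""
    last_built = None
    for _ in range(polls):
        last_built = poll_once(get_head, build, last_built)
        sleep()  # e.g. lambda: time.sleep(60) in a long-running process
    return last_built
```

Many CI servers can also be notified by a repository hook instead of polling; the polling form is shown here because it matches the "monitor for changes" description most directly.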

Every bug-fix commit should come with a test case

When fixing a bug, it is good practice to push a test case that reproduces the bug. This guards against the fix being reverted and the bug reappearing, which is known as a regression.
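A bug-fix commit of this kind might pair the corrected function with a test that fails on the old behaviour. The example below is hypothetical: it assumes an earlier `parse_version` compared version components as strings, so that "1.10.0" sorted before "1.9.0".

```python
import unittest


def parse_version(text):
    """Parse 'MAJOR.MINOR.PATCH' into a tuple of integers.

    Fix (hypothetical scenario): components are converted to int, so they
    compare numerically rather than character by character.
    """
    return tuple(int(part) for part in text.strip().split("."))


class ParseVersionRegressionTest(unittest.TestCase):
    def test_two_digit_minor_sorts_after_one_digit(self):
        # Reproduces the original report: 1.10.0 must be newer than 1.9.0.
        self.assertGreater(parse_version("1.10.0"), parse_version("1.9.0"))
```

Because the test runs as part of every build, reverting the fix would turn the build red immediately.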

Keep the build fast

The build needs to complete rapidly so that if there is a problem with integration, it is quickly identified.

Test in a clone of the production environment

A test environment that differs significantly from production can let failures slip through that surface only when the tested system is deployed to production. However, building a full replica of the production environment is often cost-prohibitive. Instead, the test environment or a separate pre-production environment ("staging") should be built as a scalable version of the production environment, which reduces cost while preserving the composition and nuances of the technology stack. Within these test environments, service virtualisation is commonly used to obtain on-demand access to dependencies (e.g., APIs, third-party applications, services, mainframes, etc.) that are beyond the team's control, still evolving, or too complex to configure in a virtual test lab.
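The idea behind standing in for an uncontrolled dependency can be shown with a much-simplified, in-process sketch. Service virtualisation tools typically simulate the dependency at the network level; here a stub object with canned responses plays that role instead. The `ExchangeRates` interface, `StubExchangeRates`, and `convert` are hypothetical names invented for this example.

```python
from typing import Dict, Protocol, Tuple


class ExchangeRates(Protocol):
    """Interface the code under test expects from the external service."""

    def rate(self, base: str, quote: str) -> float: ...


class StubExchangeRates:
    """Stand-in for a third-party rates API, returning canned responses."""

    def __init__(self, table: Dict[Tuple[str, str], float]):
        self._table = dict(table)

    def rate(self, base: str, quote: str) -> float:
        return self._table[(base, quote)]


def convert(amount_cents: int, base: str, quote: str, rates: ExchangeRates) -> int:
    """Convert an amount using whichever rate provider is supplied."""
    return round(amount_cents * rates.rate(base, quote))
```

In the test environment `convert` receives the stub; in production it would receive a client for the real service that exposes the same `rate` method.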

Make it easy to get the latest deliverables

Making builds readily available to stakeholders and testers can reduce the amount of rework necessary when rebuilding a feature that doesn't meet requirements. Additionally, early testing reduces the chances that defects survive until deployment. Finding errors earlier can reduce the amount of work necessary to resolve them.

All programmers should start the day by updating the project from the repository. That way, they will all stay up to date.

Everyone can see the results of the latest build

It should be easy to find out whether the build is broken and, if so, who made the relevant change and what that change was.
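One way to answer "who broke the build, and with what change" is to walk the build history back to the point where it turned red. The sketch below assumes a hypothetical `BuildRecord` structure with one record per built commit, ordered oldest first; real CI servers expose this information through their own interfaces.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class BuildRecord:
    revision: str
    author: str
    message: str
    passed: bool


def first_breaking_build(history: List[BuildRecord]) -> Optional[BuildRecord]:
    """Return the record that started the current run of failures.

    Returns None when there is no history or the latest build passed.
    """
    if not history or history[-1].passed:
        return None
    breaking = history[-1]
    for record in reversed(history):
        if record.passed:
            break  # reached the last green build; stop looking further back
        breaking = record
    return breaking
```

The returned record carries the revision, author, and commit message, which is the information a build dashboard or notification would typically display.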

Automate deployment

Most CI systems allow the running of scripts after a build finishes. In most situations, it is possible to write a script to deploy the application to a live test server that everyone can look at. A further advance in this way of thinking is continuous deployment, which calls for the software to be deployed directly into production, often with additional automation to prevent defects or regressions.[19][20]

Costs and benefits

Continuous integration is intended to produce benefits such as:

- Integration bugs are detected early and are easy to track down due to small changesets. This saves both time and money over the lifespan of a project.

- Avoids last-minute chaos at release dates, when everyone tries to check in their slightly incompatible versions

- When unit tests fail or a bug emerges, if developers need to revert the codebase to a bug-free state without debugging, only a small number of changes are lost (because integration happens frequently)

- Constant availability of a "current" build for testing, demo, or release purposes

- Frequent code check-in pushes developers to create modular, less complex code[21]

With continuous automated testing, benefits can include:

- Enforces discipline of frequent automated testing

- Immediate feedback on the system-wide impact of local changes

- Software metrics generated from automated testing and CI (such as metrics for code coverage, code complexity, and feature completeness) focus developers on developing functional, quality code, and help develop momentum in a team[citation needed]

Some downsides of continuous integration can include:

- Constructing an automated test suite requires a considerable amount of work, including ongoing effort to cover new features and follow intentional code modifications.

  - Testing is considered a best practice for software development in its own right, regardless of whether or not continuous integration is employed, and automation is an integral part of project methodologies like test-driven development.

  - Continuous integration can be performed without any test suite, but the cost of quality assurance to produce a releasable product can be high if it must be done manually and frequently.

- There is some work involved to set up a build system, and it can become complex, making it difficult to modify flexibly.[22]

  - However, there are a number of continuous integration software projects, both proprietary and open-source, which can be used.

- Continuous integration is not necessarily valuable if the scope of the project is small or contains untestable legacy code.

- Value added depends on the quality of tests and how testable the code really is.[23]

- Larger teams mean that new code is constantly added to the integration queue, so tracking deliveries (while preserving quality) is difficult and builds queueing up can slow down everyone.[23]

- With multiple commits and merges a day, partial code for a feature could easily be pushed and therefore integration tests will fail until the feature is complete.[23]

- Safety and mission-critical development assurance (e.g., DO-178C, ISO 26262) require rigorous documentation and in-process review that are difficult to achieve using continuous integration. This type of life cycle often requires additional steps to be completed prior to product release when regulatory approval of the product is required.

See also

- Application release automation

- Build light indicator

- Comparison of continuous integration software

- Continuous design

- Continuous testing

- Multi-stage continuous integration

- Rapid application development

References

1. Fowler, Martin (1 May 2006). "Continuous Integration". http://martinfowler.com/articles/continuousIntegration.html.

2. Booch, Grady (1991). Object Oriented Design: With Applications. Benjamin Cummings. p. 209. ISBN 9780805300918. https://books.google.com/books?id=w5VQAAAAMAAJ&q=continuous+integration+inauthor:grady+inauthor:booch. Retrieved 18 August 2014.

3. Beck, K. (1999). "Embracing change with extreme programming". Computer 32 (10): 70–77. doi:10.1109/2.796139. ISSN 0018-9162.

4. Duvall, Paul M. (2007). Continuous Integration. Improving Software Quality and Reducing Risk. Addison-Wesley. ISBN 978-0-321-33638-5.

5. Cunningham, Ward (5 August 2009). "Integration Hell". http://c2.com/cgi/wiki?IntegrationHell.

6. "What is Continuous Integration?". https://aws.amazon.com/devops/continuous-integration/.

7. Kaiser, G. E.; Perry, D. E.; Schell, W. M. (1989). "Infuse: fusing integration test management with change management". Proceedings of the Thirteenth Annual International Computer Software & Applications Conference. Orlando, Florida. pp. 552–558. doi:10.1109/CMPSAC.1989.65147.

8. Booch, Grady (December 1998). Object-Oriented Analysis and Design with applications (2nd ed.). http://www.cvauni.edu.vn/imgupload_dinhkem/file/pttkht/object-oriented-analysis-and-design-with-applications-2nd-edition.pdf. Retrieved 2 December 2014.

9. Beck, Kent (28 March 1998). "Extreme Programming: A Humanistic Discipline of Software Development". 1. Lisbon, Portugal: Springer. p. 4. ISBN 9783540643036. https://books.google.com/books?id=YBC5xD08NREC&q=%22Extreme+Programming%3A+A+Humanistic+Discipline+of+Software+Development%22&pg=PA4.

10. Beck, Kent (1999). Extreme Programming Explained. Addison-Wesley Professional. p. 97. ISBN 978-0-201-61641-5. https://archive.org/details/extremeprogrammi00beck.

11. "A Brief History of DevOps, Part III: Automated Testing and Continuous Integration". CircleCI. 1 February 2018. https://circleci.com/blog/a-brief-history-of-devops-part-iii-automated-testing-and-continuous-integration/.

12. Sane, Parth (2021), "A Brief Survey of Current Software Engineering Practices in Continuous Integration and Automated Accessibility Testing", 2021 Sixth International Conference on Wireless Communications, Signal Processing and Networking (WiSPNET), pp. 130–134, doi:10.1109/WiSPNET51692.2021.9419464, ISBN 978-1-6654-4086-8, https://ieeexplore.ieee.org/document/9419464

13. Brauneis, David (1 January 2010). "[OSLC] Possible new Working Group – Automation". open-services.net Community (Mailing list). Archived from the original on 1 September 2018. Retrieved 16 February 2010.

14. Taylor, Bradley. "Rails Deployment and Automation with ShadowPuppet and Capistrano". http://blog.railsmachine.com/articles/2009/02/10/rails-deployment-and-automation-with-shadowpuppet-and-capistrano/.

15. See for example "Debounce". 29 July 2015. https://www.arduino.cc/en/Tutorial/Debounce.

16. Fowler, Martin. "Practices". http://martinfowler.com/articles/continuousIntegration.html#PracticesOfContinuousIntegration.

17. Radigan, Dan. "Continuous integration". https://www.atlassian.com/agile/continuous-integration.

18. "Continuous Integration". https://www.thoughtworks.com/continuous-integration.

19. Ries, Eric (30 March 2009). "Continuous deployment in 5 easy steps". O’Reilly. http://radar.oreilly.com/2009/03/continuous-deployment-5-eas.html.

20. Fitz, Timothy (10 February 2009). "Continuous Deployment at IMVU: Doing the impossible fifty times a day". Wordpress. http://timothyfitz.wordpress.com/2009/02/10/continuous-deployment-at-imvu-doing-the-impossible-fifty-times-a-day/.

21. Junpeng, Jiang; Zhu, Can; Zhang, Xiaofang (July 2020). "An Empirical Study on the Impact of Code Contributor on Code Smell". International Journal of Performability Engineering 16 (7): 1067–1077. doi:10.23940/ijpe.20.07.p9.10671077. https://qrs20.techconf.org/download/QRS-IJPE/12_An%20Empirical%20Study%20on%20the%20Impact%20of%20Code%20Contributor%20on%20Code%20Smell.pdf.

22. Laukkanen, Eero (2016). "Problems, causes and solutions when adopting continuous delivery—A systematic literature review". Information and Software Technology 82: 55–79. doi:10.1016/j.infsof.2016.10.001.

23. Debbiche, Adam. "Assessing challenges of continuous integration in the context of software requirements breakdown: a case study". http://publications.lib.chalmers.se/records/fulltext/220573/220573.pdf.

External links

- (wiki) Continuous Integration. C2. http://www.c2.com/cgi/wiki?ContinuousIntegration.

- Richardson, Jared. "Continuous Integration: The Cornerstone of a Great Shop". http://www.methodsandtools.com/archive/archive.php?id=42.

- Flowers, Jay. "A Recipe for Build Maintainability and Reusability". http://jayflowers.com/joomla/index.php?option=com_content&task=view&id=26.

- Duvall, Paul (4 December 2007). "Developer works". http://www.ibm.com/developerworks/java/library/j-ap11297/.

- "Version lifecycle". MediaWiki. http://www.mediawiki.org/wiki/Version_lifecycle.
