Climate model

Topic: Earth

From HandWiki - Reading time: 14 min


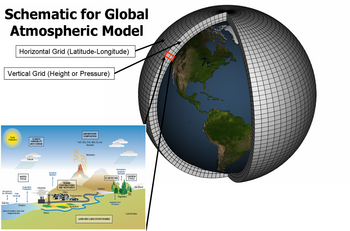

Numerical climate models (or climate system models) use quantitative methods to simulate the interactions of the important drivers of climate, including atmosphere, oceans, land surface and ice. They are used for a variety of purposes from study of the dynamics of the climate system to projections of future climate. Climate models may also be qualitative (i.e. not numerical) models and also narratives, largely descriptive, of possible futures.[1]

Quantitative climate models take account of incoming energy from the Sun as short-wave electromagnetic radiation, chiefly visible and short-wave (near) infrared, as well as outgoing long-wave (far) infrared electromagnetic radiation. An imbalance between the two results in a change in temperature.

Quantitative models vary in complexity. For example, a simple radiant heat transfer model treats Earth as a single point and averages outgoing energy. This can be expanded vertically (radiative-convective models) and/or horizontally. Coupled atmosphere–ocean–sea ice global climate models solve the full equations for mass and energy transfer and radiant exchange. In addition, other types of modelling, such as land use, can be interlinked in Earth System Models, allowing researchers to predict the interaction between climate and ecosystems.

Uses

There are three major types of institution where climate models are developed, implemented and used:

- National meteorological services: Most national weather services have a climatology section.

- Universities: Relevant departments include atmospheric sciences, meteorology, climatology, and geography.

- National and international research laboratories: Examples include the National Center for Atmospheric Research (NCAR, in Boulder, Colorado, US), the Geophysical Fluid Dynamics Laboratory (GFDL, in Princeton, New Jersey, US), Los Alamos National Laboratory, the Hadley Centre for Climate Prediction and Research (in Exeter, UK), the Max Planck Institute for Meteorology in Hamburg, Germany, or the Laboratoire des Sciences du Climat et de l'Environnement (LSCE), France.

Big climate models are essential, but they are not perfect. Attention still needs to be given to the real world (what is happening and why). Global models are essential for assimilating all the observations, especially those from satellites, and for producing comprehensive analyses of what is happening; they can then be used to make predictions and projections. Simple models also have a role to play, but that role is widely abused when their simplifications, such as the omission of a water cycle, go unrecognized.[2]

General circulation models (GCMs)

Energy balance models (EBMs)

Simulation of the climate system in full 3-D space and time was impractical prior to the establishment of large computational facilities starting in the 1960s. In order to begin to understand which factors may have changed Earth's paleoclimate states, the constituent and dimensional complexities of the system needed to be reduced. A simple quantitative model that balanced incoming/outgoing energy was first developed for the atmosphere in the late 19th century.[3] Other EBMs similarly seek an economical description of surface temperatures by applying the conservation of energy constraint to individual columns of the Earth-atmosphere system.[4]

Essential features of EBMs include their relative conceptual simplicity and their ability to sometimes produce analytical solutions.[5]:19 Some models account for effects of ocean, land, or ice features on the surface budget. Others include interactions with parts of the water cycle or carbon cycle. A variety of these and other reduced system models can be useful for specialized tasks that supplement GCMs, particularly to bridge gaps between simulation and understanding.[6][7]

Zero-dimensional models

Zero-dimensional models consider Earth as a point in space, analogous to the pale blue dot viewed by Voyager 1 or an astronomer's view of very distant objects. This dimensionless view, while highly limited, is still useful in that the laws of physics are applicable in a bulk fashion to unknown objects, or in an appropriate lumped manner if some major properties of the object are known. For example, astronomers know that most planets in our own solar system feature some kind of solid/liquid surface surrounded by a gaseous atmosphere.

Model with combined surface and atmosphere

A very simple model of the radiative equilibrium of the Earth is

- [math]\displaystyle{ (1-a)S \pi r^2 = 4 \pi r^2 \epsilon \sigma T^4 }[/math]

where

- the left-hand side represents the total incoming shortwave power (in watts) from the Sun

- the right-hand side represents the total outgoing longwave power (in watts) from Earth, calculated from the Stefan–Boltzmann law.

The constant parameters include

- S is the solar constant, the incoming solar radiation per unit area, about 1367 W·m⁻²

- r is Earth's radius, approximately 6.371×10⁶ m

- π is the mathematical constant (3.141...)

- [math]\displaystyle{ \sigma }[/math] is the Stefan–Boltzmann constant, approximately 5.67×10⁻⁸ J·K⁻⁴·m⁻²·s⁻¹

The constant [math]\displaystyle{ \pi\,r^2 }[/math] can be factored out, giving a zero-dimensional equation for the equilibrium

- [math]\displaystyle{ (1-a)S = 4 \epsilon \sigma T^4 }[/math]

where

- the left-hand side represents the incoming shortwave energy flux from the Sun in W·m⁻²

- the right-hand side represents the outgoing longwave energy flux from Earth in W·m⁻².

The remaining variable parameters which are specific to the planet include

- [math]\displaystyle{ a }[/math] is Earth's average albedo, measured to be 0.3.[8][9]

- [math]\displaystyle{ T }[/math] is Earth's average surface temperature, measured as about 288 K as of 2020[10]

- [math]\displaystyle{ \epsilon }[/math] is the effective emissivity of Earth's combined surface and atmosphere (including clouds). It is a quantity between 0 and 1 that is calculated from the equilibrium to be about 0.61. For the zero-dimensional treatment it is equivalent to an average value over all viewing angles.

This very simple model is quite instructive. For example, it shows the temperature sensitivity to changes in the solar constant, Earth albedo, or effective Earth emissivity. The effective emissivity also gauges the strength of the atmospheric greenhouse effect, since it is the ratio of the thermal emissions escaping to space versus those emanating from the surface.[11]
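As a minimal sketch of the balance above, the following Python snippet solves (1 − a)S = 4εσT⁴ for either T or ε, using the parameter values quoted in this section. The function names are illustrative and are not taken from any library.

```python
# Zero-dimensional energy balance: (1 - a) * S = 4 * eps * sigma * T**4
SIGMA = 5.67e-8   # Stefan-Boltzmann constant, W m^-2 K^-4
S = 1367.0        # solar constant, W m^-2
ALBEDO = 0.3      # Earth's average albedo


def equilibrium_temperature(emissivity, solar_constant=S, albedo=ALBEDO):
    """Solve the balance for the temperature T in kelvin."""
    absorbed = (1.0 - albedo) * solar_constant
    return (absorbed / (4.0 * emissivity * SIGMA)) ** 0.25


def effective_emissivity(temperature, solar_constant=S, albedo=ALBEDO):
    """Solve the balance for the effective emissivity, given T in kelvin."""
    absorbed = (1.0 - albedo) * solar_constant
    return absorbed / (4.0 * SIGMA * temperature ** 4)


# Emissivity implied by the observed 288 K surface temperature
print(round(effective_emissivity(288.0), 2))

# Temperature of a planet with the same albedo but emissivity 1
print(round(equilibrium_temperature(1.0), 1))

# Sensitivity: warming caused by a 1 % increase in the solar constant
eps = effective_emissivity(288.0)
print(equilibrium_temperature(eps, solar_constant=1.01 * S) - 288.0)
```

With these inputs, an emissivity of 1 (no greenhouse effect) gives roughly 255 K, while the observed 288 K corresponds to an emissivity of about 0.61. Because T scales with the fourth root of S, a 1 % change in the solar constant shifts T by only about 0.25 %.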

The calculated emissivity can be compared to available data. Terrestrial surface emissivities are all in the range of 0.96 to 0.99[12][13] (except for some small desert areas, where values may be as low as 0.7). Clouds, however, which cover about half of the planet's surface, have an average emissivity of about 0.5[14] (which must be reduced by the fourth power of the ratio of cloud absolute temperature to average surface absolute temperature) and an average cloud temperature of about 258 K (−15 °C; 5 °F).[15] Taking all this properly into account results in an effective Earth emissivity of about 0.64 (Earth average temperature 285 K (12 °C; 53 °F)).[citation needed]
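One possible reading of this estimate is an area-weighted average of a clear-sky surface term and a cloud term scaled by the fourth power of the temperature ratio. The sketch below follows that reading. The weighting scheme and the default surface emissivity of 0.97 (a value inside the quoted 0.96 to 0.99 range) are assumptions made for illustration, not a documented method.

```python
def cloud_weighted_emissivity(surface_emissivity=0.97,
                              cloud_emissivity=0.5,
                              cloud_fraction=0.5,
                              cloud_temperature=258.0,
                              surface_temperature=285.0):
    """Area-weighted effective emissivity (assumed weighting scheme)."""
    # Cloud emission referenced to the surface temperature: scale by (Tc / Ts)^4
    cloud_term = cloud_emissivity * (cloud_temperature / surface_temperature) ** 4
    clear_term = surface_emissivity
    return (1.0 - cloud_fraction) * clear_term + cloud_fraction * cloud_term


print(cloud_weighted_emissivity())
```

Under these assumptions the result falls in the mid-0.6 range, in the neighborhood of the value of about 0.64 quoted above; the exact figure depends on the surface emissivity chosen.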

Models with separated surface and atmospheric layers

Figure: One-layer EBM with blackbody surface

Dimensionless models have also been constructed with atmospheric layers that are functionally separated from the surface. The simplest of these is the zero-dimensional, one-layer model,[16] which may be readily extended to an arbitrary number of atmospheric layers. The surface and atmospheric layer(s) are each characterized by a corresponding temperature and emissivity value, but no thickness. Applying radiative equilibrium (i.e. conservation of energy) at the interfaces between layers produces a set of coupled equations which are solvable.[17]

Layered models produce temperatures that better estimate those observed for Earth's surface and atmospheric levels.[18] They likewise further illustrate the radiative heat transfer processes which underlie the greenhouse effect. Quantification of this phenomenon using a version of the one-layer model was first published by Svante Arrhenius in 1896.[3]
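A minimal sketch of the one-layer case follows, assuming a blackbody surface, an atmosphere that is transparent to sunlight, and a single longwave emissivity ε_a for the atmospheric layer (common textbook assumptions, not details given above). Balancing energy at the top of the atmosphere and within the layer gives σT_s⁴(1 − ε_a/2) = (1 − a)S/4 and T_a = T_s/2^(1/4). The value ε_a = 0.78 used below is an illustrative choice, and the function name is hypothetical.

```python
SIGMA = 5.67e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def one_layer_temperatures(atm_emissivity, solar_constant=1367.0, albedo=0.3):
    """Return (surface, atmosphere) temperatures in kelvin for a one-layer EBM.

    Assumes a blackbody surface and an atmosphere transparent to shortwave.
    """
    absorbed = (1.0 - albedo) * solar_constant / 4.0  # W m^-2, global mean
    # Top-of-atmosphere balance: absorbed = sigma * Ts^4 * (1 - eps_a / 2)
    surface_t = (absorbed / (SIGMA * (1.0 - atm_emissivity / 2.0))) ** 0.25
    # Layer balance: eps_a * sigma * Ts^4 = 2 * eps_a * sigma * Ta^4
    atmosphere_t = surface_t / 2.0 ** 0.25
    return surface_t, atmosphere_t


ts, ta = one_layer_temperatures(0.78)
print(f"surface {ts:.1f} K, atmosphere {ta:.1f} K")
```

With ε_a = 0.78 the surface comes out near 288 K and the layer near 242 K. Setting ε_a = 0 removes the greenhouse effect and recovers the roughly 255 K of the combined model with emissivity 1.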

Radiative-convective models

Water vapor is a main determinant of the emissivity of Earth's atmosphere. It both influences the flows of radiation and is influenced by convective flows of heat in a manner that is consistent with its equilibrium concentration and temperature as a function of elevation (i.e. relative humidity distribution). This has been shown by refining the zero-dimensional model in the vertical to a one-dimensional radiative-convective model which considers two processes of energy transport:[19]

- upwelling and downwelling radiative transfer through atmospheric layers that both absorb and emit infrared radiation

- upward transport of heat by air and vapor convection, which is especially important in the lower troposphere.

Radiative-convective models have advantages over simpler models and also lay a foundation for more complex models.[20] They can estimate both surface temperature and the temperature variation with elevation in a more realistic manner. They also simulate the observed decline in upper atmospheric temperature and rise in surface temperature when trace amounts of other non-condensible greenhouse gases such as carbon dioxide are included.[19]

Other parameters are sometimes included to simulate localized effects in other dimensions and to address the factors that move energy about Earth. For example, the effect of ice-albedo feedback on global climate sensitivity has been investigated using a one-dimensional radiative-convective climate model.[21][22]

Higher-dimension models

The zero-dimensional model may be expanded to consider the energy transported horizontally in the atmosphere. This kind of model may well be zonally averaged. This model has the advantage of allowing a rational dependence of local albedo and emissivity on temperature – the poles can be allowed to be icy and the equator warm – but the lack of true dynamics means that horizontal transports have to be specified.[23]

Earth systems models of intermediate complexity (EMICs)

Depending on the nature of questions asked and the pertinent time scales, there are, on the one extreme, conceptual, more inductive models, and, on the other extreme, general circulation models operating at the highest spatial and temporal resolution currently feasible. Models of intermediate complexity bridge the gap. One example is the Climber-3 model. Its atmosphere is a 2.5-dimensional statistical-dynamical model with 7.5° × 22.5° resolution and time step of half a day; the ocean is MOM-3 (Modular Ocean Model) with a 3.75° × 3.75° grid and 24 vertical levels.[24]
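To give a sense of scale, the resolutions quoted above can be turned into approximate grid sizes. The sketch below assumes regular latitude–longitude grids covering the whole globe, which is a simplification (real model grids, and ocean grids in particular, differ); the helper name is hypothetical.

```python
def grid_columns(dlat_deg, dlon_deg):
    """Number of columns on a regular global latitude-longitude grid."""
    return round(180.0 / dlat_deg) * round(360.0 / dlon_deg)


atmosphere_columns = grid_columns(7.5, 22.5)    # 24 x 16
ocean_columns = grid_columns(3.75, 3.75)        # 48 x 96
ocean_cells = ocean_columns * 24                # 24 vertical levels

print(atmosphere_columns, ocean_columns, ocean_cells)
```

Under these assumptions the atmosphere has a few hundred columns and the ocean on the order of 10⁵ cells, far fewer than a full-resolution general circulation model would use.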

Box models

Box models are simplified versions of complex systems, reducing them to boxes (or reservoirs) linked by fluxes. The boxes are assumed to be mixed homogeneously. Within a given box, the concentration of any chemical species is therefore uniform. However, the abundance of a species within a given box may vary as a function of time due to the input to (or loss from) the box or due to the production, consumption or decay of this species within the box.[citation needed]

Simple box models, i.e. box models with a small number of boxes whose properties (e.g. their volume) do not change with time, are often useful to derive analytical formulas describing the dynamics and steady-state abundance of a species. More complex box models are usually solved using numerical techniques.[citation needed]
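As an illustration, consider a single well-mixed box with a constant source F and a first-order loss with rate constant k, so that dM/dt = F − kM. This particular setup is an assumed example rather than one taken from the references. It has the analytical solution M(t) = F/k + (M₀ − F/k)·e^(−kt), with steady state F/k, and the sketch below compares that formula with a simple forward-Euler integration.

```python
import math


def analytic_abundance(t, source, loss_rate, initial):
    """Closed-form abundance M(t) for dM/dt = source - loss_rate * M."""
    steady_state = source / loss_rate
    return steady_state + (initial - steady_state) * math.exp(-loss_rate * t)


def euler_abundance(t_end, source, loss_rate, initial, steps=10000):
    """Numerical abundance at t_end using forward-Euler time stepping."""
    dt = t_end / steps
    abundance = initial
    for _ in range(steps):
        abundance += dt * (source - loss_rate * abundance)
    return abundance


# Hypothetical values: source of 2 units per year, loss rate 0.1 per year
print(analytic_abundance(50.0, 2.0, 0.1, 0.0))
print(euler_abundance(50.0, 2.0, 0.1, 0.0))
```

Both results approach the steady-state abundance F/k = 20 units, with a residence time of 1/k = 10 years. Models with many coupled boxes generally lack such closed forms, which is where numerical integration becomes necessary.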

Box models are used extensively to model environmental systems or ecosystems and in studies of ocean circulation and the carbon cycle.[25] They are instances of a multi-compartment model.

History

Increase of forecast confidence over time

The IPCC stated in 2010 that it had increased confidence in forecasts coming from climate models:

There is considerable confidence that climate models provide credible quantitative estimates of future climate change, particularly at continental scales and above. This confidence comes from the foundation of the models in accepted physical principles and from their ability to reproduce observed features of current climate and past climate changes. Confidence in model estimates is higher for some climate variables (e.g., temperature) than for others (e.g., precipitation). Over several decades of development, models have consistently provided a robust and unambiguous picture of significant climate warming in response to increasing greenhouse gases.[26]

Coordination of research

The World Climate Research Programme (WCRP), hosted by the World Meteorological Organization (WMO), coordinates research activities on climate modelling worldwide.

A 2012 U.S. National Research Council report discussed how the large and diverse U.S. climate modeling enterprise could evolve to become more unified.[27] Efficiencies could be gained by developing a common software infrastructure shared by all U.S. climate researchers, and holding an annual climate modeling forum, the report found.[28]

Issues

Electricity consumption

Cloud-resolving climate models are now run on high-intensity supercomputers, which have a high power consumption and thus cause CO2 emissions.[29] They require exascale computing (a billion billion, i.e. a quintillion, calculations per second). For example, the Frontier exascale supercomputer consumes 29 MW.[30] It can simulate a year’s worth of climate at cloud-resolving scales in a day.[31]
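The two figures above can be combined into a rough energy cost per simulated year. The sketch below assumes the full machine draws its quoted power for the entire run, which makes it an upper-bound style estimate rather than a measured value; the function name is hypothetical.

```python
def energy_per_simulated_year_mwh(power_mw, simulated_years_per_day):
    """Electrical energy in MWh used per simulated year of climate."""
    hours_per_simulated_year = 24.0 / simulated_years_per_day
    return power_mw * hours_per_simulated_year


# 29 MW sustained for one day at one simulated year per wall-clock day
print(energy_per_simulated_year_mwh(29.0, 1.0))
```

Under that assumption, one simulated year costs about 696 MWh, so doubling the throughput in simulated years per day would halve the energy per simulated year.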

Techniques that could lead to energy savings include, for example: "reducing floating point precision computation; developing machine learning algorithms to avoid unnecessary computations; and creating a new generation of scalable numerical algorithms that would enable higher throughput in terms of simulated years per wall clock day."[29]

See also

- Atmospheric reanalysis

- Chemical transport model

- Atmospheric Radiation Measurement (ARM) (in the US)

- Climate Data Exchange

- Climateprediction.net

- Numerical Weather Prediction

- Static atmospheric model

- Tropical cyclone prediction model

- Verification and validation of computer simulation models

- CICE sea ice model

References

- ↑ IPCC (2014). AR5 Synthesis Report - Climate Change 2014. Contribution of Working Groups I, II and III to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change. p. 58. https://www.ipcc.ch/site/assets/uploads/2018/02/SYR_AR5_FINAL_full.pdf#page=74. "Box 2.3. ‘Models’ are typically numerical simulations of real-world systems, calibrated and validated using observations from experiments or analogies, and then run using input data representing future climate. Models can also include largely descriptive narratives of possible futures, such as those used in scenario construction. Quantitative and descriptive models are often used together.".

- ↑ Trenberth, Kevin E. (2022). "Chapter 1: Earth and Climate System". The Changing Flow of Energy Through the Climate System (1 ed.). Cambridge University Press. doi:10.1017/9781108979030. ISBN 978-1-108-97903-0. https://www.cambridge.org/core/product/identifier/9781108979030/type/book.

- ↑ 3.0 3.1 Svante Arrhenius (1896). "On the influence of carbonic acid in the air upon the temperature of the ground" (in en). Philosophical Magazine and Journal of Science 41 (251): 237–276. doi:10.1080/14786449608620846. https://zenodo.org/record/1431217.

- ↑ North, Gerald R.; Stevens, Mark J. (2006), "Energy-balance climate models", in Kiehl, J. T., Frontiers in Climate Modelling, Cambridge University, p. 52, doi:10.1017/CBO9780511535857.004, ISBN 9780511535857

- ↑ North, Gerald R.; Kwang-Yul, Kim (2017), Energy Balance Climate Models, Wiley Series in Atmospheric Physics and Remote Sensing, Wiley-VCH, ISBN 978-3-527-41132-0

- ↑ Held, Isaac M. (2005). "The gap between simulation and understanding in climate modelling". Bulletin of the American Meteorological Society 86 (11): 1609-1614. doi:10.1175/BAMS-86-11-1609.

- ↑ Polvani, L. M.; Clement, A. C.; Medeiros, B.; Benedict, J. J.; Simpson, I. R. (2017). "When less is more: opening the door to simpler climate models". Eos (98). doi:10.1029/2017EO079417.

- ↑ Goode, P. R. (2001). "Earthshine Observations of the Earth's Reflectance". Geophys. Res. Lett. 28 (9): 1671–4. doi:10.1029/2000GL012580. Bibcode: 2001GeoRL..28.1671G. https://authors.library.caltech.edu/50838/1/grl14388.pdf.

- ↑ "Scientists Watch Dark Side of the Moon to Monitor Earth's Climate". American Geophysical Union. 17 April 2001. http://www.agu.org/sci_soc/prrl/prrl0113.html.

- ↑ "Climate Change: Global Temperature". NOAA. https://www.climate.gov/news-features/understanding-climate/climate-change-global-temperature. Retrieved 6 July 2023.

- ↑ "Clouds and the Earth's Radiant Energy System". NASA. 2013. http://eospso.gsfc.nasa.gov/ftp_docs/lithographs/CERES_litho.pdf.

- ↑ "Seawater Samples - Emissivities". ucsb.edu. http://www.icess.ucsb.edu/modis/EMIS/html/seawater.html.

- ↑ "An Improved Land Surface Emissivity Parameter for Land Surface Models Using Global Remote Sensing Observations". J. Climate 19 (12): 2867–81. 15 June 2006. doi:10.1175/JCLI3720.1. Bibcode: 2006JCli...19.2867J. http://www.glue.umd.edu/~sliang/papers/Jin2006.emissivity.pdf.

- ↑ T.R. Shippert; S.A. Clough; P.D. Brown; W.L. Smith; R.O. Knuteson; S.A. Ackerman. "Spectral Cloud Emissivities from LBLRTM/AERI QME". http://www.arm.gov/publications/proceedings/conf08/extended_abs/shippert_tr.pdf.

- ↑ A.G. Gorelik; V. Sterljadkin; E. Kadygrov; A. Koldaev. "Microwave and IR Radiometry for Estimation of Atmospheric Radiation Balance and Sea Ice Formation". http://www.arm.gov/publications/proceedings/conf11/extended_abs/gorelik_ag.pdf.

- ↑ "ACS Climate Science Toolkit - Atmospheric Warming - A Single-Layer Atmosphere Model". American Chemical Society. https://www.acs.org/content/acs/en/climatescience/atmosphericwarming/singlelayermodel.html. Retrieved 2 October 2022.

- ↑ "ACS Climate Science Toolkit - Atmospheric Warming - A Multi-Layer Atmosphere Model". American Chemical Society. https://www.acs.org/content/acs/en/climatescience/atmosphericwarming/multilayermodel.html. Retrieved 2 October 2022.

- ↑ "METEO 469: From Meteorology to Mitigation - Understanding Global Warming - Lesson 5 - Modelling of the Climate System - One-Layer Energy Balance Model". Penn State College of Mineral and Earth Sciences - Department of Meteorology and Atmospheric Sciences. https://www.e-education.psu.edu/meteo469/node/198. Retrieved 2 October 2022.

- ↑ 19.0 19.1 Manabe, Syukuro; Wetherald, Richard T. (1 May 1967). "Thermal Equilibrium of the Atmosphere with a Given Distribution of Relative Humidity". Journal of the Atmospheric Sciences 24 (3): 241–259. doi:10.1175/1520-0469(1967)024<0241:TEOTAW>2.0.CO;2. Bibcode: 1967JAtS...24..241M.

- ↑ "Syukuro Manabe Facts". https://www.nobelprize.org/prizes/physics/2021/manabe/facts/.

- ↑ "Pubs.GISS: Wang and Stone 1980: Effect of ice-albedo feedback on global sensitivity in a one-dimensional...". nasa.gov. http://pubs.giss.nasa.gov/cgi-bin/abstract.cgi?id=wa03100m.

- ↑ Wang, W.C.; P.H. Stone (1980). "Effect of ice-albedo feedback on global sensitivity in a one-dimensional radiative-convective climate model". J. Atmos. Sci. 37 (3): 545–52. doi:10.1175/1520-0469(1980)037<0545:EOIAFO>2.0.CO;2. Bibcode: 1980JAtS...37..545W.

- ↑ "Energy Balance Models". shodor.org. http://www.shodor.org/master/environmental/general/energy/application.html.

- ↑ "emics1". pik-potsdam.de. http://www.pik-potsdam.de/emics/.

- ↑ Sarmiento, J.L.; Toggweiler, J.R. (1984). "A new model for the role of the oceans in determining atmospheric P CO 2". Nature 308 (5960): 621–24. doi:10.1038/308621a0. Bibcode: 1984Natur.308..621S.

- ↑ "Climate Models and Their Evaluation". http://www.ipcc.ch/pdf/assessment-report/ar4/wg1/ar4-wg1-chapter8.pdf.

- ↑ "U.S. National Research Council Report, A National Strategy for Advancing Climate Modeling". http://dels.nas.edu/Report/National-Strategy-Advancing-Climate/13430.

- ↑ "U.S. National Research Council Report-in-Brief, A National Strategy for Advancing Climate Modeling". http://dels.nas.edu/Materials/Report-In-Brief/4291-Climate-Modeling.

- ↑ 29.0 29.1 Loft, Richard (2020). "Earth System Modeling Must Become More Energy Efficient". Eos (101). doi:10.1029/2020EO147051. ISSN 2324-9250. https://eos.org/opinions/earth-system-modeling-must-become-more-energy-efficient.

- ↑ Trader, Tiffany (2021). "Frontier to Meet 20MW Exascale Power Target Set by DARPA in 2008" (in en-US). https://www.hpcwire.com/2021/07/14/frontier-to-meet-20mw-exascale-power-target-set-by-darpa-in-2008/.

- ↑ "Cloud-resolving climate model meets world’s fastest supercomputer" (in en-US). https://www.sandia.gov/labnews/2023/04/06/cloud-resolving-climate-model-meets-worlds-fastest-supercomputer/.

External links

- Why results from the next generation of climate models matter CarbonBrief, Guest post by Belcher, Boucher, Sutton, 21 March 2019

Climate models on the web:

- Dapper/DChart — plot and download model data referenced by the Fourth Assessment Report (AR4) of the Intergovernmental Panel on Climate Change. (No longer available)

- NCAR/UCAR Community Climate System Model (CCSM)

- Do it yourself climate prediction

- Primary research GCM developed by NASA/GISS (Goddard Institute for Space Studies)

- Original NASA/GISS global climate model (GCM) with a user-friendly interface for PCs and Macs

- CCCma model info and interface to retrieve model data

- NOAA/Geophysical Fluid Dynamics Laboratory CM2 global climate model info and model output data files

- Dry idealized AGCM based on above GFDL CM2[1]

- Model of an idealized Moist Atmosphere (MiMA): based on GFDL CM2. Complexity in-between dry models and full GCMs[2]

- Empirical Climate Model


- ↑ M. Jucker, S. Fueglistaler and G. K. Vallis "Stratospheric sudden warmings in an idealized GCM". Journal of Geophysical Research: Atmospheres 2014 119 (19) 11,054-11,064; doi:10.1002/2014JD022170

- ↑ M. Jucker and E. P. Gerber: "Untangling the Annual Cycle of the Tropical Tropopause Layer with an Idealized Moist Model". Journal of Climate 2017 30 (18) 7339-7358; doi:10.1175/JCLI-D-17-0127.1
