List of cassette tape data storage formats

Topic: Engineering

From HandWiki - Reading time: 10 min

Many early microcomputer and home computer systems used cassette tapes as an inexpensive magnetic tape data storage system. This article lists some of the historically notable formats.

Because interoperability between platforms was difficult, there was little incentive to use standardized formats, and little effort was spent on them. The main exception was the Kansas City standard, which was supported by most S-100 bus based computers and was later adopted on a few other platforms such as the BBC Micro and MSX. It also saw use as an exchange medium in some magazines and was even broadcast over the radio in Europe.

RCA COSMAC

One of the earliest efforts to develop a microcomputer for home use was carried out in the early 1970s at RCA and led to the COSMAC processor design. As part of this process, a cassette interface was developed. This used a frequency-shift keying (FSK) system, with binary zeros ("space") represented by one cycle of a 2 kHz signal, and ones ("mark") by one cycle of a 0.8 kHz signal. Each byte was written as a single mark bit, eight data bits, and a final odd parity bit. Files were prefixed with four seconds of space signals to provide clock recovery, after which all of the data was written in a single stream. The basic operating system had no support for reading or writing this data; users had to type in their own loader program, although a short example loader was provided as a hexadecimal listing.[1]
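The byte framing described above can be sketched in Python. This is only an illustration, not RCA's loader: the function names are hypothetical, and two details are assumptions because the text does not state them, namely that data bits are sent least significant bit first and that parity is computed over the eight data bits only.

```python
SPACE_HZ = 2000  # binary 0: one cycle of 2 kHz
MARK_HZ = 800    # binary 1: one cycle of 0.8 kHz


def cosmac_frame(byte):
    """Return the 10 bits written for one byte: mark, 8 data bits, odd parity."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("byte must be in 0..255")
    # ASSUMPTION: least significant bit first (not stated in the text).
    data = [(byte >> i) & 1 for i in range(8)]
    # ASSUMPTION: parity covers the data bits only; the parity bit is chosen
    # so that the number of ones in data + parity is odd.
    parity = 1 - (sum(data) % 2)
    return [1] + data + [parity]


def frame_duration_ms(bits):
    """Each bit is a single cycle, so its duration is one period of its tone."""
    return sum(1000.0 / (MARK_HZ if b else SPACE_HZ) for b in bits)
```

Because a mark cycle (1.25 ms) is longer than a space cycle (0.5 ms), the time needed to write a byte under this scheme depends on how many one bits it contains.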

HITS

The Hobbyist Interchange Tape System (HITS) was introduced by Jerry Ogdin in a September 1975 article in Popular Electronics magazine. In contrast to almost every other system of the era, HITS did not use FSK for its storage mechanism; instead it used pulse-width modulation (PWM). Any suitable carrier frequency could be used, with 2000 Hz being suggested. The article goes on to note that the basic concept works well at any frequency and that the system is capable of recording data at a rate of about one quarter of the carrier frequency. This means that a 10 kHz carrier allows speeds of about 2,500 bit/s.[2]

Zeros were recorded as short pulses, and ones long, with the overall bit time being a nominal 2.5 milliseconds when used at 2 kHz. The pulse lengths were measured by recording the time between the off-to-on transition when the carrier turned on and the on-to-off transition when it dropped again. This was compared to the time between the on-to-off and the next off-to-on marking the start of the next bit. If the on portion of the pulse was shorter than the off period, it was a 0; if the on portion was longer than off, it was 1. This meant every bit in the recording was self-timed, allowing it to easily survive tape stretch and other problems that changed the frequency or playback speed.[2]
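The self-timing property can be illustrated with a short Python sketch. It is not Ogdin's 8080 code: the function names are hypothetical, and the `short_fraction` parameter (how much of the bit time a short pulse occupies) is an assumption, since the text gives only the nominal bit time.

```python
def hits_encode(bits, bit_time_ms=2.5, short_fraction=0.25):
    """Return (on_time, off_time) pairs in milliseconds, one pair per bit.

    ASSUMPTION: short_fraction is illustrative; it only has to be below 0.5.
    """
    if not 0 < short_fraction < 0.5:
        raise ValueError("short_fraction must be between 0 and 0.5")
    short = bit_time_ms * short_fraction
    long = bit_time_ms - short
    # A one is a long pulse then a short gap; a zero is the reverse.
    return [(long, short) if b else (short, long) for b in bits]


def hits_decode(intervals):
    """A bit is 1 when the carrier-on time exceeds the following off time."""
    return [1 if on > off else 0 for on, off in intervals]


bits = [1, 0, 1, 1, 0]
recorded = hits_encode(bits)
# Simulate a tape playing back 30% slow: every interval is stretched equally.
stretched = [(on * 1.3, off * 1.3) for on, off in recorded]
print(hits_decode(stretched) == bits)
```

Since the decoder compares the two halves of each bit against each other rather than against a fixed clock, a uniform change in playback speed leaves the result unchanged.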

Assembler code programs for reading and writing on Intel 8080 machines were provided.[2]

Kansas City Standard

The Kansas City Standard (KCS) was one of the few cassette formats that was standardized to any degree. It was created by a group of S-100 bus manufacturers at a meeting hosted by Byte Magazine in November 1975 in Kansas City.[3]

KCS was a simple FSK system that recorded zeros as four cycles of a 1200 Hz tone and ones as eight cycles of 2400 Hz. This produces an overall data rate of 300 bit/s. Data was recorded in eight-bit bytes, least significant bit first, with a parity bit added to seven-bit data if needed. A single space bit was added to the front and a single mark bit to the end to act as start and stop bits. The bytes were written individually, with the tone returning to the mark frequency between characters. If shorter data was being written, for instance, six-bit ASCII codes, any unused bits were filled with marks at the end to fill out eight bits. The data was written using a slightly modified version of Manchester encoding.[3]
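As a rough illustration of this framing, the following Python sketch turns one byte into the sequence of tone bursts written to tape. The names `kcs_frame` and `symbol_duration_s` are hypothetical, and the sketch models only the start bit, data bits, and stop bit, not parity or the leader tone.

```python
SPACE = (1200, 4)  # zero: four cycles of 1200 Hz
MARK = (2400, 8)   # one: eight cycles of 2400 Hz


def kcs_frame(byte):
    """Return (frequency_hz, cycles) bursts for one byte, in tape order."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("byte must be in 0..255")
    data = [(byte >> i) & 1 for i in range(8)]  # least significant bit first
    bits = [0] + data + [1]                     # space start bit, mark stop bit
    return [MARK if b else SPACE for b in bits]


def symbol_duration_s(symbol):
    freq_hz, cycles = symbol
    return cycles / freq_hz


frame = kcs_frame(ord("A"))
frame_time = sum(symbol_duration_s(s) for s in frame)
print(len(frame), "symbols,", round(frame_time * 1000, 2), "ms per byte")
```

Both symbols last 1/300 of a second, so each ten-bit frame takes one thirtieth of a second and the format carries at most about 30 bytes per second.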

No file format was specified beyond adding five seconds of marks at the start of the file, nor was any example code provided. This would be up to the vendors of the plug-in cards to provide.[3]

KCS variations

Figure: Interface Age magazine, May 1977 issue, with a Kansas City Standard flexi disc "Floppy ROM".

CUTS was a faster version of the KCS system, developed by Processor Technology. It used a single cycle of 1200 Hz for a space and two cycles of 2400 Hz for a mark. This made the effective data rate 1200 bit/s, four times that of the KCS version. The CUTS S-100 board could read and write either CUTS or KCS and could drive two tape decks from a single board.[4] Acorn Computers Ltd implemented both the original 300-baud KCS and the 1200-baud CUTS variation in their BBC Micro and Acorn Electron.[5]

MSX took this another step to 2400 bit/s by moving to higher frequencies, using a single 2400 Hz cycle for a space and two cycles of 4800 Hz for a mark.[6] Additionally, MSX defined a block-based file format, although it changed several times. Blocks could contain between 0 and 255 bytes of data with header information and a one-byte checksum, later changing to a 16-bit cyclic redundancy check (CRC).[7]
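The data rates quoted for these variants follow directly from the tone frequency and the number of cycles per bit. The Python sketch below tabulates the figures given in the text; the dictionary layout and the function name `bit_rate` are illustrative choices, not part of any of the specifications.

```python
# (frequency in Hz, cycles per bit) for each symbol, as given in the text.
VARIANTS = {
    "KCS": {"space": (1200, 4), "mark": (2400, 8)},
    "CUTS": {"space": (1200, 1), "mark": (2400, 2)},
    "MSX": {"space": (2400, 1), "mark": (4800, 2)},
}


def bit_rate(name):
    """Bits per second, checking that space and mark take the same time."""
    rates = {freq / cycles for freq, cycles in VARIANTS[name].values()}
    if len(rates) != 1:
        raise ValueError(f"{name}: space and mark have different durations")
    return rates.pop()


for name in VARIANTS:
    print(name, bit_rate(name), "bit/s")
```

In each variant the mark uses twice the frequency and twice the cycle count of the space, so every bit occupies the same time on tape regardless of its value.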

Other formats

After introducing CUTS, Bob Marsh approached Bob Jones, the publisher of Interface Age magazine, about the possibility of binding Flexi disc recordings into the magazine as a distribution mechanism. Their first attempt did not work and they moved on to other projects. The concept was then picked up by Daniel Meyer and Gary Kay of Southwest Technical Products (SWTPC), who arranged for Robert Uiterwyk to provide his 4K BASIC interpreter program for the Motorola 6800 in KCS format. Several attempts were required before they came up with a workable process of producing the discs. The May 1977 issue of Interface Age contains the first "Floppy ROM", a 33⅓ RPM record containing about six minutes of Kansas City standard audio.[8] Several additional such discs were distributed.

The 1200-baud CUTS variation was also used as the basis for the BASICODE system, which broadcast BASIC programs over commercial radio.[9] In this case, a five-second header and footer of the 2400 Hz signal was added to the file, and the program was sent as a single long series of ASCII bytes. The bytes were sent with a single mark start bit, eight bits of data containing a seven-bit ASCII code with the most significant bit set to 1, and a single mark stop bit. Users would record the programs to tape using their stereo equipment and then read the tapes in their existing computer decks.[10]

Apple

The Apple I introduced an expansion-card based cassette system similar to KCS, recording a single cycle of 2000 Hz for a space and a single cycle of 1000 Hz for a mark. This resulted in an average speed of about 1500 bit/s. The associated device driver in PROM offered an interactive mode that allowed users to transfer ranges of memory to and from tape. For instance, typing E000.EFFFR would read (the R at the end) data from the tape into memory locations $E000 to $EFFF (4 KB of data). When writing, 10 seconds of the mark signal was added as a header before the requested data was written.[11]

The Apple II moved the cassette interface onto the motherboard and made several changes to the format. The mark and space signals remained the same as in the original version, but the header was now ten seconds at 770 Hz followed by a new "sync tone" of one half cycle of 2500 Hz and one half cycle of 2000 Hz. Data following the header was recorded as before, but was now followed by an 8-bit checksum. Applesoft BASIC saved user programs as two "records", the first consisting of the header signal followed by the program length and checksum and the second with the header signal, program data and checksum.[12]

TI-99/4

The format for the TI-99/4 was driven by internal I/O pins being toggled at the rate needed to produce the proper tones on the cassette. This was accomplished by the TMS9901 support chip, which offered various clock dividers. For cassette operations, the clock divider was set to 17, and the input to the chip was the system's main 3 MHz clock divided by 64. Thus the TMS9901 produced an output every 17 / (3 MHz / 64) ≈ 362.7 microseconds, a rate of about 2757 Hz. To write a one to tape, the signal was toggled with every clock output, whereas a zero skipped one cycle. The result was that a mark was two cycles of 1379 Hz while a space was a single cycle of 690 Hz. The resulting data rate was about 700 bit/s.[13]
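The timing arithmetic can be worked through in a few lines of Python. This sketch uses only the clock, prescaler, and divider values given in the text; the function name `ti_timing` and the dictionary keys are hypothetical. Working the figures through directly gives roughly 362.7 microseconds per timer output.

```python
CLOCK_HZ = 3_000_000  # main system clock
PRESCALE = 64         # clock is divided by 64 before reaching the timer
DIVIDER = 17          # timer value used for cassette operations


def ti_timing():
    tick_s = DIVIDER * PRESCALE / CLOCK_HZ  # time between timer outputs
    return {
        "tick_us": tick_s * 1e6,
        # A one toggles on every tick, so a full cycle spans two ticks.
        "mark_hz": 1 / (2 * tick_s),
        # A zero toggles half as often, so a full cycle spans four ticks.
        "space_hz": 1 / (4 * tick_s),
        # Two mark cycles or one space cycle both last four ticks per bit.
        "bit_rate": 1 / (4 * tick_s),
    }


for key, value in ti_timing().items():
    print(f"{key}: {value:.1f}")
```

The bit rate equals the space frequency because a zero is exactly one space cycle, which is where the figure of roughly 700 bit/s comes from.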

The system also included a simple file format consisting of a 768-byte lead-in followed by a header with the number of blocks in the file. The data was encoded in blocks; these started with 8-byte lead-in of spaces and a single mark, then 64 bytes of data, and finally a 1-byte checksum, for a total length of 73 bytes. Every block was repeated twice as an error correction system, thus halving the effective data rate.[13]

The lead-in at the start of the file produced a steady tone that was used to measure the actual data rate on the tape, which might change due to tape stretch or differences between machines. During a read, the system set an input timer to the maximum value of $3FFF and then read one byte. When the byte was complete the timer was examined to see how many cycles had passed. This might be 16 or 18, for instance. This value was then put into the clock timer. During reads, if the signal did not change state during a clock period it was a 0; if it did, it was a 1.[13]

Commodore

The Commodore tape format, introduced on the Commodore PET, used a combination of FSK and PWM methodology. Bits were encoded within a fixed time period similar to PWM, but because the I/O hardware on most Commodore models responded only to the falling edge of a cycle, they were not capable of true PWM decoding. Instead, the fixed time period contained two complete cycles of differing lengths to simulate a PWM pulse and 'off' period. Zeros were encoded by a "short" cycle followed by a "medium" cycle, while ones were encoded as a medium cycle followed by a short cycle. The signals were sent directly from the output pin as a square wave which was "rounded off" by the recording media. A third "long" cycle was used for special tones: long-medium marked the start of each byte, and long-short marked the end of data.[14][15]
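The pairing of cycles into symbols can be sketched as a small lookup table in Python. This models only the short/medium/long pairs named above; the symbol labels and function names are hypothetical, and real cycle durations, bit order, and any per-byte check bits are deliberately left out because the text does not give them.

```python
SHORT, MEDIUM, LONG = "S", "M", "L"

# Each symbol on tape is a pair of complete cycles.
PAIR_MEANING = {
    (SHORT, MEDIUM): 0,
    (MEDIUM, SHORT): 1,
    (LONG, MEDIUM): "byte-start",
    (LONG, SHORT): "end-of-data",
}
SYMBOL_PAIRS = {meaning: pair for pair, meaning in PAIR_MEANING.items()}


def encode_symbols(symbols):
    """Flatten a list of symbols (0, 1, or a marker name) into cycle lengths."""
    cycles = []
    for symbol in symbols:
        cycles.extend(SYMBOL_PAIRS[symbol])
    return cycles


def decode_cycles(cycles):
    """Group cycles two at a time and look each pair up; KeyError if invalid."""
    if len(cycles) % 2:
        raise ValueError("cycles must come in pairs")
    return [PAIR_MEANING[(cycles[i], cycles[i + 1])]
            for i in range(0, len(cycles), 2)]


tape = encode_symbols(["byte-start", 0, 1, 1, "end-of-data"])
print("".join(tape))
```

Because both bit values contain one short and one medium cycle, every bit takes the same total time, and a decoder only has to decide which of the two cycles in a pair was longer.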

The primary use of the system was with Commodore BASIC, which recorded a header containing a series of bytes used as a way for the system to track the tape speed, followed by the file name, size and other data. The header was then repeated as a way to deal with data corruption. The program data followed as a single long stream of bytes, and was itself written a second time for the same reason.[14]

It was possible to bypass the Commodore tape format routines and access the I/O hardware directly, which allowed for the widespread development of 'turbo' loaders for Commodore computers.[14]

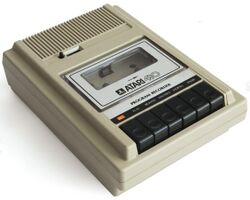

Atari 8-bit

The Atari 8-bit family used a system that was based on consultations with recording engineers, one of the most obvious outcomes being the use of two frequencies that were not harmonics of each other. Ones were represented by 5327 Hz and zeros by 3995 Hz, at a data rate of 600 bit/s.[16]

The operating system defined a packet-oriented file format with 128 bytes of payload with two header bytes, a control byte, and a following checksum, making the packet a total of 132 bytes long. The two header bytes were character 55 hex, binary 01010101 01010101, which was used by the circuitry to perform clock recovery. The control byte had three values: $FC was a full-length packet, $FA was a short packet with the length stored prior to the checksum, and finally $FE was the end-of-file (EOF) marker. Short packets and EOFs still contained a full 128-byte payload; they simply ignored the unused portions.[16]
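The packet layout can be sketched as follows in Python. The function names are hypothetical, and the checksum algorithm is an assumption: the text says only that a checksum follows the payload, so this sketch uses an 8-bit sum with end-around carry over all preceding bytes. The position of the short-packet length (the last payload byte) is read from "stored prior to the checksum".

```python
HEADER = bytes([0x55, 0x55])           # clock-recovery bytes
FULL, SHORT, EOF = 0xFC, 0xFA, 0xFE    # control byte values
PAYLOAD_SIZE = 128


def checksum(data):
    """ASSUMPTION: 8-bit sum with end-around carry; not specified in the text."""
    total = 0
    for b in data:
        total += b
        if total > 0xFF:
            total = (total & 0xFF) + 1
    return total


def _assemble(control, payload):
    body = HEADER + bytes([control]) + payload
    return body + bytes([checksum(body)])


def build_packet(data):
    """Build a full packet for 128 bytes, or a short packet for fewer."""
    if len(data) > PAYLOAD_SIZE:
        raise ValueError("at most 128 bytes per packet")
    if len(data) == PAYLOAD_SIZE:
        return _assemble(FULL, bytes(data))
    # Short packet: pad to 127 bytes, then store the length in the last byte.
    padded = bytes(data) + bytes(PAYLOAD_SIZE - 1 - len(data)) + bytes([len(data)])
    return _assemble(SHORT, padded)


def build_eof():
    """EOF packets still carry a full, unused 128-byte payload."""
    return _assemble(EOF, bytes(PAYLOAD_SIZE))


print(len(build_packet(b"hello")), len(build_eof()))
```

Keeping every packet at 132 bytes, even short and EOF packets, means a reader can use one fixed-size buffer and decide how to interpret it only after checking the control byte.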

Packets were separated by short periods of pure 5327 Hz tone, consisting of a pre-record write tone and a post-record gap, which together made up the "inter-record gap" or IRG. When opened for writing, the driver could be set to one of two modes, with short or normal IRGs. For binary formats, where the data was copied directly to or from memory with no interpretation, the short IRG of about 0.25 seconds was used. For other uses, such as a BASIC program in text format that had to be converted line by line to the internal binary format, the normal IRG of 3 seconds was used. This time was chosen to allow the cassette deck to come to a complete stop and restart before reaching the next packet, giving the system as much time as it needed to process the packet.[16]

Although there was a standard packet format, there was no defined file format used by the system as a whole. The closest thing was a header used in bootable cassettes, which contained only 6 bytes of data and lacked a file name or other identifying information. The boot packet contained the number of records (up to 255) in the second byte, the low and high bytes of the address to load to, and the low and high address of the location to jump to once the load had completed.[16]
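The six-byte boot header described above can be unpacked with Python's `struct` module. This is a sketch under stated assumptions: the class and field names are hypothetical, and the first byte is treated as opaque because the text does not describe its meaning.

```python
import struct
from typing import NamedTuple


class BootHeader(NamedTuple):
    first_byte: int     # meaning not described in the text; kept as-is
    record_count: int   # number of records to load (up to 255)
    load_address: int   # where the data is loaded
    run_address: int    # where execution jumps after loading


def parse_boot_header(data):
    """Unpack the first six bytes; addresses are stored low byte first."""
    if len(data) < 6:
        raise ValueError("boot header needs at least 6 bytes")
    # "<" selects little-endian: B = one byte, H = 16-bit unsigned.
    return BootHeader(*struct.unpack("<BBHH", data[:6]))


header = parse_boot_header(bytes([0x00, 0x03, 0x00, 0x07, 0x06, 0x07]))
print(header.record_count, hex(header.load_address), hex(header.run_address))
```

The example bytes are made up for illustration; they describe a three-record load to $0700 with execution continuing at $0706.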

In addition to the standard packet format, the driver gave the user direct control over the tape drive motors and reading and writing the tones. This was used with audio tapes to control playback. A typical scenario would have an audio recording on the "left" track and short bursts of 5327 Hz at key locations within the audio. The program would then start the tape motor, causing the audio to be routed through the television speaker, waiting for a 1 to appear on the I/O port. When this was seen, the program would stop the tape and wait for some user action before starting it again.[16]

References

1. RCA COSMAC VIP Instruction Manual. RCA. 1978. pp. 30–32. http://www.bitsavers.org/components/rca/cosmac/COSMAC_VIP_Instruction_Manual_1978.pdf.

2. Ogdin, Jerry (September 1975). "Hobbyist Interchange Tape System". Popular Electronics: 57–61. http://cini.classiccmp.org/pdf/pe/1975/PE1975-Sep-pg57.pdf.

3. Peschke, Manfred; Peschke, Virginia (February 1976). "BYTE's Audio Cassette Standards Symposium". Byte: 72, 73. https://archive.org/stream/byte-magazine-1976-02/1976_02_BYTE_00-06_Color_Graphics#page/n74.

4. CUTS Assembly and Test Instructions. http://www.s100computers.com/Hardware%20Manuals/Processor%20Technology/CUTS%20Manual.pdf.

5. R. T. Russell, BBC Engineering Designs Department (1981). The BBC Microcomputer System. PART II — HARDWARE SPECIFICATION (Report). The British Broadcasting Corporation. http://chrisacorns.computinghistory.org.uk/docs/BBC/HardwareSpecification.txt.

6. "4, ROM BIOS". The MSX Red Book. Kuma Computers. 1985. ISBN 0-7457-0178-7.

7. "Acorn cassette format". https://beebwiki.mdfs.net/Acorn_cassette_format.

8. Jones, Robert S. (May 1977). "The Floppy ROM Experiment". Interface Age (McPheters, Wolfe & Jones) 2 (6): 28, 83. https://archive.org/details/Interface_Age_vol02I06_1977_May.

9. "History of BASICODE". https://www.hobbyscoop.nl/the-history-of-basicode/.

10. Benschop, Lennart. "BASICODE". https://lennartb.home.xs4all.nl/basic/basicode.html.

11. Apple-1 Cassette Interface. Apple. 1976. http://www.bitsavers.org/pdf/apple/apple_I/Apple-1_Cassette_Interface.pdf.

12. Gaylor, Winston (1983). Apple II Circuit Description. Howard and Sams. http://www.applevault.com/hardware/apple/apple2/apple2cassette.html.

13. Nouspikel, Thierry (3 April 1999). "Cassette tape interface". http://www.unige.ch/medecine/nouspikel/ti99/cassette.htm#Cassette%20tape%20format.

14. Ekstrand, Cris. "Tape Format". http://sidpreservation.6581.org/tape-format/.

15. De Ceukelaire, Harrie (February 1985). "How TurboTape Works". Compute!: 112. https://www.atarimagazines.com/compute/issue57/turbotape.html.

16. Crawford, Chris (1982). De Re Atari. Atari. https://archive.org/details/ataribooks-de-re-atari/page/n187.

Further reading

- Cassetternet – Radiolab Mixtape episode on broadcasting programs over the radio
