Formal power series

From HandWiki - Reading time: 29 min

In mathematics, a formal series is an infinite sum that is considered independently from any notion of convergence, and can be manipulated with the usual algebraic operations on series (addition, subtraction, multiplication, division, partial sums, etc.).

A formal power series is a special kind of formal series, whose terms are of the form $a x^n$, where $x^n$ is the $n$th power of a variable $x$ ($n$ is a non-negative integer) and $a$ is called the coefficient. Hence, formal power series can be viewed as a generalization of polynomials, where the number of terms is allowed to be infinite, with no requirements of convergence. Thus, the series may no longer represent a function of its variable, merely a formal sequence of coefficients, in contrast to a power series, which defines a function by taking numerical values for the variable within a radius of convergence. In a formal power series, the $x^n$ are used only as position-holders for the coefficients, so that the coefficient of $x^5$ is the term of index 5 in the sequence (counting from index 0). In combinatorics, the method of generating functions uses formal power series to represent numerical sequences and multisets, for instance allowing concise expressions for recursively defined sequences regardless of whether the recursion can be explicitly solved. More generally, formal power series can include series with any finite (or countable) number of variables, and with coefficients in an arbitrary ring.

Rings of formal power series are complete local rings, and this allows using calculus-like methods in the purely algebraic framework of algebraic geometry and commutative algebra. They are analogous in many ways to p-adic integers, which can be defined as formal series of the powers of p.

Introduction

A formal power series can be loosely thought of as an object that is like a polynomial, but with infinitely many terms. Alternatively, for those familiar with power series (or Taylor series), one may think of a formal power series as a power series in which we ignore questions of convergence by not assuming that the variable $X$ denotes any numerical value (not even an unknown value). For example, consider the series

$$A = 1 - 3X + 5X^2 - 7X^3 + 9X^4 - 11X^5 + \cdots.$$

If we studied this as a power series, its properties would include, for example, that its radius of convergence is 1. However, as a formal power series, we may ignore this completely; all that is relevant is the sequence of coefficients [1, −3, 5, −7, 9, −11, ...]. In other words, a formal power series is an object that just records a sequence of coefficients. It is perfectly acceptable to consider a formal power series with the factorials [1, 1, 2, 6, 24, 120, 720, 5040, ... ] as coefficients, even though the corresponding power series diverges for any nonzero value of X.

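Arithmetic on formal power series is carried out by simply pretending that the series are polynomials. For example, if $A$ is the series above and

$$B = 2X + 4X^3 + 6X^5 + \cdots,$$

then we add $A$ and $B$ term by term:

$$A + B = 1 - X + 5X^2 - 3X^3 + 9X^4 - 5X^5 + \cdots.$$

We can multiply formal power series, again just by treating them as polynomials (see in particular Cauchy product):

$$AB = 2X - 6X^2 + 14X^3 - 26X^4 + 44X^5 + \cdots.$$

Notice that each coefficient in the product $AB$ only depends on a finite number of coefficients of $A$ and $B$. For example, the $X^5$ term is given by

$$44X^5 = (1 \times 6X^5) + (5X^2 \times 4X^3) + (9X^4 \times 2X).$$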

For this reason, one may multiply formal power series without worrying about the usual questions of absolute, conditional and uniform convergence which arise in dealing with power series in the setting of analysis.
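
The term-by-term addition and Cauchy product described above can be sketched on truncated coefficient lists. This is only an illustration: `add_series` and `mul_series` are hypothetical helper names, `A` uses the coefficients listed earlier, and the second list `B` (coefficients 0, 2, 0, 4, 0, 6) is assumed for the example.

```python
def add_series(a, b):
    """Add two truncated series given as coefficient lists (index = exponent)."""
    return [x + y for x, y in zip(a, b)]


def mul_series(a, b):
    """Cauchy product, truncated to the common length of the inputs.

    Coefficient m of the product only needs a[0..m] and b[0..m],
    which is why truncation loses nothing below the cut-off.
    """
    n = min(len(a), len(b))
    return [sum(a[k] * b[m - k] for k in range(m + 1)) for m in range(n)]


A = [1, -3, 5, -7, 9, -11]   # coefficients of X^0 .. X^5 from the text
B = [0, 2, 0, 4, 0, 6]       # illustrative second series (an assumption)

print(add_series(A, B))  # [1, -1, 5, -3, 9, -5]
print(mul_series(A, B))  # [0, 2, -6, 14, -26, 44]
```

The coefficient 44 of $X^5$ in the product is the finite sum $1\cdot 6 + 5\cdot 4 + 9\cdot 2$; no limit is taken anywhere.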

Once we have defined multiplication for formal power series, we can define multiplicative inverses as follows. The multiplicative inverse of a formal power series $A$ is a formal power series $C$ such that $AC = 1$, provided that such a formal power series exists. It turns out that if $A$ has a multiplicative inverse, it is unique, and we denote it by $A^{-1}$. Now we can define division of formal power series by defining $B/A$ to be the product $BA^{-1}$, provided that the inverse of $A$ exists. For example, one can use the definition of multiplication above to verify the familiar formula

$$\frac{1}{1 + X} = 1 - X + X^2 - X^3 + X^4 - X^5 + \cdots.$$

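An important operation on formal power series is coefficient extraction. In its most basic form, the coefficient extraction operator $[X^n]$ applied to a formal power series $A$ in one variable extracts the coefficient of the $n$th power of the variable, so that for the series $A$ above $[X^2]A = 5$ and $[X^5]A = -11$. Other examples include

$$[X^2](X + 3X^2Y^3 + 10Y^6) = 3Y^3, \qquad [X^2Y^3](X + 3X^2Y^3 + 10Y^6) = 3,$$

$$[X^n]\left(\frac{1}{1+X}\right) = (-1)^n, \qquad [X^n]\left(\frac{X}{(1-X)^2}\right) = n.$$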

Similarly, many other operations that are carried out on polynomials can be extended to the formal power series setting, as explained below.

The ring of formal power series

If one considers the set of all formal power series in $X$ with coefficients in a commutative ring $R$, the elements of this set collectively constitute another ring which is written $R[[X]]$ and called the ring of formal power series in the variable $X$ over $R$.

Definition of the formal power series ring

One can characterize $R[[X]]$ abstractly as the completion of the polynomial ring $R[X]$ equipped with a particular metric. This automatically gives $R[[X]]$ the structure of a topological ring (and even of a complete metric space). But the general construction of a completion of a metric space is more involved than what is needed here, and would make formal power series seem more complicated than they are. It is possible to describe $R[[X]]$ more explicitly, and define the ring structure and topological structure separately, as follows.

Ring structure

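As a set, $R[[X]]$ can be constructed as the set $R^{\mathbb{N}}$ of all infinite sequences of elements of $R$, indexed by the natural numbers (taken to include 0). Designating a sequence whose term at index $n$ is $a_n$ by $(a_n)$, one defines addition of two such sequences by

$$(a_n)_{n\in\mathbb{N}} + (b_n)_{n\in\mathbb{N}} = (a_n + b_n)_{n\in\mathbb{N}}$$

and multiplication by

$$(a_n)_{n\in\mathbb{N}} \times (b_n)_{n\in\mathbb{N}} = \left(\sum_{k=0}^{n} a_k b_{n-k}\right)_{n\in\mathbb{N}}.$$

This type of product is called the Cauchy product of the two sequences of coefficients, and is a sort of discrete convolution. With these operations, $R^{\mathbb{N}}$ becomes a commutative ring with zero element $(0, 0, 0, \ldots)$ and multiplicative identity $(1, 0, 0, \ldots)$.

The product is in fact the same one used to define the product of polynomials in one indeterminate, which suggests using a similar notation. One embeds $R$ into $R[[X]]$ by sending any (constant) $a \in R$ to the sequence $(a, 0, 0, \ldots)$ and designates the sequence $(0, 1, 0, 0, \ldots)$ by $X$; then using the above definitions every sequence with only finitely many nonzero terms can be expressed in terms of these special elements as

$$(a_0, a_1, a_2, \ldots, a_n, 0, 0, \ldots) = a_0 + a_1 X + \cdots + a_n X^n = \sum_{i=0}^{n} a_i X^i;$$

these are precisely the polynomials in $X$. Given this, it is quite natural and convenient to designate a general sequence $(a_n)_{n\in\mathbb{N}}$ by the formal expression $\sum_{i\in\mathbb{N}} a_i X^i$, even though the latter is not an expression formed by the operations of addition and multiplication defined above (from which only finite sums can be constructed). This notational convention allows reformulation of the above definitions as

$$\left(\sum_{i\in\mathbb{N}} a_i X^i\right) + \left(\sum_{i\in\mathbb{N}} b_i X^i\right) = \sum_{i\in\mathbb{N}} (a_i + b_i) X^i$$

and

$$\left(\sum_{i\in\mathbb{N}} a_i X^i\right) \times \left(\sum_{i\in\mathbb{N}} b_i X^i\right) = \sum_{n\in\mathbb{N}} \left(\sum_{k=0}^{n} a_k b_{n-k}\right) X^n,$$

which is quite convenient, but one must be aware of the distinction between formal summation (a mere convention) and actual addition.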

Topological structure

Having stipulated conventionally that

$$(a_0, a_1, a_2, a_3, \ldots) = \sum_{i=0}^{\infty} a_i X^i, \qquad (1)$$

one would like to interpret the right hand side as a well-defined infinite summation. To that end, a notion of convergence in $R^{\mathbb{N}}$ is defined and a topology on $R^{\mathbb{N}}$ is constructed. There are several equivalent ways to define the desired topology.

- We may give $R^{\mathbb{N}}$ the product topology, where each copy of $R$ is given the discrete topology.

- We may give $R^{\mathbb{N}}$ the $I$-adic topology, where $I = (X)$ is the ideal generated by $X$, which consists of all sequences whose first term $a_0$ is zero.

- The desired topology could also be derived from the following metric. The distance between distinct sequences $(a_n), (b_n) \in R^{\mathbb{N}}$ is defined to be $d((a_n), (b_n)) = 2^{-k}$, where $k$ is the smallest natural number such that $a_k \neq b_k$; the distance between two equal sequences is of course zero.

Informally, two sequences $(a_n)$ and $(b_n)$ become closer and closer if and only if more and more of their terms agree exactly. Formally, the sequence of partial sums of some infinite summation converges if for every fixed power of $X$ the coefficient stabilizes: there is a point beyond which all further partial sums have the same coefficient. This is clearly the case for the right hand side of (1), regardless of the values $a_n$, since inclusion of the term for $i = n$ gives the last (and in fact only) change to the coefficient of $X^n$. It is also obvious that the limit of the sequence of partial sums is equal to the left hand side.
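
The metric and the stabilizing partial sums can be made concrete with a small sketch. Sequences are modelled here as Python functions from an index to a coefficient. The names `distance`, `full` and `partial_sum`, the sample series with coefficients $n + 1$, and the search bound `depth` are assumptions made for this illustration only.

```python
from fractions import Fraction


def distance(a, b, depth=64):
    """d(a, b) = 2**(-k), with k the first index where a and b differ.

    a and b map an index n to a coefficient. Only indices below `depth`
    are inspected, so a result of 0 means "no difference found so far".
    """
    for k in range(depth):
        if a(k) != b(k):
            return Fraction(1, 2 ** k)
    return Fraction(0)


def full(n):
    """Coefficient of X^n in the sample series sum (n + 1) X^n."""
    return n + 1


def partial_sum(N):
    """Partial sum keeping the terms of degree <= N, as a coefficient function."""
    return lambda n: full(n) if n <= N else 0


for N in range(4):
    # Each extra term halves the distance to the full series.
    print(N, distance(partial_sum(N), full))
```

The distance from the $N$th partial sum to the full series is $2^{-(N+1)}$, so the partial sums converge whatever the coefficients are.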

This topological structure, together with the ring operations described above, forms a topological ring. This is called the ring of formal power series over $R$ and is denoted by $R[[X]]$. The topology has the useful property that an infinite summation converges if and only if the sequence of its terms converges to 0, which just means that any fixed power of $X$ occurs in only finitely many terms.

The topological structure allows much more flexible usage of infinite summations. For instance the rule for multiplication can be restated simply as

$$\left(\sum_{i\in\mathbb{N}} a_i X^i\right) \times \left(\sum_{j\in\mathbb{N}} b_j X^j\right) = \sum_{i,j\in\mathbb{N}} a_i b_j X^{i+j},$$

since only finitely many terms on the right affect any fixed $X^n$. Infinite products are also defined by the topological structure; it can be seen that an infinite product converges if and only if the sequence of its factors converges to 1 (in which case the product is nonzero) or infinitely many factors have no constant term (in which case the product is zero).

Alternative topologies

The above topology is the finest topology for which

$$\sum_{i=0}^{\infty} a_i X^i$$

always converges as a summation to the formal power series designated by the same expression, and it often suffices to give a meaning to infinite sums and products, or other kinds of limits that one wishes to use to designate particular formal power series. It can however happen occasionally that one wishes to use a coarser topology, so that certain expressions become convergent that would otherwise diverge. This applies in particular when the base ring $R$ already comes with a topology other than the discrete one, for instance if it is also a ring of formal power series.

In the ring of formal power series $\mathbb{Z}[[X]][[Y]]$, the topology of the above construction only relates to the indeterminate $Y$, since the topology that was put on $\mathbb{Z}[[X]]$ has been replaced by the discrete topology when defining the topology of the whole ring. So

$$\sum_{i=0}^{\infty} X Y^i$$

converges (and its sum can be written as $\tfrac{X}{1-Y}$); however

$$\sum_{i=0}^{\infty} X^i Y$$

would be considered to be divergent, since every term affects the coefficient of $Y$. This asymmetry disappears if the power series ring in $Y$ is given the product topology where each copy of $\mathbb{Z}[[X]]$ is given its topology as a ring of formal power series rather than the discrete topology. With this topology, a sequence of elements of $\mathbb{Z}[[X]][[Y]]$ converges if the coefficient of each power of $Y$ converges to a formal power series in $X$, a weaker condition than stabilizing entirely. For instance, with this topology, in the second example given above, the coefficient of $Y$ converges to $\tfrac{1}{1-X}$, so the whole summation converges to $\tfrac{Y}{1-X}$.

This way of defining the topology is in fact the standard one for repeated constructions of rings of formal power series, and gives the same topology as one would get by taking formal power series in all indeterminates at once. In the above example that would mean constructing $\mathbb{Z}[[X,Y]]$, and here a sequence converges if and only if the coefficient of every monomial $X^iY^j$ stabilizes. This topology, which is also the $I$-adic topology, where $I = (X, Y)$ is the ideal generated by $X$ and $Y$, still enjoys the property that a summation converges if and only if its terms tend to 0.

The same principle could be used to make other divergent limits converge. For instance in $\mathbb{R}[[X]]$ the limit

$$\lim_{n\to\infty} \left(1 + \frac{X}{n}\right)^{n}$$

does not exist, so in particular it does not converge to

$$\exp(X) = \sum_{n\in\mathbb{N}} \frac{X^n}{n!}.$$

This is because for $i \geq 2$ the coefficient $\binom{n}{i}/n^i$ of $X^i$ does not stabilize as $n \to \infty$. It does however converge in the usual topology of $\mathbb{R}$, and in fact to the coefficient $\tfrac{1}{i!}$ of $\exp(X)$. Therefore, if one would give $\mathbb{R}[[X]]$ the product topology of $\mathbb{R}^{\mathbb{N}}$ where the topology of $\mathbb{R}$ is the usual topology rather than the discrete one, then the above limit would converge to $\exp(X)$. This more permissive approach is not however the standard when considering formal power series, as it would lead to convergence considerations that are as subtle as they are in analysis, while the philosophy of formal power series is on the contrary to make convergence questions as trivial as they can possibly be. With this topology it would not be the case that a summation converges if and only if its terms tend to 0.
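
A short numerical sketch of the failure to stabilize, assuming the sequence under discussion is $(1 + X/n)^n$ with exact rational coefficients. The helper name `coeff` is hypothetical; it evaluates the coefficient of $X^i$ by the binomial theorem.

```python
from fractions import Fraction
from math import comb


def coeff(n, i):
    """Coefficient of X^i in (1 + X/n)^n, i.e. binomial(n, i) / n**i."""
    return Fraction(comb(n, i), n ** i)


for n in (2, 10, 100, 1000):
    # The X^2 coefficient equals (n - 1) / (2n): it changes with every n,
    # so it never stabilizes, although it approaches 1/2 numerically.
    print(n, coeff(n, 2), float(coeff(n, 2)))
```

The coefficients of $X^0$ and $X^1$ are constant in $n$, but already the coefficient of $X^2$ takes a different value for every $n$, which is exactly what convergence in the discrete-coefficient topology forbids.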

Universal property

The ring $R[[X]]$ may be characterized by the following universal property. If $S$ is a commutative associative algebra over $R$, if $I$ is an ideal of $S$ such that the $I$-adic topology on $S$ is complete, and if $x$ is an element of $I$, then there is a unique $\Phi : R[[X]] \to S$ with the following properties:

- $\Phi$ is an $R$-algebra homomorphism

- $\Phi$ is continuous

- $\Phi(X) = x$.

Operations on formal power series

One can perform algebraic operations on power series to generate new power series.[1][2] Besides the ring structure operations defined above, we have the following.

Power series raised to powers

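For any natural number $n$ we have

$$\left(\sum_{k=0}^{\infty} a_k X^k\right)^{n} = \sum_{m=0}^{\infty} c_m X^m,$$

where

$$c_0 = a_0^n, \qquad c_m = \frac{1}{m a_0} \sum_{k=1}^{m} (kn - m + k)\, a_k c_{m-k}, \quad m \geq 1.$$

(This formula can only be used if $m$ and $a_0$ are invertible in the ring of coefficients.)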
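
A minimal sketch of a recurrence of this kind, using exact rational arithmetic so that the divisions by $m$ and $a_0$ are harmless. The function name `series_power` is hypothetical, and the recurrence implemented is the one spelled out in its docstring.

```python
from fractions import Fraction


def series_power(a, n, terms):
    """First `terms` coefficients of (sum a_k X^k)**n via the recurrence

        c_0 = a_0**n
        c_m = (1 / (m a_0)) * sum_{k=1..m} (k n - m + k) a_k c_{m-k}

    Requires a[0] != 0; Fractions keep every division exact.
    """
    a = [Fraction(x) for x in a] + [Fraction(0)] * terms
    if a[0] == 0:
        raise ValueError("a_0 must be invertible")
    c = [a[0] ** n]
    for m in range(1, terms):
        s = sum((k * n - m + k) * a[k] * c[m - k] for k in range(1, m + 1))
        c.append(s / (m * a[0]))
    return c


# (1 + X)^4 has coefficients 1, 4, 6, 4, 1 and then zeros.
print([int(x) for x in series_power([1, 1], 4, 6)])
```

The recurrence costs a quadratic number of operations in the number of requested terms, independent of $n$, which is the practical reason to prefer it over repeated multiplication for large exponents.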

In the case of formal power series with complex coefficients, the complex powers are well defined at least for series $f$ with constant term equal to 1. In this case, $f^{\alpha}$ can be defined either by composition with the binomial series $(1+x)^{\alpha}$, or by composition with the exponential and the logarithmic series, $f^{\alpha} = \exp(\alpha \log(f))$, or as the solution of the differential equation $f (f^{\alpha})' = \alpha f^{\alpha} f'$ with constant term 1, the three definitions being equivalent. The rules of calculus $(f^{\alpha})^{\beta} = f^{\alpha\beta}$ and $f^{\alpha} g^{\alpha} = (fg)^{\alpha}$ easily follow.

Multiplicative inverse

The series

$$A = \sum_{n=0}^{\infty} a_n X^n \in R[[X]]$$

is invertible in $R[[X]]$ if and only if its constant coefficient $a_0$ is invertible in $R$. This condition is necessary, for the following reason: if we suppose that $A$ has an inverse $B = b_0 + b_1 X + \cdots$, then the constant term $a_0 b_0$ of $A \cdot B$ is the constant term of the identity series, i.e. it is 1. This condition is also sufficient; we may compute the coefficients of the inverse series $B$ via the explicit recursive formula

$$b_0 = \frac{1}{a_0}, \qquad b_n = -\frac{1}{a_0} \sum_{i=1}^{n} a_i b_{n-i}, \quad n \geq 1.$$

An important special case is that the geometric series formula is valid in $R[[X]]$:

$$(1 - X)^{-1} = \sum_{n=0}^{\infty} X^n.$$

If $R = K$ is a field, then a series is invertible if and only if the constant term is non-zero, i.e. if and only if the series is not divisible by $X$. This means that $K[[X]]$ is a discrete valuation ring with uniformizing parameter $X$.
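
The recursive computation of an inverse can be sketched as follows, working over the rationals. The function name `inverse` is hypothetical; the recursion used is $b_0 = 1/a_0$ and $b_n = -\tfrac{1}{a_0}\sum_{i=1}^{n} a_i b_{n-i}$, which comes from comparing coefficients in $A \cdot B = 1$.

```python
from fractions import Fraction


def inverse(a, terms):
    """First `terms` coefficients of 1/A for A = sum a_n X^n.

    Raises ValueError when the constant term is not invertible
    (over the rationals: when it is zero).
    """
    a = [Fraction(x) for x in a] + [Fraction(0)] * terms
    if a[0] == 0:
        raise ValueError("constant term must be invertible")
    b = [1 / a[0]]
    for n in range(1, terms):
        b.append(-sum(a[i] * b[n - i] for i in range(1, n + 1)) / a[0])
    return b


print([int(x) for x in inverse([1, -1], 5)])  # geometric series: [1, 1, 1, 1, 1]
print([int(x) for x in inverse([1, 1], 5)])   # 1/(1+X): [1, -1, 1, -1, 1]
```

Each $b_n$ depends only on $a_0, \ldots, a_n$ and on earlier $b_i$, so any finite number of coefficients of the inverse is obtained in finitely many steps.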

Division

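The computation of a quotient $f/g = h$,

$$\frac{\sum_{n=0}^{\infty} a_n X^n}{\sum_{n=0}^{\infty} b_n X^n} = \sum_{n=0}^{\infty} c_n X^n,$$

assuming the denominator is invertible (that is, $b_0$ is invertible in the ring of scalars), can be performed as the product of $f$ and the inverse of $g$, or by directly equating the coefficients in $f = gh$:

$$c_n = \frac{1}{b_0} \left(a_n - \sum_{k=1}^{n} b_k c_{n-k}\right).$$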
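
Equating coefficients in $f = gh$ gives a direct recursion for the quotient, sketched below over the rationals. The function name `divide` is hypothetical; as a usage example it expands $X/(1 - X - X^2)$, the well-known generating function of the Fibonacci numbers.

```python
from fractions import Fraction


def divide(a, b, terms):
    """First `terms` coefficients of h = f/g, where f has coefficients a
    and g has coefficients b with b[0] invertible.

    From f = g*h:  c_n = (a_n - sum_{k=1..n} b_k c_{n-k}) / b_0.
    """
    a = [Fraction(x) for x in a] + [Fraction(0)] * terms
    b = [Fraction(x) for x in b] + [Fraction(0)] * terms
    if b[0] == 0:
        raise ValueError("denominator must have an invertible constant term")
    c = []
    for n in range(terms):
        tail = sum(b[k] * c[n - k] for k in range(1, n + 1))
        c.append((a[n] - tail) / b[0])
    return c


fib = divide([0, 1], [1, -1, -1], 10)
print([int(x) for x in fib])  # [0, 1, 1, 2, 3, 5, 8, 13, 21, 34]
```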

Extracting coefficients

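The coefficient extraction operator applied to a formal power series

$$f(X) = \sum_{n=0}^{\infty} a_n X^n$$

in $X$ is written

$$[X^m] f(X)$$

and extracts the coefficient of $X^m$, so that

$$[X^m] f(X) = [X^m] \sum_{n=0}^{\infty} a_n X^n = a_m.$$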

Composition

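Given formal power series

$$f(X) = \sum_{n=1}^{\infty} a_n X^n = a_1 X + a_2 X^2 + \cdots, \qquad g(X) = \sum_{n=0}^{\infty} b_n X^n = b_0 + b_1 X + b_2 X^2 + \cdots,$$

one may form the composition

$$g(f(X)) = \sum_{n=0}^{\infty} b_n (f(X))^n = \sum_{n=0}^{\infty} c_n X^n,$$

where the coefficients $c_n$ are determined by "expanding out" the powers of $f(X)$:

$$c_n := \sum_{k\in\mathbb{N},\ |j| = n} b_k\, a_{j_1} a_{j_2} \cdots a_{j_k}.$$

Here the sum is extended over all $(k, j)$ with $k \in \mathbb{N}$ and $j \in \mathbb{N}_+^k$ with $|j| := j_1 + \cdots + j_k = n$.

A more explicit description of these coefficients is provided by Faà di Bruno's formula, at least in the case where the coefficient ring is a field of characteristic 0.

Composition is only valid when $f(X)$ has no constant term, so that each $c_n$ depends on only a finite number of coefficients of $f(X)$ and $g(X)$. In other words, the series for $g(f(X))$ converges in the topology of $R[[X]]$.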

Example

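Assume that the ring $R$ has characteristic 0 and the nonzero integers are invertible in $R$. If we denote by $\exp(X)$ the formal power series

$$\exp(X) = 1 + X + \frac{X^2}{2!} + \frac{X^3}{3!} + \frac{X^4}{4!} + \cdots,$$

then the expression

$$\exp(\exp(X) - 1) = 1 + X + X^2 + \frac{5X^3}{6} + \frac{5X^4}{8} + \cdots$$

makes perfect sense as a formal power series. However, the statement

$$\exp(\exp(X)) = e \exp(\exp(X) - 1) = e + eX + eX^2 + \frac{5eX^3}{6} + \cdots$$

is not a valid application of the composition operation for formal power series. Rather, it is confusing the notions of convergence in $R[[X]]$ and convergence in $R$; indeed, the ring $R$ may not even contain any number $e$ with the appropriate properties.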
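
The composition can be sketched on truncated series with Horner's scheme, which is one convenient way to organise the computation (not necessarily how the coefficients are described in the text). The helper names `mul` and `compose` are hypothetical. The sketch rejects an inner series with a nonzero constant term, mirroring the restriction above.

```python
from fractions import Fraction
from math import factorial


def mul(a, b, terms):
    """Cauchy product truncated to `terms` coefficients."""
    return [sum(a[k] * b[m - k] for k in range(m + 1)) for m in range(terms)]


def compose(g, f, terms):
    """First `terms` coefficients of g(f(X)); f needs at least `terms` entries.

    Horner's scheme: g(f) = b_0 + f*(b_1 + f*(b_2 + ...)). Because f has no
    constant term, coefficients of g beyond index terms-1 cannot contribute.
    """
    if f[0] != 0:
        raise ValueError("inner series must have zero constant term")
    result = [Fraction(0)] * terms
    for b in reversed(g[:terms]):
        result = mul(result, f, terms)
        result[0] += b
    return result


N = 5
exp_series = [Fraction(1, factorial(n)) for n in range(N)]
exp_minus_1 = [Fraction(0)] + exp_series[1:]

# exp(exp(X) - 1) = 1 + X + X^2 + 5/6 X^3 + 5/8 X^4 + ...
print([str(c) for c in compose(exp_series, exp_minus_1, N)])
```

Calling `compose(exp_series, exp_series, N)` raises `ValueError`: with a constant term in the inner series, every coefficient of the result would need infinitely many terms.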

Composition inverse

Whenever a formal series

$$f(X) = \sum_{k} f_k X^k \in R[[X]]$$

has $f_0 = 0$ and $f_1$ being an invertible element of $R$, there exists a series

$$g(X) = \sum_{k} g_k X^k$$

that is the composition inverse of $f$, meaning that composing $f$ with $g$ gives the series representing the identity function $X = 0 + 1X + 0X^2 + 0X^3 + \cdots$. The coefficients of $g$ may be found recursively by using the above formula for the coefficients of a composition, equating them with those of the composition identity $X$ (that is 1 at degree 1 and 0 at every degree greater than 1). In the case when the coefficient ring is a field of characteristic 0, the Lagrange inversion formula (discussed below) provides a powerful tool to compute the coefficients of $g$, as well as the coefficients of the (multiplicative) powers of $g$.
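
The recursive determination of the inverse can be sketched as follows. At degree $n$ the unknown coefficient $g_n$ enters $f(g(X))$ only through the term $f_1 g_n$, so it is fixed by cancelling the contribution of the already known coefficients. The helper names are hypothetical and exact rational arithmetic is assumed.

```python
from fractions import Fraction


def mul(a, b, terms):
    """Cauchy product truncated to `terms` coefficients."""
    return [sum(a[k] * b[m - k] for k in range(m + 1)) for m in range(terms)]


def compose(outer, inner, terms):
    """outer(inner(X)) truncated to `terms` coefficients (Horner's scheme).

    Assumes inner[0] == 0 and len(inner) >= terms.
    """
    result = [Fraction(0)] * terms
    for coeff in reversed(outer[:terms]):
        result = mul(result, inner, terms)
        result[0] += coeff
    return result


def compositional_inverse(f, terms):
    """Coefficients g_0 .. g_{terms-1} (terms >= 2) with f(g(X)) = X."""
    f = [Fraction(x) for x in f] + [Fraction(0)] * terms
    if f[0] != 0 or f[1] == 0:
        raise ValueError("need f_0 = 0 and f_1 invertible")
    g = [Fraction(0)] * terms
    g[1] = 1 / f[1]
    for n in range(2, terms):
        # With g[n] still 0, the X^n coefficient of f(g) is the part that
        # f_1 * g_n has to cancel.
        g[n] = -compose(f, g, n + 1)[n] / f[1]
    return g


g = compositional_inverse([0, 1, 1], 6)   # inverse of X + X^2
print([int(c) for c in g])                # [0, 1, -1, 2, -5, 14]
```

Recomposing at every degree is simple rather than fast; it is meant to mirror the "equate coefficients degree by degree" description, not to be an efficient algorithm.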

Formal differentiation

Given a formal power series

$$f = \sum_{n\geq 0} a_n X^n \in R[[X]],$$

we define its formal derivative, denoted $Df$ or $f'$, by

$$Df = f' = \sum_{n\geq 1} a_n n X^{n-1}.$$

The symbol $D$ is called the formal differentiation operator. This definition simply mimics term-by-term differentiation of a polynomial.

This operation is $R$-linear:

$$D(af + bg) = a \cdot Df + b \cdot Dg$$

for any $a, b$ in $R$ and any $f, g$ in $R[[X]]$. Additionally, the formal derivative has many of the properties of the usual derivative of calculus. For example, the product rule is valid:

$$D(fg) = f \cdot (Dg) + (Df) \cdot g,$$

and the chain rule works as well:

$$D(f \circ g) = (Df \circ g) \cdot Dg,$$

whenever the appropriate compositions of series are defined (see above under composition of series).

Thus, in these respects formal power series behave like Taylor series. Indeed, for the $f$ defined above, we find that

$$(D^k f)(0) = k!\, a_k,$$

where $D^k$ denotes the $k$th formal derivative (that is, the result of formally differentiating $k$ times).
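
The formal derivative and two of its properties can be checked on truncated integer series. This is a sketch only: the helper names are hypothetical and the sample coefficient lists `f` and `g` are arbitrary.

```python
from math import factorial


def derivative(a):
    """Formal derivative: coefficient n of a becomes n * a[n] at index n - 1."""
    return [n * a[n] for n in range(1, len(a))]


def mul(a, b, terms):
    """Cauchy product truncated to `terms` coefficients."""
    return [sum(a[k] * b[m - k] for k in range(m + 1)) for m in range(terms)]


def add(a, b):
    return [x + y for x, y in zip(a, b)]


def taylor_value(a, k):
    """Constant term of the k-th formal derivative, i.e. (D^k f)(0)."""
    for _ in range(k):
        a = derivative(a)
    return a[0]


f = [1, 2, 3, 4, 5]
g = [2, 0, 1, 0, 3]
N = len(f)

# Product rule D(fg) = f*Dg + Df*g, compared on the N - 1 coefficients
# that the truncation determines exactly.
lhs = derivative(mul(f, g, N))
rhs = add(mul(f, derivative(g), N - 1), mul(derivative(f), g, N - 1))
print(lhs == rhs)                                   # True

# Taylor-type identity (D^k f)(0) = k! * a_k
print(taylor_value(f, 3) == factorial(3) * f[3])    # True
```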

Formal antidifferentiation

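If $R$ is a ring with characteristic zero and the nonzero integers are invertible in $R$, then given a formal power series

$$f = \sum_{n\geq 0} a_n X^n \in R[[X]],$$

we define its formal antiderivative or formal indefinite integral by

$$D^{-1} f = \int f\, dX = C + \sum_{n\geq 0} a_n \frac{X^{n+1}}{n+1}$$

for any constant $C \in R$.

This operation is $R$-linear:

$$D^{-1}(af + bg) = a \cdot D^{-1}f + b \cdot D^{-1}g$$

for any $a, b$ in $R$ and any $f, g$ in $R[[X]]$. Additionally, the formal antiderivative has many of the properties of the usual antiderivative of calculus. For example, the formal antiderivative is the right inverse of the formal derivative:

$$D(D^{-1}(f)) = f$$

for any $f \in R[[X]]$.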

Properties

Algebraic properties of the formal power series ring

$R[[X]]$ is an associative algebra over $R$ which contains the ring $R[X]$ of polynomials over $R$; the polynomials correspond to the sequences which end in zeros.

The Jacobson radical of $R[[X]]$ is the ideal generated by $X$ and the Jacobson radical of $R$; this is implied by the element invertibility criterion discussed above.

The maximal ideals of $R[[X]]$ all arise from those in $R$ in the following manner: an ideal $M$ of $R[[X]]$ is maximal if and only if $M \cap R$ is a maximal ideal of $R$ and $M$ is generated as an ideal by $X$ and $M \cap R$.

Several algebraic properties of $R$ are inherited by $R[[X]]$:

- if $R$ is a local ring, then so is $R[[X]]$ (with the set of non-units the unique maximal ideal),

- if $R$ is Noetherian, then so is $R[[X]]$ (a version of the Hilbert basis theorem),

- if $R$ is an integral domain, then so is $R[[X]]$, and

- if $K$ is a field, then $K[[X]]$ is a discrete valuation ring.

Topological properties of the formal power series ring

The metric space $(R[[X]], d)$ is complete.

The ring $R[[X]]$ is compact if and only if $R$ is finite. This follows from Tychonoff's theorem and the characterisation of the topology on $R[[X]]$ as a product topology.

Weierstrass preparation

The ring of formal power series with coefficients in a complete local ring satisfies the Weierstrass preparation theorem.

Applications

Formal power series can be used to solve recurrences occurring in number theory and combinatorics. For an example involving finding a closed form expression for the Fibonacci numbers, see the article on Examples of generating functions.

One can use formal power series to prove several relations familiar from analysis in a purely algebraic setting. Consider for instance the following elements of $\mathbb{Q}[[X]]$:

$$\sin(X) := \sum_{n\geq 0} \frac{(-1)^n}{(2n+1)!} X^{2n+1}, \qquad \cos(X) := \sum_{n\geq 0} \frac{(-1)^n}{(2n)!} X^{2n}.$$

Then one can show that

$$\sin^2(X) + \cos^2(X) = 1, \qquad \frac{\partial}{\partial X} \sin(X) = \cos(X), \qquad \sin(X + Y) = \sin(X)\cos(Y) + \cos(X)\sin(Y).$$

The last one is valid in the ring $\mathbb{Q}[[X, Y]]$.
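
The first two relations can be checked coefficient by coefficient up to any chosen order, using exact rationals. The sketch below assumes the usual series for sine and cosine; the names `sin_x`, `cos_x` and the cut-off `N` are chosen for this illustration. A finite check of this kind is of course not a proof, only a sanity test of the algebra.

```python
from fractions import Fraction
from math import factorial

N = 12  # number of coefficients kept (degrees 0 .. 11)

# sin has the odd-degree terms, cos the even-degree ones; in both cases the
# sign of the degree-n term is (-1)**(n // 2).
sin_x = [Fraction((-1) ** (n // 2), factorial(n)) if n % 2 == 1 else Fraction(0)
         for n in range(N)]
cos_x = [Fraction((-1) ** (n // 2), factorial(n)) if n % 2 == 0 else Fraction(0)
         for n in range(N)]


def mul(a, b, terms):
    """Cauchy product truncated to `terms` coefficients."""
    return [sum(a[k] * b[m - k] for k in range(m + 1)) for m in range(terms)]


def derivative(a):
    """Formal derivative of a truncated series."""
    return [n * a[n] for n in range(1, len(a))]


pythagoras = [s + c for s, c in zip(mul(sin_x, sin_x, N), mul(cos_x, cos_x, N))]
print(pythagoras == [1] + [0] * (N - 1))    # True: sin^2 + cos^2 = 1 up to X^11
print(derivative(sin_x) == cos_x[:N - 1])   # True: D sin = cos up to X^10
```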

For $K$ a field, the ring $K[[X_1, \ldots, X_r]]$ is often used as the "standard, most general" complete local ring over $K$ in algebra.

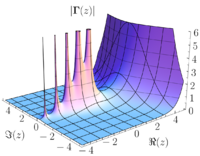

Interpreting formal power series as functions

In mathematical analysis, every convergent power series defines a function with values in the real or complex numbers. Formal power series over certain special rings can also be interpreted as functions, but one has to be careful with the domain and codomain. Let

$$f = \sum a_n X^n \in R[[X]],$$

and suppose $S$ is a commutative associative algebra over $R$, $I$ is an ideal in $S$ such that the $I$-adic topology on $S$ is complete, and $x$ is an element of $I$. Define:

$$f(x) = \sum_{n\geq 0} a_n x^n.$$

This series is guaranteed to converge in $S$ given the above assumptions on $x$. Furthermore, we have

$$(f + g)(x) = f(x) + g(x)$$

and

$$(fg)(x) = f(x) g(x).$$

Unlike in the case of bona fide functions, these formulas are not definitions but have to be proved.

Since the topology on $R[[X]]$ is the $(X)$-adic topology and $R[[X]]$ is complete, we can in particular apply power series to other power series, provided that the arguments don't have constant coefficients (so that they belong to the ideal $(X)$): $f(0)$, $f(X^2 - X)$ and $f((1 - X)^{-1} - 1)$ are all well defined for any formal power series $f \in R[[X]]$.

With this formalism, we can give an explicit formula for the multiplicative inverse of a power series $f$ whose constant coefficient $a = f(0)$ is invertible in $R$:

$$f^{-1} = \sum_{n\geq 0} a^{-n-1} (a - f)^n.$$

If the formal power series $g(X)$ with $g(0) = 0$ is given implicitly by the equation

$$f(g) = X,$$

where $f$ is a known power series with $f(0) = 0$, then the coefficients of $g$ can be explicitly computed using the Lagrange inversion formula.

Generalizations

Formal Laurent series

The formal Laurent series over a ring $R$ are defined in a similar way to a formal power series, except that we also allow finitely many terms of negative degree. That is, they are the series that can be written as

$$f = \sum_{n=N}^{\infty} a_n X^n$$

for some integer $N$, so that there are only finitely many negative $n$ with $a_n \neq 0$. (This is different from the classical Laurent series of complex analysis.) For a non-zero formal Laurent series, the minimal integer $n$ such that $a_n \neq 0$ is called the order of $f$ and is denoted $\operatorname{ord}(f)$. (The order of the zero series is $+\infty$.)

Multiplication of such series can be defined. Indeed, similarly to the definition for formal power series, the coefficient of $X^k$ in the product of two series with respective sequences of coefficients $\{a_n\}$ and $\{b_n\}$ is $\sum_{i\in\mathbb{Z}} a_i b_{k-i}$. This sum has only finitely many nonzero terms because of the assumed vanishing of coefficients at sufficiently negative indices.

The formal Laurent series form the ring of formal Laurent series over $R$, denoted by $R((X))$.[a] It is equal to the localization of the ring $R[[X]]$ of formal power series with respect to the set of positive powers of $X$. If $R = K$ is a field, then $K((X))$ is in fact a field, which may alternatively be obtained as the field of fractions of the integral domain $K[[X]]$.

As with $R[[X]]$, the ring $R((X))$ of formal Laurent series may be endowed with the structure of a topological ring by introducing the metric

$$d(f, g) = 2^{-\operatorname{ord}(f - g)}.$$

One may define formal differentiation for formal Laurent series in the natural (term-by-term) way. Precisely, the formal derivative of the formal Laurent series $f$ above is $f' = Df = \sum_{n\in\mathbb{Z}} n a_n X^{n-1}$, which is again a formal Laurent series. If $f$ is a formal Laurent series of nonzero order with coefficients in a field of characteristic 0, then one has $\operatorname{ord}(f') = \operatorname{ord}(f) - 1$. However, in general this is not the case, since the factor $n$ for the lowest order term could be equal to 0 in $R$.

Formal residue

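Assume that $K$ is a field of characteristic 0. Then the map

$$D : K((X)) \to K((X))$$

is a $K$-derivation that satisfies

$$\ker D = K, \qquad \operatorname{im} D = \{f \in K((X)) : [X^{-1}] f = 0\}.$$

The latter shows that the coefficient of $X^{-1}$ in $f$ is of particular interest; it is called the formal residue of $f$ and denoted $\operatorname{Res}(f)$. The map

$$\operatorname{Res} : K((X)) \to K$$

is $K$-linear, and by the above observation one has an exact sequence

$$0 \to K \to K((X)) \overset{D}{\longrightarrow} K((X)) \overset{\operatorname{Res}}{\longrightarrow} K \to 0.$$

Some rules of calculus. As a quite direct consequence of the above definition, and of the rules of formal derivation, one has, for any $f, g \in K((X))$:

- (i) $\operatorname{Res}(f') = 0$;

- (ii) $\operatorname{Res}(fg') = -\operatorname{Res}(f'g)$;

- (iii) $\operatorname{Res}(f'/f) = \operatorname{ord}(f)$ for all $f \neq 0$;

- (iv) $\operatorname{Res}((g \circ f) f') = \operatorname{ord}(f) \operatorname{Res}(g)$ if $\operatorname{ord}(f) > 0$;

- (v) $[X^n] f(X) = \operatorname{Res}(X^{-n-1} f(X))$.

Property (i) is part of the exact sequence above. Property (ii) follows from (i) as applied to $(fg)' = f'g + fg'$. Property (iii): any $f$ can be written in the form $f = X^m g$, with $m = \operatorname{ord}(f)$ and $\operatorname{ord}(g) = 0$; then $f'/f = mX^{-1} + g'/g$. Since $\operatorname{ord}(g) = 0$, $g$ is invertible in $K[[X]]$, so $g'/g \in K[[X]] \subset \operatorname{im}(D) = \ker(\operatorname{Res})$, whence $\operatorname{Res}(f'/f) = m$. Property (iv): since $\operatorname{im}(D) = \ker(\operatorname{Res})$, we can write $g = g_{-1}X^{-1} + G'$ with $G \in K((X))$. Consequently, $(g \circ f) f' = g_{-1} f^{-1} f' + (G' \circ f) f' = g_{-1} f'/f + (G \circ f)'$, and (iv) follows from (i) and (iii). Property (v) is clear from the definition.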

The Lagrange inversion formula

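As mentioned above, any formal series $f \in K[[X]]$ with $f_0 = 0$ and $f_1 \neq 0$ has a composition inverse $g \in K[[X]]$. The following relation between the coefficients of $g^n$ and $f^{-k}$ holds ("Lagrange inversion formula"):

$$k [X^k] g^n = n [X^{-n}] f^{-k}.$$

In particular, for $n = 1$ and all $k \geq 1$,

$$[X^k] g = \frac{1}{k} \operatorname{Res}\left(\frac{1}{f^k}\right).$$

Since the proof of the Lagrange inversion formula is a very short computation, it is worth reporting it here. Noting that $\operatorname{ord}(f) = 1$, we can apply the rules of calculus above, crucially Rule (iv) substituting $X \rightsquigarrow f(X)$, to get:

$$k [X^k] g^n \overset{(v)}{=} k \operatorname{Res}\left(g^n X^{-k-1}\right) \overset{(iv)}{=} k \operatorname{Res}\left(X^n f^{-k-1} f'\right) = -\operatorname{Res}\left(X^n (f^{-k})'\right) \overset{(ii)}{=} \operatorname{Res}\left((X^n)' f^{-k}\right) = n \operatorname{Res}\left(X^{n-1} f^{-k}\right) \overset{(v)}{=} n [X^{-n}] f^{-k}.$$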
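
The case $n = 1$ of the inversion formula can be turned into a small computation. Writing $f = X \cdot u$ with $u(0) = f_1 \neq 0$, the residue of $f^{-k}$ is the coefficient of $X^{k-1}$ in $(X/f)^k = u^{-k}$, so $[X^k] g = \tfrac{1}{k} [X^{k-1}] (X/f)^k$. The sketch below implements this over the rationals; the helper names are hypothetical.

```python
from fractions import Fraction
from math import factorial


def mul(a, b, terms):
    """Cauchy product truncated to `terms` coefficients."""
    return [sum(a[k] * b[m - k] for k in range(m + 1)) for m in range(terms)]


def inverse(a, terms):
    """Multiplicative inverse of a truncated series with a[0] != 0."""
    a = list(a) + [Fraction(0)] * terms
    b = [1 / a[0]]
    for n in range(1, terms):
        b.append(-sum(a[i] * b[n - i] for i in range(1, n + 1)) / a[0])
    return b


def lagrange_inverse(f, terms):
    """Coefficients g_0 .. g_{terms-1} of the composition inverse of f,
    using [X^k] g = (1/k) [X^(k-1)] (X/f)^k."""
    f = [Fraction(x) for x in f] + [Fraction(0)] * (terms + 1)
    if f[0] != 0 or f[1] == 0:
        raise ValueError("need f_0 = 0 and f_1 != 0")
    x_over_f = inverse(f[1:terms + 1], terms)     # f = X*u, so X/f = 1/u
    g = [Fraction(0)] * terms
    power = [Fraction(1)] + [Fraction(0)] * (terms - 1)   # (X/f)^0
    for k in range(1, terms):
        power = mul(power, x_over_f, terms)       # now (X/f)^k
        g[k] = power[k - 1] / k
    return g


print([int(c) for c in lagrange_inverse([0, 1, 1], 6)])   # [0, 1, -1, 2, -5, 14]

# f = X * exp(-X): the inverse has coefficients k^(k-1) / k!
f = [Fraction(0)] + [Fraction((-1) ** (n - 1), factorial(n - 1)) for n in range(1, 7)]
print([str(c) for c in lagrange_inverse(f, 6)])
```

For $f = X e^{-X}$ the computed coefficients are $k^{k-1}/k!$, the classical expansion of the tree function that is closely related to the Lambert function.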

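Generalizations. One may observe that the above computation can be repeated plainly in more general settings than $K((X))$: a generalization of the Lagrange inversion formula is already available working in the $\mathbb{C}((X))$-modules $X^{\alpha}\mathbb{C}((X))$, where $\alpha$ is a complex exponent. As a consequence, if $f$ and $g$ are as above, with $f_1 = g_1 = 1$, we can relate the complex powers of $f/X$ and $g/X$: precisely, if $\alpha$ and $\beta$ are non-zero complex numbers with negative integer sum, $m = -\alpha - \beta \in \mathbb{N}$, then

$$\frac{1}{\alpha} [X^m] \left(\frac{f}{X}\right)^{\alpha} = -\frac{1}{\beta} [X^m] \left(\frac{g}{X}\right)^{\beta}.$$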

For instance, this way one finds the power series for complex powers of the Lambert function.

Power series in several variables

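Formal power series in any number of indeterminates (even infinitely many) can be defined. If $I$ is an index set and $X_I$ is the set of indeterminates $X_i$ for $i \in I$, then a monomial $X^{\alpha}$ is any finite product of elements of $X_I$ (repetitions allowed); a formal power series in $X_I$ with coefficients in a ring $R$ is determined by any mapping from the set of monomials $X^{\alpha}$ to a corresponding coefficient $c_{\alpha}$, and is denoted $\sum_{\alpha} c_{\alpha} X^{\alpha}$. The set of all such formal power series is denoted $R[[X_I]]$, and it is given a ring structure by defining

$$\left(\sum_{\alpha} c_{\alpha} X^{\alpha}\right) + \left(\sum_{\alpha} d_{\alpha} X^{\alpha}\right) = \sum_{\alpha} (c_{\alpha} + d_{\alpha}) X^{\alpha}$$

and

$$\left(\sum_{\alpha} c_{\alpha} X^{\alpha}\right) \times \left(\sum_{\beta} d_{\beta} X^{\beta}\right) = \sum_{\alpha, \beta} c_{\alpha} d_{\beta} X^{\alpha + \beta}.$$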

Topology

The topology on $R[[X_I]]$ is such that a sequence of its elements converges only if for each monomial $X^{\alpha}$ the corresponding coefficient stabilizes. If $I$ is finite, then this is the $J$-adic topology, where $J$ is the ideal of $R[[X_I]]$ generated by all the indeterminates in $X_I$. This does not hold if $I$ is infinite. For example, if $I = \mathbb{N}$, then the sequence $(f_n)_{n\in\mathbb{N}}$ with $f_n = X_n + X_{n+1} + X_{n+2} + \cdots$ does not converge with respect to any $J$-adic topology on $R$, but clearly for each monomial the corresponding coefficient stabilizes.

As remarked above, the topology on a repeated formal power series ring like $R[[X]][[Y]]$ is usually chosen in such a way that it becomes isomorphic as a topological ring to $R[[X, Y]]$.

Operations

All of the operations defined for series in one variable may be extended to the several variables case.

- A series is invertible if and only if its constant term is invertible in R.

- The composition f(g(X)) of two series f and g is defined if f is a series in a single indeterminate, and the constant term of g is zero. For a series f in several indeterminates a form of "composition" can similarly be defined, with as many separate series in the place of g as there are indeterminates.

In the case of the formal derivative, there are now separate partial derivative operators, which differentiate with respect to each of the indeterminates. They all commute with each other.

Universal property

In the several variables case, the universal property characterizing $R[[X_1, \ldots, X_r]]$ becomes the following. If $S$ is a commutative associative algebra over $R$, if $I$ is an ideal of $S$ such that the $I$-adic topology on $S$ is complete, and if $x_1, \ldots, x_r$ are elements of $I$, then there is a unique map $\Phi : R[[X_1, \ldots, X_r]] \to S$ with the following properties:

- Φ is an R-algebra homomorphism

- Φ is continuous

- Φ(Xi) = xi for i = 1, …, r.

Non-commuting variables

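The several variable case can be further generalised by taking non-commuting variables $X_i$ for $i \in I$, where $I$ is an index set; a monomial $X^{\alpha}$ is then any word in the $X_I$. A formal power series in $X_I$ with coefficients in a ring $R$ is determined by any mapping from the set of monomials $X^{\alpha}$ to a corresponding coefficient $c_{\alpha}$, and is denoted $\sum_{\alpha} c_{\alpha} X^{\alpha}$. The set of all such formal power series is denoted R«XI», and it is given a ring structure by defining addition pointwise

$$\sum_{\alpha} c_{\alpha} X^{\alpha} + \sum_{\alpha} d_{\alpha} X^{\alpha} = \sum_{\alpha} (c_{\alpha} + d_{\alpha}) X^{\alpha}$$

and multiplication by

$$\left(\sum_{\alpha} c_{\alpha} X^{\alpha}\right) \times \left(\sum_{\beta} d_{\beta} X^{\beta}\right) = \sum_{\alpha, \beta} c_{\alpha} d_{\beta}\, X^{\alpha} \cdot X^{\beta},$$

where · denotes concatenation of words. These formal power series over $R$ form the Magnus ring over $R$.[3][4]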

On a semiring

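Let $\Sigma$ be an alphabet and $S$ a semiring. The set of formal power series over $S$ supported on the language $\Sigma^*$ is denoted by $S\langle\langle \Sigma^* \rangle\rangle$. It consists of all mappings $r : \Sigma^* \to S$, where $\Sigma^*$ is the free monoid generated by the non-empty set $\Sigma$.

The elements of $S\langle\langle \Sigma^* \rangle\rangle$ can be written as formal sums

$$r = \sum_{w \in \Sigma^*} (r, w)\, w,$$

where $(r, w)$ denotes the value of $r$ at the word $w \in \Sigma^*$. The elements $(r, w) \in S$ are called the coefficients of $r$.

For $r \in S\langle\langle \Sigma^* \rangle\rangle$ the support of $r$ is the set

$$\operatorname{supp}(r) = \{w \in \Sigma^* \mid (r, w) \neq 0\}.$$

A series where every coefficient is either $0$ or $1$ is called the characteristic series of its support.

The subset of $S\langle\langle \Sigma^* \rangle\rangle$ consisting of all series with a finite support is denoted by $S\langle \Sigma^* \rangle$; its elements are called polynomials.

For $r_1, r_2 \in S\langle\langle \Sigma^* \rangle\rangle$ and $s \in S$, the sum $r_1 + r_2$ is defined by

$$(r_1 + r_2, w) = (r_1, w) + (r_2, w).$$

The (Cauchy) product $r_1 \cdot r_2$ is defined by

$$(r_1 \cdot r_2, w) = \sum_{w_1 w_2 = w} (r_1, w_1)(r_2, w_2).$$

The Hadamard product $r_1 \odot r_2$ is defined by

$$(r_1 \odot r_2, w) = (r_1, w)(r_2, w).$$

The products by a scalar, $s r_1$ and $r_1 s$, are defined by

- $(s r_1, w) = s (r_1, w)$ and $(r_1 s, w) = (r_1, w) s$, respectively.

With these operations $(S\langle\langle \Sigma^* \rangle\rangle, +, \cdot, 0, \varepsilon)$ and $(S\langle \Sigma^* \rangle, +, \cdot, 0, \varepsilon)$ are semirings, where $\varepsilon$ is the empty word in $\Sigma^*$.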

These formal power series are used to model the behavior of weighted automata in theoretical computer science, where the coefficient $(r, w)$ of the series is taken to be the weight of a path with label $w$ in the automaton.[5]
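
For series with finite support (the polynomials), the Cauchy and Hadamard products can be sketched with dictionaries mapping words to coefficients. The semiring is passed in as a pair of functions. The names `cauchy` and `hadamard`, and the choice of the tropical semiring $(\min, +)$ as a second example, are assumptions of this sketch.

```python
import operator


def cauchy(r1, r2, add, mul):
    """Cauchy product: the coefficient of w sums (in the semiring) the
    products (r1, w1)(r2, w2) over all factorizations w = w1 w2."""
    out = {}
    for w1, c1 in r1.items():
        for w2, c2 in r2.items():
            w = w1 + w2                      # concatenation of words
            term = mul(c1, c2)
            out[w] = add(out[w], term) if w in out else term
    return out


def hadamard(r1, r2, mul):
    """Hadamard product: coefficients multiplied word by word. Words missing
    from either support have coefficient zero and therefore drop out."""
    return {w: mul(r1[w], r2[w]) for w in r1.keys() & r2.keys()}


# Natural numbers with ordinary + and *; the empty word is "".
p = {"": 1, "a": 2}
q = {"a": 3, "b": 1}
print(cauchy(p, q, operator.add, operator.mul))   # {'a': 3, 'b': 1, 'aa': 6, 'ab': 2}
print(hadamard(p, q, operator.mul))               # {'a': 6}

# Tropical semiring: "addition" is min, "multiplication" is +.
s = {"a": 1, "aa": 5}
t = {"a": 2, "aa": 1}
print(cauchy(s, t, min, operator.add))            # {'aa': 3, 'aaa': 2, 'aaaa': 6}
```

In the tropical example the word `aaa` has two factorizations, with weights 1 + 1 and 5 + 2, and the semiring addition keeps the smaller one. This is the kind of computation behind the weighted-automaton interpretation.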

Replacing the index set by an ordered abelian group

Suppose $G$ is an ordered abelian group, meaning an abelian group with a total ordering $<$ respecting the group's addition, so that $a < b$ if and only if $a + c < b + c$ for all $c$. Let $I$ be a well-ordered subset of $G$, meaning $I$ contains no infinite descending chain. Consider the set consisting of

$$\sum_{i \in I} a_i X^i$$

for all such $I$, with $a_i$ in a commutative ring $R$, where we assume that for any index set, if all of the $a_i$ are zero then the sum is zero. Then $R((G))$ is the ring of formal power series on $G$; because of the condition that the indexing set be well-ordered the product is well-defined, and we of course assume that two elements which differ by zero are the same. Sometimes the notation $[[R^G]]$ is used to denote $R((G))$.[6]

Various properties of $R$ transfer to $R((G))$. If $R$ is a field, then so is $R((G))$. If $R$ is an ordered field, we can order $R((G))$ by setting any element to have the same sign as its leading coefficient, defined as the coefficient attached to the least element of the index set $I$ that carries a non-zero coefficient. Finally if $G$ is a divisible group and $R$ is a real closed field, then $R((G))$ is a real closed field, and if $R$ is algebraically closed, then so is $R((G))$.

This theory is due to Hans Hahn, who also showed that one obtains subfields when the number of (non-zero) terms is bounded by some fixed infinite cardinality.

Examples and related topics

- Bell series are used to study the properties of multiplicative arithmetic functions

- Formal groups are used to define an abstract group law using formal power series

- Puiseux series are an extension of formal Laurent series, allowing fractional exponents

- Rational series

See also

- Ring of restricted power series

Notes

- [a] For each nonzero formal Laurent series, the order is an integer (that is, the degrees of the terms are bounded below). But the ring $R((X))$ contains series of all orders.

References

- [1] Zwillinger, Daniel; Moll, Victor Hugo, eds. (2015). "0.313". Table of Integrals, Series, and Products (8th ed.). Academic Press, Inc. p. 18. ISBN 978-0-12-384933-5. (Several previous editions as well.)

- [2] Niven, Ivan (October 1969). "Formal Power Series". American Mathematical Monthly 76 (8): 871–889. doi:10.1080/00029890.1969.12000359.

- [3] Koch, Helmut (1997). Algebraic Number Theory. Encycl. Math. Sci. 62 (2nd printing of 1st ed.). Springer-Verlag. p. 167. ISBN 978-3-540-63003-6.

- [4] Moran, Siegfried (1983). The Mathematical Theory of Knots and Braids: An Introduction. North-Holland Mathematics Studies. 82. Elsevier. p. 211. ISBN 978-0-444-86714-8.

- [5] Droste, M.; Kuich, W. (2009). "Semirings and Formal Power Series". Handbook of Weighted Automata, 3–28. doi:10.1007/978-3-642-01492-5_1, p. 12.

- [6] Shamseddine, Khodr; Berz, Martin (2010). "Analysis on the Levi-Civita Field: A Brief Overview". Contemporary Mathematics 508: 215–237. doi:10.1090/conm/508/10002. ISBN 9780821847404. http://www.physics.umanitoba.ca/~khodr/Publications/RS-Overview-offprints.pdf.

- Berstel, Jean; Reutenauer, Christophe (2011). Noncommutative rational series with applications. Encyclopedia of Mathematics and Its Applications. 137. Cambridge: Cambridge University Press. ISBN 978-0-521-19022-0.

- Nicolas Bourbaki: Algebra, IV, §4. Springer-Verlag 1988.

Further reading

- W. Kuich. Semirings and formal power series: Their relevance to formal languages and automata theory. In G. Rozenberg and A. Salomaa, editors, Handbook of Formal Languages, volume 1, Chapter 9, pages 609–677. Springer, Berlin, 1997, ISBN 3-540-60420-0

- Droste, M., & Kuich, W. (2009). Semirings and Formal Power Series. Handbook of Weighted Automata, 3–28. doi:10.1007/978-3-642-01492-5_1

- Arto Salomaa (1990). "Formal Languages and Power Series". in Jan van Leeuwen. Formal Models and Semantics. Handbook of Theoretical Computer Science. B. Elsevier. pp. 103–132. ISBN 0-444-88074-7.
