Graduated optimization

From HandWiki - Reading time: 3 min

From HandWiki - Reading time: 3 min

Graduated optimization is a global optimization technique that attempts to solve a difficult optimization problem by initially solving a greatly simplified problem, and progressively transforming that problem (while optimizing) until it is equivalent to the difficult optimization problem.[1][2][3]

Technique description

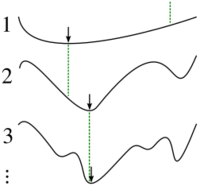

Graduated optimization is an improvement to hill climbing that enables a hill climber to avoid settling into local optima.[4] It breaks a difficult optimization problem into a sequence of optimization problems, such that the first problem in the sequence is convex (or nearly convex), the solution to each problem gives a good starting point to the next problem in the sequence, and the last problem in the sequence is the difficult optimization problem that it ultimately seeks to solve. Often, graduated optimization gives better results than simple hill climbing. Further, when certain conditions exist, it can be shown to find an optimal solution to the final problem in the sequence. These conditions are:

- The first optimization problem in the sequence can be solved given the initial starting point.

- The locally convex region around the global optimum of each problem in the sequence includes the point that corresponds to the global optimum of the previous problem in the sequence.

It can be shown inductively that if these conditions are met, then a hill climber will arrive at the global optimum for the difficult problem. Unfortunately, it can be difficult to find a sequence of optimization problems that meet these conditions. Often, graduated optimization yields good results even when the sequence of problems cannot be proven to strictly meet all of these conditions.

Some examples

Graduated optimization is commonly used in image processing for locating objects within a larger image. This problem can be made to be more convex by blurring the images. Thus, objects can be found by first searching the most-blurred image, then starting at that point and searching within a less-blurred image, and continuing in this manner until the object is located with precision in the original sharp image. The proper choice of the blurring operator depends on the geometric transformation relating the object in one image to the other.[5]

Graduated optimization can be used in manifold learning. The Manifold Sculpting algorithm, for example, uses graduated optimization to seek a manifold embedding for non-linear dimensionality reduction.[6] It gradually scales variance out of extra dimensions within a data set while optimizing in the remaining dimensions. It has also been used to calculate conditions for fractionation with tumors,[7] for object tracking in computer vision,[8] and other purposes.

A thorough review of the method and its applications can be found in.[3]

Related optimization techniques

Simulated annealing is closely related to graduated optimization. Instead of smoothing the function over which it is optimizing, simulated annealing randomly perturbs the current solution by a decaying amount, which may have a similar effect.[citation needed] Because simulated annealing relies on random sampling to find improvements, however, its computation complexity is exponential in the number of dimensions being optimized.[citation needed] By contrast, graduated optimization smooths the function being optimized, so local optimization techniques that are efficient in high-dimensional space (such as gradient-based techniques, hill climbers, etc.) may still be used.

See also

References

- ↑ Thacker, Neil; Cootes, Tim (1996). "Graduated Non-Convexity and Multi-Resolution Optimization Methods". Vision Through Optimization. http://homepages.inf.ed.ac.uk/rbf/CVonline/LOCAL_COPIES/BMVA96Tut/node29.html.

- ↑ Blake, Andrew; Zisserman, Andrew (1987). Visual Reconstruction. MIT Press. ISBN 0-262-02271-0. http://research.microsoft.com/en-us/um/people/ablake/papers/VisualReconstruction/.[page needed]

- ↑ 3.0 3.1 Hossein Mobahi, John W. Fisher III. On the Link Between Gaussian Homotopy Continuation and Convex Envelopes, In Lecture Notes in Computer Science (EMMCVPR 2015), Springer, 2015.

- ↑ Hulse, Daniel; Tumer, Kagan; Hoyle, Christopher; Tumer, Irem (February 2019). "Modeling multidisciplinary design with multiagent learning" (in en). AI EDAM 33: pp. 85–99. doi:10.1017/S0890060418000161. https://www.cambridge.org/core/journals/ai-edam/article/modeling-multidisciplinary-design-with-multiagent-learning/137CEF0A3E1BC783F37AC94FCCDDF0C6.

- ↑ Hossein Mobahi, C. Lawrence Zitnick, Yi Ma. Seeing through the Blur, IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2012.

- ↑ Gashler, M.; Ventura, D.; Martinez, T. (2008). "Iterative Non-linear Dimensionality Reduction with Manifold Sculpting". in Platt, J. C.; Koller, D.; Singer, Y. et al.. Advances in Neural Information Processing Systems 20. Cambridge, MA: MIT Press. pp. 513–20. http://axon.cs.byu.edu/papers/gashler2007nips.pdf.

- ↑ Afanasjev, BP; Akimov, AA; Kozlov, AP; Berkovic, AN (1989). "Graduated optimization of fractionation using a 2-component model". Radiobiologia, Radiotherapia 30 (2): 131–5. PMID 2748803.

- ↑ Ming Ye; Haralick, R.M.; Shapiro, L.G. (2003). "Estimating piecewise-smooth optical flow with global matching and graduated optimization". IEEE Transactions on Pattern Analysis and Machine Intelligence 25 (12): 1625–30. doi:10.1109/TPAMI.2003.1251156.

|

KSF

KSF