History of free and open-source software

From HandWiki - Reading time: 33 min

In the 1950s and 1960s, computer operating software and compilers were delivered as a part of hardware purchases without separate fees. At the time, source code, the human-readable form of software, was generally distributed with the software, providing the ability to fix bugs or add new functions.[1] Universities were early adopters of computing technology. Many of the modifications developed by universities were openly shared, in keeping with the academic principles of sharing knowledge, and organizations sprang up to facilitate sharing. As large-scale operating systems matured, fewer organizations allowed modifications to the operating software, and eventually such operating systems were closed to modification. However, utilities and other added-function applications are still shared and new organizations have been formed to promote the sharing of software.

Sharing techniques before software

The concept of free sharing of technological information existed long before computers. For example, in the early years of automobile development, one enterprise owned the rights to a 2-cycle gasoline engine patent originally filed by George B. Selden.[2] By controlling this patent, they were able to monopolize the industry and force car manufacturers to adhere to their demands, or risk a lawsuit. In 1911, independent automaker Henry Ford won a challenge to the Selden patent. The result was that the Selden patent became virtually worthless and a new association (which would eventually become the Motor Vehicle Manufacturers Association) was formed.[2] The new association instituted a cross-licensing agreement among all US auto manufacturers: although each company would develop technology and file patents, these patents were shared openly and without the exchange of money among all the manufacturers.[2] By the time the US entered World War II, 92 Ford patents and 515 patents from other companies were being shared among these manufacturers, without any exchange of money (or lawsuits).[2]

Free software before the 1980s

In the 1950s and into the 1960s almost all software was produced by academics and corporate researchers working in collaboration,[3] often shared as public-domain software. As such, it was generally distributed under the principles of openness and cooperation long established in the fields of academia, and was not seen as a commodity in itself. Such communal behavior later became a central element of the so-called hacking culture (a term with a positive connotation among open-source programmers). At this time, source code, the human-readable form of software, was generally distributed with the software machine code because users frequently modified the software themselves, both because it would not run on different hardware or operating systems without modification and in order to fix bugs or add new functions.[4][5] The first example of free and open-source software is believed to be the A-2 system, developed at the UNIVAC division of Remington Rand in 1953,[6] which was released to customers with its source code. They were invited to send their improvements back to UNIVAC.[7] Later, almost all IBM mainframe software was also distributed with source code included. User groups such as that of the IBM 701, called SHARE, and that of Digital Equipment Corporation (DEC), called DECUS, were formed to facilitate the exchange of software. The SHARE Operating System, originally developed by General Motors, was distributed by SHARE for the IBM 709 and 7090 computers. Some university computer labs even had a policy requiring that all programs installed on the computer come with published source-code files.[8]

In 1969 the Advanced Research Projects Agency Network (ARPANET), a transcontinental, high-speed computer network, was constructed. The network (later succeeded by the Internet) simplified the exchange of software code.[4]

Some free software which was developed in the 1970s continues to be developed and used, such as TeX (developed by Donald Knuth)[9] and SPICE.[10]

Initial decline of free software

By the late 1960s change was coming: as operating systems and programming language compilers evolved, software production costs were dramatically increasing relative to hardware. A growing software industry was competing with the hardware manufacturers' bundled software products (the cost of bundled products was included in the hardware cost), leased machines required software support while providing no revenue for software, and some customers, able to better meet their own needs,[11] did not want the costs of the manufacturer's software to be bundled with hardware product costs. In the United States vs. IBM antitrust suit, filed 17 January 1969, the U.S. government charged that bundled software was anticompetitive.[12] While some software continued to come at no cost, there was a growing amount of software that was for sale only under restrictive licenses.

In the early 1970s AT&T distributed early versions of Unix at no cost to the government and academic researchers, but these versions did not come with permission to redistribute or to distribute modified versions, and were thus not free software in the modern meaning of the phrase. After Unix became more widespread in the early 1980s, AT&T stopped the free distribution and charged for system patches. As it was quite difficult to switch to another architecture, most researchers paid for a commercial license.

Software was not considered copyrightable before the 1974 US Commission on New Technological Uses of Copyrighted Works (CONTU) decided that "computer programs, to the extent that they embody an author's original creation, are proper subject matter of copyright".[13][14] Therefore, software had no licenses attached and was shared as public-domain software, typically with source code. The CONTU decision plus later court decisions such as Apple v. Franklin in 1983 for object code, gave computer programs the copyright status of literary works and started the licensing of software and the shrink-wrap closed source software business model.[15]

In the late 1970s and early 1980s, computer vendors and software-only companies began routinely charging for software licenses, marketing software as "Program Products" and imposing legal restrictions on new software developments, now seen as assets, through copyrights, trademarks, and leasing contracts. In 1976 Bill Gates wrote an essay entitled "Open Letter to Hobbyists", in which he expressed dismay at the widespread sharing of Microsoft's product Altair BASIC by hobbyists without paying its licensing fee. In 1979, AT&T began to enforce its licenses when the company decided it might profit by selling the Unix system.[16] In an announcement letter dated 8 February 1983 IBM inaugurated a policy of no longer distributing sources with purchased software.[17][18]

To increase revenues, a general trend began to no longer distribute source code (easily readable by programmers), and only distribute the executable machine code that was compiled from the source code. One person especially distressed by this new practice was Richard Stallman. He was concerned that he could no longer study or further modify programs initially written by others. Stallman viewed this practice as ethically wrong. In response, he founded the GNU Project in 1983 so that people could use computers using only free software.[1] He established a non-profit organization, the Free Software Foundation, in 1985, to more formally organize the project. He invented copyleft, a legal mechanism to preserve the "free" status of a work subject to copyright, and implemented this in the GNU General Public License. Copyleft licenses allow authors to grant a number of rights to users (including rights to use a work without further charges, and rights to obtain, study and modify the program's complete corresponding source code) but require derivatives to remain under the same license or one without any additional restrictions. Since derivatives include combinations with other original programs, downstream authors are prevented from turning the initial work into proprietary software, and invited to contribute to the copyleft commons.[4] Later, variations of such licenses were developed by others.

1980s and 1990s

Informal software sharing continues

However, there were still those who wished to share their source code with other programmers and/or with users on a free basis, then called "hobbyists" and "hackers".[19] Before the introduction and widespread public use of the internet, there were several alternative ways available to do this, including listings in computer magazines (like Dr. Dobb's Journal, Creative Computing, SoftSide, Compute!, Byte, etc.) and in computer programming books, like the bestseller BASIC Computer Games.[20] Though still copyrighted, annotated source code for key components of Atari 8-bit family system software was published in mass market books, including The Atari BASIC Source Book[21] (full source for Atari BASIC) and Inside Atari DOS (full source for Atari DOS).[22]

SHARE program library

The SHARE users group, founded in 1955, began collecting and distributing free software. The first documented distribution from SHARE was dated 17 October 1955.[23] The "SHARE Program Library Agency" (SPLA) distributed information and software, notably on magnetic tape.

DECUS tapes

In the early 1980s, the so-called DECUS tapes[24] were a worldwide system for the transmission of free software for users of DEC equipment. Operating systems were usually proprietary software, but many tools like the TECO editor, Runoff text formatter, or List file listing utility, etc. were developed to make users' lives easier, and distributed on the DECUS tapes. These utility packages benefited DEC, which sometimes incorporated them into new releases of their proprietary operating system. Even compilers could be distributed; for example, Ratfor (and Ratfiv) helped researchers to move from Fortran coding to structured programming (suppressing the GO TO statement). The 1981 DECUS tape was probably the most innovative, bringing the Lawrence Berkeley Laboratory Software Tools Virtual Operating System, which permitted users to use a Unix-like system on DEC 16-bit PDP-11s and 32-bit VAXes running under the VMS operating system. It was similar to the current Cygwin system for Windows. Binaries and libraries were often distributed, but users usually preferred to compile from source code.

Online software sharing communities in the 1980s

In the 1980s, parallel to the free software movement, software with source code was shared on BBS networks. This was sometimes a necessity; software written in BASIC and other interpreted languages could only be distributed as source code, and much of it was freeware. When users began gathering such source code, and setting up boards specifically to discuss its modification, this was a de facto open-source system.

One of the most obvious examples of this is one of the most-used BBS systems and networks, WWIV, developed initially in BASIC by Wayne Bell. A culture of "modding" his software, and distributing the mods, grew up so extensively that when the software was ported to first Pascal, then C++, its source code continued to be distributed to registered users, who would share mods and compile their own versions of the software. This may have contributed to it being a dominant system and network, despite being outside the Fidonet umbrella that was shared by so many other BBS makers.

Meanwhile, the advent of Usenet and UUCPNet in the early 1980s further connected the programming community and provided a simpler way for programmers to share their software and contribute to software others had written.[25]

Launch of the free software movement

In 1983, Richard Stallman launched the GNU Project to write a complete operating system free from constraints on use of its source code. Particular incidents that motivated this include a case where an annoying printer couldn't be fixed because the source code was withheld from users.[26] Stallman also published the GNU Manifesto in 1985 to outline the GNU Project's purpose and explain the importance of free software. Another probable inspiration for the GNU project and its manifesto was a disagreement between Stallman and Symbolics, Inc. over MIT's access to updates Symbolics had made to its Lisp machine, which was based on MIT code.[27] Soon after the launch, he used the existing term "free software"[19] and founded the Free Software Foundation to promote the concept. The Free Software Definition was published in February 1986.

In 1989, the first version of the GNU General Public License was published.[28] A slightly updated version 2 was published in 1991. In 1989, some GNU developers formed the company Cygnus Solutions.[29] The GNU project's kernel, later called "GNU Hurd", was continually delayed, but most other components were completed by 1991. Some of these, especially the GNU Compiler Collection, had become market leaders in their own right. The GNU Debugger and GNU Emacs were also notable successes.

Linux (1991–present)

The Linux kernel, started by Linus Torvalds, was released as freely modifiable source code in 1991. The license was not a free software license, but with version 0.12 in February 1992, Torvalds relicensed the project under the GNU General Public License.[30] Much like Unix, Torvalds' kernel attracted attention from volunteer programmers.

Until this point, the GNU project's lack of a kernel meant that no complete free software operating systems existed. The development of Torvalds' kernel closed that last gap. The combination of the almost-finished GNU operating system and the Linux kernel made the first complete free software operating system.

Among Linux distributions, Debian GNU/Linux, begun by Ian Murdock in 1993, is noteworthy for being explicitly committed to the GNU and FSF principles of free software. The Debian developers' principles are expressed in the Debian Social Contract. Since its inception, the Debian project has been closely linked with the FSF, and in fact was sponsored by the FSF for a year in 1994–1995. In 1997, former Debian project leader Bruce Perens also helped found Software in the Public Interest, a non-profit funding and support organization for various free software projects.[31]

Since 1996, the Linux kernel has included proprietary licensed components, so that it was no longer entirely free software.[32] Therefore, the Free Software Foundation Latin America released in 2008 a modified version of the Linux-kernel called Linux-libre, where all proprietary and non-free components were removed.

Many businesses offer customized Linux-based products, or distributions, with commercial support. The naming remains controversial. Referring to the complete system as simply "Linux" is common usage. However, the Free Software Foundation, and many others, advocate the use of the term "GNU/Linux", saying that it is a more accurate name for the whole operating system.[33]

Linux adoption grew among businesses and governments in the 1990s and 2000s. In the English-speaking world at least, Ubuntu and its derivatives became a relatively popular group of Linux distributions.

The free BSDs (1993–present)

When the USL v. BSDi lawsuit was settled out of court in 1993, FreeBSD and NetBSD (both derived from 386BSD) were released as free software. In 1995, OpenBSD forked from NetBSD. In 2004, DragonFly BSD forked from FreeBSD.

The dot-com years (late 1990s)

In the mid to late 1990s, when many website-based companies were starting up, free software became a popular choice for web servers. The Apache HTTP Server became the most-used web-server software, a title it still held as of 2015.[34] Systems based on a common "stack" of software with the Linux kernel at the base, Apache providing web services, the MySQL database engine for data storage, and the PHP programming language for providing dynamic pages, came to be termed LAMP systems. In actuality, the programming language that predated PHP and dominated the web in the mid and late 1990s was Perl. Web forms were processed on the server side through Common Gateway Interface scripts written in Perl.

The term "open source," as related to free software, was in common use by 1995.[35] Other recollections have it in use during the 1980s.[36]

The launch of Open Source

In 1997, Eric S. Raymond published "The Cathedral and the Bazaar", a reflective analysis of the hacker community and free software principles. The paper received significant attention in early 1998 and was one factor in motivating Netscape Communications Corporation to release their popular Netscape Communicator Internet suite as free software.[37]

Netscape's act prompted Raymond and others to look into how to bring free software principles and benefits to the commercial-software industry. They concluded that FSF's social activism was not appealing to companies like Netscape, and looked for a way to rebrand the free software movement to emphasize the business potential of the sharing of source code.[38]

The label "open source" was adopted by some people in the free software movement at a strategy session[39] held at Palo Alto, California, in reaction to Netscape's January 1998 announcement of a source code release for Navigator. The group of individuals at the session included Christine Peterson who suggested "open source",[1] Todd Anderson, Larry Augustin, Jon Hall, Sam Ockman, Michael Tiemann, and Eric S. Raymond. Over the next week, Raymond and others worked on spreading the word. Linus Torvalds gave an all-important sanction the following day. Phil Hughes offered a pulpit in Linux Journal. Richard Stallman, pioneer of the free software movement, flirted with adopting the term, but changed his mind.[39] Those people who adopted the term used the opportunity before the release of Navigator's source code to free themselves of the ideological and confrontational connotations of the term "free software". Netscape released its source code under the Netscape Public License and later under the Mozilla Public License.[40]

The term was given a big boost at an event organized in April 1998 by technology publisher Tim O'Reilly. Originally titled the "Freeware Summit" and later named the "Open Source Summit",[41] the event brought together the leaders of many of the most important free and open-source projects, including Linus Torvalds, Larry Wall, Brian Behlendorf, Eric Allman, Guido van Rossum, Michael Tiemann, Paul Vixie, Jamie Zawinski of Netscape, and Eric Raymond. At that meeting, the confusion caused by the name free software was brought up. Tiemann argued for "sourceware" as a new term, while Raymond argued for "open source". The assembled developers took a vote, and the winner was announced at a press conference that evening. Five days later, Raymond made the first public call to the free software community to adopt the new term.[42] The Open Source Initiative was formed shortly thereafter.[1][39] According to the OSI, Richard Stallman initially flirted with the idea of adopting the open source term.[43] But as the enormous success of the open source term buried Stallman's free software term and his message on social values and computer users' freedom,[44][45][46] Stallman and his FSF later strongly objected to the OSI's approach and terminology.[47] Due to Stallman's rejection of the term "open-source software", the FOSS ecosystem is divided in its terminology; see also Alternative terms for free software. For example, a 2002 FOSS developer survey revealed that 32.6% associated themselves with OSS, 48% with free software, and 19.4% in between or undecided.[48] Stallman still maintained, however, that users of each term were allies in the fight against proprietary software.

On 13 October 2000, Sun Microsystems released the StarOffice office suite as free software under the GNU Lesser General Public License. The free software version was renamed OpenOffice.org, and coexisted with StarOffice.

By the end of the 1990s, the term "open source" had gained much traction in public media[49] and acceptance in the software industry, in the context of the dot-com bubble and the open-source software driven Web 2.0.

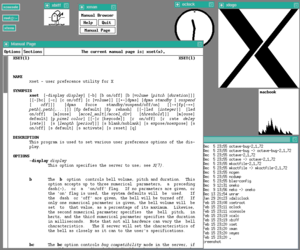

Desktop (1984–present)

The X Window System was created in 1984, and became the de facto standard window system in desktop free software operating systems by the mid-1990s. X runs as a server, and is responsible for communicating with graphics hardware on behalf of clients (which are individual software applications). It provides useful services such as having multiple virtual desktops for the same monitor, and transmitting visual data across the network so a desktop can be accessed remotely.

Initially, users or system administrators assembled their own environments from X and available window managers (which add standard controls to application windows; X itself does not do this), pagers, docks and other software. While X can be operated without a window manager, having one greatly increases convenience and ease of use.

Two key "heavyweight" desktop environments for free software operating systems emerged in the 1990s that were widely adopted: KDE and GNOME. KDE was founded in 1996 by Matthias Ettrich. At the time, he was troubled by the inconsistencies in the user interfaces of UNIX applications. He proposed a new desktop environment. He also wanted to make this desktop easy to use. His initial Usenet post spurred a lot of interest.[50]

Ettrich chose to use the Qt toolkit for the KDE project. At the time, Qt did not use a free software license. Members of the GNU project became concerned with the use of such a toolkit for building a free software desktop environment. In August 1997, two projects were started in response to KDE: the Harmony toolkit (a free replacement for the Qt libraries) and GNOME (a different desktop without Qt and built entirely on top of free software).[51] GTK+ was chosen as the base of GNOME in place of the Qt toolkit.

In November 1998, the Qt toolkit was licensed under the free/open source Q Public License (QPL) but debate continued about compatibility with the GNU General Public License (GPL). In September 2000, Trolltech made the Unix version of the Qt libraries available under the GPL, in addition to the QPL, which eliminated the concerns of the Free Software Foundation. KDE has since been split into KDE Plasma Workspaces, a desktop environment, and KDE Software Compilation, a much broader set of software that includes the desktop environment.

Both KDE and GNOME now participate in freedesktop.org, an effort launched in 2000 to standardize Unix desktop interoperability, although there is still competition between them.[52]

Since 2000, software written for X almost always uses some widget toolkit written on top of X, like Qt or GTK.

In 2010, Canonical released the first version of Unity, a replacement for the prior default desktop environment for Ubuntu, GNOME. This change to a new, under-development desktop environment and user interface was initially somewhat controversial among Ubuntu users.

In 2011, GNOME 3 was introduced, which largely discarded the desktop metaphor in favor of a more mobile-oriented interface. The ensuing controversy led Debian to consider making the Xfce environment default on Debian 7. Several independent projects were begun to keep maintaining the GNOME 2 code.

Fedora did not adopt Unity, retaining its existing offering of a choice of GNOME, KDE and LXDE with GNOME being the default, and hence Red Hat Enterprise Linux (for which Fedora acts as the "initial testing ground") did not adopt Unity either. A fork of Ubuntu was made by interested third-party developers that kept GNOME and discarded Unity. In March 2017, Ubuntu announced that it would be abandoning Unity in favour of GNOME 3 in future versions, and ceasing its efforts in developing Unity-based smartphones and tablets.[53][54]

When Google built the Linux-based Android operating system, mostly for phone and tablet devices, it replaced X with the purpose-built SurfaceFlinger.

Open-source developers also criticized X as obsolete, carrying many unused or overly complicated elements in its protocol and libraries, while missing modern functionality, e.g., compositing, screen savers, and functions provided by window managers.[55] Several attempts have been made or are underway to replace X for these reasons, including:

- The Y Window System, which had ceased development by 2006.[56]

- The Wayland project, started in 2008.

- The Mir project, started in 2013 by Canonical Ltd. to produce a replacement windowing system for Ubuntu.

Microsoft, SCO and other attacks (1998–2014)

As free software became more popular, industry incumbents such as Microsoft started to see it as a serious threat. This was shown in a leaked 1998 document, confirmed by Microsoft as genuine, which came to be called the first of the Halloween Documents.

Steve Ballmer once compared the GPL to "a cancer", but has since stopped using this analogy. Indeed, Microsoft has softened its public stance towards open source in general, with open source since becoming an important part of the Microsoft Windows ecosystem.[57] However, at the same time, behind the scenes, Microsoft's actions have been less favorable toward the open-source community.

SCO v. IBM and related bad publicity (2003–present)

In 2003, a proprietary Unix vendor and former Linux distribution vendor called SCO alleged that Unix intellectual property had been inappropriately copied into the Linux kernel, and sued IBM, claiming that it bore responsibility for this. Several related lawsuits and countersuits followed, some originating from SCO, some from others suing SCO. However, SCO's allegations lacked specificity, and while some in the media reported them as credible, many critics of SCO believed the allegations to be highly dubious at best.

Over the course of the SCO v. IBM case, it emerged that not only had SCO been distributing the Linux kernel for years under the GPL, and continued to do so (thus rendering any claims hard to sustain legally), but that SCO did not even own the copyrights to much of the Unix code that it asserted copyright over, and had no right to sue over them on behalf of the presumed owner, Novell.

This was despite SCO's CEO, Darl McBride, having made many wild and damaging claims of inappropriate appropriation to the media, many of which were later shown to be false, or legally irrelevant even if true.

The blog Groklaw was one of the most forensic examiners of SCO's claims and related events, and gained its popularity from covering this material for many years.

SCO suffered defeat after defeat in SCO v. IBM and its various other court cases, and filed for Chapter 11 bankruptcy in 2007. However, despite the courts finding that SCO did not own the copyrights (see above), and SCO's lawsuit-happy CEO Darl McBride no longer running the company, the bankruptcy trustee in charge of SCO-in-bankruptcy decided to press on with some portions he claimed remained relevant in the SCO v. IBM lawsuit. He could apparently afford to do this because SCO's main law firm in SCO v. IBM had signed an agreement at the outset to represent SCO for a fixed amount of money no matter how long the case took to complete.

In 2004, the Alexis de Tocqueville Institution (ADTI) announced its intent to publish a book, Samizdat: And Other Issues Regarding the 'Source' of Open Source Code, showing that the Linux kernel was based on code stolen from Unix, in essence using the argument that it was impossible to believe that Linus Torvalds could produce something as sophisticated as the Linux kernel. The book was never published, after it was widely criticised and ridiculed, including by people supposedly interviewed for the book. It emerged that some of the people were never interviewed, and that ADTI had not tried to contact Linus Torvalds, or ever put the allegations to him to allow a response. Microsoft attempted to draw a line under this incident, stating that it was a "distraction".

Many suspected that some or all of these legal and fear, uncertainty and doubt (FUD) attacks against the Linux kernel were covertly arranged by Microsoft, although this has never been proven. Both ADTI and SCO, however, received funding from Microsoft.

European Commission v. Microsoft (2004–2007)

In 2004 the European Commission found Microsoft guilty of anti-competitive behaviour with respect to interoperability in the workgroup software market. Microsoft had formerly settled United States v. Microsoft in 2001, in a case which charged that it illegally abused its monopoly power to force computer manufacturers to preinstall Internet Explorer.

The Commission demanded that Microsoft produce full documentation of its workgroup protocols to allow competitors to interoperate with its workgroup software, and imposed fines of 1.5 million euros per day for Microsoft's failure to comply. The commission had jurisdiction because Microsoft sells the software in question in Europe.

Microsoft, after a failed attempt to appeal the decision through the Court of Justice of the European Union, eventually complied with the demand, producing volumes of detailed documentation.

The Samba project, as Microsoft's sole remaining competitor in the workgroup software market, was the key beneficiary of this documentation.

ISO OOXML controversy (2008–present)

In 2008 the International Organization for Standardization published Microsoft's Office Open XML as an international standard, which crucially meant that it, and therefore Microsoft Office, could be used in projects where the use of open standards was mandated by law or by policy. Critics of the standardisation process, including some members of ISO national committees involved in the process itself, alleged irregularities and procedural violations in the process, and argued that the ISO should not have approved OOXML as a standard because it made reference to undocumented Microsoft Office behaviour.

As of 2012, no correct open-source implementation of OOXML existed, which critics took as validation of their remarks about OOXML being difficult to implement and underspecified. At that time, Google could not yet convert Office documents into its own proprietary Google Docs format correctly. This suggests that OOXML is not a true open standard, but rather a partial document describing what Microsoft Office does, and only involving certain file formats.

Microsoft's contributions to open source and acquisition of related projects

In 2006 Microsoft launched its CodePlex open source code hosting site, to provide hosting for open-source developers targeting Microsoft platforms. In July 2009 Microsoft even open sourced some Hyper-V-supporting patches to the Linux kernel, because they were required to do so by the GNU General Public License,[58][59] and contributed them to the mainline kernel. Note that Hyper-V itself is not open source. Microsoft's F# compiler, created in 2002, has also been released as open source under the Apache license. The F# compiler is a commercial product, as it has been incorporated into Microsoft Visual Studio, which is not open source.

Microsoft representatives have made regular appearances at various open source and Linux conferences for many years.

In 2012, Microsoft launched a subsidiary named Microsoft Open Technologies Inc., with the aim of bridging the gap between proprietary Microsoft technologies and non-Microsoft technologies by engaging with open-source standards.[60] This subsidiary was subsequently folded back into Microsoft as Microsoft's position on open source and non-Windows platforms became more favourable.

In January 2016 Microsoft released Chakra as open source under the MIT License; the code is available on GitHub.[61]

Microsoft's stance on open source has shifted as the company began endorsing more open-source software. In 2016, Steve Ballmer, former CEO of Microsoft, retracted his statement that Linux is a malignant cancer.[62] In 2017, the company became a platinum supporter of the Linux Foundation. By 2018, shortly before acquiring GitHub, Microsoft led the charts in the number of paid staff contributing to open-source projects there.[63] While Microsoft may or may not endorse the original philosophy of free software, data shows that it does endorse open source strategically.[original research?]

Critics have noted that, in March 2019, Microsoft sued Foxconn's subsidiary over a 2013 patent contract;[64] in 2013, Microsoft had announced a patent agreement with Foxconn related to Foxconn's use of the Linux-based Android and ChromeOS.[65]

Open source and programming languages

The vast majority of programming languages in use today have a free software implementation available.

Since the 1990s, the release of major new programming languages in the form of open-source compilers and/or interpreters has been the norm, rather than the exception. Examples include Python in 1991, Ruby in 1995, and Scala in 2003. In recent times, the most notable exceptions have been Java, ActionScript, C#, and Apple's Swift, which was proprietary until version 2.2. Partly compatible open-source implementations have been developed for most of these, and in the case of Java, the main open-source implementation is by now very close to the commercial version.

Java

Since its first public release in 1996, the Java platform had not been open source, although the Java source code portion of the Java runtime was included in Java Development Kits (JDKs), on a purportedly "confidential" basis, despite it being freely downloadable by the general public in most countries. Sun later expanded this "confidential" source code access to include the full source code of the Java Runtime Environment via a separate program which was open to members of the public, and later made the source of the Java compiler javac available also. Sun also made the JDK source code available confidentially to the Blackdown Java project, which was a collection of volunteers who ported early versions of the JDK to Linux, or improved on Sun's Linux ports of the JDK. However, none of this was open source, because modification and redistribution without Sun's permission were forbidden in all cases. Sun stated at the time that they were concerned about preventing forking of the Java platform.

However, several independent partial reimplementations of the Java platform had been created, many of them by the open-source community, such as the GNU Compiler for Java (GCJ). Sun never filed lawsuits against any of the open source clone projects. GCJ notably caused a bad user experience for Java on distributions that supported free software, such as Fedora and Ubuntu, which shipped GCJ at the time as their Java implementation. How to replace GCJ with the Sun JDK was a frequently asked question by users, because GCJ was an incomplete, incompatible, and buggy implementation.

In 2006 Jonathan I. Schwartz became CEO of Sun Microsystems, and signalled his commitment to open source. On 8 May 2007, Sun Microsystems released the Java Development Kit as OpenJDK under the GNU General Public License. Part of the class library (4%) could not be released as open source because it was licensed from other parties, and was instead included as binary plugs.[citation needed] Because of this, in June 2007, Red Hat launched IcedTea to replace the encumbered components with equivalents from the GNU Classpath implementation. Since the release, most of the encumbrances have been resolved, leaving only the audio engine code and colour management system (the latter is to be resolved using Little CMS).

Distributed version control (2001–present)

The first open-source distributed revision control system (DVCS) was 'tla' in 2001 (since renamed to GNU arch); however, it and its successors 'baz' and 'bzr' (Bazaar) never became very popular, and GNU arch was discontinued, although Bazaar still continues and is used by Canonical.

However, other DVCS projects sprang up, and some started to get significant adoption.

Git (2005–present)

Git, the most popular DVCS, was created in 2005.[66] Some developers of the Linux kernel, notably Linux founder Linus Torvalds, had started to use a proprietary DVCS called BitKeeper, although some other kernel developers never used it due to its proprietary nature. The unusual situation whereby Linux kernel development involved the use by some of proprietary software "came to a head" when Andrew Tridgell started to reverse-engineer BitKeeper with the aim of producing an open-source tool which could provide some of the same functionality as the commercial version. In response, BitMover, the company that developed BitKeeper, in 2005 revoked the special free-of-charge license it had granted to certain kernel developers.

As a result of the removal of the BitKeeper license, Linus Torvalds decided to write his own DVCS, called git, because he thought none of the existing open-source DVCSs were suitable for his particular needs as a kernel maintainer (which was why he had adopted BitKeeper in the first place). A number of other developers quickly jumped in and helped him, and git over time grew from a relatively simple "stupid content tracker" (on which some developers developed "porcelain" extensions) into the sophisticated and powerful DVCS that it is today. Torvalds no longer maintains git himself, however; it has been maintained by Junio Hamano for many years, and has continued receiving contributions from many developers.

The increasing popularity of open-source DVCSs such as git, and then, later, DVCS hosting sites, the most popular of which is GitHub (founded 2008), incrementally reduced the barriers to participation in free software projects still further. With sites like GitHub, potential contributors no longer had to hunt for the URL of the source code repository (which could be in different places on each website, or sometimes tucked away in a README file or developer documentation), work out how to generate a patch, or subscribe to the right mailing list so that their patch email would get to the right people. Contributors can simply fork their own copy of a repository with one click, and issue a pull request from the appropriate branch when their changes are ready. GitHub has become the most popular hosting site in the world for open-source software, and this, together with the ease of forking and the visibility of forks, has made it a popular way for contributors to make changes, large and small.

Recent developments

While copyright is the primary legal mechanism that FOSS authors use to ensure license compliance for their software, other mechanisms such as legislation, software patents, and trademarks are also used. In response to legal issues with patents and the DMCA, the Free Software Foundation released version 3 of its GNU General Public License in 2007, which explicitly addressed the DMCA's digital rights management (DRM) provisions and patent rights.

After the development of the GNU GPLv3, as copyright holder of many pieces of the GNU system, such as the GNU Compiler Collection (GCC) software, the FSF updated most[citation needed] of the GNU programs' licenses from GPLv2 to GPLv3. Apple, a user of GCC and a heavy user of both DRM and patents, decided to switch the compiler in its Xcode IDE from GCC to Clang, another FOSS compiler,[67] which is under a permissive license.[68] LWN speculated that Apple was motivated partly by a desire to avoid GPLv3.[67] The Samba project also switched to GPLv3, and Apple replaced Samba in its software suite with a closed-source, proprietary alternative.[69]

Recent mergers have affected major open-source software. Sun Microsystems (Sun) acquired MySQL AB, owner of the popular open-source MySQL database, in 2008.[70]

Oracle in turn purchased Sun in January 2010, acquiring their copyrights, patents, and trademarks. This made Oracle the owner of both the most popular proprietary database and the most popular open-source database (MySQL).[citation needed] Oracle's attempts to commercialize the open-source MySQL database have raised concerns in the FOSS community.[71] Partly in response to uncertainty about the future of MySQL, the FOSS community forked the project into new database systems outside of Oracle's control. These include MariaDB, Percona, and Drizzle.[72] All of these have distinct names; they are distinct projects and cannot use the trademarked name MySQL.[73]

Android (2008–present)

In September 2008, Google released the first version of Android, a new smartphone operating system, as open source (some Google applications that are sometimes but not always bundled with Android are not open source). Initially, the operating system was given away for free by Google, and was eagerly adopted by many handset makers; Google later bought Motorola Mobility and produced its own "vanilla" Android phones and tablets, while continuing to allow other manufacturers to use Android. Android is now the world's most popular mobile platform.[74]

Because Android is based on the Linux kernel, Linux is now the dominant kernel on both mobile platforms (via Android) and supercomputers,[75] and a key player in server operating systems too.

Oracle v. Google

In August 2010, Oracle sued Google claiming that its use of Java in Android infringed on Oracle's copyrights and patents. The initial Oracle v. Google trial ended in May 2012, with the finding that Google did not infringe on Oracle's patents, and the trial judge ruled that the structure of the Java application programming interfaces (APIs) used by Google was not copyrightable. The jury found that Google made a trivial ("de minimis") copyright infringement, but the parties stipulated that Google would pay no damages, because it was so trivial.[76] However, Oracle appealed to the Federal Circuit, and Google filed a cross-appeal on the literal copying claim.[77] The Federal Circuit ruled that the small copyright infringement acknowledged by Google was not de minimis, and sent the fair use issue back to the trial judge for reconsideration. In 2016, the case was retried and a jury found for Google, on the grounds of fair use.

ChromiumOS (2009–present)

Until recently, Linux was still a relatively uncommon choice of operating system for desktops and laptops. However, Google's Chromebooks, running ChromeOS, which is essentially a thin client, have captured 20–25% of the sub-$300 US laptop market.[78] ChromeOS is built from the open-source ChromiumOS, which is based on Linux, in much the same way that versions of Android shipped on commercially available phones are built from the open source version of Android.

See also

- Open-source video game § History

- History of software

- History of software engineering

- Timeline of free and open-source software

References

- ↑ 1.0 1.1 1.2 1.3 VM Brasseur (2018). Forge your Future with Open Source. Pragmatic Programmers. ISBN 978-1-68050-301-2. https://archive.org/details/isbn_9781680503012.

- ↑ 2.0 2.1 2.2 2.3 James J. Flink (1977). The Car Culture. MIT Press. ISBN 978-0-262-56015-3.

- ↑ Maracke, Catharina (2019-02-25). "Free and Open Source Software and FRAND‐based patent licenses: How to mediate between Standard Essential Patent and Free and Open Source Software" (in en). The Journal of World Intellectual Property 22 (3–4): 78–102. doi:10.1111/jwip.12114. ISSN 1422-2213.

- ↑ 4.0 4.1 4.2 Hippel, Eric von; Krogh, Georg von (2003-04-01). "Open Source Software and the "Private-Collective" Innovation Model: Issues for Organization Science". Organization Science 14 (2): 209–223. doi:10.1287/orsc.14.2.209.14992. ISSN 1047-7039. https://dspace.mit.edu/bitstream/1721.1/66145/1/SSRN-id1410789.pdf.

- ↑ "IBM 7090/7094 Page". http://www.cozx.com/~dpitts/ibm7090.html.

- ↑ Ceruzzi, Paul (1998). A History of Modern Computing. The MIT Press. ISBN 9780262032551. https://archive.org/details/historyofmodernc00ceru.

- ↑ "Heresy & Heretical Open Source: A Heretic's Perspective". http://www.infoq.com/presentations/Heretical-Open-Source.

- ↑ Sam Williams. Free as in Freedom: Richard Stallman's Crusade for Free Software. "Chapter 1: For Want of a Printer" . 2002.

- ↑ Gaudeul, Alexia (2007). "Do Open Source Developers Respond to Competition? The LATEX Case Study" (in en). Review of Network Economics 6 (2). doi:10.2202/1446-9022.1119. ISSN 1446-9022.

- ↑ "A brief history of spice". http://www.ecircuitcenter.com/SpiceTopics/History.htm.

- ↑ Fisher, Franklin M.; McKie, James W.; Mancke, Richard B. (1983). IBM and the U.S. Data Processing Industry: An Economic History. Praeger. ISBN 978-0-03-063059-0.page 176

- ↑ Fisher. op.cit..

- ↑ Apple Computer, Inc. v. Franklin Computer Corporation Puts the Byte Back into Copyright Protection for Computer Programs in Golden Gate University Law Review Volume 14, Issue 2, Article 3 by Jan L. Nussbaum (January 1984)

- ↑ Lemley, Menell, Merges and Samuelson. Software and Internet Law, p. 34.

- ↑ Landley, Rob (2009-05-23). "notes-2009". landley.net. http://landley.net/notes-2009.html. "So if open source used to be the norm back in the 1960s and 70s, how did this _change_? Where did proprietary software come from, and when, and how? How did Richard Stallman's little utopia at the MIT AI lab crumble and force him out into the wilderness to try to rebuild it? Two things changed in the early 80s: the exponentially growing installed base of microcomputer hardware reached critical mass around 1980, and a legal decision altered copyright law to cover binaries in 1983."

- ↑ Weber, Steven (2004). The Success of Open Source. Cambridge, MA: Harvard University Press. pp. 38–44. ISBN 978-0-674-01858-7. http://www.polisci.berkeley.edu/Faculty/bio/permanent/Weber,S/.

- ↑ IBM Corporation (1983-02-08). "DISTRIBUTION OF IBM LICENSED PROGRAMS AND LICENSED PROGRAM MATERIALS AND MODIFIED AGREEMENT FOR IBM LICENSED PROGRAMS". http://landley.net/history/mirror/ibm/oco.html.

- ↑ Gallant, John (1985-03-18). "IBM policy draws fire – Users say source code rules hamper change". Computerworld. https://books.google.com/books?id=4Wgmey4obagC&q=1983object-only+model+IBM&pg=PA8. "While IBM's policy of withholding source code for selected software products has already marked its second anniversary, users are only now beginning to cope with the impact of that decision. But whether or not the advent of object-code-only products has affected their day-to-day DP operations, some users remain angry about IBM's decision. Announced in February 1983, IBM's object-code-only policy has been applied to a growing list of Big Blue system software products"

- ↑ 19.0 19.1 Shea, Tom (1983-06-23). "Free software – Free software is a junkyard of software spare parts". InfoWorld. https://books.google.com/books?id=yy8EAAAAMBAJ&q=us+government+public+domain+software&pg=PA31.

- ↑ Ahl, David. "David H. Ahl biography from Who's Who in America". http://www.swapmeetdave.com/Ahl/DHAbio.htm.

- ↑ Wilkinson, Bill (1983). The Atari BASIC Source Book. COMPUTE! Books. ISBN 9780942386158. https://archive.org/details/ataribooks-the-atari-basic-source-book. Retrieved 2 October 2017.

- ↑ Wilkinson, Bill (1982). "Inside Atari DOS". COMPUTE! Books. http://atariarchives.org/iad/.

- ↑ Norman, Jeremy. "SHARE, The First Computer Users' Group, is Founded (1955)". http://www.historyofinformation.com/expanded.php?id=4805.

- ↑ "The DECUS tapes". http://www.ibiblio.org/pub/academic/computer-science/history/pdp-11/rsx/decus/.

- ↑ DiBona, C., et al. Open Sources 2.0. O'Reilly, ISBN 0-596-00802-3.

- ↑ "Talk transcript where Stallman tells the printer story". https://www.gnu.org/events/rms-nyu-2001-transcript.txt.

- ↑ "Transcript of Richard Stallman's Speech, 28 Oct 2002, at the International Lisp Conference". GNU Project. 28 October 2002. https://www.gnu.org/gnu/rms-lisp.html.

- ↑ "GNU General Public License v1.0". https://www.gnu.org/licenses/old-licenses/gpl-1.0.txt.

- ↑ Michael Tiemann (1999-03-29). "Future of Cygnus Solutions, An Entrepreneur's Account". http://www.oreilly.com/catalog/opensources/book/tiemans.html.

- ↑ "Release notes for Linux kernel 0.12". https://www.kernel.org/pub/linux/kernel/Historic/old-versions/RELNOTES-0.12.

- ↑ "A Brief History of Debian". http://www.debian.org/doc/manuals/project-history/ch-detailed.en.html.

- ↑ Take your freedom back, with Linux-2.6.33-libre FSFLA, 2010.

- ↑ "Linux and GNU – GNU Project – Free Software Foundation". Gnu.org. 2013-05-20. https://www.gnu.org/gnu/linux-and-gnu.html.

- ↑ "January 2015 Web Server Survey". http://news.netcraft.com/archives/2015/01/15/january-2015-web-server-survey.html.

- ↑ "On the term "Open Source"". 15 March 2018. http://hyperlogos.org/blog/drink/term-Open-Source.

- ↑ "Why do Tech Journalists so often get Computer History... totally wrong?". https://www.youtube.com/watch?v=OMoIEG-I6JQ.

- ↑ Kelty, Christopher M. (2008). "The Cultural Significance of Free Software – Two Bits". Duke University Press, Durham and London. p. 99. http://twobits.net/pub/Kelty-TwoBits.pdf.

- ↑ Karl Fogel (2016). "Producing Open Source Software – How to Run a Successful Free Software Project". O'Reilly Media. http://producingoss.com/en/introduction.html#free-vs-open-source.

- ↑ 39.0 39.1 39.2 Tiemann, Michael (19 September 2006). "History of the OSI". Open Source Initiative. http://www.opensource.org/history.

- ↑ Muffatto, Moreno (2006). Open Source: A Multidisciplinary Approach. Imperial College Press. ISBN 978-1-86094-665-3.

- ↑ Open Source Summit Linux Gazette. 1998.

- ↑ Eric S. Raymond. "Goodbye, 'free software'; hello, 'open source'". catb.org. http://www.catb.org/~esr/open-source.html.

- ↑ Tiemann, Michael (19 September 2006). "History of the OSI". Open Source Initiative. http://www.opensource.org/docs/history.php.

- ↑ Leander Kahney (5 March 1999). "Linux's Forgotten Man – You have to feel for Richard Stallman". Wired. https://www.wired.com/news/technology/0,1282,18291,00.html.

- ↑ "Toronto Star: Freedom's Forgotten Prophet (Richard Stallman)". Linux Today. 10 October 2000. http://www.linuxtoday.com/infrastructure/2000101000421OSCY.

- ↑ Nikolai Bezroukov (1 November 2014). "Portraits of Open Source Pioneers – Part IV. Prophet". http://www.softpanorama.org/People/Stallman/prophet.shtml#Linux_schism.

- ↑ Richard Stallman. "Why Open Source Misses the Point". https://www.gnu.org/philosophy/open-source-misses-the-point.html.

- ↑ Brian Fitzgerald, Pär J. Ågerfalk (2005). The Mysteries of Open Source Software: Black and White and Red All Over University of Limerick, Ireland. "Open Source software (OSS) has attracted enormous media and research attention since the term was coined in February 1998."

- ↑ Ettrich, Matthias (14 October 1996). "New Project: Kool Desktop Environment (KDE)". Newsgroup: de.comp.os.linux.misc. Usenet: 53tkvv$b4j@newsserv.zdv.uni-tuebingen.de. Archived from the original on 30 May 2013. Retrieved 2006-12-29.

- ↑ Richard Stallman (5 September 2000). "Stallman on Qt, the GPL, KDE, and GNOME". http://linuxtoday.com/news_story.php3?ltsn=2000-09-05-001-21-OP-LF-KE.

- ↑ "A tale of two desktops". PC & Tech Authority. http://www.pcauthority.com.au/Feature/79378,a-tale-of-two-desktops.aspx.

- ↑ Shuttleworth, Mark (5 April 2017). "Growing Ubuntu for cloud and IoT, rather than phone and convergence". Canonical Ltd.. https://insights.ubuntu.com/2017/04/05/growing-ubuntu-for-cloud-and-iot-rather-than-phone-and-convergence/.

- ↑ "Ubuntu To Abandon Unity 8, Switch Back To GNOME". Phoronix.com. http://phoronix.com/scan.php?page=news_item&px=Ubuntu-Dropping-Unity.

- ↑ "The Wayland Situation: Facts About X vs. Wayland – Phoronix". https://www.phoronix.com/scan.php?page=article&item=x_wayland_situation.

- ↑ "Post on y-devel by Brandon Black". 2006-01-03. http://www.y-windows.org/pipermail/y-devel/2006-January/001977.html.

- ↑ "When Open Source Came to Microsoft" (in en-us). https://www.codemag.com/Article/2009041/When-Open-Source-Came-to-Microsoft.

- ↑ Gavin Clarke (23 July 2009). "Microsoft opened Linux-driver code after 'violating' GPL". The Register. https://www.theregister.co.uk/2009/07/23/microsoft_hyperv_gpl_violation/.

- ↑ "GNU General Public License". https://www.gnu.org/licenses/gpl.html.

- ↑ Ovide, Shira (16 April 2012). "Microsoft Dips Further into Open-Source Software". The Wall Street Journal. https://www.wsj.com/articles/SB10001424052702304432704577347783238850756.

- ↑ "ChakraCore GitHub repository is now open". 2016-01-13. https://blogs.windows.com/msedgedev/2016/01/13/chakracore-now-open/.

- ↑ "Ballmer: I may have called Linux a cancer but now I love it". https://www.zdnet.com/article/ballmer-i-may-have-called-linux-a-cancer-but-now-i-love-it/.

- ↑ Asay, Matt (2018-02-07). "Who really contributes to open source" (in en). https://www.infoworld.com/article/3253948/who-really-contributes-to-open-source.html.

- ↑ "Foxconn rejects Microsoft patent lawsuit, says never had to pay royalties". Reuters. 19 March 2019. https://www.reuters.com/article/us-foxconn-microsoft-idUSKBN1QT0D9.

- ↑ "Microsoft and Foxconn Parent Hon Hai Sign Patent Agreement For Android and Chrome Devices - Stories". 2013-04-16. https://news.microsoft.com/2013/04/17/microsoft-and-foxconn-parent-hon-hai-sign-patent-agreement-for-android-and-chrome-devices/.

- ↑ "SCM Ranking, Q3 2013". Switch-Gears. http://beta.gitgear.com/why_git/SCM_Ranking_2013Q3_F1.pdf.

- ↑ 67.0 67.1 Brockmeier, Joe. "Apple's Selective Contributions to GCC". https://lwn.net/Articles/405417/.

- ↑ "LLVM Developer Policy". LLVM. http://llvm.org/docs/DeveloperPolicy.html#license.

- ↑ Holwerda, Thom. "Apple Ditches SAMBA in Favour of Homegrown Replacement". http://www.osnews.com/story/24572/.

- ↑ "Sun to Acquire MySQL". MySQL AB. http://mysql.com/news-and-events/sun-to-acquire-mysql.html.

- ↑ Thomson, Iain. "Oracle offers commercial extensions to MySQL". https://www.theregister.co.uk/2011/09/16/oracle_commercial_extensions_mysql/.

- ↑ Samson, Ted. "Non-Oracle MySQL fork deemed ready for prime time". http://www.infoworld.com/d/open-source-software/non-oracle-mysql-fork-deemed-ready-prime-time-853.

- ↑ Nelson, Russell. "Open Source, MySQL, and trademarks". http://www.opensource.org/node/496.

- ↑ "Android, the world's most popular mobile platform". http://developer.android.com/about/index.html.

- ↑ Steven J. Vaughan-Nichols (29 July 2013). "20 great years of Linux and supercomputers". ZDNet. http://www.zdnet.com/20-great-years-of-linux-and-supercomputers-7000018681/.

- ↑ Niccolai, James (20 June 2012). "Oracle agrees to 'zero' damages in Google lawsuit, eyes appeal". http://www.computerworld.com/s/article/9228298/Oracle_agrees_to_zero_damages_in_Google_lawsuit_eyes_appeal.

- ↑ Jones, Pamela (5 October 2012). "Oracle and Google File Appeals". Groklaw. http://www.groklaw.net/articlebasic.php?story=20121005082638280.

- ↑ Williams, Rhiannon (11 July 2013). "Google Chromebook sales soar in face of PC decline". https://www.telegraph.co.uk/technology/google/10173494/Google-Chromebook-sales-soar-in-face-of-PC-decline.html.

External links

- Elmer-Dewitt, Philip (30 July 1984). Software Is for Sharing, Time.

- Richard Stallman speaking about free software and the GNU project in 1986, Sweden

- David A. Wheeler on the history of free software, from his "Look at the numbers!" paper

- The Daemon, the GNU, and the Penguin, by Peter Salus

- Documents about the BSD lawsuit that led to 386BSD and then FreeBSD

- Open Sources: Voices from the Open Source Revolution (January 1999)

- The history of Cygnus solutions, the largest free software company of the early 90s

- LWN.net's 1998–2008 timeline part 1 (part 2, 3, 4, 5, 6)

- A Brief History of FreeBSD, by Jordan Hubbard

- UNESCO Free Software Portal

- Infinite Hands, a free licensed folk song about the history of free software.
