Limit of a function

From HandWiki - Reading time: 33 min


| x | sin(x)/x |
|---|---|
| 1 | 0.841471... |
| 0.1 | 0.998334... |
| 0.01 | 0.999983... |

Although the function sin(x)/x is not defined at zero, as x becomes closer and closer to zero, sin(x)/x becomes arbitrarily close to 1. In other words, the limit of sin(x)/x as x approaches zero equals 1.


In mathematics, the limit of a function is a fundamental concept in calculus and analysis concerning the behavior of that function near a particular input which may or may not be in the domain of the function.

Formal definitions, first devised in the early 19th century, are given below. Informally, a function f assigns an output f(x) to every input x. We say that the function has a limit L at an input p, if f(x) gets closer and closer to L as x moves closer and closer to p. More specifically, the output value can be made arbitrarily close to L if the input to f is taken sufficiently close to p. On the other hand, if some inputs very close to p are taken to outputs that stay a fixed distance apart, then we say the limit does not exist.
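As a rough numerical illustration of this informal description, the Python sketch below tabulates sin(x)/x for inputs shrinking towards zero. The choice of sin(x)/x is an assumption made for illustration (it matches the values 0.841471..., 0.998334..., 0.999983... tabulated at the top of the article), and the helper name `ratio` is hypothetical. A table like this only suggests the value of a limit; it does not prove it.

```python
import math

def ratio(x):
    """Value of sin(x)/x; undefined at x = 0, so 0 is never passed in."""
    return math.sin(x) / x

# Inputs 1, 0.1, 0.01, ... approaching 0 from the right
inputs = [10.0 ** (-k) for k in range(0, 7)]
table = [(x, ratio(x)) for x in inputs]

for x, value in table:
    print(f"{x:<10g} {value:.6f}")
```

The printed values drift towards 1 as the input shrinks, even though the function is never evaluated at 0 itself.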

The notion of a limit has many applications in modern calculus. In particular, the many definitions of continuity employ the concept of limit: roughly, a function is continuous if all of its limits agree with the values of the function. The concept of limit also appears in the definition of the derivative: in the calculus of one variable, this is the limiting value of the slope of secant lines to the graph of a function.

History

Although implicit in the development of calculus of the 17th and 18th centuries, the modern idea of the limit of a function goes back to Bolzano who, in 1817, introduced the basics of the epsilon-delta technique (see (ε, δ)-definition of limit below) to define continuous functions. However, his work was not known during his lifetime.[1]

In his 1821 book Cours d'analyse, Augustin-Louis Cauchy discussed variable quantities, infinitesimals and limits, and defined continuity of $y = f(x)$ by saying that an infinitesimal change in x necessarily produces an infinitesimal change in y, while Grabiner claims that he used a rigorous epsilon-delta definition in proofs.[2] In 1861, Weierstrass first introduced the epsilon-delta definition of limit in the form it is usually written today.[3] He also introduced the notations $\lim$ and $\lim_{x \to x_0}$.[4]

The modern notation of placing the arrow below the limit symbol is due to Hardy, who introduced it in his 1908 book A Course of Pure Mathematics.[5]

Motivation

Imagine a person walking on a landscape represented by the graph y = f(x). Their horizontal position is given by x, much like the position given by a map of the land or by a global positioning system. Their altitude is given by the coordinate y. Suppose they walk towards a position x = p. As they get closer and closer to this point, they will notice that their altitude approaches a specific value L. If asked about the altitude corresponding to x = p, they would reply by saying y = L.

What, then, does it mean to say, their altitude is approaching L? It means that their altitude gets nearer and nearer to L—except for a possible small error in accuracy. For example, suppose we set a particular accuracy goal for our traveler: they must get within ten meters of L. They report back that indeed, they can get within ten vertical meters of L, arguing that as long as they are within fifty horizontal meters of p, their altitude is always within ten meters of L.

The accuracy goal is then changed: can they get within one vertical meter? Yes, supposing that they are able to move within five horizontal meters of p, their altitude will always remain within one meter of the target altitude L. In summary, to say that the traveler's altitude approaches L as their horizontal position approaches p is to say that for every target accuracy goal, however small it may be, there is some neighbourhood of p in which the altitudes at all (not just some) horizontal positions, except possibly the horizontal position p itself, fulfill that accuracy goal.

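The initial informal statement can now be explicated:

The limit of a function f(x) as x approaches p is a number L with the following property: given any target distance from L, there is a distance from p within which the values of f(x) remain within the target distance.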

In fact, this explicit statement is quite close to the formal definition of the limit of a function, with values in a topological space.

More specifically, to say that

$$\lim_{x \to p} f(x) = L$$

is to say that f(x) can be made as close to L as desired, by making x close enough, but not equal, to p.

The following definitions, known as (ε, δ)-definitions, are the generally accepted definitions for the limit of a function in various contexts.

Functions of a single variable

(ε, δ)-definition of limit

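Suppose $f : \mathbb{R} \to \mathbb{R}$ is a function defined on the real line, and there are two real numbers p and L. One would say that the limit of f, as x approaches p, is L, written[6]

$$\lim_{x \to p} f(x) = L,$$

or alternatively, that f(x) tends to L as x tends to p, written

$$f(x) \to L \text{ as } x \to p,$$

if the following property holds: for every real ε > 0, there exists a real δ > 0 such that for all real x, 0 < |x − p| < δ implies |f(x) − L| < ε.[6] Symbolically,

$$(\forall \varepsilon > 0)\,(\exists \delta > 0)\,(\forall x \in \mathbb{R})\,(0 < |x - p| < \delta \implies |f(x) - L| < \varepsilon).$$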

For example, we may say $\lim_{x \to 2} (4x + 1) = 9$ because for every real ε > 0, we can take δ = ε/4, so that for all real x, if 0 < |x − 2| < δ, then |4x + 1 − 9| < ε.
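The choice δ = ε/4 in this example can be spot-checked numerically. The sketch below is a sanity check rather than a proof: it samples points with 0 < |x − 2| < δ and tests |4x + 1 − 9| < ε. The helper names `delta_for` and `check`, the sample count, and the 0.999 safety factor are all illustrative assumptions.

```python
import random

def f(x):
    return 4 * x + 1

def delta_for(eps):
    # Choice from the text: delta = eps / 4
    return eps / 4

def check(eps, p=2.0, L=9.0, samples=10_000, seed=0):
    """Sample points with 0 < |x - p| < delta and test |f(x) - L| < eps."""
    rng = random.Random(seed)
    delta = delta_for(eps)
    for _ in range(samples):
        # Offset strictly inside (-delta, delta); the 0.999 factor keeps
        # floating-point rounding away from the boundary.
        offset = rng.uniform(-1.0, 1.0) * delta * 0.999
        if offset == 0.0:
            continue  # x = p itself is excluded by the definition
        x = p + offset
        if not abs(f(x) - L) < eps:
            return False
    return True

results = {eps: check(eps) for eps in (1.0, 0.1, 1e-3, 1e-6)}
print(results)
```

Every sampled point passes because |4x + 1 − 9| = 4|x − 2| < 4δ = ε, which is the algebra behind the choice of δ.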

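A more general definition applies for functions defined on subsets of the real line. Let S be a subset of $\mathbb{R}$. Let $f : S \to \mathbb{R}$ be a real-valued function. Let p be a point such that there exists some open interval (a, b) containing p with $(a, p) \cup (p, b) \subset S$. It is then said that the limit of f as x approaches p is L, if:

- For every real ε > 0, there exists a real δ > 0 such that for all x ∈ (a, b), 0 < |x − p| < δ implies that |f(x) − L| < ε.

Or, symbolically:

$$(\forall \varepsilon > 0)\,(\exists \delta > 0)\,(\forall x \in (a, b))\,(0 < |x - p| < \delta \implies |f(x) - L| < \varepsilon).$$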

For example, we may say $\lim_{x \to 1} \sqrt{x + 3} = 2$ because for every real ε > 0, we can take δ = ε, so that for all real x ≥ −3, if 0 < |x − 1| < δ, then |f(x) − 2| < ε. In this example, S = [−3, ∞) contains open intervals around the point 1 (for example, the interval (0, 2)).

Here, note that the value of the limit does not depend on f being defined at p, nor on the value f(p), if it is defined. For example, let $f : [0, 1) \cup (1, 2] \to \mathbb{R}$, $f(x) = \tfrac{2x^2 - x - 1}{x - 1}$. Then $\lim_{x \to 1} f(x) = 3$ because for every ε > 0, we can take δ = ε/2, so that for all real x ≠ 1, if 0 < |x − 1| < δ, then |f(x) − 3| < ε. Note that here f(1) is undefined.

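In fact, a limit can exist in $\{x \in \mathbb{R} \mid \exists (a, b) \subset \mathbb{R} : x \in (a, b) \text{ and } (a, x) \cup (x, b) \subset S\}$, which equals $\operatorname{int} S \cup \operatorname{iso} S^c$, where int S is the interior of S, and iso S^c are the isolated points of the complement of S. In our previous example, where $S = [0, 1) \cup (1, 2]$, we have $\operatorname{int} S = (0, 1) \cup (1, 2)$ and $\operatorname{iso} S^c = \{1\}$. We see, specifically, that this definition of limit allows a limit to exist at 1, but not at 0 or 2.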

The letters ε and δ can be understood as "error" and "distance". In fact, Cauchy used ε as an abbreviation for "error" in some of his work,[2] though in his definition of continuity, he used an infinitesimal rather than either ε or δ (see Cours d'Analyse). In these terms, the error (ε) in the measurement of the value at the limit can be made as small as desired, by reducing the distance (δ) to the limit point. As discussed below, this definition also works for functions in a more general context. The idea that δ and ε represent distances helps suggest these generalizations.

Existence and one-sided limits

Alternatively, x may approach p from above (right) or below (left), in which case the limits may be written as

$$\lim_{x \to p^+} f(x) = L$$

or

$$\lim_{x \to p^-} f(x) = L$$

respectively. If these limits exist at p and are equal there, then this can be referred to as the limit of f(x) at p.[7] If the one-sided limits exist at p, but are unequal, then there is no limit at p (i.e., the limit at p does not exist). If either one-sided limit does not exist at p, then the limit at p also does not exist.

A formal definition is as follows. The limit of f as x approaches p from above is L if:

- For every ε > 0, there exists a δ > 0 such that whenever 0 < x − p < δ, we have |f(x) − L| < ε.

The limit of f as x approaches p from below is L if:

- For every ε > 0, there exists a δ > 0 such that whenever 0 < p − x < δ, we have |f(x) − L| < ε.

If the limit does not exist, then the oscillation of f at p is non-zero.
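The one-sided definitions can be probed numerically by evaluating a function at points approaching p from one side only. The sketch below uses a hypothetical step function that jumps from 1 to 2 at 0; the helper `one_sided_values` is an illustrative name, and a finite list of samples can only suggest, not establish, the value of a one-sided limit.

```python
def one_sided_values(f, p, side, steps=8):
    """Return f(p + s*h) for h = 10**-1, ..., 10**-steps,
    with s = +1 for the right-hand side and s = -1 for the left."""
    sign = 1 if side == "right" else -1
    return [f(p + sign * 10.0 ** (-k)) for k in range(1, steps + 1)]

def step(x):
    # Hypothetical example: 1 for negative x, 2 for x >= 0
    return 1.0 if x < 0 else 2.0

left = one_sided_values(step, 0.0, "left")
right = one_sided_values(step, 0.0, "right")
print(left, right)
```

The samples from the left are all 1 and those from the right are all 2, which is consistent with unequal one-sided limits and hence no two-sided limit at 0.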

More general definition using limit points and subsets

Limits can also be defined by approaching from subsets of the domain.

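In general:[8] Let $f : S \to \mathbb{R}$ be a real-valued function defined on some $S \subseteq \mathbb{R}$. Let p be a limit point of some $T \subset S$ (that is, p is the limit of some sequence of elements of T distinct from p). Then we say the limit of f, as x approaches p from values in T, is L, written

$$\lim_{x \to p,\; x \in T} f(x) = L,$$

if the following holds:

- For every ε > 0, there exists a δ > 0 such that for all x ∈ T, 0 < |x − p| < δ implies that |f(x) − L| < ε.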

Note that T can be any subset of S, the domain of f, and the limit might depend on the selection of T. This generalization includes as special cases limits on an interval, as well as left-handed limits of real-valued functions (e.g., by taking T to be an open interval of the form (−∞, a)), and right-handed limits (e.g., by taking T to be an open interval of the form (a, ∞)). It also extends the notion of one-sided limits to the included endpoints of (half-)closed intervals, so the square root function $f(x) = \sqrt{x}$ can have limit 0 as x approaches 0 from above: $\lim_{x \to 0,\; x \in [0, \infty)} \sqrt{x} = 0$, since for every ε > 0, we may take δ = ε² such that for all x ≥ 0, if 0 < |x − 0| < δ, then |f(x) − 0| < ε.

This definition allows a limit to be defined at limit points of the domain S, if a suitable subset T which has the same limit point is chosen.

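Notably, the previous two-sided definition works on $\operatorname{int} S \cup \operatorname{iso} S^c$, which is a subset of the limit points of S.

For example, let $S = [0, 1) \cup (1, 2]$. The previous two-sided definition would work at $1 \in \operatorname{iso} S^c = \{1\}$, but it wouldn't work at 0 or 2, which are limit points of S.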

Deleted versus non-deleted limits

The definition of limit given here does not depend on how (or whether) f is defined at p. Bartle[9] refers to this as a deleted limit, because it excludes the value of f at p. The corresponding non-deleted limit does depend on the value of f at p, if p is in the domain of f. Let $f : S \to \mathbb{R}$ be a real-valued function. The non-deleted limit of f, as x approaches p, is L if

- For every ε > 0, there exists a δ > 0 such that for all x ∈ S, |x − p| < δ implies |f(x) − L| < ε.

The definition is the same, except that the neighborhood |x − p| < δ now includes the point p, in contrast to the deleted neighborhood 0 < |x − p| < δ. This makes the definition of a non-deleted limit less general. One of the advantages of working with non-deleted limits is that they allow one to state the theorem about limits of compositions without any constraints on the functions (other than the existence of their non-deleted limits).[10]

Bartle[9] notes that although by "limit" some authors do mean this non-deleted limit, deleted limits are the most popular.[11]

Examples

Non-existence of one-sided limit(s)

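The function

$$f(x) = \begin{cases} \sin\dfrac{5}{x - 1} & \text{for } x < 1 \\ 0 & \text{for } x = 1 \\ \dfrac{1}{10x - 10} & \text{for } x > 1 \end{cases}$$

has no limit at x₀ = 1 (the left-hand limit does not exist due to the oscillatory nature of the sine function, and the right-hand limit does not exist due to the asymptotic behaviour of the reciprocal function, see picture), but has a limit at every other x-coordinate.

The function

$$f(x) = \begin{cases} 1 & x \text{ rational} \\ 0 & x \text{ irrational} \end{cases}$$

(a.k.a., the Dirichlet function) has no limit at any x-coordinate.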

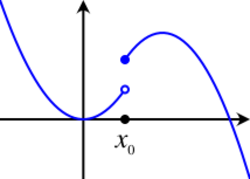

Non-equality of one-sided limits

The function

$$f(x) = \begin{cases} 1 & \text{for } x < 0 \\ 2 & \text{for } x \ge 0 \end{cases}$$

has a limit at every non-zero x-coordinate (the limit equals 1 for negative x and equals 2 for positive x). The limit at x = 0 does not exist (the left-hand limit equals 1, whereas the right-hand limit equals 2).

Limits at only one point

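The functions

$$f(x) = \begin{cases} x & x \text{ rational} \\ 0 & x \text{ irrational} \end{cases}$$

and

$$f(x) = \begin{cases} |x| & x \text{ rational} \\ 0 & x \text{ irrational} \end{cases}$$

both have a limit at x = 0 and it equals 0.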

Limits at countably many points

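The function

$$f(x) = \begin{cases} \sin x & x \text{ irrational} \\ 1 & x \text{ rational} \end{cases}$$

has a limit at any x-coordinate of the form $\tfrac{\pi}{2} + 2n\pi$, where n is any integer.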

Limits involving infinity

Limits at infinity

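Let $f : S \to \mathbb{R}$ be a function defined on $S \subseteq \mathbb{R}$. The limit of f as x approaches infinity is L, denoted

$$\lim_{x \to \infty} f(x) = L,$$

means that:

- For every ε > 0, there exists a c > 0 such that for all x ∈ S, x > c implies |f(x) − L| < ε.

Similarly, the limit of f as x approaches minus infinity is L, denoted

$$\lim_{x \to -\infty} f(x) = L,$$

means that:

- For every ε > 0, there exists a c > 0 such that for all x ∈ S, x < −c implies |f(x) − L| < ε.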

For example, $\lim_{x \to \infty} \left(-\tfrac{3 \sin x}{x} + 4\right) = 4$ because for every ε > 0, we can take c = 3/ε such that for all real x, if x > c, then |f(x) − 4| < ε.

Another example is that $\lim_{x \to -\infty} e^x = 0$ because for every ε > 0, we can take c = max{1, −ln(ε)} such that for all real x, if x < −c, then |f(x) − 0| < ε.
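The cutoff c = 3/ε in the first example can be spot-checked in code. The sketch below assumes the function is f(x) = 4 − 3 sin(x)/x, which is consistent with that cutoff because |f(x) − 4| ≤ 3/x; the helper name `holds_beyond_cutoff` and the sampling grid are illustrative choices, and sampling is a sanity check rather than a proof.

```python
import math

def f(x):
    # Assumed example function: f(x) = 4 - 3*sin(x)/x
    return 4.0 - 3.0 * math.sin(x) / x

def holds_beyond_cutoff(eps, samples=1000):
    """Check |f(x) - 4| < eps at sample points x > c, with c = 3/eps."""
    c = 3.0 / eps
    # Sample points c*1.1, c*1.2, ..., all strictly greater than c
    xs = [c * (1.0 + k / 10.0) for k in range(1, samples + 1)]
    return all(abs(f(x) - 4.0) < eps for x in xs)

checks = {eps: holds_beyond_cutoff(eps) for eps in (1.0, 0.1, 1e-3)}
print(checks)
```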

Infinite limits

For a function whose values grow without bound, the function diverges and the usual limit does not exist. However, in this case one may introduce limits with infinite values.

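Let $f : S \to \mathbb{R}$ be a function defined on $S \subseteq \mathbb{R}$. The statement the limit of f as x approaches p is infinity, denoted

$$\lim_{x \to p} f(x) = \infty,$$

means that:

- For every N > 0, there exists a δ > 0 such that for all x ∈ S, 0 < |x − p| < δ implies f(x) > N.

The statement the limit of f as x approaches p is minus infinity, denoted

$$\lim_{x \to p} f(x) = -\infty,$$

means that:

- For every N > 0, there exists a δ > 0 such that for all x ∈ S, 0 < |x − p| < δ implies f(x) < −N.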

For example, $\lim_{x \to 1} \tfrac{1}{(x - 1)^2} = \infty$ because for every N > 0, we can take $\delta = \tfrac{1}{\sqrt{N}}$ such that for all real x ≠ 1, if 0 < |x − 1| < δ, then f(x) > N.

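These ideas can be used together to produce definitions for different combinations, such as

$$\lim_{x \to \infty} f(x) = \infty$$

or

$$\lim_{x \to p^+} f(x) = -\infty.$$

For example, $\lim_{x \to 0^+} \ln x = -\infty$ because for every N > 0, we can take $\delta = e^{-N}$ such that for all real x > 0, if 0 < x − 0 < δ, then f(x) < −N.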
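The last example can be checked numerically under the assumption that the function is f(x) = ln x, which is what the choice δ = e^(−N) suggests. The sketch below tests ln x < −N at a few points with 0 < x < δ; the helper names and the sample scales are illustrative assumptions.

```python
import math

def delta_for(N):
    # Choice from the text: delta = e^(-N)
    return math.exp(-N)

def check(N, scales=(0.999, 0.5, 1e-3, 1e-9)):
    """Test ln(x) < -N at sample points x = delta * t with 0 < t < 1."""
    delta = delta_for(N)
    return all(math.log(delta * t) < -N for t in scales)

results = {N: check(N) for N in (1, 10, 100)}
print(results)
```

Each test passes because ln(δ·t) = −N + ln t, and ln t is negative for 0 < t < 1.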

Limits involving infinity are connected with the concept of asymptotes.

These notions of a limit attempt to provide a metric space interpretation to limits at infinity. In fact, they are consistent with the topological space definition of limit if

- a neighborhood of −∞ is defined to contain an interval [−∞, c) for some $c \in \mathbb{R}$,

- a neighborhood of ∞ is defined to contain an interval (c, ∞] where $c \in \mathbb{R}$, and

- a neighborhood of $a \in \mathbb{R}$ is defined in the normal way for the metric space $\mathbb{R}$.

In this case, $\overline{\mathbb{R}}$ is a topological space and any function of the form $f : X \to Y$ with $X, Y \subseteq \overline{\mathbb{R}}$ is subject to the topological definition of a limit. Note that with this topological definition, it is easy to define infinite limits at finite points, which have not been defined above in the metric sense.

Alternative notation

Many authors[12] allow for the projectively extended real line to be used as a way to include infinite values, as well as the extended real line. With this notation, the extended real line is given as $\mathbb{R} \cup \{-\infty, +\infty\}$ and the projectively extended real line is $\mathbb{R} \cup \{\infty\}$, where a neighborhood of ∞ is a set of the form $\{x : |x| > c\}$. The advantage is that one only needs three definitions for limits (left, right, and central) to cover all the cases. As presented above, for a completely rigorous account, we would need to consider 15 separate cases for each combination of infinities (five directions: −∞, left, central, right, and +∞; three bounds: −∞, finite, or +∞). There are also noteworthy pitfalls. For example, when working with the extended real line, $x^{-1}$ does not possess a central limit (which is normal):

$$\lim_{x \to 0^+} \frac{1}{x} = +\infty, \quad \lim_{x \to 0^-} \frac{1}{x} = -\infty.$$

In contrast, when working with the projective real line, infinities (much like 0) are unsigned, so the central limit does exist in that context:

$$\lim_{x \to 0^+} \frac{1}{x} = \lim_{x \to 0^-} \frac{1}{x} = \lim_{x \to 0} \frac{1}{x} = \infty.$$

In fact there is a plethora of conflicting formal systems in use. In certain applications of numerical differentiation and integration, it is, for example, convenient to have signed zeroes. A simple reason has to do with the converse of $\lim_{x \to 0^-} x^{-1} = -\infty$, namely, it is convenient for $\lim_{x \to -\infty} x^{-1} = -0$ to be considered true. Such zeroes can be seen as an approximation to infinitesimals.

Limits at infinity for rational functions

There are three basic rules for evaluating limits at infinity for a rational function $f(x) = \tfrac{p(x)}{q(x)}$ (where p and q are polynomials):

- If the degree of p is greater than the degree of q, then the limit is positive or negative infinity depending on the signs of the leading coefficients;

- If the degree of p and q are equal, the limit is the leading coefficient of p divided by the leading coefficient of q;

- If the degree of p is less than the degree of q, the limit is 0.

If the limit at infinity exists, it represents a horizontal asymptote at y = L. Polynomials do not have horizontal asymptotes; such asymptotes may however occur with rational functions.
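The three rules translate directly into a small function. The sketch below is one possible encoding, not a standard library routine: it assumes polynomials are given as lists of integer coefficients with the highest degree first and a non-zero leading coefficient, and it only handles the limit as x approaches +∞. The name `limit_at_infinity` is hypothetical.

```python
from fractions import Fraction
import math

def limit_at_infinity(p, q):
    """Limit of p(x)/q(x) as x -> +infinity.

    p and q are lists of integer coefficients, highest degree first,
    each with a non-zero leading coefficient.
    """
    deg_p, deg_q = len(p) - 1, len(q) - 1
    lead_p, lead_q = p[0], q[0]
    if deg_p > deg_q:
        # Sign is decided by the signs of the leading coefficients
        return math.inf if lead_p * lead_q > 0 else -math.inf
    if deg_p == deg_q:
        # Ratio of the leading coefficients
        return Fraction(lead_p, lead_q)
    return Fraction(0)

print(limit_at_infinity([3, 0, 1], [2, 5, 0]))   # (3x^2 + 1) / (2x^2 + 5x)
print(limit_at_infinity([1, 0], [1, 0, 0]))      # x / x^2
print(limit_at_infinity([-1, 0, 0], [2, 1]))     # -x^2 / (2x + 1)
```

For the limit as x approaches −∞, the sign in the first rule would also depend on the parity of the degree difference, which this sketch deliberately leaves out.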

Functions of more than one variable

Ordinary limits

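By noting that |x − p| represents a distance, the definition of a limit can be extended to functions of more than one variable. In the case of a function $f : S \times T \to \mathbb{R}$ defined on $S \times T \subseteq \mathbb{R}^2$, we define the limit as follows: the limit of f as (x, y) approaches (p, q) is L, written

$$\lim_{(x, y) \to (p, q)} f(x, y) = L,$$

if the following condition holds:

- For every ε > 0, there exists a δ > 0 such that for all x in S and y in T, whenever $0 < \sqrt{(x - p)^2 + (y - q)^2} < \delta$, we have |f(x, y) − L| < ε,[13]

or formally:

$$(\forall \varepsilon > 0)\,(\exists \delta > 0)\,(\forall x \in S)\,(\forall y \in T)\,\left(0 < \sqrt{(x - p)^2 + (y - q)^2} < \delta \implies |f(x, y) - L| < \varepsilon\right).$$

Here $\sqrt{(x - p)^2 + (y - q)^2}$ is the Euclidean distance between (x, y) and (p, q). (This can in fact be replaced by any norm ||(x, y) − (p, q)||, and be extended to any number of variables.)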

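For example, we may say $\lim_{(x, y) \to (0, 0)} \tfrac{x^4}{x^2 + y^2} = 0$ because for every ε > 0, we can take $\delta = \sqrt{\varepsilon}$ such that for all real x ≠ 0 and real y ≠ 0, if $0 < \sqrt{x^2 + y^2} < \delta$, then |f(x, y) − 0| < ε.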

Similar to the case in single variable, the value of f at (p, q) does not matter in this definition of limit.

For such a multivariable limit to exist, this definition requires that the value of f approach L along every possible path approaching (p, q).[14] In the above example, the function satisfies this condition. This can be seen by considering the polar coordinates $(x, y) = (r \cos\theta, r \sin\theta) \to (0, 0)$, which gives

$$\lim_{r \to 0} f(r \cos\theta, r \sin\theta) = \lim_{r \to 0} \frac{r^4 \cos^4\theta}{r^2} = \lim_{r \to 0} r^2 \cos^4\theta.$$

Here θ = θ(r) is a function of r which controls the shape of the path along which f is approaching (p, q). Since cos θ is bounded between [−1, 1], by the sandwich theorem, this limit tends to 0.

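In contrast, the function $f(x, y) = \tfrac{xy}{x^2 + y^2}$ does not have a limit at (0, 0). Taking the path (x, y) = (t, 0) → (0, 0), we obtain

$$\lim_{t \to 0} f(t, 0) = \lim_{t \to 0} \frac{0}{t^2} = 0,$$

while taking the path (x, y) = (t, t) → (0, 0), we obtain

$$\lim_{t \to 0} f(t, t) = \lim_{t \to 0} \frac{t^2}{t^2 + t^2} = \frac{1}{2}.$$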

Since the two values do not agree, f does not tend to a single value as (x, y) approaches (0, 0).
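The path dependence can be made concrete with exact arithmetic. The sketch below assumes the function in question is f(x, y) = xy/(x² + y²), which gives the value 0 along the path (t, 0) and the value 1/2 along the path (t, t); the variable names are illustrative.

```python
from fractions import Fraction

def f(x, y):
    # Assumed example function: xy / (x^2 + y^2), undefined at (0, 0)
    return (x * y) / (x * x + y * y)

# Parameter values t = 1/10, 1/100, ... shrinking towards 0
ts = [Fraction(1, 10 ** k) for k in range(1, 8)]

along_x_axis = [f(t, Fraction(0)) for t in ts]   # path (t, 0)
along_diagonal = [f(t, t) for t in ts]           # path (t, t)

print(along_x_axis[-1], along_diagonal[-1])
```

Because the function is constant along each of these two paths, the disagreement between 0 and 1/2 persists however small t becomes.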

Multiple limits

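Although less commonly used, there is another type of limit for a multivariable function, known as the multiple limit. For a two-variable function, this is the double limit.[15] Let $f : S \times T \to \mathbb{R}$ be defined on $S \times T \subseteq \mathbb{R}^2$. We say the double limit of f as x approaches p and y approaches q is L, written

$$\lim_{x \to p,\; y \to q} f(x, y) = L,$$

if the following condition holds:

- For every ε > 0, there exists a δ > 0 such that for all x in S and y in T, whenever 0 < |x − p| < δ and 0 < |y − q| < δ, we have |f(x, y) − L| < ε.

For such a double limit to exist, this definition requires that the value of f approach L along every possible path approaching (p, q), excluding the two lines x = p and y = q. As a result, the multiple limit is a weaker notion than the ordinary limit: if the ordinary limit exists and equals L, then the multiple limit exists and also equals L. The converse is not true: the existence of the multiple limit does not imply the existence of the ordinary limit. Consider the example

$$f(x, y) = \begin{cases} 1 & \text{for } xy \ne 0 \\ 0 & \text{for } xy = 0 \end{cases}$$

where $\lim_{x \to 0,\; y \to 0} f(x, y) = 1$ but $\lim_{(x, y) \to (0, 0)} f(x, y)$ does not exist.

If the domain of f is restricted to $(S \setminus \{p\}) \times (T \setminus \{q\})$, then the two definitions of limits coincide.[15]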

Multiple limits at infinity

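The concept of multiple limit can extend to the limit at infinity, in a way similar to that of a single variable function. For $f : S \times T \to \mathbb{R}$, we say the double limit of f as x and y approach infinity is L, written

$$\lim_{x \to \infty,\; y \to \infty} f(x, y) = L,$$

if the following condition holds:

- For every ε > 0, there exists a c > 0 such that for all x in S and y in T, whenever x > c and y > c, we have |f(x, y) − L| < ε.

We say the double limit of f as x and y approach minus infinity is L, written

$$\lim_{x \to -\infty,\; y \to -\infty} f(x, y) = L,$$

if the following condition holds:

- For every ε > 0, there exists a c > 0 such that for all x in S and y in T, whenever x < −c and y < −c, we have |f(x, y) − L| < ε.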

Pointwise limits and uniform limits

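Let $f : S \times T \to \mathbb{R}$. Instead of taking the limit as (x, y) → (p, q), we may consider taking the limit of just one variable, say, x → p, to obtain a single-variable function of y, namely $g : T \to \mathbb{R}$. In fact, this limiting process can be done in two distinct ways. The first one is called the pointwise limit. We say the pointwise limit of f as x approaches p is g, denoted $\lim_{x \to p} f(x, y) = g(y)$, or $\lim_{x \to p} f(x, y) = g(y)$ pointwise.

Alternatively, we may say f tends to g pointwise as x approaches p, denoted $f(x, y) \to g(y)$ as $x \to p$, or $f(x, y) \to g(y)$ pointwise as $x \to p$.

This limit exists if the following holds:

- For every ε > 0 and every fixed y in T, there exists a δ(ε, y) > 0 such that for all x in S, whenever 0 < |x − p| < δ, we have |f(x, y) − g(y)| < ε.

Here, δ = δ(ε, y) is a function of both ε and y. Each δ is chosen for a specific point of y. Hence we say the limit is pointwise in y. For example, $f(x, y) = \tfrac{x}{\cos y}$ has a pointwise limit of the constant zero function as x → 0, because for every fixed y, the limit is clearly 0. This argument fails if y is not fixed: if y is very close to π/2, the value of the fraction may deviate from 0.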

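This leads to another definition of limit, namely the uniform limit. We say the uniform limit of f on T as x approaches p is g, denoted $\underset{x \to p,\; y \in T}{\mathrm{unif}\,\lim} f(x, y) = g(y)$, or $\lim_{x \to p} f(x, y) = g(y)$ uniformly on T.

Alternatively, we may say f tends to g uniformly on T as x approaches p, denoted $f(x, y) \rightrightarrows g(y)$ on T as $x \to p$, or $f(x, y) \to g(y)$ uniformly on T as $x \to p$.

This limit exists if the following holds:

- For every ε > 0, there exists a δ(ε) > 0 such that for all x in S and y in T, whenever 0 < |x − p| < δ, we have |f(x, y) − g(y)| < ε.

Here, δ = δ(ε) is a function of only ε but not y. In other words, δ is uniformly applicable to all y in T. Hence we say the limit is uniform in y. For example, $f(x, y) = x \cos y$ has a uniform limit of the constant zero function as x → 0, because for all real y, cos y is bounded between [−1, 1]. Hence no matter how y behaves, we may use the sandwich theorem to show that the limit is 0.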
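The difference between the two notions shows up when the worst case over y is measured for a fixed small x. The sketch below assumes the two example functions are x/cos y (pointwise only) and x·cos y (uniform), in line with the remarks about y near π/2 and about cos y being bounded; the sample grid of y values is an illustrative choice.

```python
import math

def f_pointwise(x, y):
    # Assumed example: x / cos(y), unbounded in y near pi/2
    return x / math.cos(y)

def f_uniform(x, y):
    # Assumed example: x * cos(y), bounded by |x| for every y
    return x * math.cos(y)

x = 1e-6
# y values creeping up to pi/2 from below
ys = [math.pi / 2 - 10.0 ** (-k) for k in range(1, 10)]

worst_pointwise = max(abs(f_pointwise(x, y)) for y in ys)
worst_uniform = max(abs(f_uniform(x, y)) for y in ys)

print(worst_pointwise, worst_uniform)
```

Even with x as small as 10⁻⁶, the first function is far from 0 for some y, so no single δ works for all y; the second stays within |x| of 0 for every y.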

Iterated limits

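Let $f : S \times T \to \mathbb{R}$. We may consider taking the limit of just one variable, say, x → p, to obtain a single-variable function of y, namely $g : T \to \mathbb{R}$, and then take the limit in the other variable, namely y → q, to get a number L. Symbolically,

$$\lim_{y \to q} \lim_{x \to p} f(x, y) = \lim_{y \to q} g(y) = L.$$

This limit is known as the iterated limit of the multivariable function.[17] The order of taking limits may affect the result, i.e.,

$$\lim_{y \to q} \lim_{x \to p} f(x, y) \ne \lim_{x \to p} \lim_{y \to q} f(x, y)$$

in general.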

A sufficient condition of equality is given by the Moore-Osgood theorem, which requires the limit to be uniform on T.[18]
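A hypothetical example where the order of the iterated limits matters is f(x, y) = x²/(x² + y²) at (0, 0): sending x to 0 first gives 0 for every fixed y ≠ 0, while sending y to 0 first gives 1 for every fixed x ≠ 0. The sketch below imitates each order by making the inner variable far smaller than the outer one; this function and the particular step sizes are assumptions for illustration, and the evaluation is a numerical hint rather than a proof.

```python
from fractions import Fraction

def f(x, y):
    # Hypothetical example: x^2 / (x^2 + y^2), undefined at (0, 0)
    return (x * x) / (x * x + y * y)

outer = Fraction(1, 10 ** 3)    # variable sent to 0 second
inner = Fraction(1, 10 ** 12)   # variable sent to 0 first (much smaller)

x_first = f(inner, outer)   # imitates lim_{y->0} lim_{x->0} f(x, y)
y_first = f(outer, inner)   # imitates lim_{x->0} lim_{y->0} f(x, y)

print(float(x_first), float(y_first))
```

The first value is close to 0 and the second is close to 1, matching the two different iterated limits.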

Functions on metric spaces

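Suppose M and N are subsets of metric spaces A and B, respectively, and f : M → N is defined between M and N, with x ∈ M, p a limit point of M and L ∈ N. It is said that the limit of f as x approaches p is L, written

$$\lim_{x \to p} f(x) = L,$$

if the following property holds:

- For every ε > 0, there exists a δ > 0 such that for all points x ∈ M, $0 < d_A(x, p) < \delta$ implies $d_B(f(x), L) < \varepsilon$.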

Again, note that p need not be in the domain of f, nor does L need to be in the range of f, and even if f(p) is defined it need not be equal to L.

Euclidean metric

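The limit in Euclidean space is a direct generalization of limits to vector-valued functions. For example, we may consider a function $f : S \times T \to \mathbb{R}^3$ such that $f(x, y) = (f_1(x, y), f_2(x, y), f_3(x, y))$. Then, under the usual Euclidean metric, $\lim_{(x, y) \to (p, q)} f(x, y) = (L_1, L_2, L_3)$ if the following holds:

- For every ε > 0, there exists a δ > 0 such that for all x in S and y in T, $0 < \sqrt{(x - p)^2 + (y - q)^2} < \delta$ implies $\sqrt{(f_1 - L_1)^2 + (f_2 - L_2)^2 + (f_3 - L_3)^2} < \varepsilon$.

In this example, the functions concerned are finite-dimensional vector-valued functions. In this case, the limit theorem for vector-valued functions states that if the limit of each component exists, then the limit of the vector-valued function equals the vector of the component limits:[20]

$$\lim_{(x, y) \to (p, q)} \bigl(f_1(x, y), f_2(x, y), f_3(x, y)\bigr) = \left(\lim_{(x, y) \to (p, q)} f_1(x, y),\; \lim_{(x, y) \to (p, q)} f_2(x, y),\; \lim_{(x, y) \to (p, q)} f_3(x, y)\right).$$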

Manhattan metric

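One might also want to consider spaces other than Euclidean space. An example would be the Manhattan space. Consider $f : S \to \mathbb{R}^2$ such that $f(x) = (f_1(x), f_2(x))$. Then, under the Manhattan metric, $\lim_{x \to p} f(x) = (L_1, L_2)$ if the following holds:

- For every ε > 0, there exists a δ > 0 such that for all x in S, 0 < |x − p| < δ implies $|f_1 - L_1| + |f_2 - L_2| < \varepsilon$.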

Since this is also a finite-dimension vector-valued function, the limit theorem stated above also applies.[21]

Uniform metric

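Finally, we will discuss the limit in function space, which has infinite dimensions. Consider a function f(x, y) in the function space $S \times T \to \mathbb{R}$. We want to find out, as x approaches p, how f(x, y) will tend to another function g(y), which is in the function space $T \to \mathbb{R}$. The "closeness" in this function space may be measured under the uniform metric.[22] Then, we will say the uniform limit of f on T as x approaches p is g and write $\underset{x \to p,\; y \in T}{\mathrm{unif}\,\lim} f(x, y) = g(y)$, or $\lim_{x \to p} f(x, y) = g(y)$ uniformly on T,

if the following holds:

- For every ε > 0, there exists a δ > 0 such that for all x in S, 0 < |x − p| < δ implies $\sup_{y \in T} |f(x, y) - g(y)| < \varepsilon$.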

In fact, one can see that this definition is equivalent to that of the uniform limit of a multivariable function introduced in the previous section.

Functions on topological spaces

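Suppose X and Y are topological spaces with Y a Hausdorff space. Let p be a limit point of Ω ⊆ X, and L ∈ Y. For a function f : Ω → Y, it is said that the limit of f as x approaches p is L, written

$$\lim_{x \to p} f(x) = L,$$

if the following property holds:

- For every open neighborhood V of L, there exists an open neighborhood U of p such that f(U ∩ Ω − {p}) ⊆ V.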

This last part of the definition can also be phrased "there exists an open punctured neighbourhood U of p such that f(U ∩ Ω) ⊆ V".

The domain of f does not need to contain p. If it does, then the value of f at p is irrelevant to the definition of the limit. In particular, if the domain of f is X − {p} (or all of X), then the limit of f as x → p exists and is equal to L if, for all subsets Ω of X with limit point p, the limit of the restriction of f to Ω exists and is equal to L. Sometimes this criterion is used to establish the non-existence of the two-sided limit of a function on $\mathbb{R}$ by showing that the one-sided limits either fail to exist or do not agree. Such a view is fundamental in the field of general topology, where limits and continuity at a point are defined in terms of special families of subsets, called filters, or generalized sequences known as nets.

Alternatively, the requirement that Y be a Hausdorff space can be relaxed to the assumption that Y be a general topological space, but then the limit of a function may not be unique. In particular, one can no longer talk about the limit of a function at a point, but rather a limit or the set of limits at a point.

A function is continuous at a point p that is both in its domain and a limit point of its domain if and only if f(p) is the (or, in the general case, a) limit of f(x) as x tends to p.

There is another type of limit of a function, namely the sequential limit. Let f : X → Y be a mapping from a topological space X into a Hausdorff space Y, p ∈ X a limit point of X and L ∈ Y. The sequential limit of f as x tends to p is L if

- For every sequence $(x_n)$ in X − {p} that converges to p, the sequence $f(x_n)$ converges to L.

If L is the limit (in the sense above) of f as x approaches p, then it is a sequential limit as well; however, the converse need not hold in general. If in addition X is metrizable, then L is the sequential limit of f as x approaches p if and only if it is the limit (in the sense above) of f as x approaches p.

Other characterizations

In terms of sequences

For functions on the real line, one way to define the limit of a function is in terms of the limit of sequences. (This definition is usually attributed to Eduard Heine.) In this setting, $\lim_{x \to a} f(x) = L$ if, and only if, for all sequences $x_n$ (with $x_n$ not equal to a for all n) converging to a, the sequence $f(x_n)$ converges to L. It was shown by Sierpiński in 1916 that proving the equivalence of this definition and the definition above requires, and is equivalent to, a weak form of the axiom of choice. Note that defining what it means for a sequence $x_n$ to converge to a requires the epsilon-delta method.

As was the case for Weierstrass's definition, a more general Heine definition applies to functions defined on subsets of the real line. Let f be a real-valued function with the domain Dm(f). Let a be the limit of a sequence of elements of Dm(f) \ {a}. Then the limit (in this sense) of f is L as x approaches a if for every sequence $x_n$ ∈ Dm(f) \ {a} (so that for all n, $x_n$ is not equal to a) that converges to a, the sequence $f(x_n)$ converges to L. This is the same as the definition of a sequential limit in the preceding section, obtained by regarding the subset Dm(f) of $\mathbb{R}$ as a metric space with the induced metric.
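The sequential criterion gives a practical way to show that a limit does not exist: it is enough to find two sequences converging to the same point along which the function values converge to different numbers. The sketch below uses the hypothetical example f(x) = sin(1/x) at a = 0, with two illustrative sequences chosen so that the function values sit near 0 and near 1 respectively.

```python
import math

def f(x):
    # Hypothetical example: sin(1/x), defined for x != 0
    return math.sin(1.0 / x)

ns = range(1, 200)
zeros_seq = [1.0 / (n * math.pi) for n in ns]              # x_n -> 0, f(x_n) near 0
peaks_seq = [1.0 / ((2 * n + 0.5) * math.pi) for n in ns]  # x_n -> 0, f(x_n) near 1

along_zeros = [f(x) for x in zeros_seq]
along_peaks = [f(x) for x in peaks_seq]

print(along_zeros[-1], along_peaks[-1])
```

Both sequences tend to 0 without ever equalling 0, yet the values of f along them stay near 0 and near 1, so by the sequential criterion sin(1/x) has no limit at 0.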

In non-standard calculus

In non-standard calculus the limit of a function is defined by: $\lim_{x \to a} f(x) = L$ if and only if for all $x \in \mathbb{R}^*$, $f^*(x) - L$ is infinitesimal whenever x − a is infinitesimal. Here $\mathbb{R}^*$ are the hyperreal numbers and f* is the natural extension of f to the non-standard real numbers. Keisler proved that such a hyperreal definition of limit reduces the quantifier complexity by two quantifiers.[23] On the other hand, Hrbacek writes that for the definitions to be valid for all hyperreal numbers they must implicitly be grounded in the ε-δ method, and claims that, from the pedagogical point of view, the hope that non-standard calculus could be done without ε-δ methods cannot be realized in full.[24] Błaszczyk et al. detail the usefulness of microcontinuity in developing a transparent definition of uniform continuity, and characterize Hrbacek's criticism as a "dubious lament".[25]

In terms of nearness

At the 1908 International Congress of Mathematicians, F. Riesz introduced an alternative way of defining limits and continuity, using a concept called "nearness".[26] A point x is defined to be near a set $A \subseteq \mathbb{R}$ if for every r > 0 there is a point a ∈ A so that |x − a| < r. In this setting, $\lim_{x \to a} f(x) = L$ if and only if for all $A \subseteq \mathbb{R}$, L is near f(A) whenever a is near A. Here f(A) is the set $\{f(x) \mid x \in A\}$. This definition can also be extended to metric and topological spaces.

Relationship to continuity

The notion of the limit of a function is very closely related to the concept of continuity. A function f is said to be continuous at c if it is both defined at c and its value at c equals the limit of f as x approaches c:

$$\lim_{x \to c} f(x) = f(c).$$

(We have here assumed that c is a limit point of the domain of f.)

Properties

If a function f is real-valued, then the limit of f at p is L if and only if both the right-handed limit and left-handed limit of f at p exist and are equal to L.[27]

The function f is continuous at p if and only if the limit of f(x) as x approaches p exists and is equal to f(p). If f : M → N is a function between metric spaces M and N, then it is equivalent that f transforms every sequence in M which converges towards p into a sequence in N which converges towards f(p).

If N is a normed vector space, then the limit operation is linear in the following sense: if the limit of f(x) as x approaches p is L and the limit of g(x) as x approaches p is P, then the limit of f(x) + g(x) as x approaches p is L + P. If a is a scalar from the base field, then the limit of af(x) as x approaches p is aL.

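If f and g are real-valued (or complex-valued) functions, then taking the limit of an operation on f(x) and g(x) (e.g., f + g, f − g, f × g, f / g, f^g) under certain conditions is compatible with the operation of limits of f(x) and g(x). This fact is often called the algebraic limit theorem. The main condition needed to apply the following rules is that the limits on the right-hand sides of the equations exist (in other words, these limits are finite values including 0). Additionally, the identity for division requires that the denominator on the right-hand side is non-zero (division by 0 is not defined), and the identity for exponentiation requires that the base is positive, or zero while the exponent is positive (finite).

$$\begin{aligned}
\lim_{x \to p} (f(x) + g(x)) &= \lim_{x \to p} f(x) + \lim_{x \to p} g(x) \\
\lim_{x \to p} (f(x) - g(x)) &= \lim_{x \to p} f(x) - \lim_{x \to p} g(x) \\
\lim_{x \to p} (f(x) \cdot g(x)) &= \lim_{x \to p} f(x) \cdot \lim_{x \to p} g(x) \\
\lim_{x \to p} \frac{f(x)}{g(x)} &= \frac{\lim_{x \to p} f(x)}{\lim_{x \to p} g(x)} \\
\lim_{x \to p} f(x)^{g(x)} &= \left(\lim_{x \to p} f(x)\right)^{\lim_{x \to p} g(x)}
\end{aligned}$$

These rules are also valid for one-sided limits, including when p is ∞ or −∞. In each rule above, when one of the limits on the right is ∞ or −∞, the limit on the left may sometimes still be determined by the following rules.

$$\begin{aligned}
q + \infty &= \infty \text{ if } q \ne -\infty \\
q \times \infty &= \begin{cases} \infty & \text{if } q > 0 \\ -\infty & \text{if } q < 0 \end{cases} \\
\frac{q}{\infty} &= 0 \text{ if } q \ne \infty \text{ and } q \ne -\infty \\
\infty^q &= \begin{cases} 0 & \text{if } q < 0 \\ \infty & \text{if } q > 0 \end{cases} \\
q^\infty &= \begin{cases} 0 & \text{if } 0 < q < 1 \\ \infty & \text{if } q > 1 \end{cases} \\
q^{-\infty} &= \begin{cases} \infty & \text{if } 0 < q < 1 \\ 0 & \text{if } q > 1 \end{cases}
\end{aligned}$$

(see also Extended real number line).

In other cases the limit on the left may still exist, although the right-hand side, called an indeterminate form, does not allow one to determine the result. This depends on the functions f and g. These indeterminate forms are:

$$\frac{0}{0}, \quad \frac{\pm\infty}{\pm\infty}, \quad 0 \times \pm\infty, \quad \infty + (-\infty), \quad 0^0, \quad \infty^0, \quad 1^{\pm\infty}.$$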

See further L'Hôpital's rule below and Indeterminate form.

Limits of compositions of functions

In general, from knowing that $\lim_{y \to b} f(y) = c$ and $\lim_{x \to a} g(x) = b$, it does not follow that $\lim_{x \to a} f(g(x)) = c$. However, this "chain rule" does hold if one of the following additional conditions holds:

- f(b) = c (that is, f is continuous at b), or

- g does not take the value b near a (that is, there exists a δ > 0 such that if 0 < |x − a| < δ then |g(x) − b| > 0).

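As an example of this phenomenon, consider the following function that violates both additional restrictions:

$$f(x) = g(x) = \begin{cases} 0 & \text{if } x \ne 0 \\ 1 & \text{if } x = 0 \end{cases}$$

Since the value at f(0) is a removable discontinuity, $\lim_{x \to a} f(x) = 0$ for all a. Thus, the naïve chain rule would suggest that the limit of f(f(x)) is 0. However, it is the case that

$$f(f(x)) = \begin{cases} 1 & \text{if } x \ne 0 \\ 0 & \text{if } x = 0 \end{cases}$$

and so $\lim_{x \to a} f(f(x)) = 1$ for all a.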
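The failure of the naïve chain rule can be reproduced directly. The sketch below assumes the function in the example is f(x) = 0 for x ≠ 0 and f(0) = 1 (used for both the inner and the outer function) and evaluates f and f∘f at points approaching 0 from both sides; the sample points are an illustrative choice.

```python
def f(x):
    # Assumed example: 0 everywhere except at 0, where the value is 1
    return 0 if x != 0 else 1

# Points approaching 0 from both sides, never equal to 0
xs = [s * 10.0 ** (-k) for k in range(1, 10) for s in (1, -1)]

inner = [f(x) for x in xs]        # values of f near 0
composed = [f(f(x)) for x in xs]  # values of f(f(x)) near 0

print(set(inner), set(composed))
```

Near 0 the inner values are all 0, so the limit of f is 0, but the composition is constantly 1 there: the inner function keeps hitting exactly the point where the outer function is discontinuous.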

Limits of special interest

Rational functions

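For n a nonnegative integer and constants $a_0, a_1, \dots, a_n$ and $b_0, b_1, \dots, b_n$ with $b_n \ne 0$,

$$\lim_{x \to \infty} \frac{a_n x^n + a_{n-1} x^{n-1} + \dots + a_0}{b_n x^n + b_{n-1} x^{n-1} + \dots + b_0} = \frac{a_n}{b_n}.$$

This can be proven by dividing both the numerator and denominator by $x^n$. If the numerator is a polynomial of higher degree, the limit does not exist. If the denominator is of higher degree, the limit is 0.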

Trigonometric functions

Exponential functions

Logarithmic functions

L'Hôpital's rule

This rule uses derivatives to find limits of indeterminate forms 0/0 or ±∞/∞, and only applies to such cases. Other indeterminate forms may be manipulated into this form. Given two functions f(x) and g(x), defined over an open interval I containing the desired limit point c, then if:

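- $\lim_{x \to c} f(x) = \lim_{x \to c} g(x) = 0$, or $\lim_{x \to c} f(x) = \pm \lim_{x \to c} g(x) = \pm\infty$, and

- f and g are differentiable over $I \setminus \{c\}$, and

- $g'(x) \ne 0$ for all $x \in I \setminus \{c\}$, and

- $\lim_{x \to c} \tfrac{f'(x)}{g'(x)}$ exists,

then:

$$\lim_{x \to c} \frac{f(x)}{g(x)} = \lim_{x \to c} \frac{f'(x)}{g'(x)}.$$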

Normally, the first condition is the most important one.

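For example:

$$\lim_{x \to 0} \frac{\sin(2x)}{\sin(3x)} = \lim_{x \to 0} \frac{2\cos(2x)}{3\cos(3x)} = \frac{2 \cdot 1}{3 \cdot 1} = \frac{2}{3}.$$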

Summations and integrals

Specifying an infinite bound on a summation or integral is a common shorthand for specifying a limit.

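A short way to write the limit $\lim_{n \to \infty} \sum_{i=s}^{n} f(i)$ is $\sum_{i=s}^{\infty} f(i)$. An important example of limits of sums such as these are series.

A short way to write the limit $\lim_{x \to \infty} \int_a^x f(t)\,dt$ is $\int_a^\infty f(t)\,dt$.

A short way to write the limit $\lim_{x \to -\infty} \int_x^b f(t)\,dt$ is $\int_{-\infty}^b f(t)\,dt$.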
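The shorthand for an infinite sum can be unpacked by computing partial sums explicitly. The sketch below uses the hypothetical summand f(i) = (1/2)^i starting at i = 1, whose partial sums approach 1; exact rational arithmetic makes the remaining gap visible. The helper name `partial_sum` is illustrative.

```python
from fractions import Fraction

def partial_sum(n):
    """Sum of (1/2)**i for i = 1..n, computed exactly."""
    return sum(Fraction(1, 2 ** i) for i in range(1, n + 1))

# Distance between the n-th partial sum and the limiting value 1
gaps = [1 - partial_sum(n) for n in (1, 5, 10, 20)]

for n, gap in zip((1, 5, 10, 20), gaps):
    print(n, gap)
```

The gap after n terms is exactly (1/2)^n, which can be made smaller than any ε > 0 by taking n large enough; that is the sense in which the infinite sum equals 1.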

See also

- Big O notation – Describes limiting behavior of a function

- L'Hôpital's rule – Mathematical rule for evaluating some limits

- List of limits

- Limit of a sequence – Value to which tends an infinite sequence

- Limit point – A point x in a topological space, all of whose neighborhoods contain some other point in a given subset that is different from x

- Limit superior and limit inferior – Bounds of a sequence

- Net (mathematics) – A generalization of a sequence of points

- Non-standard calculus

- Squeeze theorem – Method for finding limits in calculus

- Subsequential limit – The limit of some subsequence

Notes

- ↑ Felscher, Walter (2000), "Bolzano, Cauchy, Epsilon, Delta", American Mathematical Monthly 107 (9): 844–862, doi:10.2307/2695743

- ↑ 2.0 2.1 Grabiner, Judith V. (1983), "Who Gave You the Epsilon? Cauchy and the Origins of Rigorous Calculus", American Mathematical Monthly 90 (3): 185–194, doi:10.2307/2975545, collected in Who Gave You the Epsilon?, ISBN 978-0-88385-569-0 pp. 5–13. Also available at: http://www.maa.org/pubs/Calc_articles/ma002.pdf

- ↑ Sinkevich, G. I. (2017), "Historia epsylontyki", Antiquitates Mathematicae (Cornell University) 10, doi:10.14708/am.v10i0.805

- ↑ Burton, David M. (1997), The History of Mathematics: An introduction (Third ed.), New York: McGraw–Hill, pp. 558–559, ISBN 978-0-07-009465-9

- ↑ Miller, Jeff (1 December 2004), Earliest Uses of Symbols of Calculus, http://jeff560.tripod.com/calculus.html, retrieved 2008-12-18

- ↑ 6.0 6.1 Swokowski, Earl W. (1979), Calculus with Analytic Geometry (2nd ed.), Taylor & Francis, p. 58, https://books.google.com/books?id=gJlAOiCZRnwC&pg=PA58

- ↑ Swokowski (1979), p. 72–73.

- ↑ (Bartle Sherbert)

- ↑ 9.0 9.1 (Bartle 1967)

- ↑ (Hubbard 2015)

- ↑ For example, (Apostol 1974), (Courant 1924), (Hardy 1921), (Rudin 1964), (Whittaker Watson) all take "limit" to mean the deleted limit.

- ↑ For example, Limit at Encyclopedia of Mathematics

- ↑ Stewart (2020), "Chapter 14.2 Limits and Continuity", Multivariable Calculus (9th ed.), p. 952, ISBN 9780357042922

- ↑ Stewart (2020), p. 953.

- ↑ 15.0 15.1 15.2 Zakon, Elias (2011), "Chapter 4. Function Limits and Continuity", Mathematical Analysis, Volume I, pp. 219–220, ISBN 9781617386473

- ↑ 16.0 16.1 Zakon (2011), p. 220.

- ↑ Zakon (2011), p. 223.

- ↑ Taylor, Angus E. (2012), General Theory of Functions and Integration, Dover Books on Mathematics Series, pp. 139–140, ISBN 9780486152141

- ↑ Rudin, W. (1986), Principles of mathematical analysis, McGraw - Hill Book C, pp. 84, OCLC 962920758, http://worldcat.org/oclc/962920758

- ↑ 20.0 20.1 Hartman, Gregory (2019) (in en), The Calculus of Vector-Valued Functions II, https://math.libretexts.org/Courses/Georgia_State_University_-_Perimeter_College/MATH_2215%3A_Calculus_III/13%3A_Vector-valued_Functions/The_Calculus_of_Vector-Valued_Functions_II, retrieved 2022-10-31

- ↑ Zakon (2011), p. 172.

- ↑ Rudin, W (1986), Principles of mathematical analysis, McGraw - Hill Book C, pp. 150–151, OCLC 962920758, http://worldcat.org/oclc/962920758

- ↑ Keisler, H. Jerome (2008), "Quantifiers in limits", Andrzej Mostowski and foundational studies, IOS, Amsterdam, pp. 151–170, http://www.math.wisc.edu/~keisler/limquant7.pdf

- ↑ Hrbacek, K. (2007), "Stratified Analysis?", in Van Den Berg, I.; Neves, V., The Strength of Nonstandard Analysis, Springer

- ↑ Błaszczyk, Piotr (2012), "Ten misconceptions from the history of analysis and their debunking", Foundations of Science 18 (1): 43–74, doi:10.1007/s10699-012-9285-8

- ↑ F. Riesz (7 April 1908), "Stetigkeitsbegriff und abstrakte Mengenlehre (The Concept of Continuity and Abstract Set Theory)", 1908 International Congress of Mathematicians

- ↑ Swokowski (1979), p. 73.

References

- Apostol, Tom M. (1974). Mathematical Analysis (2 ed.). Addison–Wesley. ISBN 0-201-00288-4.

- Bartle, Robert (1967). The elements of real analysis. Wiley.

- Bartle, Robert G.; Sherbert, Donald R. (2000). Introduction to real analysis. Wiley.

- Courant, Richard (1924) (in de). Vorlesungen über Differential- und Integralrechnung. Springer.

- Hardy, G. H. (1921). A course in pure mathematics. Cambridge University Press.

- Hubbard, John H. (2015). Vector calculus, linear algebra, and differential forms: A unified approach (5th ed.). Matrix Editions.

- Page, Warren; Hersh, Reuben; Selden, Annie et al., eds (2002). "Media Highlights". The College Mathematics 33 (2): 147–154.

- Rudin, Walter (1964). Principles of mathematical analysis. McGraw-Hill.

- Sutherland, W. A. (1975). Introduction to Metric and Topological Spaces. Oxford: Oxford University Press. ISBN 0-19-853161-3.

- Whittaker; Watson (1904). A Course of Modern Analysis. Cambridge University Press.

External links

- MacTutor History of Weierstrass.

- MacTutor History of Bolzano

- Visual Calculus by Lawrence S. Husch, University of Tennessee (2001)
