Lyapunov exponent

From HandWiki - Reading time: 11 min

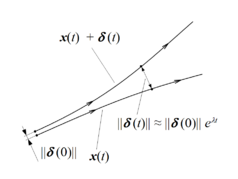

In mathematics, the Lyapunov exponent or Lyapunov characteristic exponent of a dynamical system is a quantity that characterizes the rate of separation of infinitesimally close trajectories. Quantitatively, two trajectories in phase space with initial separation vector $\delta\mathbf{Z}_0$ diverge (provided that the divergence can be treated within the linearized approximation) at a rate given by

$$|\delta\mathbf{Z}(t)| \approx e^{\lambda t}\,|\delta\mathbf{Z}_0|$$

where $\lambda$ is the Lyapunov exponent.

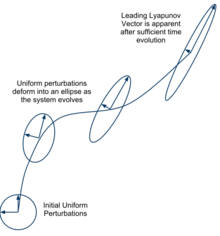

The rate of separation can be different for different orientations of initial separation vector. Thus, there is a spectrum of Lyapunov exponents—equal in number to the dimensionality of the phase space. It is common to refer to the largest one as the maximal Lyapunov exponent (MLE), because it determines a notion of predictability for a dynamical system. A positive MLE is usually taken as an indication that the system is chaotic (provided some other conditions are met, e.g., phase space compactness). Note that an arbitrary initial separation vector will typically contain some component in the direction associated with the MLE, and because of the exponential growth rate, the effect of the other exponents will be obliterated over time.

The exponent is named after Aleksandr Lyapunov.

Definition of the maximal Lyapunov exponent

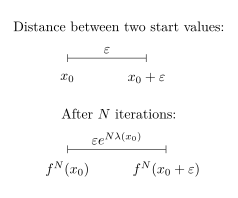

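The maximal Lyapunov exponent can be defined as follows:

$$\lambda = \lim_{t\to\infty}\,\lim_{|\delta\mathbf{Z}_0|\to 0}\frac{1}{t}\ln\frac{|\delta\mathbf{Z}(t)|}{|\delta\mathbf{Z}_0|}$$

Here $\delta\mathbf{Z}(t)$ is the separation vector at time $t$ and $\delta\mathbf{Z}_0$ is its initial value. The limit $|\delta\mathbf{Z}_0|\to 0$ ensures the validity of the linear approximation at any time.[1]

For a discrete-time system (maps or fixed-point iterations) $x_{n+1} = f(x_n)$, for an orbit starting with $x_0$ this translates into:

$$\lambda(x_0) = \lim_{n\to\infty}\frac{1}{n}\sum_{i=0}^{n-1}\ln|f'(x_i)|$$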
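The discrete-time formula can be tried directly on a one-dimensional map. The following is a minimal sketch, assuming the logistic map $f(x) = r x (1 - x)$ as the test system; the function name `logistic_mle`, the starting point, and the iteration counts are illustrative choices, not taken from the references. The limit is replaced by a long finite average, so the result is only an estimate.

```python
import math

def logistic_mle(r, x0=0.2, n_transient=1000, n_iter=100_000):
    """Finite-n estimate of the maximal Lyapunov exponent of x -> r*x*(1-x).

    Averages ln|f'(x_i)| along the orbit, with f'(x) = r*(1 - 2x).
    All default values are illustrative assumptions.
    """
    x = x0
    for _ in range(n_transient):        # discard the initial transient
        x = r * x * (1.0 - x)
    total = 0.0
    for _ in range(n_iter):
        total += math.log(abs(r * (1.0 - 2.0 * x)))   # ln|f'(x_i)|
        x = r * x * (1.0 - x)
    return total / n_iter

# r = 4 is a chaotic case whose exact exponent is ln 2;
# r = 2.5 has a stable fixed point, so the exponent is negative.
print(logistic_mle(4.0), math.log(2.0))
print(logistic_mle(2.5))
```

For $r = 4$ the estimate should approach $\ln 2$, and for $r = 2.5$ the orbit settles on the fixed point $x^* = 0.6$, where $f'(x^*) = -0.5$ and the exponent is $\ln 0.5$.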

Definition of the Lyapunov spectrum

For a dynamical system with evolution equation $\dot{x}_i = f_i(x)$ in an n–dimensional phase space, the spectrum of Lyapunov exponents

$$\{\lambda_1, \lambda_2, \ldots, \lambda_n\},$$

in general, depends on the starting point $x_0$. However, we will usually be interested in the attractor (or attractors) of a dynamical system, and there will normally be one set of exponents associated with each attractor. The choice of starting point may determine which attractor the system ends up on, if there is more than one. (For Hamiltonian systems, which do not have attractors, this is not a concern.) The Lyapunov exponents describe the behavior of vectors in the tangent space of the phase space and are defined from the Jacobian matrix

$$J_{ij}(t) = \left.\frac{d f_i(x)}{d x_j}\right|_{x(t)}.$$

This Jacobian defines the evolution of the tangent vectors, given by the matrix $Y$, via the equation

$$\dot{Y} = J Y$$

with the initial condition $Y_{ij}(0) = \delta_{ij}$. The matrix $Y$ describes how a small change at the point $x(0)$ propagates to the final point $x(t)$. The limit

$$\Lambda = \lim_{t\to\infty}\frac{1}{2t}\log\left(Y(t)\,Y^{T}(t)\right)$$

defines a matrix $\Lambda$ (the conditions for the existence of the limit are given by the Oseledets theorem). The Lyapunov exponents $\lambda_i$ are defined by the eigenvalues of $\Lambda$.

The set of Lyapunov exponents will be the same for almost all starting points of an ergodic component of the dynamical system.
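As a sanity check on this construction, consider a hypothetical two-dimensional linear system whose Jacobian is constant and diagonal, $J = \mathrm{diag}(a, b)$ with real $a \ge b$ (an illustrative assumption, not an example from the references). Writing $Y(t)$ for the tangent-evolution matrix with $Y(0) = I$ and $\Lambda$ for the limit matrix, the definition can be evaluated by hand:

$$\dot{Y} = J Y,\quad Y(0) = I \;\Longrightarrow\; Y(t) = \begin{pmatrix} e^{at} & 0 \\ 0 & e^{bt} \end{pmatrix},\qquad Y(t)\,Y^{T}(t) = \begin{pmatrix} e^{2at} & 0 \\ 0 & e^{2bt} \end{pmatrix}$$

$$\Lambda = \lim_{t\to\infty}\frac{1}{2t}\log\left(Y(t)\,Y^{T}(t)\right) = \begin{pmatrix} a & 0 \\ 0 & b \end{pmatrix}$$

so in this special case the exponents are simply $\lambda_1 = a$ and $\lambda_2 = b$, independent of the starting point.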

Lyapunov exponent for time-varying linearization

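To introduce the Lyapunov exponent, consider a fundamental matrix $X(t)$ consisting of the linearly independent solutions of the first-order approximation of the system (e.g., for linearization along a stationary solution $x_0$ in a continuous system, the fundamental matrix is $\exp\left(\left.\frac{d f^t(x)}{dx}\right|_{x_0} t\right)$). The singular values $\{\alpha_j(X(t))\}_{1}^{n}$ of the matrix $X(t)$ are the square roots of the eigenvalues of the matrix $X(t)^{*}X(t)$. The largest Lyapunov exponent $\lambda_{\max}$ is as follows:[2]

$$\lambda_{\max} = \max_j \limsup_{t\to\infty}\frac{1}{t}\ln\alpha_j(X(t))$$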

A.M. Lyapunov proved that if the system of the first approximation is regular (e.g., all systems with constant and periodic coefficients are regular) and its largest Lyapunov exponent is negative, then the solution of the original system is asymptotically Lyapunov stable. Later, O. Perron showed that the requirement of regularity of the first approximation is essential.
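For a system with constant coefficients the singular-value definition can be evaluated numerically. The sketch below assumes a hypothetical diagonalizable matrix `A` for the first approximation $\dot{x} = A x$, builds the fundamental matrix $X(t) = e^{At}$ from an eigendecomposition, and replaces the $\limsup$ by a finite-time value; the function name `largest_exponent_linear` and the sample matrix are illustrative assumptions.

```python
import numpy as np

def largest_exponent_linear(A, t):
    """Finite-time estimate max_j (1/t) ln alpha_j(X(t)) for x' = A x.

    Assumes A is diagonalizable with real eigenvalues, so that
    X(t) = exp(A t) = P diag(exp(w t)) P^{-1}.
    """
    w, P = np.linalg.eig(A)
    X = (P * np.exp(w * t)) @ np.linalg.inv(P)          # fundamental matrix X(t)
    alphas = np.linalg.svd(X.real, compute_uv=False)    # singular values of X(t)
    return np.log(alphas.max()) / t

# Hypothetical example with eigenvalues -1 and -2 (non-normal on purpose).
A = np.array([[-1.0, 3.0],
              [0.0, -2.0]])
for t in (5.0, 20.0, 80.0):
    print(t, largest_exponent_linear(A, t))
```

The printed values should drift toward the largest eigenvalue of `A`, here $-1$, as `t` grows; a negative limit is the situation covered by Lyapunov's stability result for regular systems.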

Perron effects of largest Lyapunov exponent sign inversion

In 1930 O. Perron constructed an example of a second-order system in which the first approximation has negative Lyapunov exponents along a zero solution of the original system but, at the same time, this zero solution of the original nonlinear system is Lyapunov unstable. Furthermore, in a certain neighborhood of this zero solution almost all solutions of the original system have positive Lyapunov exponents. It is also possible to construct a reverse example in which the first approximation has positive Lyapunov exponents along a zero solution of the original system but, at the same time, this zero solution of the original nonlinear system is Lyapunov stable.[3][4] The effect of sign inversion of Lyapunov exponents of solutions of the original system and the system of first approximation with the same initial data was subsequently called the Perron effect.[3][4]

Perron's counterexample shows that a negative largest Lyapunov exponent does not, in general, indicate stability, and that a positive largest Lyapunov exponent does not, in general, indicate chaos.

Therefore, time-varying linearization requires additional justification.[4]

Basic properties

If the system is conservative (i.e., there is no dissipation), a volume element of the phase space will stay the same along a trajectory. Thus the sum of all Lyapunov exponents must be zero. If the system is dissipative, the sum of Lyapunov exponents is negative.

If the system is a flow and the trajectory does not converge to a single point, one exponent is always zero: the Lyapunov exponent corresponding to the eigenvalue of the limit matrix $\Lambda$ (from the definition of the spectrum) whose eigenvector points in the direction of the flow.
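The statement about the sum of the exponents can be made explicit for a flow. Assuming the tangent-evolution matrix $Y(t)$ obeys $\dot{Y} = J Y$ with $Y(0) = I$, where $J$ is the Jacobian along the trajectory, Liouville's formula gives

$$\sum_{i=1}^{n}\lambda_i = \lim_{t\to\infty}\frac{1}{t}\ln\left|\det Y(t)\right| = \lim_{t\to\infty}\frac{1}{t}\int_0^t \operatorname{tr} J(s)\,ds,$$

that is, the sum equals the time-averaged divergence of the vector field. As an illustration, for the Lorenz equations with the commonly used parameters $\sigma = 10$ and $\beta = 8/3$ (values assumed here for the example), the trace of the Jacobian is constant:

$$\operatorname{tr} J = -(\sigma + 1 + \beta) = -\tfrac{41}{3} \approx -13.67,$$

so the three exponents must add up to this negative number, as expected for a dissipative system.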

Significance of the Lyapunov spectrum

The Lyapunov spectrum can be used to give an estimate of the rate of entropy production, of the fractal dimension, and of the Hausdorff dimension of the considered dynamical system.[5] In particular, from the knowledge of the Lyapunov spectrum it is possible to obtain the so-called Lyapunov dimension (or Kaplan–Yorke dimension) $D_{KY}$, which is defined as follows:

$$D_{KY} = k + \sum_{i=1}^{k}\frac{\lambda_i}{|\lambda_{k+1}|}$$

where $k$ is the maximum integer such that the sum of the $k$ largest exponents is still non-negative. $D_{KY}$ represents an upper bound for the information dimension of the system.[6] Moreover, the sum of all the positive Lyapunov exponents gives an estimate of the Kolmogorov–Sinai entropy according to Pesin's theorem.[7] Along with widely used numerical methods for estimating and computing the Lyapunov dimension, there is an effective analytical approach, which is based on the direct Lyapunov method with special Lyapunov-like functions.[8] The Lyapunov exponents of a bounded trajectory and the Lyapunov dimension of an attractor are invariant under diffeomorphism of the phase space.[9]
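The Kaplan–Yorke formula is straightforward to evaluate once a spectrum is known. The following is a minimal sketch; the function name `kaplan_yorke_dimension` is hypothetical, the handling of the two edge cases (no non-negative partial sum, or no negative partial sum) is a convention chosen here, and the sample spectrum is a set of approximate values often quoted for the Lorenz system, used only as illustrative input.

```python
def kaplan_yorke_dimension(exponents):
    """Kaplan-Yorke (Lyapunov) dimension from a list of Lyapunov exponents."""
    lam = sorted(exponents, reverse=True)   # lambda_1 >= lambda_2 >= ...
    partial = 0.0                           # sum of the k largest exponents
    k = 0
    for value in lam:
        if partial + value < 0.0:           # adding lambda_{k+1} turns the sum negative
            break
        partial += value
        k += 1
    if k == len(lam):                       # partial sums never become negative
        return float(k)
    return k + partial / abs(lam[k])        # lam[k] is lambda_{k+1} (0-based index)

# Illustrative input: approximate exponents often quoted for the Lorenz system.
print(kaplan_yorke_dimension([0.906, 0.0, -14.572]))
```

With this input $k = 2$, so the result is $2 + 0.906/14.572$, a value slightly above 2.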

The multiplicative inverse of the largest Lyapunov exponent is sometimes referred to in the literature as the Lyapunov time, and defines the characteristic e-folding time. For chaotic orbits, the Lyapunov time will be finite, whereas for regular orbits it will be infinite.

Numerical calculation

Generally, the calculation of Lyapunov exponents, as defined above, cannot be carried out analytically, and in most cases one must resort to numerical techniques. An early example, which also constituted the first demonstration of the exponential divergence of chaotic trajectories, was carried out by R. H. Miller in 1964.[10] Currently, the most commonly used numerical procedure estimates the limit matrix $\Lambda$ of the spectrum definition by averaging several finite-time approximations of the limit that defines it.

One of the most widely used and effective numerical techniques for calculating the Lyapunov spectrum of a smooth dynamical system relies on periodic Gram–Schmidt orthonormalization of the Lyapunov vectors, which prevents all the vectors from aligning along the direction of maximal expansion.[11][12][13][14] The Lyapunov spectra of various models have been described,[15] and source code is available for nonlinear systems such as the Hénon map, the Lorenz equations, and a delay differential equation.[16][17][18]
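The reorthonormalization idea can be sketched for the Hénon map $x' = 1 - a x^2 + y$, $y' = b x$. This is a minimal illustration, not the code from the cited sources: it uses NumPy's QR factorization in place of an explicit Gram–Schmidt step (the two agree up to signs), reorthonormalizes at every iteration, and assumes the classical parameters $a = 1.4$, $b = 0.3$; the function name `henon_spectrum` and the iteration counts are arbitrary choices.

```python
import numpy as np

def henon_spectrum(a=1.4, b=0.3, n_transient=1000, n_iter=20_000):
    """Estimate both Lyapunov exponents of the Henon map via repeated QR."""
    x, y = 0.0, 0.0
    for _ in range(n_transient):                 # settle onto the attractor
        x, y = 1.0 - a * x * x + y, b * x
    Q = np.eye(2)                                # orthonormal tangent vectors
    log_sums = np.zeros(2)
    for _ in range(n_iter):
        J = np.array([[-2.0 * a * x, 1.0],       # Jacobian at the current point
                      [b, 0.0]])
        Q, R = np.linalg.qr(J @ Q)               # evolve, then reorthonormalize
        log_sums += np.log(np.abs(np.diag(R)))   # accumulate local stretching rates
        x, y = 1.0 - a * x * x + y, b * x
    return log_sums / n_iter                     # largest exponent first

if __name__ == "__main__":
    lam = henon_spectrum()
    print(lam, lam.sum(), np.log(0.3))
```

A useful consistency check is that the two estimates sum to $\ln b$, because the Jacobian determinant of the Hénon map has constant magnitude $b$.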

For the calculation of Lyapunov exponents from limited experimental data, various methods have been proposed. However, there are many difficulties with applying these methods and such problems should be approached with care. The main difficulty is that the data does not fully explore the phase space, rather it is confined to the attractor which has very limited (if any) extension along certain directions. These thinner or more singular directions within the data set are the ones associated with the more negative exponents. The use of nonlinear mappings to model the evolution of small displacements from the attractor has been shown to dramatically improve the ability to recover the Lyapunov spectrum,[19][20] provided the data has a very low level of noise. The singular nature of the data and its connection to the more negative exponents has also been explored.[21]

Local Lyapunov exponent

Whereas the (global) Lyapunov exponent gives a measure for the total predictability of a system, it is sometimes of interest to estimate the local predictability around a point $x_0$ in phase space. This may be done through the eigenvalues of the Jacobian matrix $J^0(x_0)$. These eigenvalues are also called local Lyapunov exponents.[22] (A word of caution: unlike the global exponents, these local exponents are not invariant under a nonlinear change of coordinates.)

Conditional Lyapunov exponent

This term is normally used regarding synchronization of chaos, in which there are two systems that are coupled, usually in a unidirectional manner so that there is a drive (or master) system and a response (or slave) system. The conditional exponents are those of the response system with the drive system treated as simply the source of a (chaotic) drive signal. Synchronization occurs when all of the conditional exponents are negative.[23]
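The idea can be illustrated with a drive-response pair built from the Lorenz equations. The sketch below assumes a hypothetical configuration in which the $x$ variable of a Lorenz system drives a response subsystem consisting of copies of the $y$ and $z$ equations; the parameter values, step size, run length, and function names are illustrative choices. The conditional exponents are estimated from the Jacobian of the response equations alone, with the drive signal $x(t)$ entering as a time-dependent coefficient.

```python
import numpy as np

SIGMA, RHO, BETA = 10.0, 28.0, 8.0 / 3.0   # assumed Lorenz parameters

def rhs(state):
    """Lorenz drive plus tangent dynamics of the (y, z) response subsystem."""
    x, y, z = state[:3]
    Y = state[3:].reshape(2, 2)
    # Jacobian of y' = x*(RHO - z) - y, z' = x*y - BETA*z with respect to (y, z).
    J = np.array([[-1.0, -x],
                  [x, -BETA]])
    flow = np.array([SIGMA * (y - x), x * (RHO - z) - y, x * y - BETA * z])
    return np.concatenate([flow, (J @ Y).ravel()])

def rk4_step(state, dt):
    """One classical fourth-order Runge-Kutta step."""
    k1 = rhs(state)
    k2 = rhs(state + 0.5 * dt * k1)
    k3 = rhs(state + 0.5 * dt * k2)
    k4 = rhs(state + dt * k3)
    return state + (dt / 6.0) * (k1 + 2.0 * k2 + 2.0 * k3 + k4)

def conditional_exponents(dt=0.01, n_transient=2000, n_steps=10_000):
    """Estimate the two conditional exponents of the x-driven (y, z) response."""
    state = np.concatenate([[1.0, 1.0, 1.0], np.eye(2).ravel()])
    for _ in range(n_transient):               # let the drive reach its attractor
        state = rk4_step(state, dt)
        state[3:] = np.eye(2).ravel()          # tangent vectors not needed yet
    log_sums = np.zeros(2)
    for _ in range(n_steps):
        state = rk4_step(state, dt)
        Q, R = np.linalg.qr(state[3:].reshape(2, 2))
        log_sums += np.log(np.abs(np.diag(R)))
        state[3:] = Q.ravel()                  # restart from orthonormal vectors
    return log_sums / (n_steps * dt)

if __name__ == "__main__":
    print(conditional_exponents())
```

In this particular configuration the symmetric part of the response Jacobian is $\mathrm{diag}(-1, -\beta)$ regardless of the drive signal, so both conditional exponents come out negative and the response is expected to synchronize with the drive.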

See also

- Chaos Theory

- Chaotic mixing for an alternative derivation

- Eden's conjecture on the Lyapunov dimension

- Floquet theory

- Liouville's theorem (Hamiltonian)

- Lyapunov dimension

- Lyapunov time

- Recurrence quantification analysis

- Oseledets theorem

- Butterfly effect

References

- Cencini, M. (2010). Chaos: From Simple Models to Complex Systems. World Scientific. ISBN 978-981-4277-65-5.

- Temam, R. (1988). Infinite Dimensional Dynamical Systems in Mechanics and Physics. Cambridge: Springer-Verlag.

- N.V. Kuznetsov; G.A. Leonov (2005). "On stability by the first approximation for discrete systems". Proceedings. 2005 International Conference Physics and Control, 2005. Proceedings Volume 2005. pp. 596–599. doi:10.1109/PHYCON.2005.1514053. ISBN 978-0-7803-9235-9. http://www.math.spbu.ru/user/nk/PDF/2005-IEEE-Discrete-system-stability-Lyapunov-exponent.pdf.

- G.A. Leonov; N.V. Kuznetsov (2007). "Time-Varying Linearization and the Perron effects". International Journal of Bifurcation and Chaos 17 (4): 1079–1107. doi:10.1142/S0218127407017732. Bibcode: 2007IJBC...17.1079L. http://www.math.spbu.ru/user/nk/PDF/2007_IJBC_Lyapunov_exponent_Linearization_Chaos_Perron_effects.pdf.

- Kuznetsov, Nikolay; Reitmann, Volker (2020). Attractor Dimension Estimates for Dynamical Systems: Theory and Computation. Cham: Springer. https://www.springer.com/gp/book/9783030509866.

- Kaplan, J.; Yorke, J. (1979). "Chaotic behavior of multidimensional difference equations". In Peitgen, H. O.; Walther, H. O. (eds.). Functional Differential Equations and Approximation of Fixed Points. New York: Springer. ISBN 978-3-540-09518-7.

- Pesin, Y. B. (1977). "Characteristic Lyapunov Exponents and Smooth Ergodic Theory". Russian Math. Surveys 32 (4): 55–114. doi:10.1070/RM1977v032n04ABEH001639. Bibcode: 1977RuMaS..32...55P.

- Kuznetsov, N.V. (2016). "The Lyapunov dimension and its estimation via the Leonov method". Physics Letters A 380 (25–26): 2142–2149. doi:10.1016/j.physleta.2016.04.036. Bibcode: 2016PhLA..380.2142K.

- Kuznetsov, N.V.; Alexeeva, T.A.; Leonov, G.A. (2016). "Invariance of Lyapunov exponents and Lyapunov dimension for regular and irregular linearizations". Nonlinear Dynamics 85 (1): 195–201. doi:10.1007/s11071-016-2678-4.

- Miller, R. H. (1964). "Irreversibility in Small Stellar Dynamical Systems". The Astrophysical Journal 140: 250. doi:10.1086/147911. Bibcode: 1964ApJ...140..250M.

- Benettin, G.; Galgani, L.; Giorgilli, A.; Strelcyn, J. M. (1980). "Lyapunov Characteristic Exponents for smooth dynamical systems and for hamiltonian systems; a method for computing all of them. Part 1: Theory". Meccanica 15: 9–20. doi:10.1007/BF02128236.

- Benettin, G.; Galgani, L.; Giorgilli, A.; Strelcyn, J. M. (1980). "Lyapunov Characteristic Exponents for smooth dynamical systems and for hamiltonian systems; A method for computing all of them. Part 2: Numerical application". Meccanica 15: 21–30. doi:10.1007/BF02128237.

- Shimada, I.; Nagashima, T. (1979). "A Numerical Approach to Ergodic Problem of Dissipative Dynamical Systems". Progress of Theoretical Physics 61 (6): 1605–1616. doi:10.1143/PTP.61.1605. Bibcode: 1979PThPh..61.1605S.

- Eckmann, J. -P.; Ruelle, D. (1985). "Ergodic theory of chaos and strange attractors". Reviews of Modern Physics 57 (3): 617–656. doi:10.1103/RevModPhys.57.617. Bibcode: 1985RvMP...57..617E.

- Sprott, Julien Clinton (September 27, 2001). Chaos and Time-Series Analysis. Oxford University Press. ISBN 978-0198508403.

- Sprott, Julien Clinton (May 26, 2005). "Lyapunov Exponent Spectrum Software". https://sprott.physics.wisc.edu/chaos/lespec.htm.

- Sprott, Julien Clinton (October 4, 2006). "Lyapunov Exponents for Delay Differential Equations". https://sprott.physics.wisc.edu/chaos/ddele.htm.

- Tomo, Nakamura (19 October 2022). "nonlinear systems and Lyapunov spectrum". https://sites.google.com/view/lyapunov-spectrum/home.

- Bryant, P.; Brown, R.; Abarbanel, H. (1990). "Lyapunov exponents from observed time series". Physical Review Letters 65 (13): 1523–1526. doi:10.1103/PhysRevLett.65.1523. PMID 10042292. Bibcode: 1990PhRvL..65.1523B.

- Brown, R.; Bryant, P.; Abarbanel, H. (1991). "Computing the Lyapunov spectrum of a dynamical system from an observed time series". Physical Review A 43 (6): 2787–2806. doi:10.1103/PhysRevA.43.2787. PMID 9905344. Bibcode: 1991PhRvA..43.2787B.

- Bryant, P. H. (1993). "Extensional singularity dimensions for strange attractors". Physics Letters A 179 (3): 186–190. doi:10.1016/0375-9601(93)91136-S. Bibcode: 1993PhLA..179..186B.

- Abarbanel, H.D.I.; Brown, R.; Kennel, M.B. (1992). "Local Lyapunov exponents computed from observed data". Journal of Nonlinear Science 2 (3): 343–365. doi:10.1007/BF01208929. Bibcode: 1992JNS.....2..343A.

- See, e.g., Pecora, L. M.; Carroll, T. L.; Johnson, G. A.; Mar, D. J.; Heagy, J. F. (1997). "Fundamentals of synchronization in chaotic systems, concepts, and applications". Chaos: An Interdisciplinary Journal of Nonlinear Science 7 (4): 520–543. doi:10.1063/1.166278. PMID 12779679. Bibcode: 1997Chaos...7..520P.

Further reading

- Kuznetsov, Nikolay; Reitmann, Volker (2020). Attractor Dimension Estimates for Dynamical Systems: Theory and Computation. Cham: Springer. https://www.springer.com/gp/book/9783030509866.

- M.-F. Danca; N.V. Kuznetsov (2018). "Matlab Code for Lyapunov Exponents of Fractional-Order Systems". International Journal of Bifurcation and Chaos 28 (5): 1850067. doi:10.1142/S0218127418500670. Bibcode: 2018IJBC...2850067D.

- Cvitanović P., Artuso R., Mainieri R., Tanner G. and Vattay G. Chaos: Classical and Quantum. Niels Bohr Institute, Copenhagen 2005. A textbook about chaos available under a Free Documentation License.

- Freddy Christiansen; Hans Henrik Rugh (1997). "Computing Lyapunov spectra with continuous Gram–Schmidt orthonormalization". Nonlinearity 10 (5): 1063–1072. doi:10.1088/0951-7715/10/5/004. Bibcode: 1997Nonli..10.1063C. http://www.mpipks-dresden.mpg.de/eprint/freddy/9702017/9702017.ps.

- Salman Habib; Robert D. Ryne (1995). "Symplectic Calculation of Lyapunov Exponents". Physical Review Letters 74 (1): 70–73. doi:10.1103/PhysRevLett.74.70. PMID 10057701. Bibcode: 1995PhRvL..74...70H.

- Govindan Rangarajan; Salman Habib; Robert D. Ryne (1998). "Lyapunov Exponents without Rescaling and Reorthogonalization". Physical Review Letters 80 (17): 3747–3750. doi:10.1103/PhysRevLett.80.3747. Bibcode: 1998PhRvL..80.3747R.

- X. Zeng; R. Eykholt; R. A. Pielke (1991). "Estimating the Lyapunov-exponent spectrum from short time series of low precision". Physical Review Letters 66 (25): 3229–3232. doi:10.1103/PhysRevLett.66.3229. PMID 10043734. Bibcode: 1991PhRvL..66.3229Z.

- E Aurell; G Boffetta; A Crisanti; G Paladin; A Vulpiani (1997). "Predictability in the large: an extension of the concept of Lyapunov exponent". J. Phys. A: Math. Gen. 30 (1): 1–26. doi:10.1088/0305-4470/30/1/003. Bibcode: 1997JPhA...30....1A.

- F Ginelli; P Poggi; A Turchi; H Chaté; R Livi; A Politi (2007). "Characterizing Dynamics with Covariant Lyapunov Vectors". Physical Review Letters 99 (13): 130601. doi:10.1103/PhysRevLett.99.130601. PMID 17930570. Bibcode: 2007PhRvL..99m0601G. http://www.fi.isc.cnr.it/users/antonio.politi/Reprints/145.pdf.

Software

- [1] R. Hegger, H. Kantz, and T. Schreiber, Nonlinear Time Series Analysis, TISEAN 3.0.1 (March 2007).

- [2] Scientio's ChaosKit product calculates Lyapunov exponents amongst other chaotic measures. Access is provided online via a web service and a Silverlight demo.

- [3] Dr. Ronald Joe Record's mathematical recreations software laboratory includes an X11 graphical client, lyap, for graphically exploring the Lyapunov exponents of a forced logistic map and other maps of the unit interval. The contents and manual pages of the mathrec software laboratory are also available.

- [4] Software on this page was developed specifically for the efficient and accurate calculation of the full spectrum of exponents. This includes LyapOde for cases where the equations of motion are known and also Lyap for cases involving experimental time series data. LyapOde, which includes source code written in C, can also calculate the conditional Lyapunov exponents for coupled identical systems. It is intended to allow the user to provide their own set of model equations or to use one of the ones included. There are no inherent limitations on the number of variables, parameters, etc. Lyap, which includes source code written in Fortran, can also calculate the Lyapunov direction vectors and can characterize the singularity of the attractor, which is the main reason for difficulties in calculating the more negative exponents from time series data. In both cases there is extensive documentation and sample input files. The software can be compiled for running on Windows, Mac, or Linux/Unix systems. The software runs in a text window and has no graphics capabilities, but can generate output files that could easily be plotted with a program like Excel.
