Phase (waves)

From HandWiki - Reading time: 10 min

In physics and mathematics, the phase (symbol φ or ϕ) of a wave or other periodic function $F$ of some real variable $t$ (such as time) is an angle-like quantity representing the fraction of the cycle covered up to $t$. It is expressed in such a scale that it varies by one full turn as the variable $t$ goes through each period (and $F(t)$ goes through each complete cycle). It may be measured in any angular unit such as degrees or radians, thus increasing by 360° or $2\pi$ as the variable $t$ completes a full period.[1]

This convention is especially appropriate for a sinusoidal function, since its value at any argument $t$ can then be expressed as $\sin(\varphi(t))$, the sine of the phase, multiplied by some factor (the amplitude of the sinusoid). (The cosine may be used instead of sine, depending on where one considers each period to start.)

Usually, whole turns are ignored when expressing the phase, so that $\varphi(t)$ is also a periodic function, with the same period as $F$, that repeatedly scans the same range of angles as $t$ goes through each period. Then, $F$ is said to be "at the same phase" at two argument values $t_1$ and $t_2$ (that is, $\varphi(t_1) = \varphi(t_2)$) if the difference between them is a whole number of periods.

The numeric value of the phase depends on the arbitrary choice of the start of each period, and on the interval of angles that each period is to be mapped to.

The term "phase" is also used when comparing a periodic function $F$ with a shifted version $G$ of it. If the shift in $t$ is expressed as a fraction of the period, and then scaled to an angle $\varphi$ spanning a whole turn, one gets the phase shift, phase offset, or phase difference of $G$ relative to $F$. If $F$ is a "canonical" function for a class of signals, like $\sin(t)$ is for all sinusoidal signals, then $\varphi$ is called the initial phase of $G$.

Mathematical definition

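Let $F$ be a periodic signal (that is, a function of one real variable), and $T$ be its period (that is, the smallest positive real number such that $F(t + T) = F(t)$ for all $t$). Then the phase of $F$ at any argument $t$ is

$$\varphi(t) = 2\pi \left[\!\left[ \frac{t - t_0}{T} \right]\!\right]$$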

Here $[\![\,\cdot\,]\!]$ denotes the fractional part of a real number, discarding its integer part; that is, $[\![x]\!] = x - \lfloor x \rfloor$; and $t_0$ is an arbitrary "origin" value of the argument, that one considers to be the beginning of a cycle.
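The definition $\varphi(t) = 2\pi [\![ (t - t_0)/T ]\!]$ translates almost line for line into Python. This is a minimal sketch rather than a reference implementation; the function name `phase` and its parameters are hypothetical, chosen to mirror the symbols $t$, $T$ and $t_0$.

```python
import math


def phase(t: float, T: float, t0: float = 0.0) -> float:
    """Phase in radians, in [0, 2*pi), of a signal with period T at argument t.

    Computes phi(t) = 2*pi * frac((t - t0) / T), where frac(x) = x - floor(x).
    """
    x = (t - t0) / T
    frac = x - math.floor(x)  # fractional part, in [0, 1) up to rounding
    if frac >= 1.0:           # guard: a tiny negative x can round up to exactly 1.0
        frac = 0.0
    return 2 * math.pi * frac


print(phase(2.5, T=10.0))   # a quarter period past t0: pi/2
print(phase(12.5, T=10.0))  # one period later: the same phase
```

Negative arguments are handled by `math.floor`, so a quarter period *before* the origin gives three quarters of a turn, as expected.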

This concept can be visualized by imagining a clock with a hand that turns at constant speed, making a full turn every $T$ seconds, and is pointing straight up at time $t_0$. The phase $\varphi(t)$ is then the angle from the 12:00 position to the current position of the hand, at time $t$, measured clockwise.

The phase concept is most useful when the origin $t_0$ is chosen based on features of $F$. For example, for a sinusoid, a convenient choice is any $t$ where the function's value changes from zero to positive.

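The formula above gives the phase as an angle in radians between 0 and $2\pi$. To get the phase as an angle between $-\pi$ and $+\pi$, one uses instead

$$\varphi(t) = 2\pi \left( \left[\!\left[ \frac{t - t_0}{T} + \frac{1}{2} \right]\!\right] - \frac{1}{2} \right)$$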

The phase expressed in degrees (from 0° to 360°, or from −180° to +180°) is defined the same way, except with "360°" in place of "2π".
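The signed variant $\varphi(t) = 2\pi\left([\![ (t - t_0)/T + 1/2 ]\!] - 1/2\right)$ and the conversion to degrees can be sketched the same way. The helper name `signed_phase` is hypothetical; `math.degrees` performs the rescaling from $2\pi$ radians to 360°.

```python
import math


def signed_phase(t: float, T: float, t0: float = 0.0) -> float:
    """Phase in radians in [-pi, pi): 2*pi * (frac((t - t0)/T + 1/2) - 1/2)."""
    x = (t - t0) / T + 0.5
    frac = x - math.floor(x)
    if frac >= 1.0:  # guard against rounding up to exactly 1.0
        frac = 0.0
    return 2 * math.pi * (frac - 0.5)


for t in (0.0, 2.5, 5.0, 7.5):
    # Quarter-period steps with T = 10: print radians and degrees.
    phi = signed_phase(t, T=10.0)
    print(t, phi, math.degrees(phi))
```

With this formula the interval is closed at $-\pi$ and open at $+\pi$, so a point exactly half a period after $t_0$ maps to $-\pi$ (−180°) rather than $+\pi$.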

Consequences

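With any of the above definitions, the phase $\varphi(t)$ of a periodic signal is periodic too, with the same period $T$:

$$\varphi(t + T) = \varphi(t) \quad \text{for all } t.$$

The phase is zero at the start of each period; that is

$$\varphi(t_0 + kT) = 0 \quad \text{for any integer } k.$$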

Moreover, for any given choice of the origin $t_0$, the value of the signal $F$ for any argument $t$ depends only on its phase at $t$. Namely, one can write $F(t) = f(\varphi(t))$, where $f$ is a function of an angle, defined only for a single full turn, that describes the variation of $F$ as $t$ ranges over a single period.

In fact, every periodic signal $F$ with a specific waveform can be expressed as

$$F(t) = A\, w(\varphi(t))$$

where $w$ is a "canonical" function of a phase angle in 0 to $2\pi$ that describes just one cycle of that waveform, and $A$ is a scaling factor for the amplitude. (This claim assumes that the starting time $t_0$ chosen to compute the phase of $F$ corresponds to argument 0 of $w$.)
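The decomposition $F(t) = A\,w(\varphi(t))$ can be sketched as a small function factory. The square waveform `square_w` and the helper `make_signal` are hypothetical illustrations; any function defined on one turn $[0, 2\pi)$ could serve as $w$, including `math.sin` itself.

```python
import math
from typing import Callable


def phase(t: float, T: float, t0: float = 0.0) -> float:
    """Phase in [0, 2*pi) of a signal with period T and origin t0."""
    x = (t - t0) / T
    frac = x - math.floor(x)
    return 2 * math.pi * (0.0 if frac >= 1.0 else frac)


def square_w(theta: float) -> float:
    """Hypothetical canonical square waveform over one turn [0, 2*pi)."""
    return 1.0 if theta < math.pi else -1.0


def make_signal(w: Callable[[float], float], A: float, T: float,
                t0: float = 0.0) -> Callable[[float], float]:
    """Return F(t) = A * w(phi(t)), built from one cycle w of the waveform."""
    return lambda t: A * w(phase(t, T, t0))


F = make_signal(square_w, A=3.0, T=2.0)
G = make_signal(math.sin, A=2.0, T=2.0)  # equals 2*sin(pi*t)
print(F(0.5), F(1.5), F(2.5))  # 3.0 -3.0 3.0
```

Because `w` only ever sees angles in $[0, 2\pi)$, the periodicity of $F$ comes entirely from the phase function, not from `w`.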

Adding and comparing phases

Since phases are angles, any whole full turns should usually be ignored when performing arithmetic operations on them. That is, the sum and difference of two phases $\alpha$ and $\beta$ (in degrees) should be computed by the formulas

$$(\alpha + \beta) \bmod 360 \quad \text{and} \quad (\alpha - \beta) \bmod 360$$

respectively. Thus, for example, the sum of phase angles 190° + 200° is 30° (190 + 200 = 390, minus one full turn), and subtracting 50° from 30° gives a phase of 340° (30 − 50 = −20, plus one full turn).

Similar formulas hold for radians, with $2\pi$ instead of 360.
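The two worked examples can be reproduced with Python's `%` operator, whose result takes the sign of the divisor, so negative differences wrap into $[0, 360)$ without special handling. The helper names and the `turn` parameter are hypothetical; passing `turn=2 * math.pi` gives the radian version.

```python
import math


def add_phases(a: float, b: float, turn: float = 360.0) -> float:
    """Sum of two phases with whole turns discarded, in [0, turn)."""
    return (a + b) % turn


def sub_phases(a: float, b: float, turn: float = 360.0) -> float:
    """Difference of two phases with whole turns discarded, in [0, turn)."""
    # Python's % takes the sign of the divisor, so -20 % 360 == 340.
    return (a - b) % turn


print(add_phases(190, 200))  # 30.0
print(sub_phases(30, 50))    # 340.0
print(add_phases(1.5 * math.pi, math.pi, turn=2 * math.pi))  # radians, about pi/2
```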

Phase shift

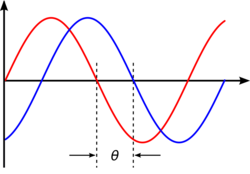

The difference $\varphi(t) = \varphi_G(t) - \varphi_F(t)$ between the phases of two periodic signals $F$ and $G$ is called the phase difference or phase shift of $G$ relative to $F$.[1] At values of $t$ when the difference is zero, the two signals are said to be in phase; otherwise, they are out of phase with each other.

In the clock analogy, each signal is represented by a hand (or pointer) of the same clock, both turning at constant but possibly different speeds. The phase difference is then the angle between the two hands, measured clockwise.

The phase difference is particularly important when two signals are added together by a physical process, such as two periodic sound waves emitted by two sources and recorded together by a microphone. This is usually the case in linear systems, when the superposition principle holds.

For arguments $t$ when the phase difference is zero, the two signals will have the same sign and will be reinforcing each other. One says that constructive interference is occurring. At arguments $t$ when the phases are different, the value of the sum depends on the waveform.

For sinusoids

For sinusoidal signals, when the phase difference $\varphi(t)$ is 180° ($\pi$ radians), one says that the phases are opposite, and that the signals are in antiphase. Then the signals have opposite signs, and destructive interference occurs. Conversely, a phase reversal or phase inversion implies a 180-degree phase shift.[2]

When the phase difference $\varphi(t)$ is a quarter of a turn (a right angle, +90° = π/2 or −90° = 270° = −π/2 = 3π/2), sinusoidal signals are sometimes said to be in quadrature, e.g., the in-phase and quadrature components of a composite signal, or even different signals (e.g., voltage and current).
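For two sinusoids of equal amplitude $A$ and phase difference $\Delta$, the identity $\sin x + \sin(x + \Delta) = 2\cos(\Delta/2)\sin(x + \Delta/2)$ gives a peak of $2A\,|\cos(\Delta/2)|$ for their sum: $2A$ in phase, $\sqrt{2}\,A$ in quadrature, and zero in antiphase. The sketch below checks this numerically by sampling one period; the helper name `peak_of_sum` and the sample count are arbitrary choices.

```python
import math


def peak_of_sum(A: float, delta: float, samples: int = 10_000) -> float:
    """Estimate max |A*sin(x) + A*sin(x + delta)| by sampling one period."""
    return max(
        abs(A * math.sin(x) + A * math.sin(x + delta))
        for x in (2 * math.pi * k / samples for k in range(samples))
    )


for deg in (0, 90, 180):  # in phase, quadrature, antiphase
    d = math.radians(deg)
    closed_form = 2 * 1.0 * abs(math.cos(d / 2))  # 2*A*|cos(delta/2)| with A = 1
    print(deg, round(peak_of_sum(1.0, d), 6), round(closed_form, 6))
```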

If the frequencies are different, the phase difference $\varphi(t)$ increases linearly with the argument $t$. The periodic alternation between reinforcement and opposition causes a phenomenon called beating.
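For two sinusoids $\sin(2\pi f_1 t + \varphi_1)$ and $\sin(2\pi f_2 t + \varphi_2)$, the phase difference is $2\pi (f_2 - f_1)\,t + (\varphi_2 - \varphi_1)$, which advances by one full turn every $1/|f_2 - f_1|$ seconds; that interval is the beat period. The sketch below assumes this sinusoidal form, and the frequencies 440 Hz and 442 Hz are arbitrary illustrative values.

```python
import math


def phase_difference(t: float, f1: float, f2: float,
                     phi1: float = 0.0, phi2: float = 0.0) -> float:
    """Phase of sin(2*pi*f2*t + phi2) minus that of sin(2*pi*f1*t + phi1),
    wrapped to [0, 2*pi). It grows linearly in t when f1 != f2."""
    return (2 * math.pi * (f2 - f1) * t + (phi2 - phi1)) % (2 * math.pi)


f1, f2 = 440.0, 442.0           # illustrative frequencies in Hz
beat_period = 1 / abs(f2 - f1)  # time for the difference to advance one full turn
print(beat_period)              # 0.5
print(phase_difference(0.25, f1, f2))  # half a beat period: antiphase, about pi
```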

For shifted signals

The phase difference is especially important when comparing a periodic signal with a shifted and possibly scaled version of it. That is, suppose that $G(t) = \alpha\, F(t + \tau)$ for some constants $\alpha$ and $\tau$, and all $t$. Suppose also that the origin for computing the phase of $G$ has been shifted too. In that case, the phase difference $\varphi$ is a constant (independent of $t$), called the 'phase shift' or 'phase offset' of $G$ relative to $F$. In the clock analogy, this situation corresponds to the two hands turning at the same speed, so that the angle between them is constant.

In this case, the phase shift is simply the argument shift $\tau$, expressed as a fraction of the common period $T$ (in terms of the modulo operation) of the two signals and then scaled to a full turn:

$$\varphi = 2\pi \left[\!\left[ \frac{\tau}{T} \right]\!\right]$$

If $F$ is a "canonical" representative for a class of signals, like $\sin(t)$ is for all sinusoidal signals, then the phase shift $\varphi$ is called simply the initial phase of $G$.
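As a sketch of the formula $\varphi = 2\pi [\![ \tau/T ]\!]$ (the helper name `phase_shift` is hypothetical), take $F(t) = \sin(t)$ with $T = 2\pi$ and $G(t) = F(t + \pi/2) = \cos(t)$: the argument shift $\tau = \pi/2$ is a quarter period, so the phase shift of $G$ relative to $F$ is $\pi/2$, or 90°.

```python
import math


def phase_shift(tau: float, T: float) -> float:
    """Phase shift in [0, 2*pi) of G(t) = alpha * F(t + tau), F of period T."""
    x = tau / T
    frac = x - math.floor(x)
    return 2 * math.pi * (0.0 if frac >= 1.0 else frac)


T = 2 * math.pi
tau = math.pi / 2


def G(t: float) -> float:
    return math.sin(t + tau)  # G(t) = F(t + tau) with F = sin and alpha = 1


print(math.degrees(phase_shift(tau, T)))      # about 90 degrees
print(math.degrees(phase_shift(tau + T, T)))  # whole periods are discarded
```

The scale factor $\alpha$ does not enter the computation: only the argument shift, reduced modulo the period, determines the phase shift.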

Therefore, when two periodic signals have the same frequency, they are always in phase, or always out of phase. Physically, this situation commonly occurs, for many reasons. For example, the two signals may be a periodic sound wave recorded by two microphones at separate locations. Or, conversely, they may be periodic sound waves created by two separate speakers from the same electrical signal, and recorded by a single microphone. They may be a radio signal that reaches the receiving antenna in a straight line, and a copy of it that was reflected off a large building nearby.

A well-known example of phase difference is the length of shadows seen at different points of Earth. To a first approximation, if $F(t)$ is the length seen at time $t$ at one spot, and $G$ is the length seen at the same time at a longitude 30° west of that point, then the phase difference between the two signals will be 30° (assuming that, in each signal, each period starts when the shadow is shortest).

For sinusoids with same frequency

For sinusoidal signals (and a few other waveforms, like square or symmetric triangular), a phase shift of 180° is equivalent to a phase shift of 0° with negation of the amplitude. When two signals with these waveforms, same period, and opposite phases are added together, the sum $F + G$ is either identically zero, or is a sinusoidal signal with the same period and phase, whose amplitude is the difference of the original amplitudes.

The phase shift of the cosine function relative to the sine function is +90°. It follows that, for two sinusoidal signals $F$ and $G$ with the same frequency and amplitudes $A$ and $B$, where $G$ has phase shift +90° relative to $F$, the sum $F + G$ is a sinusoidal signal with the same frequency, with amplitude $C$ and phase shift $-90^\circ < \varphi < +90^\circ$ from $F$, such that

$$C = \sqrt{A^2 + B^2} \quad \text{and} \quad \sin(\varphi) = \frac{B}{C}.$$
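The relations $C = \sqrt{A^2 + B^2}$ and $\sin(\varphi) = B/C$ can be checked numerically. Taking $F(t) = A\sin(t)$ and $G(t) = B\cos(t)$, so that $G$ leads $F$ by 90°, the sketch below assumes $A > 0$, which is what confines $\varphi$ to $(-90^\circ, +90^\circ)$; `sum_quadrature` is a hypothetical helper name.

```python
import math


def sum_quadrature(A: float, B: float) -> tuple[float, float]:
    """Return (C, phi) with A*sin(t) + B*cos(t) == C*sin(t + phi).

    Assumes A > 0, so that cos(phi) = A/C > 0 and -pi/2 < phi < pi/2.
    """
    C = math.hypot(A, B)    # sqrt(A**2 + B**2)
    phi = math.asin(B / C)  # sin(phi) = B / C
    return C, phi


C, phi = sum_quadrature(3.0, 4.0)
print(C, math.degrees(phi))  # C is 5.0; phi is about 53.13 degrees
```

Expanding $C\sin(t + \varphi) = C\cos\varphi\,\sin t + C\sin\varphi\,\cos t$ shows why this works: matching coefficients requires $C\cos\varphi = A$ and $C\sin\varphi = B$.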

A real-world example of a sonic phase difference occurs in the warble of a Native American flute. The amplitudes of different harmonic components of the same long-held note on the flute come into dominance at different points in the phase cycle. The phase difference between the different harmonics can be observed on a spectrogram of the sound of a warbling flute.[4]

Phase comparison

Phase comparison is a comparison of the phase of two waveforms, usually of the same nominal frequency. In time and frequency metrology, the purpose of a phase comparison is generally to determine the frequency offset (difference between signal cycles) with respect to a reference.[3]

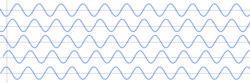

A phase comparison can be made by connecting two signals to a two-channel oscilloscope. The oscilloscope will display two sine signals, as shown in the graphic. In that image, the top sine signal is the test frequency, and the bottom sine signal represents a signal from the reference.

If the two frequencies were exactly the same, their phase relationship would not change and both would appear to be stationary on the oscilloscope display. Since the two frequencies are not exactly the same, the reference appears to be stationary and the test signal moves. By measuring the rate of motion of the test signal the offset between frequencies can be determined.

Vertical lines have been drawn through the points where each sine signal passes through zero. The bottom of the figure shows bars whose width represents the phase difference between the signals. In this case the phase difference is increasing, indicating that the test signal is lower in frequency than the reference.[3]
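The relation between phase drift and frequency offset can be sketched as $\Delta f = \Delta\varphi / (2\pi\,\Delta t)$, where $\Delta\varphi$ is the change, in radians, of the unwrapped phase difference over an interval $\Delta t$. The sketch assumes the difference is taken as test phase minus reference phase; with the opposite convention the sign of the result flips. The function name and the measurement values are hypothetical.

```python
import math


def frequency_offset(dphi_rad: float, dt_s: float) -> float:
    """Estimate f_test - f_ref in Hz from the change dphi_rad (radians) of the
    unwrapped phase difference (test minus reference) over dt_s seconds."""
    return dphi_rad / (2 * math.pi * dt_s)


# Hypothetical reading: the test signal falls a quarter turn behind the
# reference over 10 s, so it is lower in frequency (negative offset).
df = frequency_offset(-math.pi / 2, 10.0)
print(df)  # about -0.025 Hz
```

One full turn of drift per second corresponds to an offset of 1 Hz; the phase difference must be unwrapped (whole turns counted, not discarded) for this estimate to hold over long intervals.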

Formula for phase of an oscillation or a periodic signal

The phase of a simple harmonic oscillation or sinusoidal signal is the value of $\varphi$ in the following functions:

$$x(t) = A \cos(2\pi f t + \varphi)$$

$$y(t) = A \sin(2\pi f t + \varphi) = A \cos\left(2\pi f t + \varphi - \tfrac{\pi}{2}\right)$$

where $A$, $f$, and $\varphi$ are constant parameters called the amplitude, frequency, and phase of the sinusoid. These signals are periodic with period $T = \frac{1}{f}$, and they are identical except for a displacement of $\frac{T}{4}$ along the $t$ axis. The term phase can refer to several different things:

- It can refer to a specified reference, such as $\cos(2\pi f t)$, in which case we would say the phase of $x(t)$ is $\varphi$, and the phase of $y(t)$ is $\varphi - \frac{\pi}{2}$.

- It can refer to $\varphi$, in which case we would say $x(t)$ and $y(t)$ have the same phase but are relative to their own specific references.

- In the context of communication waveforms, the time-variant angle $2\pi f t + \varphi$, or its principal value, is referred to as instantaneous phase, often just phase.
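For $x(t) = A\cos(2\pi f t + \varphi)$ and $y(t) = A\sin(2\pi f t + \varphi)$, the statements about the two sinusoids can be checked numerically: $y(t) = x(t - T/4)$, and the principal value of the instantaneous phase $2\pi f t + \varphi$ returns to $\varphi$ after each period. The parameter values are illustrative, and computing the principal value with `math.atan2` (giving the range $(-\pi, \pi]$) is one convenient choice among several.

```python
import math

A, f, phi = 2.0, 50.0, 0.3  # illustrative amplitude, frequency (Hz), phase (rad)
T = 1 / f


def x(t: float) -> float:
    return A * math.cos(2 * math.pi * f * t + phi)


def y(t: float) -> float:
    return A * math.sin(2 * math.pi * f * t + phi)


def instantaneous_phase(t: float) -> float:
    """Principal value, in (-pi, pi], of the angle 2*pi*f*t + phi."""
    theta = 2 * math.pi * f * t + phi
    return math.atan2(math.sin(theta), math.cos(theta))


# y is x displaced by T/4 along the t axis: y(t) == x(t - T/4).
for t in (0.0, 0.0013, 0.0071):
    print(y(t), x(t - T / 4))
```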

See also

- Absolute phase

- AC phase

- In-phase and quadrature components

- Instantaneous phase

- Lissajous curve

- Phase cancellation

- Phase problem

- Phase spectrum

- Phase velocity

- Phasor

- Polarization (waves)

- Coherence, the quality of a wave to display a well defined phase relationship in different regions of its domain of definition

- Hilbert transform, a method of changing phase by 90°

- Reflection phase shift, a phase change that happens when a wave is reflected off a boundary from fast medium to slow medium

References

- [1] Ballou, Glen (2005). Handbook for Sound Engineers (3rd ed.). Focal Press, Gulf Professional Publishing. p. 1499. ISBN 978-0-240-80758-4. https://books.google.com/books?id=y0d9VA0lkogC&pg=PA1499.

- [2] "Federal Standard 1037C: Glossary of Telecommunications Terms". https://www.its.bldrdoc.gov/fs-1037/fs-1037c.htm.

- [3] "Phase". Time and Frequency from A to Z (2010-05-12). National Institute of Standards and Technology (NIST). https://www.nist.gov/pml/div688/grp40/enc-p.cfm. Retrieved 12 June 2016. This content has been copied and pasted from an NIST web page and is in the public domain.

- [4] "The Warble". Flutopedia. 2013. http://Flutopedia.com/warble.htm.

External links

- "What is a phase?". Prof. Jeffrey Hass. "An Acoustics Primer", Section 8. Indiana University, 2003. See also: (pages 1 thru 3, 2013)

- Phase angle, phase difference, time delay, and frequency

- ECE 209: Sources of Phase Shift — Discusses the time-domain sources of phase shift in simple linear time-invariant circuits.

- Open Source Physics JavaScript HTML5

- Phase Difference Java Applet
