Robust optimization

From HandWiki - Reading time: 12 min

From HandWiki - Reading time: 12 min

Robust optimization is a field of mathematical optimization theory that deals with optimization problems in which a certain measure of robustness is sought against uncertainty that can be represented as deterministic variability in the value of the parameters of the problem itself and/or its solution. It is related to, but often distinguished from, probabilistic optimization methods such as chance-constrained optimization.

History

The origins of robust optimization date back to the establishment of modern decision theory in the 1950s and the use of worst case analysis and Wald's maximin model as a tool for the treatment of severe uncertainty. It became a discipline of its own in the 1970s with parallel developments in several scientific and technological fields. Over the years, it has been applied in statistics, but also in operations research,[1] electrical engineering,[2][3][4] control theory,[5] finance,[6] portfolio management[7] logistics,[8] manufacturing engineering,[9] chemical engineering,[10] medicine,[11] and computer science. In engineering problems, these formulations often take the name of "Robust Design Optimization", RDO or "Reliability Based Design Optimization", RBDO.

Example 1

Consider the following linear programming problem

where is a given subset of .

What makes this a 'robust optimization' problem is the clause in the constraints. Its implication is that for a pair to be admissible, the constraint must be satisfied by the worst pertaining to , namely the pair that maximizes the value of for the given value of .

If the parameter space is finite (consisting of finitely many elements), then this robust optimization problem itself is a linear programming problem: for each there is a linear constraint .

If is not a finite set, then this problem is a linear semi-infinite programming problem, namely a linear programming problem with finitely many (2) decision variables and infinitely many constraints.

Classification

There are a number of classification criteria for robust optimization problems/models. In particular, one can distinguish between problems dealing with local and global models of robustness; and between probabilistic and non-probabilistic models of robustness. Modern robust optimization deals primarily with non-probabilistic models of robustness that are worst case oriented and as such usually deploy Wald's maximin models.

Local robustness

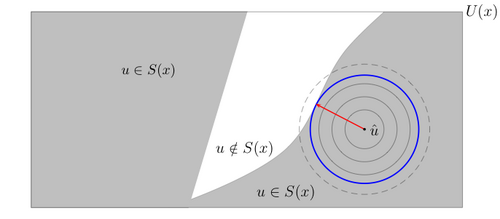

There are cases where robustness is sought against small perturbations in a nominal value of a parameter. A very popular model of local robustness is the radius of stability model:

where denotes the nominal value of the parameter, denotes a ball of radius centered at and denotes the set of values of that satisfy given stability/performance conditions associated with decision .

In words, the robustness (radius of stability) of decision is the radius of the largest ball centered at all of whose elements satisfy the stability requirements imposed on . The picture is this:

where the rectangle represents the set of all the values associated with decision .

Global robustness

Consider the simple abstract robust optimization problem

where denotes the set of all possible values of under consideration.

This is a global robust optimization problem in the sense that the robustness constraint represents all the possible values of .

The difficulty is that such a "global" constraint can be too demanding in that there is no that satisfies this constraint. But even if such an exists, the constraint can be too "conservative" in that it yields a solution that generates a very small payoff that is not representative of the performance of other decisions in . For instance, there could be an that only slightly violates the robustness constraint but yields a very large payoff . In such cases it might be necessary to relax a bit the robustness constraint and/or modify the statement of the problem.

Example 2

Consider the case where the objective is to satisfy a constraint . where denotes the decision variable and is a parameter whose set of possible values in . If there is no such that , then the following intuitive measure of robustness suggests itself:

where denotes an appropriate measure of the "size" of set . For example, if is a finite set, then could be defined as the cardinality of set .

In words, the robustness of decision is the size of the largest subset of for which the constraint is satisfied for each in this set. An optimal decision is then a decision whose robustness is the largest.

This yields the following robust optimization problem:

This intuitive notion of global robustness is not used often in practice because the robust optimization problems that it induces are usually (not always) very difficult to solve.

Example 3

Consider the robust optimization problem

where is a real-valued function on , and assume that there is no feasible solution to this problem because the robustness constraint is too demanding.

To overcome this difficulty, let be a relatively small subset of representing "normal" values of and consider the following robust optimization problem:

Since is much smaller than , its optimal solution may not perform well on a large portion of and therefore may not be robust against the variability of over .

One way to fix this difficulty is to relax the constraint for values of outside the set in a controlled manner so that larger violations are allowed as the distance of from increases. For instance, consider the relaxed robustness constraint

where is a control parameter and denotes the distance of from . Thus, for the relaxed robustness constraint reduces back to the original robustness constraint. This yields the following (relaxed) robust optimization problem:

The function is defined in such a manner that

and

and therefore the optimal solution to the relaxed problem satisfies the original constraint for all values of in . It also satisfies the relaxed constraint

outside .

Non-probabilistic robust optimization models

The dominating paradigm in this area of robust optimization is Wald's maximin model, namely

where the represents the decision maker, the represents Nature, namely uncertainty, represents the decision space and denotes the set of possible values of associated with decision . This is the classic format of the generic model, and is often referred to as minimax or maximin optimization problem. The non-probabilistic (deterministic) model has been and is being extensively used for robust optimization especially in the field of signal processing.[12][13][14]

The equivalent mathematical programming (MP) of the classic format above is

Constraints can be incorporated explicitly in these models. The generic constrained classic format is

The equivalent constrained MP format is defined as:

Probabilistically robust optimization models

These models quantify the uncertainty in the "true" value of the parameter of interest by probability distribution functions. They have been traditionally classified as stochastic programming and stochastic optimization models. Recently, probabilistically robust optimization has gained popularity by the introduction of rigorous theories such as scenario optimization able to quantify the robustness level of solutions obtained by randomization. These methods are also relevant to data-driven optimization methods.

Robust counterpart

The solution method to many robust program involves creating a deterministic equivalent, called the robust counterpart. The practical difficulty of a robust program depends on if its robust counterpart is computationally tractable.[15][16]

See also

- Stability radius

- Minimax

- Minimax estimator

- Minimax regret

- Robust statistics

- Robust decision making

- Stochastic programming

- Stochastic optimization

- Info-gap decision theory

- Taguchi methods

References

- ↑ Bertsimas, Dimitris; Sim, Melvyn (2004). "The Price of Robustness". Operations Research 52 (1): 35–53. doi:10.1287/opre.1030.0065.

- ↑ Giraldo, Juan S.; Castrillon, Jhon A.; Lopez, Juan Camilo; Rider, Marcos J.; Castro, Carlos A. (July 2019). "Microgrids Energy Management Using Robust Convex Programming". IEEE Transactions on Smart Grid 10 (4): 4520–4530. doi:10.1109/TSG.2018.2863049. ISSN 1949-3053. https://ieeexplore.ieee.org/document/8424876.

- ↑ Shabanzadeh M; Sheikh-El-Eslami, M-K; Haghifam, P; M-R (October 2015). "The design of a risk-hedging tool for virtual power plants via robust optimization approach". Applied Energy 155: 766–777. doi:10.1016/j.apenergy.2015.06.059.

- ↑ Shabanzadeh M; Fattahi, M (July 2015). "Generation Maintenance Scheduling via robust optimization". 2015 23rd Iranian Conference on Electrical Engineering. pp. 1504–1509. doi:10.1109/IranianCEE.2015.7146458. ISBN 978-1-4799-1972-7.

- ↑ Khargonekar, P.P.; Petersen, I.R.; Zhou, K. (1990). "Robust stabilization of uncertain linear systems: quadratic stabilizability and H/sup infinity / control theory". IEEE Transactions on Automatic Control 35 (3): 356–361. doi:10.1109/9.50357.

- ↑ Robust portfolio optimization

- ↑ Md. Asadujjaman and Kais Zaman, "Robust Portfolio Optimization under Data Uncertainty" 15th National Statistical Conference, December 2014, Dhaka, Bangladesh.

- ↑ Yu, Chian-Son; Li, Han-Lin (2000). "A robust optimization model for stochastic logistic problems". International Journal of Production Economics 64 (1–3): 385–397. doi:10.1016/S0925-5273(99)00074-2.

- ↑ Strano, M (2006). "Optimization under uncertainty of sheet-metal-forming processes by the finite element method". Proceedings of the Institution of Mechanical Engineers, Part B: Journal of Engineering Manufacture 220 (8): 1305–1315. doi:10.1243/09544054JEM480.

- ↑ Bernardo, Fernando P.; Saraiva, Pedro M. (1998). "Robust optimization framework for process parameter and tolerance design". AIChE Journal 44 (9): 2007–2017. doi:10.1002/aic.690440908.

- ↑ Chu, Millie; Zinchenko, Yuriy; Henderson, Shane G; Sharpe, Michael B (2005). "Robust optimization for intensity modulated radiation therapy treatment planning under uncertainty". Physics in Medicine and Biology 50 (23): 5463–5477. doi:10.1088/0031-9155/50/23/003. PMID 16306645. Bibcode: 2005PMB....50.5463C.

- ↑ Verdu, S.; Poor, H. V. (1984). "On Minimax Robustness: A general approach and applications". IEEE Transactions on Information Theory 30 (2): 328–340. doi:10.1109/tit.1984.1056876.

- ↑ Kassam, S. A.; Poor, H. V. (1985). "Robust Techniques for Signal Processing: A Survey". Proceedings of the IEEE 73 (3): 433–481. doi:10.1109/proc.1985.13167.

- ↑ M. Danish Nisar. "Minimax Robustness in Signal Processing for Communications", Shaker Verlag, ISBN 978-3-8440-0332-1, August 2011.

- ↑ Ben-Tal A., El Ghaoui, L. and Nemirovski, A. (2009). Robust Optimization. Princeton Series in Applied Mathematics, Princeton University Press, 9-16.

- ↑ Leyffer S., Menickelly M., Munson T., Vanaret C. and Wild S. M (2020). A survey of nonlinear robust optimization. INFOR: Information Systems and Operational Research, Taylor \& Francis.

Further reading

- H.J. Greenberg. Mathematical Programming Glossary. World Wide Web, http://glossary.computing.society.informs.org/, 1996-2006. Edited by the INFORMS Computing Society.

- Ben-Tal, A.; Nemirovski, A. (1998). "Robust Convex Optimization". Mathematics of Operations Research 23 (4): 769–805. doi:10.1287/moor.23.4.769.

- Ben-Tal, A.; Nemirovski, A. (1999). "Robust solutions to uncertain linear programs". Operations Research Letters 25: 1–13. doi:10.1016/s0167-6377(99)00016-4.

- Ben-Tal, A.; Arkadi Nemirovski, A. (2002). "Robust optimization—methodology and applications". Mathematical Programming, Series B 92 (3): 453–480. doi:10.1007/s101070100286.

- Ben-Tal A., El Ghaoui, L. and Nemirovski, A. (2006). Mathematical Programming, Special issue on Robust Optimization, Volume 107(1-2).

- Ben-Tal A., El Ghaoui, L. and Nemirovski, A. (2009). Robust Optimization. Princeton Series in Applied Mathematics, Princeton University Press.

- Bertsimas, D.; Sim, M. (2003). "Robust Discrete Optimization and Network Flows". Mathematical Programming 98 (1–3): 49–71. doi:10.1007/s10107-003-0396-4.

- Bertsimas, D.; Sim, M. (2006). "Tractable Approximations to Robust Conic Optimization Problems Dimitris Bertsimas". Mathematical Programming 107 (1): 5–36. doi:10.1007/s10107-005-0677-1.

- Chen, W.; Sim, M. (2009). "Goal Driven Optimization". Operations Research 57 (2): 342–357. doi:10.1287/opre.1080.0570.

- Chen, X.; Sim, M.; Sun, P.; Zhang, J. (2008). "A Linear-Decision Based Approximation Approach to Stochastic Programming". Operations Research 56 (2): 344–357. doi:10.1287/opre.1070.0457.

- Chen, X.; Sim, M.; Sun, P. (2007). "A Robust Optimization Perspective on Stochastic Programming". Operations Research 55 (6): 1058–1071. doi:10.1287/opre.1070.0441.

- Dembo, R (1991). "Scenario optimization". Annals of Operations Research 30 (1): 63–80. doi:10.1007/bf02204809.

- Dodson, B., Hammett, P., and Klerx, R. (2014) Probabilistic Design for Optimization and Robustness for Engineers John Wiley & Sons, Inc. ISBN 978-1-118-79619-1

- Gupta, S.K.; Rosenhead, J. (1968). "Robustness in sequential investment decisions". Management Science 15 (2): 18–29. doi:10.1287/mnsc.15.2.B18.

- Kouvelis P. and Yu G. (1997). Robust Discrete Optimization and Its Applications, Kluwer.

- Mutapcic, Almir; Boyd, Stephen (2009). "Cutting-set methods for robust convex optimization with pessimizing oracles". Optimization Methods and Software 24 (3): 381–406. doi:10.1080/10556780802712889.

- Mulvey, J.M.; Vanderbei, R.J.; Zenios, S.A. (1995). "Robust Optimization of Large-Scale Systems". Operations Research 43 (2): 264–281. doi:10.1287/opre.43.2.264.

- Nejadseyfi, O., Geijselaers H.J.M, van den Boogaard A.H. (2018). "Robust optimization based on analytical evaluation of uncertainty propagation". Engineering Optimization 51 (9): 1581-1603. doi:10.1080/0305215X.2018.1536752.

- Rosenblat, M.J. (1987). "A robust approach to facility design". International Journal of Production Research 25 (4): 479–486. doi:10.1080/00207548708919855.

- Rosenhead, M.J; Elton, M; Gupta, S.K. (1972). "Robustness and Optimality as Criteria for Strategic Decisions". Operational Research Quarterly 23 (4): 413–430. doi:10.2307/3007957.

- Rustem B. and Howe M. (2002). Algorithms for Worst-case Design and Applications to Risk Management, Princeton University Press.

- Sniedovich, M (2007). "The art and science of modeling decision-making under severe uncertainty". Decision Making in Manufacturing and Services 1 (1–2): 111–136. doi:10.7494/dmms.2007.1.2.111.

- Sniedovich, M (2008). "Wald's Maximin Model: a Treasure in Disguise!". Journal of Risk Finance 9 (3): 287–291. doi:10.1108/15265940810875603.

- Sniedovich, M (2010). "A bird's view of info-gap decision theory". Journal of Risk Finance 11 (3): 268–283. doi:10.1108/15265941011043648.

- Wald, A (1939). "Contributions to the theory of statistical estimation and testing hypotheses". The Annals of Mathematics 10 (4): 299–326. doi:10.1214/aoms/1177732144.

- Wald, A (1945). "Statistical decision functions which minimize the maximum risk". The Annals of Mathematics 46 (2): 265–280. doi:10.2307/1969022.

- Wald, A. (1950). Statistical Decision Functions, John Wiley, NY.

- Shabanzadeh, Morteza; Fattahi, Mohammad (2015). "Generation Maintenance Scheduling via robust optimization". 2015 23rd Iranian Conference on Electrical Engineering. pp. 1504–1509. doi:10.1109/IranianCEE.2015.7146458. ISBN 978-1-4799-1972-7.

External links

- ROME: Robust Optimization Made Easy

- Robust Decision-Making Under Severe Uncertainty

- Robustimizer: Robust optimization software

|

KSF

KSF