Audio search engine

Topic: Software

From HandWiki - Reading time: 4 min

This article does not cite any external source. HandWiki requires at least one external source. (April 2020)

An audio search engine is a web-based search engine that crawls the web for audio content. The information it indexes can consist of web pages, images, audio files, or other types of documents. Various techniques exist for searching with these engines.

Types of search

Audio search from text

Text entered into a search bar by the user is compared against the search engine's database. Matching results are listed with a brief description of each audio file and its characteristics, such as sample frequency, bit rate, file type, length or duration, and coding type. The user is given the option of downloading the resulting files.
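As a minimal sketch of the text-query flow described above, the Python below matches query words against titles in an in-memory catalogue and lists each hit with its technical characteristics and a download link. The `AudioFile` record, the title-only word matching, and the example URLs are illustrative assumptions; a real engine would query an index rather than scan a list.

```python
from dataclasses import dataclass


@dataclass
class AudioFile:
    """One catalogue entry; the fields mirror the characteristics listed above (hypothetical schema)."""
    title: str
    url: str
    sample_rate_hz: int
    bit_rate_kbps: int
    file_type: str
    duration_s: float
    codec: str


def describe(f: AudioFile) -> str:
    """Brief result line: title, technical characteristics and a download link."""
    return (f"{f.title} [{f.file_type}, {f.codec}, {f.sample_rate_hz} Hz, "
            f"{f.bit_rate_kbps} kbps, {f.duration_s:.0f} s] -> {f.url}")


def text_search(query: str, catalogue: list[AudioFile]) -> list[str]:
    """Return descriptions of files whose title contains every query word."""
    terms = set(query.lower().split())
    hits = [f for f in catalogue if terms <= set(f.title.lower().split())]
    return [describe(f) for f in hits]
```

Searching for `"rain roof"` in a catalogue holding a WAV file titled "Rain on a tin roof" would return one line such as `Rain on a tin roof [wav, PCM, 44100 Hz, 1411 kbps, 62 s] -> https://example.com/rain.wav`.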

Audio search from image

The Query by Example (QBE) system is a search approach that uses content-based image retrieval (CBIR). Keywords are generated from the analysed image and are then used to search for audio files in the database. The results are displayed according to the user's preferences regarding file type (WAV, MP3, AIFF, etc.) or other characteristics.
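The keyword-matching half of this pipeline can be sketched as follows, assuming the CBIR step has already produced keywords for the image (that step is not shown) and that each audio file carries descriptive tags. Ranking by the number of shared keywords and the `preferred_types` filter are illustrative choices, not a documented QBE algorithm.

```python
def search_by_image_keywords(image_keywords, audio_db, preferred_types=None):
    """Rank audio files by how many image-derived keywords appear in their tags.

    image_keywords: keywords assumed to come from a CBIR step (not shown here).
    audio_db: {file_name: (file_type, [tags])}, a hypothetical layout.
    preferred_types: optional set such as {"wav", "mp3"} from the user's settings.
    """
    query = {k.lower() for k in image_keywords}
    scored = []
    for name, (file_type, tags) in audio_db.items():
        if preferred_types and file_type not in preferred_types:
            continue  # respect the user's file-type preference
        overlap = len(query & {t.lower() for t in tags})
        if overlap:
            scored.append((overlap, name))
    # Most shared keywords first; ties broken alphabetically for stable output.
    scored.sort(key=lambda s: (-s[0], s[1]))
    return [name for _, name in scored]
```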

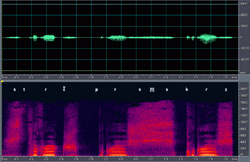

Below: a spectrogram of sound A

Audio search from audio

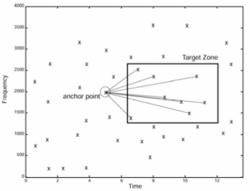

In audio search from audio, the user plays the audio of a song to the computer microphone, either with a music player or by singing or humming. A sound pattern, A, is then derived from the audio waveform, and a frequency representation is derived from its Fourier transform. This pattern is matched against a pattern, B, derived in the same way from the waveform and transform of each sound file in the database. All audio files in the database whose patterns are similar to the query pattern are displayed as search results.
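A minimal sketch of this matching step, assuming all clips share one sample rate and length: each waveform is reduced to the magnitude of its discrete Fourier transform (patterns A and B), and database sounds are ranked by cosine similarity to the query. The naive O(n²) DFT keeps the example dependency-free, and the 0.8 threshold is an arbitrary assumption; practical systems use an FFT over short frames and more robust features.

```python
import cmath
import math


def magnitude_spectrum(samples):
    """Pattern A or B: |DFT| of the waveform (naive O(n^2) DFT, non-negative bins only)."""
    n = len(samples)
    return [abs(sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
            for k in range(n // 2)]


def cosine(a, b):
    """Cosine similarity of two equal-length spectra (1.0 means identical shape)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def search(query_samples, database, threshold=0.8):
    """Return (name, score) for each database sound whose pattern B resembles pattern A."""
    pattern_a = magnitude_spectrum(query_samples)
    results = []
    for name, samples in database.items():
        score = cosine(pattern_a, magnitude_spectrum(samples))
        if score >= threshold:
            results.append((name, score))
    return sorted(results, key=lambda r: r[1], reverse=True)
```

Because only magnitudes are compared, a quieter recording of the same tone still scores close to 1.0, while a tone at a different frequency scores close to 0.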

Design and algorithms

Audio search has evolved slowly through several basic search formats that exist today, all of which use keywords. The keywords for each search can be found in the title of the media, in any text attached to the media, and in the content of linked web pages; they can also be defined by the authors and users of video-hosting resources.
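The keyword sources listed above can be combined into one inverted index, sketched below under stated assumptions: each source is a named text field, and the per-field weights in `FIELD_WEIGHTS` (title counted more heavily than linked pages) are hypothetical values chosen for illustration.

```python
import re
from collections import defaultdict

# Hypothetical weights: how much a keyword counts depending on where it was found.
FIELD_WEIGHTS = {"title": 3.0, "description": 2.0, "user_tags": 2.0, "linked_page": 1.0}


def tokenize(text):
    """Lower-case alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(items):
    """items: {item_id: {field_name: text}} -> inverted index {term: {item_id: weight}}."""
    index = defaultdict(lambda: defaultdict(float))
    for item_id, fields in items.items():
        for field, text in fields.items():
            weight = FIELD_WEIGHTS.get(field, 1.0)
            for term in tokenize(text):
                index[term][item_id] += weight
    return index


def keyword_search(index, query):
    """Sum the weights of all query terms per item; best-scoring items first."""
    scores = defaultdict(float)
    for term in tokenize(query):
        for item_id, weight in index.get(term, {}).items():
            scores[item_id] += weight
    return sorted(scores, key=lambda i: (-scores[i], i))
```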

Some search engines can search recorded speech such as podcasts, though this can be difficult when there is background noise. A typical language has around 40 phonemes, and there are about 400 across all spoken languages. Rather than applying a text search algorithm after speech-to-text processing is complete, some engines use a phonetic search algorithm to find results within the spoken word. Others work by listening to the entire podcast and creating a text transcription.
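Phonetic search can be illustrated as approximate matching of a phoneme sequence inside a longer phoneme stream, which tolerates some recognition errors. The sketch below assumes that an acoustic model has already turned the audio into phoneme symbols (ARPAbet-style labels are used here) and that the query word has been converted to phonemes with a pronunciation dictionary; neither step is shown. It uses Sellers' edit-distance algorithm for approximate substring matching.

```python
def phonetic_find(query, phones, max_errors=1):
    """Return end positions j such that some phones[i:j] is within max_errors edits of query.

    Sellers' algorithm: the cost of starting a match is 0 at every position of
    the phoneme stream, so a match may begin anywhere.
    """
    m = len(query)
    prev = list(range(m + 1))  # column before any phoneme: i deletions for i query symbols
    hits = []
    for j, phone in enumerate(phones, start=1):
        cur = [0] * (m + 1)  # cur[0] = 0: an empty query prefix matches for free
        for i in range(1, m + 1):
            cost = 0 if query[i - 1] == phone else 1
            cur[i] = min(prev[i] + 1,         # extra phoneme in the stream
                         cur[i - 1] + 1,      # query phoneme missing from the stream
                         prev[i - 1] + cost)  # match or substitution
        if cur[m] <= max_errors:
            hits.append(j)
        prev = cur
    return hits
```

Each hit is an end position in the phoneme stream; with a timestamp per phoneme it maps back to a time in the recording. When errors are allowed, adjacent end positions can belong to the same occurrence.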

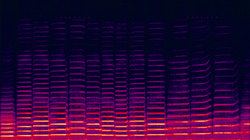

Applications such as Munax use several independent ranking processes that combine the inverted index with hundreds of search parameters to produce the final ranking for each document.

Shazam, by contrast, works by analyzing the captured sound and seeking a match based on an acoustic fingerprint in a database of more than 11 million songs. Shazam identifies songs by an audio fingerprint derived from a time-frequency graph called a spectrogram, and it stores a catalogue of these fingerprints in a database. The user tags a song for 10 seconds and the application creates an audio fingerprint from it. Shazam then searches the database for matches. If there is a match, it returns the information to the user; otherwise it returns a "song not known" dialogue. Shazam can identify prerecorded music being broadcast from any source, such as a radio, television, cinema or music in a club, provided that the background noise is not loud enough to prevent an acoustic fingerprint from being taken and that the song is present in the software's database.[citation needed]
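The fingerprint-and-lookup loop described above can be illustrated with a toy version of peak-pair ("landmark") hashing, one widely described fingerprinting technique; it is not Shazam's actual code. The sketch computes a coarse spectrogram, keeps the strongest frequency bin of each frame as a constellation point, hashes pairs of nearby points as `(f1, f2, dt)`, and identifies a sample by voting on consistent time offsets. The frame size, fan-out, one-peak-per-frame constellation and vote threshold are all assumptions chosen for illustration.

```python
import cmath
import math
from collections import Counter, defaultdict

FRAME = 64    # samples per analysis frame (assumed)
FAN_OUT = 3   # each anchor peak is paired with this many later peaks (assumed)


def spectrogram(signal, frame=FRAME):
    """Magnitude spectrogram: naive DFT of consecutive, non-overlapping frames."""
    frames = []
    for start in range(0, len(signal) - frame + 1, frame):
        chunk = signal[start:start + frame]
        frames.append([abs(sum(chunk[n] * cmath.exp(-2j * math.pi * k * n / frame)
                               for n in range(frame)))
                       for k in range(frame // 2)])
    return frames


def peaks(spec):
    """Constellation points: (frame index, strongest frequency bin) per frame."""
    return [(t, max(range(len(mags)), key=mags.__getitem__)) for t, mags in enumerate(spec)]


def hashes(signal):
    """Pair each peak with the next FAN_OUT peaks: ((f1, f2, dt), anchor time)."""
    pts = peaks(spectrogram(signal))
    out = []
    for i, (t1, f1) in enumerate(pts):
        for t2, f2 in pts[i + 1:i + 1 + FAN_OUT]:
            out.append(((f1, f2, t2 - t1), t1))
    return out


class FingerprintDB:
    """Catalogue of fingerprints: hash -> list of (song id, anchor time)."""

    def __init__(self):
        self.index = defaultdict(list)

    def add(self, song_id, signal):
        for h, t in hashes(signal):
            self.index[h].append((song_id, t))

    def identify(self, sample, min_votes=3):
        """Return the best-matching song id, or None ("song not known")."""
        votes = Counter()
        for h, t_query in hashes(sample):
            for song_id, t_db in self.index.get(h, []):
                votes[(song_id, t_db - t_query)] += 1  # true matches share one offset
        if not votes:
            return None
        (song_id, _), count = votes.most_common(1)[0]
        return song_id if count >= min_votes else None
```

A genuine match produces many hashes that agree on a single time offset between sample and song, so voting on `(song, offset)` rather than on the song alone helps filter out chance hash collisions.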

Notable engines

Deep audio search

- Picsearch Audio Search has been licensed to search portals since 2006. Picsearch is a search technology provider that powers image, video and audio search for over 100 major search engines around the world.

For smartphones

- SoundHound (previously known as Midomi) is both an application and the company that makes it; it lets users search using audio. It offers an audio-based artificial intelligence service as well as services for finding songs and details about them by singing, humming or recording them.

- Shazam is an app for smartphones and Mac computers, best known for its music identification capabilities. It uses a built-in microphone to gather a brief sample of the audio being played. It creates an acoustic fingerprint based on the sample and compares it against a central database for a match. If it finds a match, it sends information such as the artist, song title, and album back to the user.

- Doreso identifies a song from its melody, hummed or sung into a microphone, or from the name of a song or singer entered directly. The app gives information about the song title and its singer, and allows the user to purchase the song.

- Munax (defunct) was a company that released the first version of its all-content search engine in 2005. Its PlayAudioVideo multimedia search engine, created in July 2007, was the first true search engine for multimedia, providing search on the web for images, video and audio in the same search engine, and allowing users to preview them on the same page.[citation needed] Munax has since shut down.[citation needed]

See also

- Search by sound

- Music information retrieval

- Video search engine
