Tachyon

Topic: Software

From HandWiki - Reading time: 11 min

| Original author(s) | John E. Stone |
|---|---|
| Written in | C |
| Type | Ray tracing/3D rendering software |
| License | BSD-3-Clause |
| Website | jedi |

Tachyon is a parallel/multiprocessor ray tracing system, implemented as a library for use on distributed memory parallel computers, shared memory computers, and clusters of workstations. Tachyon implements rendering features such as ambient occlusion lighting, depth-of-field focal blur, shadows, and reflections. It was originally developed for the Intel iPSC/860 by John Stone for his M.S. thesis at the University of Missouri-Rolla.[1] Tachyon subsequently became a more functional and complete ray tracing engine, and it is now incorporated into a number of other open source software packages, such as VMD and SageMath. Tachyon is released under the permissive BSD-3-Clause license (included in the tarball).

Evolution and Features

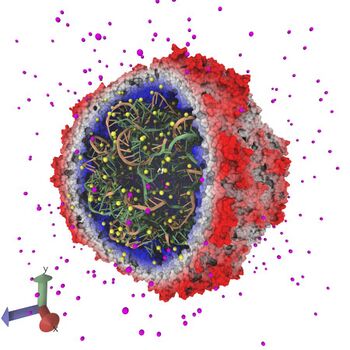

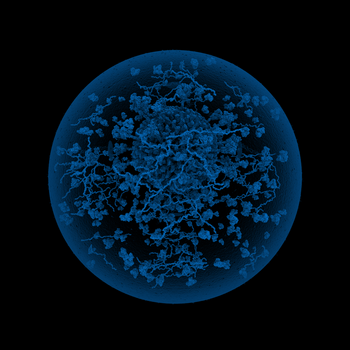

Tachyon was originally developed for the Intel iPSC/860, a distributed memory parallel computer with a hypercube interconnect topology, built around the Intel i860, an early RISC CPU with a VLIW architecture. Tachyon was first written using Intel's proprietary NX message passing interface for the iPSC series, and it was ported to the earliest versions of MPI shortly thereafter, in 1995. Tachyon was then adapted to run on the Intel Paragon platform using the Paragon XP/S 150 MP at Oak Ridge National Laboratory (ORNL). The ORNL XP/S 150 MP was the first platform Tachyon supported that combined large-scale distributed memory message passing among nodes with shared memory multithreading within nodes. Adaptation of Tachyon to a variety of conventional Unix-based workstation platforms and early clusters followed, including a port to the IBM SP2. Tachyon was incorporated into the PARAFLOW CFD code to allow in-situ volume visualization of supersonic combustor flows on the Paragon XP/S at NASA Langley Research Center, providing a significant performance gain over the conventional post-processing visualization approaches that had been used previously.[2]

Beginning in 1999, support for Tachyon was incorporated into the molecular graphics program VMD. This began an ongoing period of co-development of Tachyon and VMD, during which many new Tachyon features were added specifically for molecular graphics. Tachyon was used to render the winning image in the illustration category of the NSF 2004 Visualization Challenge.[3] In 2007, Tachyon added support for ambient occlusion lighting, one of the features that made it increasingly popular for molecular visualization in conjunction with VMD. VMD and Tachyon were gradually adapted to support routine visualization and analysis tasks on clusters, and later on large petascale supercomputers.

Tachyon was used to produce figures, movies, and the Nature cover image of the atomic structure of the HIV-1 capsid solved by Zhao et al. in 2013, on the Blue Waters petascale supercomputer at NCSA, U. Illinois.[4][5] Both CPU and GPU versions of Tachyon were used to render images of the SARS-CoV-2 virion, spike protein, and aerosolized virion in three separate ACM Gordon Bell COVID-19 research projects, including the winning project at Supercomputing 2020[6] and two finalist projects at Supercomputing 2021.[citation needed]

Use in Parallel Computing Demonstrations, Training, and Benchmarking

Owing in part to its portability to a diverse range of platforms, Tachyon has been used as a test case in a variety of parallel computing and compiler research articles.

In 1999, John Stone helped Bill Magro adapt Tachyon to support early versions of the OpenMP directive-based parallel computing standard, using Kuck and Associates' KCC compiler. Tachyon was shown as a demo performing interactive ray tracing on DEC Alpha workstations using KCC and OpenMP.

In 2000, Intel acquired Kuck and Associates Inc.,[7] and Tachyon continued to be used as an OpenMP demonstration. Intel later used Tachyon to develop a variety of programming examples for its Threading Building Blocks (TBB) parallel programming system, which continues to include an older version of the program as an example to the present day.[8][9]

In 2006, the SPEC High Performance Group (HPG) selected Tachyon for inclusion in the SPEC MPI 2007 benchmark suite.[10][11]

Beyond its typical use as a tool for rendering high quality images, Tachyon has also been used as a test case and point of comparison in a variety of research projects, likely due to its portability and its inclusion in SPEC MPI 2007. These projects relate to parallel rendering and visualization,[12][13][14][15][16][17][18][19][20] cloud computing,[21][22][23][24][25] parallel computing,[26][27][28] compilers,[29][30][31][32] runtime systems,[33][34] computer architecture,[35][36][37] performance analysis tools,[38][39][40] and energy efficiency of HPC systems.[41][42][43]

External links

- Tachyon Parallel/Multiprocessor Ray Tracing System website

- Tachyon ray tracer (built into VMD)

- John Stone's M.S. thesis describing the earliest versions of Tachyon

References

- Stone, John E. (January 1998). "An Efficient Library for Parallel Ray Tracing and Animation". Masters Theses. http://scholarsmine.mst.edu/masters_theses/1747/.

- Stone, J.; Underwood, M. (1996). "Rendering of numerical flow simulations using MPI". Proceedings. Second MPI Developer's Conference. pp. 138–141. doi:10.1109/MPIDC.1996.534105. ISBN 978-0-8186-7533-1.

- "Water Permeation Through Aquaporins". Theoretical and Computational Biophysics Group, University of Illinois at Urbana-Champaign. https://www.nsf.gov/news/special_reports/scivis/winners_2004.jsp#illustration.

- Zhao, Gongpu; Perilla, Juan R.; Yufenyuy, Ernest L.; Meng, Xin; Chen, Bo; Ning, Jiying; Ahn, Jinwoo; Gronenborn, Angela M. et al. (2013). "Mature HIV-1 capsid structure by cryo-electron microscopy and all-atom molecular dynamics". Nature 497 (7451): 643–646. doi:10.1038/nature12162. PMID 23719463. Bibcode: 2013Natur.497..643Z.

- Stone, John E.; Isralewitz, Barry; Schulten, Klaus (2013). "Early experiences scaling VMD molecular visualization and analysis jobs on Blue Waters". 2013 Extreme Scaling Workshop (XSW 2013). pp. 43–50. doi:10.1109/XSW.2013.10. ISBN 978-1-4799-3691-5.

- Casalino, Lorenzo; Dommer, Abigail C; Gaieb, Zied; Barros, Emilia P; Sztain, Terra; Ahn, Surl-Hee; Trifan, Anda; Brace, Alexander et al. (September 2021). "AI-driven multiscale simulations illuminate mechanisms of SARS-CoV-2 spike dynamics". The International Journal of High Performance Computing Applications 35 (5): 432–451. doi:10.1177/10943420211006452. ISSN 1094-3420.

- "Intel To Acquire Kuck & Associates. Acquisition Expands Intel's Capabilities in Software Development Tools for Multiprocessor Computing". http://www.intel.com/pressroom/archive/releases/2000/cn040600.htm.

- "Intel® Threading Building Blocks (Intel® TBB)". https://www.threadingbuildingblocks.org/.

- "Parallel for -Tachyon". Intel Corporation. 2009-03-09. https://software.intel.com/en-us/articles/parallel-for-tachyon.

- "122.tachyon SPEC MPI2007 Benchmark Description". https://www.spec.org/auto/mpi2007/Docs/122.tachyon.html.

- Müller, Matthias S.; Van Waveren, Matthijs; Lieberman, Ron; Whitney, Brian; Saito, Hideki; Kumaran, Kalyan; Baron, John; Brantley, William C. et al. (2009). "SPEC MPI2007—an application benchmark suite for parallel systems using MPI". Concurrency and Computation: Practice and Experience. doi:10.1002/cpe.1535.

- Rosenberg, Robert O.; Lanzagorta, Marco O.; Chtchelkanova, Almadena; Khokhlov, Alexei (2000). "Parallel visualization of large data sets". In Erbacher, Robert F; Chen, Philip C; Roberts, Jonathan C et al. Visual Data Exploration and Analysis VII. 3960. pp. 135–143. doi:10.1117/12.378889.

- Lawlor, Orion Sky (2001). "Impostors for Parallel Interactive Computer Graphics". M.S., University of Illinois at Urbana-Champaign. http://lawlor.cs.uaf.edu/~olawlor/academic/thesis/lawlor_thesis.pdf.

- Lawlor, Orion Sky; Page, Matthew; Genetti, Jon (2008). "MPIglut: powerwall programming made easier". https://www.cs.uaf.edu/sw/mpiglut/mpiglut_2008wscg.pdf.

- McGuigan, Michael (2008-01-09). "Toward the Graphics Turing Scale on a Blue Gene Supercomputer". arXiv:0801.1500 [cs.GR].

- Lawlor, Orion Sky; Genetti, Jon (2011). "Interactive volume rendering aurora on the GPU". https://www.cs.uaf.edu/~olawlor/papers/2010/aurora/lawlor_aurora_2010.pdf.

- Grottel, Sebastian; Krone, Michael; Scharnowski, Katrin; Ertl, Thomas (2012). "Object-space ambient occlusion for molecular dynamics". 2012 IEEE Pacific Visualization Symposium. pp. 209–216. doi:10.1109/PacificVis.2012.6183593. ISBN 978-1-4673-0866-3.

- Stone, John E.; Isralewitz, Barry; Schulten, Klaus (2013). "Early experiences scaling VMD molecular visualization and analysis jobs on Blue Waters". 2013 Extreme Scaling Workshop (XSW 2013). pp. 43–50. doi:10.1109/XSW.2013.10. ISBN 978-1-4799-3691-5.

- Stone, John E.; Vandivort, Kirby L.; Schulten, Klaus (2013). "GPU-accelerated molecular visualization on petascale supercomputing platforms". Proceedings of the 8th International Workshop on Ultrascale Visualization - Ultra Vis '13. pp. 1–8. doi:10.1145/2535571.2535595. ISBN 9781450325004.

- Sener, Melih. "Visualization of Energy Conversion Processes in a Light Harvesting Organelle at Atomic Detail". http://sc14.supercomputing.org/sites/all/themes/sc14/files/archive/sci_vis/sci_vis_files/svs115s3-file4.pdf.

- Patchin, Philip; Lagar-Cavilla, H. Andrés; De Lara, Eyal; Brudno, Michael (2009). "Adding the easy button to the cloud with SnowFlock and MPI". Proceedings of the 3rd ACM Workshop on System-level Virtualization for High Performance Computing - HPCVirt '09. pp. 1–8. doi:10.1145/1519138.1519139. ISBN 9781605584652.

- Neill, Richard; Carloni, Luca P.; Shabarshin, Alexander; Sigaev, Valeriy; Tcherepanov, Serguei (2011). "Embedded Processor Virtualization for Broadband Grid Computing". 2011 IEEE/ACM 12th International Conference on Grid Computing. pp. 145–156. doi:10.1109/Grid.2011.27. ISBN 978-1-4577-1904-2.

- Franz, Daniel; Tao, Jie; Marten, Holger; Streit, Achim (2011). "A Workflow Engine for Computing Clouds". CLOUD COMPUTING 2011: The Second International Conference on Cloud Computing, GRIDs, and Virtualization. pp. 1–6.

- Tao, Jie (2012). "An Implementation Approach for Inter-Cloud Service Combination". International Journal on Advances in Software 5 (1&2): 65–75. http://www.chinacloud.cn/upload/2012-08/12080207287134.pdf.

- Neill, Richard W. (2013). Heterogeneous Cloud Systems Based on Broadband Embedded Computing (Thesis). Columbia University. doi:10.7916/d8hh6jg1.

- Manjikian, Naraig (2010). "Exploring Multiprocessor Design and Implementation Issues with In-Class Demonstrations". Proceedings of the Canadian Engineering Education Association. doi:10.24908/pceea.v0i0.3110. http://queens.scholarsportal.info/ojs/index.php/PCEEA/article/download/3110/3048. Retrieved January 30, 2016.

- Kim, Wooyoung; Voss, M. (2011-01-01). "Multicore Desktop Programming with Intel Threading Building Blocks". IEEE Software 28 (1): 23–31. doi:10.1109/MS.2011.12. ISSN 0740-7459.

- Tchiboukdjian, Marc; Carribault, Patrick; Perache, Marc (2012). "Hierarchical Local Storage: Exploiting Flexible User-Data Sharing Between MPI Tasks". 2012 IEEE 26th International Parallel and Distributed Processing Symposium. pp. 366–377. doi:10.1109/IPDPS.2012.42. ISBN 978-1-4673-0975-2.

- Ghodrat, Mohammad Ali; Givargis, Tony; Nicolau, Alex (2008). "Control flow optimization in loops using interval analysis". Proceedings of the 2008 international conference on Compilers, architectures and synthesis for embedded systems - CASES '08. p. 157. doi:10.1145/1450095.1450120. ISBN 9781605584690.

- Guerin, Xavier (2010-05-12). An Efficient Embedded Software Development Approach for Multiprocessor System-on-Chips (PhD thesis). Institut National Polytechnique de Grenoble - INPG. Retrieved January 30, 2016.

- Milanez, Teo; Collange, Sylvain; Quintão Pereira, Fernando Magno; Meira Jr., Wagner; Ferreira, Renato (2014-10-01). "Thread scheduling and memory coalescing for dynamic vectorization of SPMD workloads". Parallel Computing 40 (9): 548–558. doi:10.1016/j.parco.2014.03.006. https://hal.inria.fr/hal-01087054.

- Ojha, Davendar Kumar; Sikka, Geeta (2014-01-01). Satapathy, Suresh Chandra (ed.). A Study on Vectorization Methods for Multicore SIMD Architecture Provided by Compilers. Advances in Intelligent Systems and Computing. Springer International Publishing. pp. 723–728. doi:10.1007/978-3-319-03107-1_79. ISBN 9783319031064.

- Kang, Mikyung; Kang, Dong-In; Lee, Seungwon; Lee, Jaedon (2013). "A system framework and API for run-time adaptable parallel software". Proceedings of the 2013 Research in Adaptive and Convergent Systems on - RACS '13. pp. 51–56. doi:10.1145/2513228.2513239. ISBN 9781450323482.

- Biswas, Susmit; Supinski, Bronis R. de; Schulz, Martin; Franklin, Diana; Sherwood, Timothy; Chong, Frederic T. (2011). "Exploiting Data Similarity to Reduce Memory Footprints". 2011 IEEE International Parallel & Distributed Processing Symposium. pp. 152–163. doi:10.1109/IPDPS.2011.24. ISBN 978-1-61284-372-8.

- Man-Lap Li; Sasanka, R.; Adve, S.V.; Yen-Kuang Chen; Debes, E. (2005). "The ALPbench benchmark suite for complex multimedia applications". IEEE International. 2005 Proceedings of the IEEE Workload Characterization Symposium, 2005. pp. 34–45. doi:10.1109/IISWC.2005.1525999. ISBN 978-0-7803-9461-2.

- Zhang, Jiaqi; Chen, Wenguang; Tian, Xinmin; Zheng, Weimin (2008). "Exploring the Emerging Applications for Transactional Memory". 2008 Ninth International Conference on Parallel and Distributed Computing, Applications and Technologies. pp. 474–480. doi:10.1109/PDCAT.2008.77. ISBN 978-0-7695-3443-5.

- Almaless, Ghassan; Wajsburt, Franck (2012). "On the scalability of image and signal processing parallel applications on emerging cc-NUMA many-cores". Design and Architectures for Signal and Image Processing (DASIP), 2012 Conference on. IEEE. https://www.almos.fr/trac/almos/chrome/site/ALMOS-image-processing-Almaless.pdf.

- Szebenyi, Zoltán; Wolf, Felix; Wylie, Brian J.N. (2011). "Performance Analysis of Long-Running Applications". 2011 IEEE International Symposium on Parallel and Distributed Processing Workshops and PHD Forum. pp. 2105–2108. doi:10.1109/IPDPS.2011.388. ISBN 978-1-61284-425-1.

- Szebenyi, Zoltán; Wylie, Brian J. N.; Wolf, Felix (2008-06-27). Kounev, Samuel (ed.). SCALASCA Parallel Performance Analyses of SPEC MPI2007 Applications. Lecture Notes in Computer Science. Springer Berlin Heidelberg. pp. 99–123. doi:10.1007/978-3-540-69814-2_8. ISBN 9783540698135.

- Wagner, Michael; Knupfer, Andreas; Nagel, Wolfgang E. (2013). "Hierarchical Memory Buffering Techniques for an In-Memory Event Tracing Extension to the Open Trace Format 2". 2013 42nd International Conference on Parallel Processing. pp. 970–976. doi:10.1109/ICPP.2013.115. ISBN 978-0-7695-5117-3.

- Wonyoung Kim; Gupta, Meeta S.; Wei, Gu-Yeon; Brooks, David (2008). "System level analysis of fast, per-core DVFS using on-chip switching regulators". 2008 IEEE 14th International Symposium on High Performance Computer Architecture. pp. 123–134. doi:10.1109/HPCA.2008.4658633. ISBN 978-1-4244-2070-4.

- Hackenberg, Daniel; Schöne, Robert; Molka, Daniel; Müller, Matthias S.; Knüpfer, Andreas (2010). "Quantifying power consumption variations of HPC systems using SPEC MPI benchmarks". Computer Science - Research and Development 25 (3–4): 155–163. doi:10.1007/s00450-010-0118-0.

- Ioannou, Nikolas; Kauschke, Michael; Gries, Matthias; Cintra, Marcelo (2011). "Phase-Based Application-Driven Hierarchical Power Management on the Single-chip Cloud Computer". 2011 International Conference on Parallel Architectures and Compilation Techniques. pp. 131–142. doi:10.1109/PACT.2011.19. ISBN 978-1-4577-1794-9.
