Sonar signal processing

From HandWiki - Reading time: 7 min

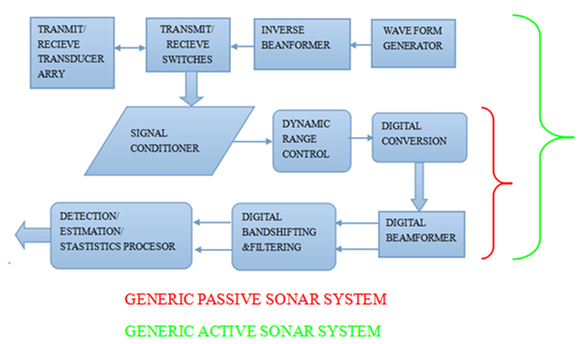

Sonar systems are generally used underwater for range finding and detection. Active sonar emits an acoustic signal, or pulse of sound, into the water. The sound bounces off the target object and returns an “echo” to the sonar transducer. Passive sonar, by contrast, does not emit its own signal, which is an advantage for military vessels. However, a single passive sonar cannot measure the range of an object; several passive listening devices must be used together to triangulate a sound source. Whether the sonar is active or passive, the information contained in the received signal cannot be used without signal processing. To extract the useful information from the mixture of signal and noise, the raw acoustic data is passed through a sequence of processing steps.

Active Sonar

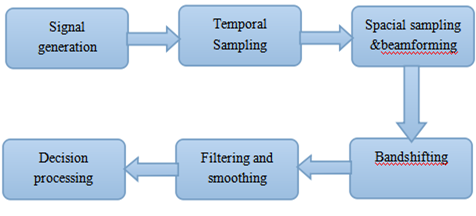

For active sonar, the signal processing system consists of six steps.

Signal generation

To generate a signal pulse, typical analog implementations use oscillators and voltage-controlled oscillators (VCOs) followed by modulators. Amplitude modulation is used to weight the pulse envelope and to translate the signal spectrum up to a suitable carrier frequency for transmission.
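As a digital illustration of the same idea, the following minimal sketch builds a sinusoidal pulse whose envelope is weighted by a Hann window and checks that its spectrum sits at the carrier. The function name `cw_pulse`, the Hann weighting, and all numeric values (50 kHz carrier, 1 ms pulse, 400 kHz sample rate) are assumptions chosen for the example, not parameters from the source.

```python
import numpy as np

def cw_pulse(carrier_hz, duration_s, sample_rate_hz):
    """Return (t, pulse): a Hann-weighted sinusoidal pulse on a carrier."""
    n = int(round(duration_s * sample_rate_hz))
    t = np.arange(n) / sample_rate_hz
    envelope = np.hanning(n)                       # amplitude weighting of the pulse
    carrier = np.cos(2 * np.pi * carrier_hz * t)
    # Multiplying by the carrier (amplitude modulation) moves the
    # envelope spectrum from 0 Hz up to the carrier frequency.
    return t, envelope * carrier

# Assumed example values: 50 kHz carrier, 1 ms pulse, 400 kHz sample rate.
sample_rate_hz = 400e3
t, pulse = cw_pulse(carrier_hz=50e3, duration_s=1e-3, sample_rate_hz=sample_rate_hz)

spectrum = np.abs(np.fft.rfft(pulse))
freqs = np.fft.rfftfreq(len(pulse), d=1 / sample_rate_hz)
peak_hz = freqs[np.argmax(spectrum)]
print(f"spectral peak near {peak_hz:.0f} Hz")
```

A different window (or a frequency-modulated pulse) could be substituted without changing the structure of the sketch.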

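In a sonar system, the acoustic pressure field can be represented as $s(t, \vec{r})$. The field is a function of four variables: time $t$ and the spatial coordinate $\vec{r} = (x, y, z)$. Its Fourier transform, the frequency-domain representation, is[1]

$$S(f, \vec{\nu}) = \int_{-\infty}^{\infty} \int_{\mathbb{R}^3} s(t, \vec{r})\, e^{-j 2\pi (f t - \vec{\nu}^{T} \vec{r})}\, d\vec{r}\, dt$$

In this formula $f$ is the temporal frequency and $\vec{\nu}$ is the spatial frequency. The signal $s(t, \vec{r}) = e^{j 2\pi (f t - \vec{\nu}^{T} \vec{r})}$ is often called an elemental signal, because any 4-D field can be generated by taking a linear combination of elemental signals. The direction of $\vec{\nu}$ gives the direction of propagation of the wave, and the speed of the wave is

$$c = \frac{f}{|\vec{\nu}|}$$

The wavelength is

$$\lambda = \frac{1}{|\vec{\nu}|}$$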
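As a rough worked example (the sound speed is an assumed typical value for seawater, not a figure from the source), a wave with temporal frequency $f = 50$ kHz travelling at $c \approx 1500$ m/s has spatial frequency magnitude and wavelength

$$|\vec{\nu}| = \frac{f}{c} = \frac{50\,000\ \text{Hz}}{1500\ \text{m/s}} \approx 33.3\ \text{m}^{-1}, \qquad \lambda = \frac{1}{|\vec{\nu}|} = \frac{c}{f} = 0.03\ \text{m}$$

that is, a wavelength of about 3 cm.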

Temporal sampling

Digital computers make data analysis faster and more efficient, so the analog signal must be converted into a digital signal by sampling it in the time domain. The conversion is typically carried out by three devices: an anti-aliasing filter, a dynamic range controller, and an analog-to-digital converter.

For simplicity, the signal is sampled at equal time intervals. To prevent distortion (aliasing in the frequency domain) when the signal is reconstructed from its samples, the sampling rate must be high enough. By the Nyquist–Shannon sampling theorem, the information content of an analog signal $s(t, \vec{r})$ is preserved if the sampling rate exceeds twice the highest frequency present in the signal. If the sampling period is $T$, the signal after temporal sampling is

$$s(nT, \vec{r})$$

where $n$ is an integer.
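The effect of sampling too slowly can be seen in a small numerical sketch. The helper `apparent_frequency` and the tone and sample-rate values are hypothetical, chosen only for illustration: a 3 kHz tone sampled at 10 kHz is recovered at 3 kHz, while a 7 kHz tone sampled at the same rate violates the sampling theorem and shows up at 3 kHz as well.

```python
import numpy as np

def apparent_frequency(f_signal_hz, f_sample_hz, n_samples=1000):
    """Sample a real sinusoid and return the frequency of the strongest FFT bin."""
    n = np.arange(n_samples)
    # s(nT) with sampling period T = 1 / f_sample_hz
    samples = np.cos(2 * np.pi * f_signal_hz * n / f_sample_hz)
    spectrum = np.abs(np.fft.rfft(samples))
    freqs = np.fft.rfftfreq(n_samples, d=1 / f_sample_hz)
    return freqs[np.argmax(spectrum)]

ok = apparent_frequency(3_000, 10_000)        # 10 kHz > 2 * 3 kHz: no aliasing
aliased = apparent_frequency(7_000, 10_000)   # 10 kHz < 2 * 7 kHz: aliased
print(f"3 kHz tone appears at {ok:.0f} Hz, 7 kHz tone appears at {aliased:.0f} Hz")
```

After sampling, the two tones are indistinguishable, which is why the band of interest has to be limited before conversion.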

Spatial sampling and beamforming

A well-chosen sensor array and beamformer are essential for good sonar performance. To infer information about the acoustic field, it is necessary to sample the field in both space and time. Temporal sampling was discussed in the previous section. The sensor array samples the spatial domain, while the beamformer combines the sensor outputs in a way that enhances the detection and estimation performance of the system. The input to the beamformer is a set of time series, and its output is another set of time series or a set of Fourier coefficients.

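Beamforming is a kind of filtering that can be applied to isolate signal components propagating in a particular direction. The simplest beamformer is the weighted delay-and-sum beamformer, which can be implemented with an array of receivers or sensors. In the picture, each triangle is a sensor that samples the field in the spatial domain. After spatial sampling, each sensor signal is delayed and weighted, and the output is the sum of all the weighted signals. Assume an array of $M$ sensors distributed in space, such that the $m$th sensor is located at position $\vec{r}_m$ and the signal it receives is denoted $x_m(t)$. After beamforming, the signal is

$$b(t) = \sum_{m=0}^{M-1} w_m\, x_m(t - \tau_m)$$

where $w_m$ is the weight and $\tau_m$ is the delay applied to the $m$th sensor. For a desired direction of propagation, described by the spatial frequency vector $\vec{\nu} = \vec{\nu}_0$ at temporal frequency $f$, set $\tau_m = -\vec{\nu}_0^{T} \vec{r}_m / f$, so that a plane wave propagating in that direction adds coherently across the array.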
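The delay-and-sum idea can be sketched numerically as follows. This is an illustration under stated assumptions rather than a reference implementation: the sound speed `C`, the 8-element half-wavelength line array, the 50 kHz tone, and the function names are all hypothetical, and the delays are applied as phase ramps in the frequency domain (a circular shift, which is exact here only because the test tone is periodic in the analysis window). The steering delay for sensor $m$ is taken as $\tau_m = -(\vec{u}_0 \cdot \vec{r}_m)/c$, where $\vec{u}_0$ is a unit vector along the assumed propagation direction.

```python
import numpy as np

C = 1500.0  # assumed sound speed in water, m/s

def delay_and_sum(x, positions, look_dir, fs, weights=None):
    """Weighted delay-and-sum: b(t) = sum_m w_m x_m(t - tau_m).

    x: (M, N) sensor time series; positions: (M, 3) sensor coordinates in m;
    look_dir: unit vector along the propagation direction to steer to.
    tau_m = -(look_dir . r_m) / C, applied as a frequency-domain phase ramp.
    """
    m, n = x.shape
    w = np.full(m, 1.0 / m) if weights is None else np.asarray(weights, dtype=float)
    tau = -(positions @ look_dir) / C
    freqs = np.fft.rfftfreq(n, d=1 / fs)
    spectra = np.fft.rfft(x, axis=1)
    delayed = spectra * np.exp(-2j * np.pi * freqs[None, :] * tau[:, None])
    return np.fft.irfft((w[:, None] * delayed).sum(axis=0), n=n)

def unit(angle_deg):
    """Unit vector in the x-y plane at the given angle from the y axis."""
    a = np.deg2rad(angle_deg)
    return np.array([np.sin(a), np.cos(a), 0.0])

# Assumed example: 8 sensors on the x axis at half-wavelength spacing, 50 kHz tone.
fs, f0, n_sensors, n_samples = 400e3, 50e3, 8, 400
positions = np.zeros((n_sensors, 3))
positions[:, 0] = np.arange(n_sensors) * (C / f0) / 2
t = np.arange(n_samples) / fs

# Plane wave propagating along unit(20): sensor m sees the tone delayed by (u . r_m) / C.
true_dir = unit(20.0)
x = np.cos(2 * np.pi * f0 * (t[None, :] - (positions @ true_dir)[:, None] / C))

on_target = np.mean(delay_and_sum(x, positions, unit(20.0), fs) ** 2)
off_target = np.mean(delay_and_sum(x, positions, unit(-40.0), fs) ** 2)
print(f"output power steered on target: {on_target:.3f}, off target: {off_target:.5f}")
```

Steering to the true direction aligns the sensor signals so they add coherently, while steering elsewhere makes them largely cancel. Non-uniform `weights` (for example a taper across the array) could be passed to trade main-lobe width against side-lobe level.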

Bandshifting

Bandshifting is employed in active and passive sonar to reduce the complexity of the hardware and software required for subsequent processing. For example, in active sonars the received signal is contained in a very narrow band of frequencies, typically about 2 kHz wide, centered at some high frequency, typically about 50 kHz. To avoid having to sample the received process at the Nyquist rate of about 100 kHz, it is more efficient to demodulate the process to baseband and then sample the complex envelope at only 2 kHz.
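A minimal sketch of bandshifting, using the 50 kHz center frequency and 2 kHz output rate from the example above, might look like this. The function name `bandshift`, the 200 kHz input sample rate, the windowed-sinc low-pass filter and its length, and the 50.3 kHz test tone are assumptions made for the illustration.

```python
import numpy as np

def bandshift(x, fs, f_center, decimation, n_taps=2001):
    """Mix a real band-pass signal to baseband, low-pass filter it, and decimate."""
    t = np.arange(len(x)) / fs
    baseband = x * np.exp(-2j * np.pi * f_center * t)       # shift f_center to 0 Hz
    cutoff = 0.5 * fs / decimation                          # half the output sample rate
    k = np.arange(n_taps) - (n_taps - 1) / 2
    taps = np.sinc(2 * cutoff / fs * k) * np.hamming(n_taps)  # windowed-sinc low-pass
    taps /= taps.sum()
    filtered = np.convolve(baseband, taps, mode="same")     # removes the image near -2 * f_center
    return 2 * filtered[::decimation]                       # complex envelope at fs / decimation

# Assumed test signal: a tone 300 Hz above the 50 kHz center, sampled at 200 kHz.
fs, f_center, decimation = 200e3, 50e3, 100
n = 40_000                                                  # 0.2 s of data
t = np.arange(n) / fs
x = np.cos(2 * np.pi * (f_center + 300.0) * t)

env = bandshift(x, fs, f_center, decimation)                # now sampled at 2 kHz
freqs = np.fft.fftfreq(len(env), d=decimation / fs)
peak_hz = freqs[np.argmax(np.abs(np.fft.fft(env)))]
print(f"{len(env)} complex samples, envelope tone at {peak_hz:.0f} Hz")
```

The 40,000 real samples are reduced to 400 complex samples, and the tone's offset from the carrier is preserved in the complex envelope.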

Filtering and smoothing

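Filters and smoothers are used extensively in modern sonar systems. After sampling, the signal has been converted from an analog signal into a discrete-time signal, so only digital filters are considered here. Moreover, although some filters are time varying or adaptive, most of the filters are linear and shift invariant. Digital filters used in sonar signal processors perform two major functions: filtering of waveforms to modify the frequency content, and smoothing of waveforms to reduce the effects of noise. The two generic types of digital filters are finite impulse response (FIR) and infinite impulse response (IIR) filters. The input-output relationship of an FIR filter is

$$y(n) = \sum_{k=0}^{N-1} h(k)\, x(n-k)$$ (1-D)

$$y(n_1, n_2) = \sum_{k_1=0}^{N_1-1} \sum_{k_2=0}^{N_2-1} h(k_1, k_2)\, x(n_1-k_1,\, n_2-k_2)$$ (2-D)

The input-output relationship of an IIR filter is

$$y(n) = \sum_{k=0}^{N-1} a_k\, x(n-k) + \sum_{k=1}^{M} b_k\, y(n-k)$$ (1-D)

$$y(n_1, n_2) = \sum_{k_1=0}^{N_1-1} \sum_{k_2=0}^{N_2-1} a_{k_1 k_2}\, x(n_1-k_1,\, n_2-k_2) + \sum_{\substack{k_1=0 \\ (k_1, k_2) \neq (0, 0)}}^{M_1} \sum_{k_2=0}^{M_2} b_{k_1 k_2}\, y(n_1-k_1,\, n_2-k_2)$$ (2-D)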

Both FIR filters and IIR filters have their advantages and disadvantages. First, the computational requirements of a sonar processor are more severe when implementing FIR filters, because an FIR filter usually needs more coefficients than an IIR filter to meet the same specification. Second, linear phase is difficult to obtain with an IIR filter, whereas an FIR filter can be designed with exactly linear phase. Third, an FIR filter is always stable, whereas an IIR filter can be unstable. In addition, FIR filters are easily designed using the windowing technique.
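These trade-offs can be illustrated with a short sketch. The function names, tap count, cutoff, and feedback coefficients are hypothetical choices for the example: a low-pass FIR filter is designed by windowing the ideal sinc response (its symmetric taps give linear phase), and a one-pole IIR smoother is shown to be stable or unstable depending on its feedback coefficient.

```python
import numpy as np

def fir_lowpass(n_taps, cutoff_hz, fs):
    """Design a low-pass FIR filter by windowing the ideal (sinc) response."""
    k = np.arange(n_taps) - (n_taps - 1) / 2
    h = np.sinc(2 * cutoff_hz / fs * k) * np.hamming(n_taps)
    return h / h.sum()                          # unit gain at 0 Hz

def fir_filter(h, x):
    """y(n) = sum_k h(k) x(n - k), with x(n) = 0 for n < 0."""
    return np.convolve(x, h)[: len(x)]

def iir_one_pole(x, b1):
    """y(n) = (1 - b1) x(n) + b1 y(n - 1); stable only if |b1| < 1."""
    y = np.zeros(len(x))
    prev = 0.0
    for n, xn in enumerate(x):
        prev = (1.0 - b1) * xn + b1 * prev
        y[n] = prev
    return y

h = fir_lowpass(n_taps=31, cutoff_hz=1000.0, fs=8000.0)
linear_phase = np.allclose(h, h[::-1])          # symmetric taps imply linear phase

impulse = np.zeros(200)
impulse[0] = 1.0
fir_response = fir_filter(h, impulse)           # exactly zero after 31 samples
stable = iir_one_pole(impulse, b1=0.9)          # impulse response decays
unstable = iir_one_pole(impulse, b1=1.1)        # impulse response grows without bound
print(linear_phase, abs(stable[-1]), abs(unstable[-1]))
```

The FIR filter needs 31 multiplications per output sample, while the one-pole IIR smoother needs two, which reflects the computational trade-off described above.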

Decision processing

In short, the goal of the sonar is to extract information from the acoustic space-time field and feed it into a prescribed decision process, so that different situations can be handled within one fixed pattern. To realize this goal, the final stage of the sonar system performs the following functions:

- Detection: Sonar detection determines whether a target signal is present in the noise.

- Classification: Sonar classification identifies what kind of source produced a detected signal.

- Parameter estimation and tracking: Estimation in sonar is often associated with the localization of a target that has already been detected.

- Normalization: Normalization makes the noise-only response of the detection statistic as uniform as possible.

- Display processing: Display processing addresses the operability and data management problems of the sonar system.
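The normalization and detection functions can be sketched together. The scheme below is one common choice, assumed here for illustration and not taken from the source: each sample of the detection statistic is divided by the mean of its neighbours (skipping a few guard cells), so that a drifting noise level maps to a roughly constant value, and a fixed threshold is then applied. The function names, window sizes, threshold, and simulated data are all hypothetical.

```python
import numpy as np

def normalize(power, half_window=16, guard=2):
    """Divide each sample by the mean of its neighbours (guard cells excluded)."""
    n = len(power)
    out = np.empty(n)
    for i in range(n):
        left = power[max(0, i - guard - half_window): max(0, i - guard)]
        right = power[i + guard + 1: i + guard + 1 + half_window]
        noise = np.concatenate([left, right]).mean()    # local noise estimate
        out[i] = power[i] / noise
    return out

def detect(power, threshold=12.0):
    """Return indices where the normalized statistic exceeds the threshold."""
    return np.flatnonzero(normalize(power) > threshold)

# Simulated record: noise whose level drifts by a factor of ten, plus one echo.
rng = np.random.default_rng(0)
level = np.linspace(1.0, 10.0, 500)
power = level * rng.exponential(size=500)               # noise-only detection statistic
power[250] += 40.0 * level[250]                         # hypothetical target echo
hits = detect(power)
print("detections at indices:", hits)
```

Because the threshold is applied after normalization, the same value can be used across the whole record even though the raw noise level changes.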

See also

- Filter

- Echo sounding

- Passive Radar

- Radar

- Scientific Echosounder

- Digital signal processing

References

- Mazur, Martin. Sonar Signal Processing. Penn State Applied Research Laboratory. p. 14. http://www.personal.psu.edu/faculty/m/x/mxm14/sonar/Mazur-sonar_signal_processing_combined.pdf.

- Knight, William C. Digital Signal Processing for Sonar. Proceedings of the IEEE, Vol. 69, No. 11, Nov. 1981.

- Peyvandi, Hossein. Sonar Systems and Underwater Signal Processing: Classic and Modern Approaches. Scientific Applied College of Telecommunication, Tehran.
