Symmetric difference

From HandWiki - Reading time: 9 min

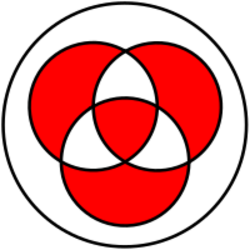

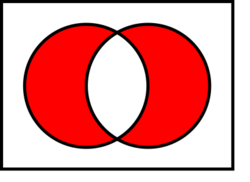

Venn diagram of [math]\displaystyle{ A \vartriangle B }[/math]. The symmetric difference is the union without the intersection: [math]\displaystyle{ (A \cup B) \setminus (A \cap B) }[/math].

| Type | Set operation |
|---|---|
| Field | Set theory |
| Statement | The symmetric difference is the set of elements that are in either set, but not in the intersection. |

In mathematics, the symmetric difference of two sets, also known as the disjunctive union and set sum, is the set of elements which are in either of the sets, but not in their intersection. For example, the symmetric difference of the sets [math]\displaystyle{ \{1,2,3\} }[/math] and [math]\displaystyle{ \{3,4\} }[/math] is [math]\displaystyle{ \{1,2,4\} }[/math].
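The example above can be reproduced as a small sketch in Python, whose built-in `set` type provides symmetric difference both as the `^` operator and as the `symmetric_difference` method. The variable names are illustrative only.

```python
# The two sets from the example above.
a = {1, 2, 3}
b = {3, 4}

sym_diff_operator = a ^ b                    # operator form
sym_diff_method = a.symmetric_difference(b)  # equivalent method form

# The shared element 3 is dropped; everything else is kept.
print(sorted(sym_diff_operator))  # [1, 2, 4]
```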

The symmetric difference of the sets A and B is commonly denoted by [math]\displaystyle{ A \operatorname\vartriangle B }[/math] (traditionally, [math]\displaystyle{ A\ \Delta\ B }[/math]), [math]\displaystyle{ A \oplus B }[/math], or [math]\displaystyle{ A \ominus B }[/math]. It can be viewed as a form of addition modulo 2.

The power set of any set becomes an abelian group under the operation of symmetric difference, with the empty set as the neutral element of the group and every element in this group being its own inverse. The power set of any set becomes a Boolean ring, with symmetric difference as the addition of the ring and intersection as the multiplication of the ring.

Properties

The symmetric difference is equivalent to the union of both relative complements, that is:[1]

- [math]\displaystyle{ A\, \vartriangle\,B = \left(A \setminus B\right) \cup \left(B \setminus A\right), }[/math]

The symmetric difference can also be expressed using the XOR operation ⊕ on the predicates describing the two sets in set-builder notation:

- [math]\displaystyle{ A\mathbin{ \vartriangle}B = \{x : (x \in A) \oplus (x \in B)\}. }[/math]

The same fact can be stated as the indicator function (denoted here by [math]\displaystyle{ \chi }[/math]) of the symmetric difference, being the XOR (or addition mod 2) of the indicator functions of its two arguments: [math]\displaystyle{ \chi_{(A\, \vartriangle\,B)} = \chi_A \oplus \chi_B }[/math] or using the Iverson bracket notation [math]\displaystyle{ [x \in A\, \vartriangle\,B] = [x \in A] \oplus [x \in B] }[/math].
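As a sketch of the indicator-function view, the following Python snippet encodes two sets as 0/1 vectors over a small, arbitrarily chosen universe and combines them componentwise. The helper `indicator` and the universe are assumptions made for illustration.

```python
def indicator(subset, universe):
    """Return the indicator vector of `subset` as a list of 0/1 values."""
    return [1 if x in subset else 0 for x in universe]

universe = [1, 2, 3, 4, 5]  # hypothetical ordered universe
a = {1, 2, 3}
b = {3, 4}

chi_a = indicator(a, universe)  # [1, 1, 1, 0, 0]
chi_b = indicator(b, universe)  # [0, 0, 1, 1, 0]

# XOR the indicator vectors componentwise; this equals addition mod 2.
chi_xor = [p ^ q for p, q in zip(chi_a, chi_b)]
chi_mod2 = [(p + q) % 2 for p, q in zip(chi_a, chi_b)]

# Both agree with the indicator vector of the symmetric difference.
assert chi_xor == chi_mod2 == indicator(a ^ b, universe)
print(chi_xor)  # [1, 1, 0, 1, 0]
```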

The symmetric difference can also be expressed as the union of the two sets, minus their intersection:

- [math]\displaystyle{ A\, \vartriangle\,B = (A \cup B) \setminus (A \cap B), }[/math][1]

In particular, [math]\displaystyle{ A \mathbin{ \vartriangle} B\subseteq A\cup B }[/math]; the equality in this non-strict inclusion occurs if and only if [math]\displaystyle{ A }[/math] and [math]\displaystyle{ B }[/math] are disjoint sets. Furthermore, denoting [math]\displaystyle{ D = A \mathbin{ \vartriangle} B }[/math] and [math]\displaystyle{ I = A \cap B }[/math], then [math]\displaystyle{ D }[/math] and [math]\displaystyle{ I }[/math] are always disjoint, so [math]\displaystyle{ D }[/math] and [math]\displaystyle{ I }[/math] partition [math]\displaystyle{ A \cup B }[/math]. Consequently, assuming intersection and symmetric difference as primitive operations, the union of two sets can be well defined in terms of symmetric difference by the right-hand side of the equality

- [math]\displaystyle{ A\,\cup\,B = (A\, \vartriangle\,B)\, \vartriangle\,(A \cap B) }[/math].
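The identities above can be spot-checked on concrete sets. The following Python sketch uses arbitrarily chosen example sets; it illustrates the statements and does not prove them.

```python
a = {1, 2, 3}
b = {3, 4}

# Union of the relative complements equals union minus intersection.
relative_complements = (a - b) | (b - a)
union_minus_intersection = (a | b) - (a & b)
assert relative_complements == union_minus_intersection == a ^ b

# D = A Δ B and I = A ∩ B are disjoint and together cover A ∪ B.
d, i = a ^ b, a & b
assert d.isdisjoint(i) and d | i == a | b

# The union recovered from symmetric difference and intersection alone.
union_from_sym_diff = (a ^ b) ^ (a & b)
assert union_from_sym_diff == a | b

# A Δ B equals A ∪ B exactly when the sets are disjoint.
c = {7, 8}
assert a.isdisjoint(c) and a ^ c == a | c
assert not a.isdisjoint(b) and a ^ b != a | b
```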

The symmetric difference is commutative and associative:

- [math]\displaystyle{ \begin{align} A\, \vartriangle\,B &= B\, \vartriangle\,A, \\ (A\, \vartriangle\,B)\, \vartriangle\,C &= A\, \vartriangle\,(B\, \vartriangle\,C). \end{align} }[/math]

The empty set is neutral, and every set is its own inverse:

- [math]\displaystyle{ \begin{align} A\, \vartriangle\,\varnothing &= A, \\ A\, \vartriangle\,A &= \varnothing. \end{align} }[/math]

Thus, the power set of any set X becomes an abelian group under the symmetric difference operation. (More generally, any field of sets forms a group with the symmetric difference as operation.) A group in which every element is its own inverse (or, equivalently, in which every non-identity element has order 2) is sometimes called a Boolean group;[2][3] the symmetric difference provides a prototypical example of such groups. Sometimes the Boolean group is actually defined as the symmetric difference operation on a set.[4] In the case where X has only two elements, the group thus obtained is the Klein four-group.
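For a small set, the group axioms can be verified by brute force. The sketch below enumerates the power set of a hypothetical two-element set (the helper `power_set` is an assumption for illustration) and checks closure, identity, self-inverses, commutativity and associativity, which is the structure of the Klein four-group.

```python
from itertools import combinations

def power_set(xs):
    """Return all subsets of xs as frozensets (hashable and comparable)."""
    items = sorted(xs)
    return [frozenset(c) for r in range(len(items) + 1) for c in combinations(items, r)]

subsets = power_set({"p", "q"})  # hypothetical two-element set X
empty = frozenset()

closed = all((s ^ t) in subsets for s in subsets for t in subsets)
has_identity = all(s ^ empty == s for s in subsets)
self_inverse = all(s ^ s == empty for s in subsets)
commutative = all(s ^ t == t ^ s for s in subsets for t in subsets)
associative = all(
    (s ^ t) ^ u == s ^ (t ^ u)
    for s in subsets for t in subsets for u in subsets
)

print(len(subsets), closed, has_identity, self_inverse, commutative, associative)
# 4 True True True True True
```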

Equivalently, a Boolean group is an elementary abelian 2-group. Consequently, the group induced by the symmetric difference is in fact a vector space over the field with 2 elements, [math]\displaystyle{ \mathbb{Z}_2 }[/math]. If X is finite, then the singletons form a basis of this vector space, and its dimension is therefore equal to the number of elements of X. This construction is used in graph theory, to define the cycle space of a graph.
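The basis claim can be illustrated for a small finite X. In the sketch below, a linear combination over the two-element field is simply the symmetric difference of the basis vectors whose coefficient is 1; the set `x` and the helper `combine` are assumptions made for illustration.

```python
from functools import reduce
from itertools import product

x = {"a", "b", "c"}                          # hypothetical finite set X
basis = [frozenset({e}) for e in sorted(x)]  # the singletons

def combine(coeffs):
    """Linear combination over the 2-element field: XOR the chosen basis vectors."""
    chosen = [v for c, v in zip(coeffs, basis) if c == 1]
    return reduce(lambda s, t: s ^ t, chosen, frozenset())

# Every 0/1 coefficient vector gives a different subset, and all subsets arise,
# so the singletons are linearly independent and span the power set.
spanned = {combine(coeffs) for coeffs in product([0, 1], repeat=len(basis))}
print(len(spanned))  # 8
```

Since the 2^3 coefficient vectors produce 2^3 distinct subsets, the dimension in this example is 3, the number of elements of `x`.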

Because every element of a Boolean group is its own inverse, the symmetric difference of two repeated symmetric differences equals the repeated symmetric difference of all the sets involved, where any set that appears twice cancels and can be removed. In particular:

- [math]\displaystyle{ (A\, \vartriangle\,B)\, \vartriangle\,(B\, \vartriangle\,C) = A\, \vartriangle\,C. }[/math]

This implies the triangle inequality:[5] the symmetric difference of A and C is contained in the union of the symmetric difference of A and B and that of B and C.
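Both the cancellation identity and the resulting triangle inequality can be spot-checked numerically. The following Python sketch uses one fixed example and then randomly generated subsets of a small universe; the seed and the helper `random_subset` are arbitrary choices for illustration.

```python
import random

a, b, c = {1, 2, 3}, {3, 4}, {4, 5, 6}
assert (a ^ b) ^ (b ^ c) == a ^ c  # the repeated B cancels

rng = random.Random(0)  # fixed seed so the run is reproducible

def random_subset():
    """Pick a random subset of {0, ..., 7}."""
    return {x for x in range(8) if rng.random() < 0.5}

trials = 0
for _ in range(1000):
    p, q, r = random_subset(), random_subset(), random_subset()
    assert (p ^ q) ^ (q ^ r) == p ^ r                # cancellation
    assert (p ^ r) <= (p ^ q) | (q ^ r)              # containment form
    assert len(p ^ r) <= len(p ^ q) + len(q ^ r)     # counting form
    trials += 1
```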

Intersection distributes over symmetric difference:

- [math]\displaystyle{ A \cap (B\, \vartriangle\,C) = (A \cap B)\, \vartriangle\,(A \cap C), }[/math]

and this shows that the power set of X becomes a ring, with symmetric difference as addition and intersection as multiplication. This is the prototypical example of a Boolean ring.
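One way to check the ring properties exhaustively is to encode subsets of a small set as integer bitmasks, so that symmetric difference becomes bitwise XOR and intersection becomes bitwise AND. This encoding, and the choice of a 3-element underlying set, are assumptions of the sketch below.

```python
n = 3                     # hypothetical size of the underlying set X
elements = range(1 << n)  # each integer's bits encode one subset of X
full = (1 << n) - 1       # bitmask of X itself, the multiplicative identity

# Intersection (AND) distributes over symmetric difference (XOR).
distributive = all(
    a & (b ^ c) == (a & b) ^ (a & c)
    for a in elements for b in elements for c in elements
)
idempotent = all(a & a == a for a in elements)          # x * x = x
characteristic_two = all(a ^ a == 0 for a in elements)  # x + x = 0
has_one = all(a & full == a for a in elements)          # X acts as 1

print(distributive, idempotent, characteristic_two, has_one)
# True True True True
```

Idempotent multiplication is the defining property of a Boolean ring.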

Further properties of the symmetric difference include:

- [math]\displaystyle{ A \mathbin{ \vartriangle} B = \emptyset }[/math] if and only if [math]\displaystyle{ A = B }[/math].

- [math]\displaystyle{ A \mathbin{ \vartriangle} B = A^c \mathbin{ \vartriangle} B^c }[/math], where [math]\displaystyle{ A^c }[/math] and [math]\displaystyle{ B^c }[/math] are the complements of [math]\displaystyle{ A }[/math] and [math]\displaystyle{ B }[/math], respectively, relative to any (fixed) set that contains both.

- [math]\displaystyle{ \left(\bigcup_{\alpha\in\mathcal{I}}A_\alpha\right) \vartriangle\left(\bigcup_{\alpha\in\mathcal{I}}B_\alpha\right)\subseteq\bigcup_{\alpha\in\mathcal{I}}\left(A_\alpha \mathbin{ \vartriangle} B_\alpha\right) }[/math], where [math]\displaystyle{ \mathcal{I} }[/math] is an arbitrary non-empty index set.

- If [math]\displaystyle{ f : S \rightarrow T }[/math] is any function and [math]\displaystyle{ A, B \subseteq T }[/math] are any sets in [math]\displaystyle{ f }[/math]'s codomain, then [math]\displaystyle{ f^{-1}\left(A \mathbin{ \vartriangle} B\right) = f^{-1}\left(A\right) \mathbin{ \vartriangle} f^{-1}\left(B\right). }[/math]
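The properties in this list can be illustrated on small examples. In the following Python sketch the universe, the map `f` and the helper `preimage` are hypothetical choices; the third check also shows that the inclusion for unions can be strict.

```python
a, b = {1, 2, 3}, {3, 4}

# A Δ B is empty exactly when A = B.
assert a ^ a == set() and a ^ b != set()

# Complements relative to a fixed superset have the same symmetric difference.
universe = set(range(10))
assert (universe - a) ^ (universe - b) == a ^ b

# Unions: the left-hand side can be strictly smaller than the right-hand side.
a1, a2 = {1}, {2}
b1, b2 = {2}, {1}
lhs = (a1 | a2) ^ (b1 | b2)  # empty, since both unions are {1, 2}
rhs = (a1 ^ b1) | (a2 ^ b2)  # {1, 2}
assert lhs <= rhs and lhs != rhs

def f(x):
    """Hypothetical map from S = {0, ..., 9} to T = {0, ..., 4}."""
    return x % 5

def preimage(func, domain, target):
    """Elements of `domain` that `func` sends into `target`."""
    return {x for x in domain if func(x) in target}

s = set(range(10))
pre_of_sym_diff = preimage(f, s, a ^ b)
sym_diff_of_pre = preimage(f, s, a) ^ preimage(f, s, b)
assert pre_of_sym_diff == sym_diff_of_pre
```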

The symmetric difference can be defined in any Boolean algebra, by writing

- [math]\displaystyle{ x\, \vartriangle\,y = (x \lor y) \land \lnot(x \land y) = (x \land \lnot y) \lor (y \land \lnot x) = x \oplus y. }[/math]

This operation has the same properties as the symmetric difference of sets.
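In the two-element Boolean algebra, the three expressions above can be compared over the full truth table. The sketch below uses Python's `bool` values, for which `^` is exclusive or; the names are illustrative.

```python
from itertools import product

table = []
for x, y in product([False, True], repeat=2):
    join_minus_meet = (x or y) and not (x and y)
    join_of_differences = (x and not y) or (y and not x)
    # All three forms agree for every combination of truth values.
    assert join_minus_meet == join_of_differences == (x ^ y)
    table.append((x, y, x ^ y))

for row in table:
    print(row)
```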

n-ary symmetric difference

Repeated symmetric difference is in a sense equivalent to an operation on a multiset of sets (possibly with multiple appearances of the same set) giving the set of elements which are in an odd number of sets.

The symmetric difference of a collection of sets contains just elements which are in an odd number of the sets in the collection: [math]\displaystyle{ \vartriangle M = \left\{ a \in \bigcup M: \left|\{A \in M:a \in A\}\right| \text{ is odd}\right\}. }[/math]

Evidently, this is well-defined only when each element of the union [math]\displaystyle{ \bigcup M }[/math] is contributed by a finite number of elements of [math]\displaystyle{ M }[/math].
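For a finite family, the two descriptions (folding the binary operation, and counting odd membership) can be compared directly. The family in the Python sketch below is an arbitrary example in which one set appears twice.

```python
from collections import Counter
from functools import reduce
from operator import xor

# A finite family of sets; {3, 4} deliberately appears twice.
family = [{1, 2, 3}, {3, 4}, {1, 4, 5}, {3, 4}]

# Fold the binary symmetric difference over the family.
folded = reduce(xor, family, set())

# Count in how many members each element appears and keep the odd counts.
counts = Counter(x for s in family for x in s)
odd_membership = {x for x, k in counts.items() if k % 2 == 1}

assert folded == odd_membership
print(sorted(folded))  # [2, 3, 4, 5]
```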

Suppose [math]\displaystyle{ M = \left\{M_1, M_2, \ldots, M_n\right\} }[/math] is a multiset and [math]\displaystyle{ n \ge 2 }[/math]. Then there is a formula for [math]\displaystyle{ | \vartriangle M| }[/math], the number of elements in [math]\displaystyle{ \vartriangle M }[/math], given solely in terms of intersections of elements of [math]\displaystyle{ M }[/math]: [math]\displaystyle{ | \vartriangle M| = \sum_{l=1}^n (-2)^{l-1} \sum_{1 \leq i_1 \lt i_2 \lt \ldots \lt i_l \leq n} \left|M_{i_1} \cap M_{i_2} \cap \ldots \cap M_{i_l}\right|. }[/math]
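The cardinality formula can be evaluated term by term and compared with a direct computation. The function name `sym_diff_size_by_formula` and the example family in this Python sketch are assumptions for illustration.

```python
from functools import reduce
from itertools import combinations
from operator import xor

def sym_diff_size_by_formula(sets):
    """Evaluate the sum over l of (-2)^(l-1) times the sizes of all l-fold intersections."""
    n = len(sets)
    total = 0
    for l in range(1, n + 1):
        inner = sum(
            len(set.intersection(*combo)) for combo in combinations(sets, l)
        )
        total += (-2) ** (l - 1) * inner
    return total

family = [{1, 2, 3}, {3, 4}, {1, 4, 5}]

direct = len(reduce(xor, family, set()))
by_formula = sym_diff_size_by_formula(family)

# l = 1 contributes 8, l = 2 contributes -2 * 3 = -6, l = 3 contributes 0.
assert direct == by_formula
print(by_formula)  # 2
```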

Symmetric difference on measure spaces

As long as there is a notion of "how big" a set is, the symmetric difference between two sets can be considered a measure of how "far apart" they are.

First consider a finite set S and the counting measure on subsets given by their size. Now consider two subsets of S and set their distance apart as the size of their symmetric difference. This distance is in fact a metric, which makes the power set on S a metric space. If S has n elements, then the distance from the empty set to S is n, and this is the maximum distance for any pair of subsets.[6]
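The metric axioms for this distance can be checked exhaustively when S is small. The Python sketch below assumes a 4-element S; `distance` and `power_set` are illustrative helper names.

```python
from itertools import combinations

def distance(a, b):
    """Size of the symmetric difference of two finite sets."""
    return len(a ^ b)

def power_set(xs):
    """Return all subsets of xs as frozensets."""
    items = sorted(xs)
    return [frozenset(c) for r in range(len(items) + 1) for c in combinations(items, r)]

s = frozenset(range(4))  # hypothetical S with n = 4 elements
subsets = power_set(s)

zero_iff_equal = all((distance(a, b) == 0) == (a == b) for a in subsets for b in subsets)
symmetric = all(distance(a, b) == distance(b, a) for a in subsets for b in subsets)
triangle = all(
    distance(a, c) <= distance(a, b) + distance(b, c)
    for a in subsets for b in subsets for c in subsets
)
max_distance = max(distance(a, b) for a in subsets for b in subsets)

print(zero_iff_equal, symmetric, triangle, max_distance, distance(frozenset(), s))
# True True True 4 4
```

On indicator vectors, this distance coincides with the Hamming distance.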

Using the ideas of measure theory, the separation of measurable sets can be defined to be the measure of their symmetric difference. If μ is a σ-finite measure defined on a σ-algebra Σ, the function

- [math]\displaystyle{ d_\mu(X, Y) = \mu(X\, \vartriangle\,Y) }[/math]

is a pseudometric on Σ. [math]\displaystyle{ d_\mu }[/math] becomes a metric if Σ is considered modulo the equivalence relation X ~ Y if and only if [math]\displaystyle{ \mu(X\, \vartriangle\,Y) = 0 }[/math]. It is sometimes called the Fréchet–Nikodym metric. The resulting metric space is separable if and only if [math]\displaystyle{ L^2(\mu) }[/math] is separable.
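The reason this is only a pseudometric in general can be seen with a toy measure that has a null atom. The Python sketch below assumes a hypothetical weighted measure on a three-point space; `weights`, `mu` and `d_mu` are illustrative names.

```python
# Hypothetical measure on {"a", "b", "c"}: each point carries a weight,
# and "c" has weight zero (a null atom).
weights = {"a": 0.5, "b": 1.5, "c": 0.0}

def mu(s):
    """Measure of a subset: the sum of the weights of its points."""
    return sum(weights[p] for p in s)

def d_mu(x, y):
    """Measure of the symmetric difference."""
    return mu(x ^ y)

x = {"a", "b"}
y = {"a", "b", "c"}

# Distinct sets at distance zero: they differ only on a null set,
# so they fall into the same equivalence class.
assert x != y and d_mu(x, y) == 0.0

# Sets that differ on a non-null point are at positive distance.
assert d_mu({"a"}, {"b"}) == 2.0
```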

If [math]\displaystyle{ \mu(X), \mu(Y) \lt \infty }[/math], we have: [math]\displaystyle{ |\mu(X) - \mu(Y)| \leq \mu(X\, \vartriangle\,Y) }[/math]. Indeed,

- [math]\displaystyle{ \begin{align} |\mu(X) - \mu(Y)| &= \left|\left(\mu\left(X \setminus Y\right) + \mu\left(X \cap Y\right)\right) - \left(\mu\left(X \cap Y\right) + \mu\left(Y \setminus X\right)\right)\right| \\ &= \left|\mu\left(X \setminus Y\right) - \mu\left(Y \setminus X\right)\right| \\ &\leq \left|\mu\left(X \setminus Y\right)\right| + \left|\mu\left(Y \setminus X\right)\right| \\ &= \mu\left(X \setminus Y\right) + \mu\left(Y \setminus X\right) \\ &= \mu\left(\left(X \setminus Y\right) \cup \left(Y \setminus X\right)\right) \\ &= \mu\left(X\, \vartriangle \, Y\right) \end{align} }[/math]
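The inequality can also be checked exhaustively for a small finite measure. The integer weights in this Python sketch are an arbitrary assumption, chosen to avoid floating-point rounding.

```python
from itertools import combinations

weights = {"a": 2, "b": 3, "c": 5}  # hypothetical finite measure

def mu(s):
    """Measure of a subset: the sum of the weights of its points."""
    return sum(weights[p] for p in s)

points = sorted(weights)
subsets = [set(c) for r in range(len(points) + 1) for c in combinations(points, r)]

inequality_holds = all(
    abs(mu(x) - mu(y)) <= mu(x ^ y) for x in subsets for y in subsets
)

x, y = {"a", "b"}, {"b", "c"}
gap = abs(mu(x) - mu(y))  # |5 - 8| = 3
sym_diff_measure = mu(x ^ y)  # mu({"a", "c"}) = 7

print(inequality_holds, gap, sym_diff_measure)  # True 3 7
```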

If [math]\displaystyle{ S = \left(\Omega, \mathcal{A},\mu\right) }[/math] is a measure space and [math]\displaystyle{ F, G \in \mathcal{A} }[/math] are measurable sets, then their symmetric difference is also measurable: [math]\displaystyle{ F \vartriangle G \in \mathcal{A} }[/math]. One may define an equivalence relation on measurable sets by letting [math]\displaystyle{ F }[/math] and [math]\displaystyle{ G }[/math] be related if [math]\displaystyle{ \mu\left(F \vartriangle G\right) = 0 }[/math]. This relation is denoted [math]\displaystyle{ F = G\left[\mathcal{A}, \mu\right] }[/math].

Given [math]\displaystyle{ \mathcal{D}, \mathcal{E} \subseteq \mathcal{A} }[/math], one writes [math]\displaystyle{ \mathcal{D}\subseteq\mathcal{E}\left[\mathcal{A}, \mu\right] }[/math] if to each [math]\displaystyle{ D\in\mathcal{D} }[/math] there is some [math]\displaystyle{ E \in \mathcal{E} }[/math] such that [math]\displaystyle{ D = E\left[\mathcal{A}, \mu\right] }[/math]. The relation "[math]\displaystyle{ \subseteq\left[\mathcal{A}, \mu\right] }[/math]" is a partial order on the family of subsets of [math]\displaystyle{ \mathcal{A} }[/math].

We write [math]\displaystyle{ \mathcal{D} = \mathcal{E}\left[\mathcal{A}, \mu\right] }[/math] if [math]\displaystyle{ \mathcal{D}\subseteq\mathcal{E}\left[\mathcal{A}, \mu\right] }[/math] and [math]\displaystyle{ \mathcal{E} \subseteq \mathcal{D}\left[\mathcal{A}, \mu\right] }[/math]. The relation "[math]\displaystyle{ = \left[\mathcal{A}, \mu\right] }[/math]" is an equivalence relation between the subsets of [math]\displaystyle{ \mathcal{A} }[/math].

The symmetric closure of [math]\displaystyle{ \mathcal{D} }[/math] is the collection of all [math]\displaystyle{ \mathcal{A} }[/math]-measurable sets that are [math]\displaystyle{ = \left[\mathcal{A}, \mu\right] }[/math] to some [math]\displaystyle{ D \in \mathcal{D} }[/math]. The symmetric closure of [math]\displaystyle{ \mathcal{D} }[/math] contains [math]\displaystyle{ \mathcal{D} }[/math]. If [math]\displaystyle{ \mathcal{D} }[/math] is a sub-[math]\displaystyle{ \sigma }[/math]-algebra of [math]\displaystyle{ \mathcal{A} }[/math], so is the symmetric closure of [math]\displaystyle{ \mathcal{D} }[/math].

[math]\displaystyle{ F = G\left[\mathcal{A}, \mu\right] }[/math] iff [math]\displaystyle{ \left|\mathbf{1}_F - \mathbf{1}_G\right| = 0 }[/math] [math]\displaystyle{ \left[\mathcal{A}, \mu\right] }[/math] almost everywhere.

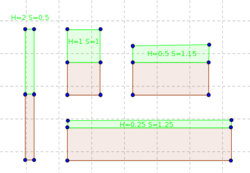

Hausdorff distance vs. symmetric difference

The Hausdorff distance and the (area of the) symmetric difference are both pseudo-metrics on the set of measurable geometric shapes. However, they behave quite differently. The figure at the right shows two sequences of shapes, "Red" and "Red ∪ Green". When the Hausdorff distance between them becomes smaller, the area of the symmetric difference between them becomes larger, and vice versa. By continuing these sequences in both directions, it is possible to get two sequences such that the Hausdorff distance between them converges to 0 and the area of the symmetric difference between them diverges, or vice versa.
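The contrast can be reproduced in a simplified discrete setting. The Python sketch below does not model the shapes in the figure; it assumes finite sets of integer points on a line, with the number of points standing in for area, and the helper `hausdorff` is written for this illustration only.

```python
def hausdorff(a, b):
    """Hausdorff distance between two finite non-empty sets of numbers."""
    def directed(p, q):
        # Largest distance from a point of p to its nearest point in q.
        return max(min(abs(x - y) for y in q) for x in p)
    return max(directed(a, b), directed(b, a))

segment = set(range(100))        # the points 0, 1, ..., 99
sparse = set(range(0, 100, 10))  # every 10th point of the segment
outlier = segment | {1000}       # the segment plus one far-away point

# Small Hausdorff distance, large symmetric difference.
print(hausdorff(segment, sparse), len(segment ^ sparse))    # 9 90

# Large Hausdorff distance, small symmetric difference.
print(hausdorff(segment, outlier), len(segment ^ outlier))  # 901 1
```

Thinning the sparse set less aggressively, or moving the outlier further away, pushes the two quantities in opposite directions.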

See also

- Algebra of sets

- Boolean function

- Complement (set theory)

- Difference (set theory)

- Exclusive or

- Fuzzy set

- Intersection (set theory)

- Jaccard index

- List of set identities and relations

- Logical graph

- Separable sigma algebras

- Set theory

- Symmetry

- Union (set theory)

- Inclusion–exclusion principle

References

- [1] Taylor, Courtney (March 31, 2019). "What Is Symmetric Difference in Math?". https://www.thoughtco.com/what-is-the-symmetric-difference-3126594.

- [2] Givant, Steven; Halmos, Paul (2009). Introduction to Boolean Algebras. Springer Science & Business Media. p. 6. ISBN 978-0-387-40293-2.

- [3] Humberstone, Lloyd (2011). The Connectives. MIT Press. p. 782. ISBN 978-0-262-01654-4. https://archive.org/details/connectives00humb.

- [4] Rotman, Joseph J. (2010). Advanced Modern Algebra. American Mathematical Soc. p. 19. ISBN 978-0-8218-4741-1.

- [5] Rudin, Walter (January 1, 1976). Principles of Mathematical Analysis (3rd ed.). McGraw-Hill Education. p. 306. ISBN 978-0070542358. https://archive.org/details/principlesofmath00rudi.

- [6] Flament, Claude (1963). Applications of Graph Theory to Group Structure. Prentice-Hall. p. 16. MR0157785.

Bibliography

- Halmos, Paul R. (1960). Naive set theory. The University Series in Undergraduate Mathematics. van Nostrand Company.

- Symmetric difference of sets. In Encyclopaedia of Mathematics
