Tempest (codename)

From HandWiki - Reading time: 15 min

TEMPEST is a U.S. National Security Agency specification and a NATO certification[1][2] referring to spying on information systems through leaking emanations, including unintentional radio or electrical signals, sounds, and vibrations.[3][4] TEMPEST covers both methods to spy upon others and how to shield equipment against such spying. The protection efforts are also known as emission security (EMSEC), which is a subset of communications security (COMSEC).[5]

The NSA methods for spying on computer emissions are classified, but some of the protection standards have been released by either the NSA or the Department of Defense.[6] Protecting equipment from spying is done with distance, shielding, filtering, and masking.[7] The TEMPEST standards mandate elements such as equipment distance from walls, amount of shielding in buildings and equipment, and distance separating wires carrying classified vs. unclassified materials,[6] filters on cables, and even distance and shielding between wires or equipment and building pipes. Noise can also protect information by masking the actual data.[7]

While much of TEMPEST is about leaking electromagnetic emanations, it also encompasses sounds and mechanical vibrations.[6] For example, it is possible to log a user's keystrokes using the motion sensor inside smartphones.[8] Compromising emissions are defined as unintentional intelligence-bearing signals which, if intercepted and analyzed (side-channel attack), may disclose the information transmitted, received, handled, or otherwise processed by any information-processing equipment.[9]

History

During World War II, Bell Telephone supplied the U.S. military with the 131-B2 mixer device that encrypted teleprinter signals by XOR’ing them with key material from one-time tapes (the SIGTOT system) or, earlier, a rotor-based key generator called SIGCUM. It used electromechanical relays in its operation. Later, Bell informed the Signal Corps that they were able to detect electromagnetic spikes at a distance from the mixer and recover the plain text. Meeting skepticism over whether the phenomenon they discovered in the laboratory could really be dangerous, they demonstrated their ability to recover plain text from a Signal Corps’ crypto center on Varick Street in Lower Manhattan. Now alarmed, the Signal Corps asked Bell to investigate further. Bell identified three problem areas: radiated signals, signals conducted on wires extending from the facility, and magnetic fields. As possible solutions, they suggested shielding, filtering and masking.
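The mixing itself is simple modulo-2 addition. Below is a minimal sketch of the one-time-tape principle. It uses hypothetical 5-bit values in place of real teleprinter codes. XORing with the same key tape a second time restores the plain text. The cipher is secure only if the tape is truly random and never reused. The weakness Bell found lay in the emanations of the relay hardware, not in the arithmetic.

```python
import secrets


def vernam(symbols: list[int], key_tape: list[int]) -> list[int]:
    """XOR each 5-bit teleprinter symbol with the matching key-tape symbol."""
    if len(key_tape) < len(symbols):
        raise ValueError("one-time key tape is shorter than the message")
    return [(s ^ k) & 0b11111 for s, k in zip(symbols, key_tape)]


# Hypothetical 5-bit codes standing in for teleprinter characters.
plain = [0b00011, 0b11001, 0b00001, 0b10000]
tape = [secrets.randbelow(32) for _ in plain]  # fresh random key, used only once

cipher = vernam(plain, tape)
recovered = vernam(cipher, tape)  # XOR with the same key undoes the encryption
print(recovered == plain)  # True
```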

Bell developed a modified mixer, the 131-A1 with shielding and filtering, but it proved difficult to maintain and too expensive to deploy. Instead, relevant commanders were warned of the problem and advised to control a 100-foot (30 m)-diameter zone around their communications center to prevent covert interception, and things were left at that. Then in 1951, the CIA rediscovered the problem with the 131-B2 mixer and found they could recover plain text off the line carrying the encrypted signal from a quarter mile away. Filters for signal and power lines were developed, and the recommended control-perimeter radius was extended to 200 feet (61 m), based more on what commanders could be expected to accomplish than any technical criteria.

A long process of evaluating systems and developing possible solutions followed. Other compromising effects were discovered, such as fluctuations in the power line as rotors stepped. The question of exploiting the noise of electromechanical encryption systems had been raised in the late 1940s but was re-evaluated now as a possible threat. Acoustical emanations could reveal plain text, but only if the pick-up device was close to the source. Nevertheless, even mediocre microphones would do. Soundproofing the room made the problem worse by removing reflections and providing a cleaner signal to the recorder.

In 1956, the Naval Research Laboratory developed a better mixer that operated at much lower voltages and currents and therefore radiated far less. It was incorporated in newer NSA encryption systems. However, many users needed the higher signal levels to drive teleprinters at greater distances or where multiple teleprinters were connected. The newer encryption devices therefore included the option to switch the signal back up to the higher strength. The NSA began developing techniques and specifications for isolating sensitive communications pathways through filtering, shielding, grounding, and physical separation. Lines that carried sensitive plain text were kept apart from those intended to carry only non-sensitive data, which often extended outside the secure environment. This separation effort became known as the Red/Black Concept. A 1958 joint policy called NAG-1 set radiation standards for equipment and installations based on a 50 ft (15 m) limit of control. It also specified the classification levels of various aspects of the TEMPEST problem. The policy was adopted by Canada and the UK the next year. Six organizations (the Navy, Army, Air Force, NSA, CIA, and the State Department) were to provide the bulk of the effort for its implementation.

Difficulties quickly emerged. Computerization was becoming important to processing intelligence data. Computers and their peripherals had to be evaluated, and many of them proved vulnerable. The Friden Flexowriter, a popular I/O typewriter at the time, proved to be among the strongest emitters; in field tests it was readable at distances of up to 3,200 ft (0.98 km). The U.S. Communications Security Board (USCSB) produced a Flexowriter Policy. It banned the machine's use overseas for classified information and limited its use within the U.S. to the Confidential level, and then only within a 400 ft (120 m) security zone. Users found the policy onerous and impractical. Later, the NSA found similar problems with the introduction of cathode-ray-tube displays (CRTs), which were also powerful radiators.

There was a multiyear process of moving from policy recommendations to more strictly enforced TEMPEST rules. The resulting Directive 5200.19, coordinated with 22 separate agencies, was signed by Secretary of Defense Robert McNamara in December 1964, but still took months to fully implement. The NSA's formal implementation took effect in June 1966.

Meanwhile, the problem of acoustic emanations became more critical with the discovery of some 900 microphones in U.S. installations overseas, most behind the Iron Curtain. The response was to build room-within-a-room enclosures, some transparent, nicknamed "fish bowls". Other units[clarification needed] were fully shielded[clarification needed] to contain electronic emanations, but were unpopular with the personnel who were supposed to work inside; they called the enclosures "meat lockers", and sometimes just left their doors open. Nonetheless, they were installed in critical locations, such as the embassy in Moscow, where two were installed: one for State Department use and one for military attachés. A unit installed at the NSA for its key-generation equipment cost $134,000.

Tempest standards continued to evolve in the 1970s and later, with newer testing methods and more nuanced guidelines that took account of the risks in specific locations and situations.[10]: Vol I, Ch. 10 But then as now, security needs often met with resistance. According to NSA's David G. Boak, "Some of what we still hear today in our own circles, when rigorous technical standards are whittled down in the interest of money and time, are frighteningly reminiscent of the arrogant Third Reich with their Enigma cryptomachine."[10]: ibid., p. 19

Shielding standards

Many specifics of the TEMPEST standards are classified, but some elements are public. Current United States and NATO Tempest standards define three levels of protection requirements:[11]

- NATO SDIP-27 Level A (formerly AMSG 720B) and USA NSTISSAM Level I: "Compromising Emanations Laboratory Test Standard". This is the strictest standard. It applies to devices operated in NATO Zone 0 environments, where an attacker is assumed to have almost immediate access (e.g. a neighbouring room at a distance of 1 metre, or 3 ft).

- NATO SDIP-27 Level B (formerly AMSG 788A) and USA NSTISSAM Level II: "Laboratory Test Standard for Protected Facility Equipment". This is a slightly relaxed standard for devices operated in NATO Zone 1 environments. There, an attacker is assumed unable to get closer than about 20 metres (65 ft), or building materials are assumed to provide attenuation equivalent to the free-space attenuation over that distance.

- NATO SDIP-27 Level C (formerly AMSG 784) and USA NSTISSAM Level III: "Laboratory Test Standard for Tactical Mobile Equipment/Systems". This is an even more relaxed standard for devices operated in NATO Zone 2 environments. There, attackers face the equivalent of 100 metres (330 ft) of free-space attenuation, or equivalent attenuation through building materials.
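The zone distances above can be roughly translated into relative attenuation. This assumes far-field propagation, where field strength falls off as 1/d. It is a simplified sketch, not the classified SDIP-27 or AMSG 799B procedure. Near-field emissions at low frequencies decay faster than 1/d, and real buildings attenuate unevenly.

```python
import math


def spreading_loss_db(d_near_m: float, d_far_m: float) -> float:
    """Extra attenuation (dB) at d_far relative to d_near, assuming far-field 1/d field decay."""
    return 20 * math.log10(d_far_m / d_near_m)


# Zone distances as given in the text; 1 m is taken as the Zone 0 reference.
zones = {"Zone 0": 1.0, "Zone 1": 20.0, "Zone 2": 100.0}
for name, d in zones.items():
    print(f"{name}: {spreading_loss_db(1.0, d):.1f} dB relative to 1 m")
# Zone 0: 0.0 dB relative to 1 m
# Zone 1: 26.0 dB relative to 1 m
# Zone 2: 40.0 dB relative to 1 m
```

Under this assumption, Zone 1 equipment can emit roughly 26 dB more than Zone 0 equipment for the same received field at the attacker's position, and Zone 2 equipment roughly 40 dB more.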

Additional standards include:

- NATO SDIP-29 (formerly AMSG 719G): "Installation of Electrical Equipment for the Processing of Classified Information". This standard defines installation requirements, for example with respect to grounding and cable distances.

- AMSG 799B: "NATO Zoning Procedures". This standard defines an attenuation measurement procedure for classifying individual rooms within a security perimeter into Zone 0, Zone 1, Zone 2, or Zone 3. The zone then determines which shielding test standard is required for equipment that processes secret data in those rooms.

The NSA and Department of Defense have declassified some TEMPEST elements after Freedom of Information Act requests, but the documents black out many key values and descriptions. The declassified version of the TEMPEST test standard is heavily redacted, with emanation limits and test procedures blacked out.[citation needed][12] A redacted version of the introductory Tempest handbook NACSIM 5000 was publicly released in December 2000. Additionally, the current NATO standard SDIP-27 (before 2006 known as AMSG 720B, AMSG 788A, and AMSG 784) is still classified.

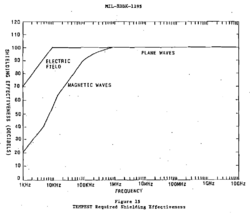

Despite this, some declassified documents give information on the shielding required by TEMPEST standards. For example, Military Handbook 1195 includes a chart showing electromagnetic shielding requirements at different frequencies. A declassified NSA specification for shielded enclosures offers similar shielding values, requiring "a minimum of 100 dB insertion loss from 1 KHz to 10 GHz."[13] Because many of the current requirements are still classified, there is no publicly available correlation between this 100 dB shielding requirement and the newer zone-based shielding standards.
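Shielding figures such as "100 dB insertion loss" are logarithmic. Below is a minimal sketch of converting such a figure into linear ratios. It assumes the usual conventions: 20 log10 for field quantities (voltage, field strength) and 10 log10 for power.

```python
def db_to_ratios(loss_db: float) -> tuple[float, float]:
    """Return (field-amplitude ratio, power ratio) for an attenuation given in dB."""
    return 10 ** (loss_db / 20), 10 ** (loss_db / 10)


amplitude, power = db_to_ratios(100.0)
print(f"{amplitude:.0e} {power:.0e}")  # 1e+05 1e+10
```

A 100 dB enclosure therefore reduces field strength by a factor of about 100,000 and radiated power by a factor of about 10 billion.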

In addition, many separation distance requirements and other elements are provided by the declassified NSA red-black installation guidance, NSTISSAM TEMPEST/2-95.[14]

Certification

The information-security agencies of several NATO countries publish lists of accredited testing labs and of equipment that has passed these tests:

- In Canada: Canadian Industrial TEMPEST Program[15]

- In Germany: BSI German Zoned Products List[16]

- In the UK: UK CESG Directory of Infosec Assured Products, Section 12[17]

- In the U.S.: NSA TEMPEST Certification Program[18]

The United States Army also has a Tempest testing facility, as part of the U.S. Army Electronic Proving Ground, at Fort Huachuca, Arizona. Similar lists and facilities exist in other NATO countries.

Tempest certification must apply to entire systems, not just to individual components. Connecting a single unshielded component (such as a cable or device) to an otherwise secure system could dramatically alter the system's RF characteristics.

RED/BLACK separation

TEMPEST standards require "RED/BLACK separation". This means maintaining distance or installing shielding between two kinds of circuits and equipment. RED circuits and equipment handle unencrypted classified or sensitive plaintext. BLACK circuits and equipment are secured, and include those carrying encrypted signals. TEMPEST-approved equipment must be manufactured under careful quality control to ensure that additional units are built exactly like the units that were tested. Changing even a single wire can invalidate the tests.[citation needed]

Correlated emanations

One aspect of Tempest testing distinguishes it from limits on spurious emissions (e.g., FCC Part 15). It requires minimal correlation between radiated energy or detectable emissions and any plaintext data being processed.

Public research

In 1985, Wim van Eck published the first unclassified technical analysis of the security risks of emanations from computer monitors. This paper caused some consternation in the security community, which had previously believed that such monitoring was a highly sophisticated attack available only to governments; van Eck successfully eavesdropped on a real system, at a range of hundreds of metres, using just $15 worth of equipment plus a television set.

As a consequence of this research, such emanations are sometimes called "van Eck radiation", and the eavesdropping technique "van Eck phreaking". Government researchers, however, were already aware of the danger. Bell Labs noted this vulnerability in secure teleprinter communications during World War II. It was able to recover 75% of the plaintext being processed in a secure facility from a distance of 80 feet (24 metres).[19] In addition, the NSA published Tempest Fundamentals (NSA-82-89, NACSIM 5000, classified) on February 1, 1982. The van Eck technique had also been successfully demonstrated to non-TEMPEST personnel in Korea during the Korean War in the 1950s.[20]

Markus Kuhn has discovered several low-cost techniques for reducing the chances that emanations from computer displays can be monitored remotely.[21] With CRT displays and analog video cables, high-frequency components can be filtered out of fonts before they are rendered on screen. This reduces the energy with which text characters are broadcast.[22][23] With modern flat-panel displays, the high-speed digital serial interface (DVI) cables from the graphics controller are a main source of compromising emanations. Adding random noise to the least significant bits of pixel values may render the emanations from flat-panel displays unintelligible to eavesdroppers, but it is not a secure method. DVI uses a bit-coding scheme that tries to keep the numbers of 0 bits and 1 bits balanced. As a result, two pixel colors that differ greatly in color or intensity may produce very similar signals. Conversely, the emanations can differ drastically even if only the last bit of a pixel's color changes. The signal received by the eavesdropper also depends on the frequency at which the emanations are detected. It can be received on many frequencies at once, and on each frequency it shows different contrast and brightness for a given screen color. Smothering the RED signal with noise is usually not effective. It works only if the noise power is high enough to drive the eavesdropper's receiver into saturation, overwhelming the receiver input.
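The coding effect described above can be seen in a sketch of TMDS (transition-minimized differential signaling), the 8b/10b scheme used on DVI data channels. The encoder below follows the published DVI 1.0 algorithm as we understand it, in two stages. Stage 1 minimizes transitions with an XOR or XNOR chain. Stage 2 balances 0s and 1s using a running disparity. The sketch omits the control-period codes sent during blanking. Counting differing bits is only a crude proxy for what an eavesdropper's receiver sees.

```python
def tmds_encode(d: int, cnt: int) -> tuple[int, int]:
    """Encode one 8-bit value into a 10-bit TMDS symbol; returns (symbol, new running disparity)."""
    bits = [(d >> i) & 1 for i in range(8)]
    n1 = sum(bits)
    use_xnor = n1 > 4 or (n1 == 4 and bits[0] == 0)
    # Stage 1: transition minimization; bit 8 records XOR (1) or XNOR (0).
    qm = [bits[0]]
    for i in range(1, 8):
        x = qm[i - 1] ^ bits[i]
        qm.append(x ^ 1 if use_xnor else x)
    qm.append(0 if use_xnor else 1)
    ones = sum(qm[:8])
    zeros = 8 - ones
    inverted = [b ^ 1 for b in qm[:8]]
    # Stage 2: DC balancing; bit 9 records whether bits 0-7 were inverted.
    if cnt == 0 or ones == zeros:
        out = (qm[:8] if qm[8] else inverted) + [qm[8], qm[8] ^ 1]
        cnt += (ones - zeros) if qm[8] else (zeros - ones)
    elif (cnt > 0 and ones > zeros) or (cnt < 0 and zeros > ones):
        out = inverted + [qm[8], 1]
        cnt += 2 * qm[8] + (zeros - ones)
    else:
        out = qm[:8] + [qm[8], 0]
        cnt += -2 * (qm[8] ^ 1) + (ones - zeros)
    return sum(b << i for i, b in enumerate(out)), cnt


def tmds_decode(sym: int) -> int:
    """Invert tmds_encode: undo the optional inversion, then the XOR/XNOR chain."""
    q = [(sym >> i) & 1 for i in range(10)]
    data = [b ^ q[9] for b in q[:8]]
    d = [data[0]]
    for i in range(1, 8):
        x = data[i] ^ data[i - 1]
        d.append(x if q[8] else x ^ 1)
    return sum(b << i for i, b in enumerate(d))


def hamming(a: int, b: int) -> int:
    return bin(a ^ b).count("1")


black, _ = tmds_encode(0x00, 0)
near_black, _ = tmds_encode(0x01, 0)
white, _ = tmds_encode(0xFF, 0)
print(hamming(black, white))       # 2
print(hamming(black, near_black))  # 8
```

Starting from a balanced line, black (0x00) and white (0xFF) encode to words that differ in only 2 of 10 bits. Black and the barely different 0x01 differ in 8.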

LED indicators on computer equipment can be a source of compromising optical emanations.[24] One such technique involves monitoring the lights on a dial-up modem. Almost all modems flash an LED to show activity, and the flashes are commonly taken directly from the data line. As a result, a fast optical system can easily recover the transmitted data from changes in the LED's flicker.
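If an LED mirrors the serial data line, recovering data from its flicker is ordinary asynchronous serial decoding. This is a minimal sketch with three assumptions. Framing is 8N1: one start bit, eight data bits sent least significant bit first, and one stop bit. The LED is lit for a logical 1 and while the line is idle (an assumed polarity). The optical sensor samples at a known, hypothetical number of samples per bit.

```python
def uart_encode(data: bytes, spb: int) -> list[int]:
    """Simulate the LED level (1 = lit, assumed polarity) for 8N1 frames, spb samples per bit."""
    samples = [1] * spb  # idle line
    for byte in data:
        frame = [0] + [(byte >> i) & 1 for i in range(8)] + [1]  # start, data LSB first, stop
        for bit in frame:
            samples.extend([bit] * spb)
    samples.extend([1] * spb)  # return to idle
    return samples


def uart_decode(samples: list[int], spb: int) -> bytes:
    """Recover bytes by finding each start bit and sampling every bit at its centre."""
    out = bytearray()
    i = 1
    while i < len(samples):
        if samples[i - 1] == 1 and samples[i] == 0:  # falling edge = start of a frame
            mid = i + spb // 2  # centre of the start bit
            if mid + 9 * spb >= len(samples):
                break  # incomplete frame at the end of the capture
            if samples[mid] == 0:  # confirm a genuine start bit, not a glitch
                byte = sum(samples[mid + (k + 1) * spb] << k for k in range(8))
                if samples[mid + 9 * spb] == 1:  # valid stop bit
                    out.append(byte)
                i = mid + 9 * spb  # resume scanning from the stop bit
                continue
        i += 1
    return bytes(out)


capture = uart_encode(b"ATDT", spb=8)
print(uart_decode(capture, spb=8))  # b'ATDT'
```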

Recent research[25] has shown that the radiation corresponding to a keypress event can be detected from wireless (radio) keyboards, from traditional wired keyboards, and even from laptop keyboards. The PS/2 keyboard, for example, contains a microprocessor that radiates some radio-frequency energy when responding to keypresses. From the 1970s onward, the Soviets bugged IBM Selectric typewriters at U.S. embassies. Each keypress moved the typewriter's bails, to which magnets were attached. Implanted magnetometers detected this motion, and hidden electronics converted it into a digital radio-frequency signal. Each eight-character transmission gave the Soviets access to sensitive documents, as they were being typed, at U.S. facilities in Moscow and Leningrad.[26]
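The buffering described in the GUNMAN report (four-bit codes, eight per burst) can be modelled as a small buffer that flushes when full. This is a hypothetical sketch. The mapping from typed characters to four-bit codes is not given here. Packing eight codes into one 32-bit burst, first code in the lowest nibble, is an assumption made for illustration.

```python
class BurstBuffer:
    """Hypothetical model: store 4-bit keystroke codes and transmit them eight at a time."""

    CAPACITY = 8

    def __init__(self) -> None:
        self.pending: list[int] = []
        self.bursts: list[int] = []  # each burst packs eight 4-bit codes into 32 bits (assumed)

    def keystroke(self, code: int) -> None:
        if not 0 <= code < 16:
            raise ValueError("code must fit in four bits")
        self.pending.append(code)
        if len(self.pending) == self.CAPACITY:
            # First code goes in the lowest nibble; this packing order is an assumption.
            self.bursts.append(sum(c << (4 * i) for i, c in enumerate(self.pending)))
            self.pending.clear()


bug = BurstBuffer()
for code in [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]:
    bug.keystroke(code)
print(hex(bug.bursts[0]), bug.pending)  # 0x87654321 [9, 10]
```

Sending short bursts only when the buffer is full keeps the transmitter quiet most of the time, which makes such a device harder to detect than one that transmits every keystroke.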

In 2014, researchers introduced "AirHopper", a bifurcated attack pattern showing the feasibility of data exfiltration from an isolated computer to a nearby mobile phone, using FM frequency signals.[27]

In 2015, researchers introduced "BitWhisper", a covert signaling channel between air-gapped computers that uses thermal manipulations. BitWhisper supports bidirectional communication and requires no additional dedicated peripheral hardware.[28] Later in 2015, researchers introduced GSMem, a method for exfiltrating data from air-gapped computers over cellular frequencies. The transmission is generated by a standard internal bus, which turns the computer into a small cellular transmitter antenna.[29] In February 2018, research was published showing that low-frequency magnetic fields can be used to exfiltrate sensitive data from Faraday-caged, air-gapped computers. The malware, code-named "ODINI", controls the low-frequency magnetic fields emitted from infected computers by regulating the load of the CPU cores.[30]
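Channels such as ODINI turn a controllable physical quantity, here the magnetic field produced by CPU load, into a signal. The sketch below simulates simple on-off keying on such a channel and does not touch real hardware. The signal levels, noise, and samples per bit are hypothetical. The receiver is assumed to be already synchronized. This is not presented as ODINI's actual modulation scheme.

```python
import random


def modulate(bits, samples_per_bit, high, low, noise_sd, rng):
    """Simulated field strength: 'high' while the sender keeps the CPU busy (bit 1), 'low' when idle (bit 0)."""
    trace = []
    for bit in bits:
        level = high if bit else low
        trace.extend(level + rng.gauss(0.0, noise_sd) for _ in range(samples_per_bit))
    return trace


def demodulate(trace, samples_per_bit, high, low):
    """Average each bit window and compare with the midpoint of the two levels (receiver assumed synchronized)."""
    threshold = (high + low) / 2
    bits = []
    for start in range(0, len(trace) - samples_per_bit + 1, samples_per_bit):
        window = trace[start:start + samples_per_bit]
        bits.append(1 if sum(window) / samples_per_bit > threshold else 0)
    return bits


rng = random.Random(7)
message = [rng.randint(0, 1) for _ in range(64)]
trace = modulate(message, samples_per_bit=50, high=1.0, low=0.2, noise_sd=0.3, rng=rng)
print(demodulate(trace, samples_per_bit=50, high=1.0, low=0.2) == message)
```

Averaging many samples per bit trades data rate for reliability. A slow channel like this can still leak a key or password in seconds to minutes.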

In 2018, Eurecom researchers presented a class of side-channel attack called "Screaming Channels" at ACM CCS and Black Hat.[31] This kind of attack targets mixed-signal chips that include a radio transmitter. Such chips contain analog and digital circuits on the same silicon die. In this architecture, often found in connected objects, the digital part of the chip leaks some metadata about its computations into the analog part. The leak then ends up encoded in the noise of the radio transmission. Using signal-processing techniques, the researchers were able to extract the cryptographic keys used during the communication and decrypt its content. The authors suggest that government intelligence agencies may have known of this class of attack for many years.
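One standard way to turn such leakage into keys is correlation power analysis (CPA). For every candidate key byte, CPA predicts the leakage and keeps the candidate whose prediction correlates best with the measurements. The sketch below is a toy under stated assumptions. Each trace is a single simulated sample: the Hamming weight of plaintext XOR key, plus Gaussian noise. It does not model a real AES S-box output or a radio recording, and it is not the Screaming Channels authors' pipeline.

```python
import random


def hamming_weight(x: int) -> int:
    return bin(x).count("1")


def pearson(xs, ys):
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


def simulate_traces(key, n, noise_sd, rng):
    """Toy leakage model: one sample per trace = HW(plaintext XOR key) + Gaussian noise."""
    plaintexts = [rng.randrange(256) for _ in range(n)]
    leaks = [hamming_weight(p ^ key) + rng.gauss(0.0, noise_sd) for p in plaintexts]
    return plaintexts, leaks


def recover_key_byte(plaintexts, leaks):
    """CPA: return the key guess whose predicted leakage has the highest signed correlation."""
    scores = {}
    for guess in range(256):
        predicted = [hamming_weight(p ^ guess) for p in plaintexts]
        scores[guess] = pearson(predicted, leaks)
    return max(scores, key=scores.get)


rng = random.Random(2018)
secret = 0x3C  # hypothetical key byte
plaintexts, leaks = simulate_traces(secret, n=2000, noise_sd=1.0, rng=rng)
print(hex(recover_key_byte(plaintexts, leaks)))
```

The correlation is kept signed because, under this toy model, the bitwise complement of the key predicts exactly the opposite leakage. An unsigned score would make it tie with the true key.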

In popular culture

- In the television series Numb3rs, season 1 episode "Sacrifice", a wire connected to a high gain antenna was used to "read" from a computer monitor.

- In the television series Spooks, season 4 episode "The Sting", a failed attempt to read information from a computer that has no network link is described.

- In the novel Cryptonomicon by Neal Stephenson, characters use Van Eck phreaking to likewise read information from a computer monitor in a neighboring room.

- In the television series Agents of S.H.I.E.L.D., season 1 episode "Ragtag", an office is scanned for digital signatures in the UHF spectrum.

- In the video game Tom Clancy's Splinter Cell, part of the final mission involves spying on a meeting in a Tempest-hardened war room. Throughout the entire Splinter Cell series, a laser microphone is used as well.

- In the video game Rainbow Six: Siege, the operator Mute has experience in TEMPEST specifications. He designed a Signal Disrupter initially to ensure that hidden microphones in sensitive meetings would not transmit, and adapted them for combat, capable of disrupting remotely activated devices like breaching charges.

- In the novel series The Laundry Files by Charles Stross, the character James Angleton (high-ranking officer of an ultra-secret intelligence agency) always uses low tech devices such as a typewriter or a Memex to defend against TEMPEST (despite the building being tempest-shielded).[32]

See also

- Air gap (networking) - air gaps can be breached by TEMPEST-like techniques[33]

- Computer and network surveillance

- Computer security

- MIL-STD-461

References

- ↑ Product Delivery Order Requirements Package Checklist, US Air Force, http://www.netcents.af.mil/shared/media/document/AFD-140107-011.pdf

- ↑ TEMPEST Equipment Selection Process, NATO Information Assurance, 1981, http://www.ia.nato.int/niapc/tempest/certification-scheme, retrieved 2014-09-16

- ↑ "How Old Is TEMPEST?". Cryptome.org. http://cryptome.org/tempest-old.htm.

- ↑ Easter, David (2020-07-26). "The impact of 'Tempest' on Anglo-American communications security and intelligence, 1943–1970". Intelligence and National Security 36: 1–16. doi:10.1080/02684527.2020.1798604. ISSN 0268-4527. https://doi.org/10.1080/02684527.2020.1798604.

- ↑ "Emission Security". US Air Force. 14 April 2009. http://cryptome.org/dodi/2013/afssi-7700.pdf.

- ↑ 6.0 6.1 6.2 Goodman, Cassi (April 18, 2001), An Introduction to TEMPEST, Sans.org, https://www.sans.org/reading-room/whitepapers/privacy/introduction-tempest-981

- ↑ 7.0 7.1 N.S.A., TEMPEST: A Signal Problem, https://www.nsa.gov/public_info/_files/cryptologic_spectrum/tempest.pdf, retrieved 2014-01-28

- ↑ Marquardt et al. 2011, pp. 551–562.

- ↑ "NACSIM 5000 Tempest Fundamentals". Cryptome.org. http://cryptome.org/jya/nacsim-5000/nacsim-5000.htm.

- ↑ A History of U.S. Communications Security; the David G. Boak Lectures, National Security Agency (NSA), Volume I, 1973; Volume II, 1981; partially released 2008, additional portions declassified October 14, 2015

- ↑ "SST: TEMPEST Standards SDIP 27 Level A, Level B & AMSG 784, 720B, 788A". Sst.ws. http://www.sst.ws/tempest_standards.php.

- ↑ Ulaş, Cihan, Serhat Şahin, Emir Memişoğlu, Ulaş Aşık, Cantürk Karadeniz, Bilal Kılıç, and Uğur Saraç. "Development of an automatic TEMPEST test and analysis system". Scientific Cooperations International Workshops on Electrical and Computer Engineering Subfields.

- ↑ Specification nsa no. 94-106 national security agency specification for shielded enclosures, Cryptome.info, http://cryptome.info/0001/nsa-94-106.htm, retrieved 2014-01-28

- ↑ N.S.A., NSTISSAM TEMPEST/2-95 RED/BLACK INSTALLATION, Cryptome.org, http://cryptome.org/tempest-2-95.htm, retrieved 2014-01-28

- ↑ "Canadian Industrial TEMPEST Program". CSE (Communications Security Establishment). https://www.cse-cst.gc.ca/en/publication/list/EMSEC-and-TEMPEST.

- ↑ "German Zoned Product List". BSI (German Federal Office for Information Security). https://www.bsi.bund.de/DE/Themen/Oeffentliche-Verwaltung/Zulassung/BSI-TL-03305/AbstrahlgepHardwZone/zone_node.html.

- ↑ "The Directory of Infosec Assured Products". CESG. October 2010. http://www.cesg.gov.uk/publications/media/directory.pdf.

- ↑ "TEMPEST Certification Program - NSA/CSS". iad.gov. https://www.iad.gov/iad/programs/iad-initiatives/tempest.cfm.

- ↑ "A History of U.S. Communications Security (Volumes I and II); David G. Boak Lectures". National Security Agency. 1973. p. 90. https://www.governmentattic.org/2docs/Hist_US_COMSEC_Boak_NSA_1973.pdf.

- ↑ "1. Der Problembereich der Rechtsinformatik", Rechtsinformatik (Berlin, Boston: De Gruyter), 1977, doi:10.1515/9783110833164-003, ISBN 978-3-11-083316-4, http://dx.doi.org/10.1515/9783110833164-003, retrieved 2020-11-30

- ↑ Kuhn, Markus G. (December 2003). "Compromising emanations: eavesdropping risks of computer displays". Technical Report (Cambridge, United Kingdom: University of Cambridge Computer Laboratory) (577). UCAM-CL-TR-577. ISSN 1476-2986. http://www.cl.cam.ac.uk/techreports/UCAM-CL-TR-577.pdf. Retrieved 2010-10-29.

- ↑ Kubiak 2018, pp. 582–592: Very often the devices do not have enough space on the inside to install new elements such as filters, electromagnetic shielding, and others. [...] a new solution [...] does not change the construction of the devices (e.g., printers, screens). [...] based on computer fonts called TEMPEST fonts (safe fonts). In contrast to traditional fonts (e.g., Arial or Times New Roman), the new fonts are devoid of distinctive features. Without these features the characters of the new fonts are similar each other.

- ↑ Kubiak 2019: Computer fonts can be one of solutions supporting a protection of information against electromagnetic penetration. This solution is called „Soft TEMPEST”. However, not every font has features which counteract the process of electromagnetic infiltration. The distinctive features of characters of font determine it. This article presents two sets of new computer fonts. These fonts are fully usable in everyday work. Simultaneously they make it impossible to obtain information using the non-invasive method.

- ↑ J. Loughry and D. A. Umphress. Information Leakage from Optical Emanations (.pdf file), ACM Transactions on Information and System Security, Vol. 5, No. 3, August 2002, pp. 262-289

- ↑ Vuagnoux, Martin; Pasini, Sylvain. "Compromising radiation emanations of wired keyboards". Lasecwww.epfl.ch. http://lasecwww.epfl.ch/keyboard/.

- ↑ Maneki, Sharon (8 January 2007). "Learning from the Enemy: The GUNMAN project". Center for Cryptologic History, National Security Agency. https://www.nsa.gov/Portals/70/documents/news-features/declassified-documents/cryptologic-histories/Learning_from_the_Enemy.pdf. "All of the implants were quite sophisticated. Each implant had a magnetometer that converted the mechanical energy of key strokes into local magnetic disturbances. The electronics package in the implant responded to these disturbances, categorized the underlying data, and transmitted the results to a nearby listening post. Data were transmitted via radio frequency. The implant was enabled by remote control.[...] the movement of the bails determined which character had been typed because each character had a unique binary movement corresponding to the bails. The magnetic energy picked up by the sensors in the bar was converted into a digital electrical signal. The signals were compressed into a four-bit frequency select word. The bug was able to store up to eight four-bit characters. When the buffer was full, a transmitter in the bar sent the information out to Soviet sensors."

- ↑ Guri et al. 2014, pp. 58–67.

- ↑ Guri et al. 2015, pp. 276–289.

- ↑ Guri et al. 2015.

- ↑ Guri, Zadov & Elovici 2018, pp. 1190–1203.

- ↑ Camurati, Giovanni; Poeplau, Sebastian; Muench, Marius; Hayes, Tom; Francillon, Aurélien (2018). "Screaming Channels: When Electromagnetic Side Channels Meet Radio Transceivers". Proceedings of the 25th ACM Conference on Computer and Communications Security (CCS) CCS '18: 163–177. http://www.s3.eurecom.fr/docs/ccs18_camurati_preprint.pdf.

- ↑ Stross 2010, p. 98.

- ↑ Cimpanu, Catalin. "Academics turn RAM into Wi-Fi cards to steal data from air-gapped systems". https://www.zdnet.com/article/academics-turn-ram-into-wifi-cards-to-steal-data-from-air-gapped-systems/.

Sources

- Ciampa, Mark (2017). CompTIA Security+ Guide to Network Security Fundamentals. Cengage Learning. ISBN 978-1-337-51477-4. https://books.google.com/books?id=Ksw2DwAAQBAJ&pg=PA378.

- Guri, Mordechai; Kedma, Gabi; Kachlon, Assaf; Elovici, Yuval (2014). "AirHopper: Bridging the air-gap between isolated networks and mobile phones using radio frequencies". pp. 58–67. doi:10.1109/MALWARE.2014.6999418.

- Guri, Mordechai; Monitz, Matan; Mirski, Yisroel; Elovici, Yuval (2015). "BitWhisper: Covert Signaling Channel between Air-Gapped Computers Using Thermal Manipulations". pp. 276–289. doi:10.1109/CSF.2015.26.

- Guri, Mordechai; Zadov, Boris; Elovici, Yuval (2018). "ODINI: Escaping Sensitive Data From Faraday-Caged, Air-Gapped Computers via Magnetic Fields". IEEE Transactions on Information Forensics and Security 15: 1190–1203. doi:10.1109/TIFS.2019.2938404. ISSN 1556-6013. Bibcode: 2018arXiv180202700G.

- Guri, Mordechai; Kachlon, Assaf; Hasson, Ofer; Kedma, Gabi; Mirsky, Yisroel; Elovici, Yuval (August 2015). "GSMem: Data Exfiltration from Air-Gapped Computers over GSM Frequencies". 24th USENIX Security Symposium (USENIX Security 15): 849–864. Video on YouTube. ISBN 9781939133113. http://blogs.usenix.org/conference/usenixsecurity15/technical-sessions/presentation/guri.

- Kubiak, Ireneusz (2018). "TEMPEST font counteracting a noninvasive acquisition of text data". Turkish Journal of Electrical Engineering & Computer Sciences 26: 582–592. doi:10.3906/elk-1704-263. ISSN 1300-0632.

- Kubiak, Ireneusz (2019). "Font Design—Shape Processing of Text Information Structures in the Process of Non-Invasive Data Acquisition". Computers 8 (4): 70. doi:10.3390/computers8040070. ISSN 2073-431X.

- Marquardt, P.; Verma, A.; Carter, H.; Traynor, P. (2011), "(sp)iPhone", Proceedings of the 18th ACM conference on Computer and communications security - CCS '11, pp. 551–561, doi:10.1145/2046707.2046771, ISBN 9781450309486

- Stross, Charles (2010). The Atrocity Archives: Book 1 in The Laundry Files. Little, Brown. ISBN 978-0-7481-2413-8. https://books.google.com/books?id=kvkzzmUcHKsC&pg=PT98.

- Boitan, Alexandru; Halunga, Simona; Bindar, Valerica; Fratu, Octavian (2020). "Compromising electromagnetic emanations of USB mass storage devices". Wireless Personal Communications: 1–26.
