Transparent Inter-process Communication

From HandWiki - Reading time: 11 min

Transparent Inter-process Communication (TIPC) is an inter-process communication (IPC) service in Linux designed for cluster-wide operation. It is sometimes presented as Cluster Domain Sockets, in contrast to the well-known Unix Domain Socket service, which works only within a single kernel.

Features

Some features of TIPC:

- Service addressing: address services rather than sockets

- Service tracking: subscribe for binding/unbinding of service addresses to sockets

- Cluster-wide IPC service: service location is transparent to the sender

- Datagram messaging with unicast, anycast and multicast: unreliable delivery

- Connection-oriented messaging: reliable delivery

- Group messaging: datagram messaging with reliable delivery

- Cluster topology tracking: subscribe for added/lost cluster nodes

- Connectivity tracking: subscribe for up/down of individual links between nodes

- Automatic discovery of new cluster nodes

- Scales up to 1000 nodes with second-speed failure discovery

- Very good performance

- Implemented as an in-tree kernel module at kernel.org

Implementations

The TIPC protocol is available as a module in the mainstream Linux kernel, and hence in most Linux distributions. The TIPC project also provides open source implementations of the protocol for other operating systems including Wind River's VxWorks and Sun Microsystems' Solaris. TIPC applications are typically written in C (or C++) and utilize sockets of the AF_TIPC address family. Support for Go, D, Perl, Python, and Ruby is also available.

Service Addressing

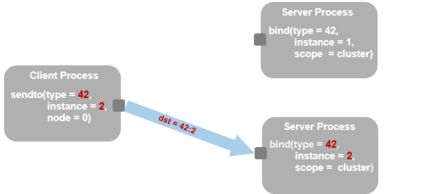

A TIPC application may use three types of addresses.

- Service Address. This address type consists of a 32-bit service type identifier and a 32-bit service instance identifier. The type identifier is typically determined and hard-coded by the application programmer, but its value may have to be coordinated with other applications that might be present in the same cluster. The instance identifier is often calculated by the program, based on application-specific criteria.

- Service Range. This address type represents a range of service addresses of the same type, with instances between a lower and an upper range limit. By binding a socket to this address type one can make it represent many instances, something which has proved useful in many cases.

- Socket Address. This address is a reference to a specific socket in the cluster. It contains a 32-bit port number and a 32-bit node number. The port number is generated by the system when the socket is created, and the node number is either set by configuration or, from Linux 4.17, generated from the corresponding node identity. An address of this type can be used for connecting or for sending messages in the same way as a service address, but it is only valid as long as the referenced socket exists.

A socket can be bound to several different service addresses or ranges, just as different sockets can be bound to the same service address or range. Bindings are also qualified with a visibility scope, i.e., node local or cluster global visibility.
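The relationship between the three address types and the binding rules above can be illustrated with a small model. This is a minimal sketch in plain Python, not the kernel's data structure: the class names `SocketAddress`, `ServiceRange` and `BindingTable`, the string-valued `scope`, and all numeric values are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class SocketAddress:
    """Reference to one socket: a port number plus a node number."""
    port: int
    node: int


@dataclass(frozen=True)
class ServiceRange:
    """Service type plus an inclusive [lower, upper] range of instances."""
    type: int
    lower: int
    upper: int

    def contains(self, instance: int) -> bool:
        return self.lower <= instance <= self.upper


@dataclass
class BindingTable:
    """Toy stand-in for a service binding table (hypothetical model)."""
    bindings: list = field(default_factory=list)

    def bind(self, rng: ServiceRange, sock: SocketAddress, scope: str = "cluster") -> None:
        # A single service address is modelled as a range with lower == upper.
        self.bindings.append((rng, sock, scope))

    def lookup(self, type_: int, instance: int) -> list:
        """Return every socket bound to a range covering (type, instance)."""
        return [sock for rng, sock, _ in self.bindings
                if rng.type == type_ and rng.contains(instance)]


table = BindingTable()
a = SocketAddress(port=101, node=1)
b = SocketAddress(port=202, node=2)
table.bind(ServiceRange(1000, 0, 9), a)                 # one socket, many instances
table.bind(ServiceRange(1000, 5, 5), b)                 # second socket, overlapping address
table.bind(ServiceRange(2000, 1, 1), a, scope="node")   # same socket, another service
```

In this model, looking up service address (1000, 5) yields both sockets, while (1000, 3) yields only the first, mirroring the many-to-many relationship between sockets and service addresses.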

Datagram Messaging

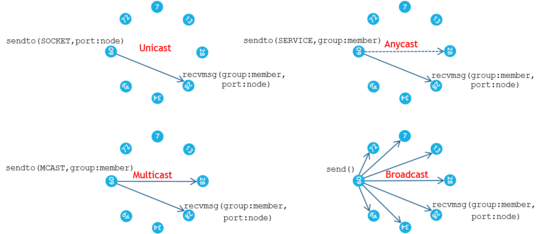

Datagram messages are discrete data units between 1 and 66,000 bytes in length, transmitted between non-connected sockets. Just like their UDP counterparts, TIPC datagrams are not guaranteed to reach their destination, but their chances of being delivered are much better than those of UDP datagrams. Because of the link layer delivery guarantee, the only limiting factor for datagram delivery is the socket receive buffer size. The sender can also increase the chances of success by giving its socket an appropriate delivery importance priority. Datagrams can be transmitted in three different ways.

- Unicast. If a socket address is indicated the message is transmitted to that exact socket. In TIPC the term unicast is reserved to denote this addressing mode.

- Anycast. When a service address is used, there might be several matching destinations, and the transmission method becomes what is often called anycast, i.e., that any of the matching destinations may be selected. The internal function translating from service address to socket address uses a round-robin algorithm to decrease the risk of load bias among the destinations.

- Multicast. The service range address type also doubles as multicast address. When an application specifies a service range as destination address, a copy of the message is sent to all matching sockets in the cluster. Any socket bound to a matching service instance inside the indicated multicast range will receive one copy of the message. TIPC multicast will leverage use of UDP multicast or Ethernet broadcast whenever possible.
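The three transmission modes differ only in how the destination address is resolved to sockets. The following is a simplified sketch of that resolution step, assuming a flat list of bindings; the `Lookup` class, the per-address round-robin cursor and the socket labels are hypothetical and do not reflect the kernel's internal lookup code.

```python
class Lookup:
    """Toy destination resolver for unicast, anycast and multicast."""

    def __init__(self, bindings):
        # bindings: list of (service_type, lower, upper, socket_label)
        self.bindings = bindings
        self._cursor = {}  # assumed: one round-robin cursor per service address

    def _matches(self, type_, lower, upper):
        # A binding matches when its range overlaps the requested range.
        return [sock for (t, lo, hi, sock) in self.bindings
                if t == type_ and lo <= upper and lower <= hi]

    def unicast(self, sock):
        # A socket address names exactly one destination.
        return [sock]

    def anycast(self, type_, instance):
        # Pick one of the matching sockets, rotating on each call.
        candidates = self._matches(type_, instance, instance)
        if not candidates:
            return []
        i = self._cursor.get((type_, instance), 0)
        self._cursor[(type_, instance)] = i + 1
        return [candidates[i % len(candidates)]]

    def multicast(self, type_, lower, upper):
        # Every matching socket gets exactly one copy, even if it matches twice.
        return list(dict.fromkeys(self._matches(type_, lower, upper)))


bindings = [(1000, 0, 4, "A"), (1000, 3, 7, "B"), (1000, 8, 9, "C")]
resolver = Lookup(bindings)
```

With these bindings, repeated anycast sends to (1000, 3) alternate between sockets A and B, while a multicast to range 5 to 6 of type 1000 reaches only B.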

Connection Oriented Messaging

Connections can be established the same way as with TCP, by means of accept() and connect() on SOCK_STREAM sockets. However, in TIPC the client and server use service addresses or ranges instead of port numbers and IP addresses. TIPC also provides two alternatives to this standard setup scenario.

- The sockets can be created as SOCK_SEQPACKET, implying that data exchange must happen in units of messages of at most 66,000 bytes.

- A client can initiate a connection by simply sending a data message to an accepting socket. Likewise, the spawned server socket can respond with a data message back to the client to complete the connection. This way, TIPC provides an implied (also known as 0-RTT) connection setup mechanism that saves time in many cases.

The most distinguishing property of TIPC connections is still their ability to react promptly to loss of contact with the peer socket, without resorting to active neighbor heart-beating.

- When a socket is closed ungracefully, either by the user or because of a process crash, the kernel socket cleanup code will on its own initiative issue a FIN/ERROR message to the peer.

- When contact to a cluster node is lost, the local link layer will issue FIN/ERROR messages to all sockets having connections towards that node. The peer node failure discovery time is configurable down to 50 ms, while the default value is 1,500 ms.

Group Messaging

Group messaging is similar to datagram messaging, as described above, but with end-to-end flow control, and hence with delivery guarantee. There are however a few notable differences.

- Messaging can only be done within a closed group of member sockets.

Transmission Modes within a Communication Group - A socket joins a group by using a service address, where the type field indicates the group identity and the instance field indicates member identity. Hence, a member can only bind to one single service address.

- When sending an anycast message, the lookup algorithm applies the regular round-robin algorithm, but also considers the current load, i.e., the advertised send window, on potential receivers before selecting one.

- Multicast is performed by a service address, not a range, so a copy of the sent message will reach all members which have joined the group with exactly that address.

- There is a group broadcast mode which transmits a message to all group members, without considering their member identity.

- Message sequentiality is guaranteed, even between the transmission modes.

When joining a group, a member may indicate if it wants to receive join or leave events for other members of the group. This feature leverages the service tracking feature, and the group member will receive the events in the member socket proper.

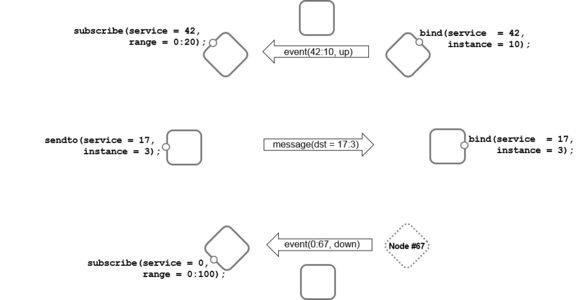
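The load-aware anycast selection described for groups can be sketched as a round-robin scan that skips receivers whose advertised window is too small. This is an illustrative model only: the function name `pick_anycast_member`, the byte-based window values, and the choice to return `None` when no member has room are assumptions, not the kernel's behaviour.

```python
def pick_anycast_member(members, windows, start, msg_size):
    """Select a group anycast receiver.

    members:  member identities in round-robin order
    windows:  dict mapping member identity -> advertised send window (bytes)
    start:    index where the round-robin scan begins
    msg_size: size of the message to send (bytes)

    Returns (chosen_member, next_start). If no member currently has
    enough window, returns (None, start); this fallback is an assumption.
    """
    n = len(members)
    for step in range(n):
        idx = (start + step) % n
        member = members[idx]
        if windows[member] >= msg_size:
            # The next scan starts just after the member chosen now.
            return member, (idx + 1) % n
    return None, start


members = ["m1", "m2", "m3"]
windows = {"m1": 0, "m2": 500, "m3": 500}
chosen, cursor = pick_anycast_member(members, windows, start=0, msg_size=100)
```

Here `m1` is skipped because its advertised window is exhausted, so the message goes to `m2` and the next scan starts at `m3`.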

Service Tracking

An application accesses the tracking service by opening a connection to the TIPC internal topology server, using a reserved service address. It can then send one or more service subscription messages to the tracking service, indicating the service address or range it wants to track. In return, the topology service sends service event messages back to the application whenever matching addresses are bound or unbound by sockets within the cluster. A service event contains the matching service range, plus the port and node number of the bound/unbound socket. There are two special cases of service tracking:

- Cluster topology tracking. When TIPC establishes contact with another node, it internally creates a node-local binding, using a reserved service type, in the service binding table. This makes it possible for applications on the node to keep track of reachable peer nodes at any time.

- Cluster connectivity tracking. When TIPC establishes a new link to another node, it internally creates a node-local binding, using a reserved service type, in the node's binding table. This makes it possible for applications on the node to keep track of all working links to the peer nodes at any time.

Although most service subscriptions are directed towards the node-local topology server, it is possible to establish connections to other nodes' servers and observe their local bindings. This might be useful if, for example, a connectivity subscriber wants to create a matrix of all connectivity across the cluster, not limited to what can be seen from the local node.
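The subscribe-and-notify flow of service tracking can be modelled in a few lines. The sketch below is a simplified stand-in, assuming an in-process callback instead of a socket connection; the `TopologyServer` class, its method names and the event dictionary layout are hypothetical. Unlike this model, a real topology server may also report bindings that already exist when a subscription arrives.

```python
class TopologyServer:
    """Toy model of a topology server delivering service events."""

    def __init__(self):
        self.subscriptions = []  # (type, lower, upper, callback)

    def subscribe(self, type_, lower, upper, callback):
        # Register interest in a service address or range.
        self.subscriptions.append((type_, lower, upper, callback))

    def _notify(self, event, type_, lower, upper, port, node):
        for s_type, s_lower, s_upper, callback in self.subscriptions:
            # Deliver the event when the bound range overlaps the subscription.
            if s_type == type_ and s_lower <= upper and lower <= s_upper:
                callback({"event": event, "type": type_,
                          "lower": lower, "upper": upper,
                          "port": port, "node": node})

    def bound(self, type_, lower, upper, port, node):
        self._notify("published", type_, lower, upper, port, node)

    def unbound(self, type_, lower, upper, port, node):
        self._notify("withdrawn", type_, lower, upper, port, node)


events = []
server = TopologyServer()
server.subscribe(1000, 0, 99, events.append)
server.bound(1000, 5, 5, port=101, node=1)     # matches the subscription
server.bound(2000, 1, 1, port=102, node=1)     # different type: no event
server.unbound(1000, 5, 5, port=101, node=1)   # matches again
```

After these calls, `events` holds one "published" and one "withdrawn" event, each carrying the service range together with the port and node number of the socket involved.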

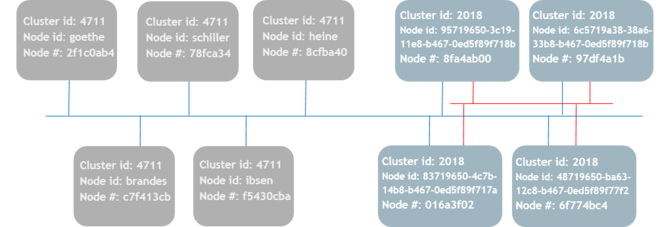

Cluster

A TIPC network consists of individual processing elements or nodes. Nodes can be either physical processors, virtual machines or network namespaces, e.g., in the form of Docker Containers. Those nodes are arranged into a cluster according to their assigned cluster identity. All nodes having the same cluster identity will establish links to each other, provided the network is set up to allow mutual neighbor discovery between them. It is only necessary to change the cluster identity from its default value if nodes in different clusters potentially may discover each other, e.g., if they are attached to the same subnet. Nodes in different clusters cannot communicate with each other using TIPC.

Before Linux 4.17, each node had to be configured with a unique 32-bit node number or address, which had to comply with certain restrictions. As of Linux 4.17, each node has a 128-bit node identity which must be unique within the node's cluster. The node number is then calculated as a guaranteed unique hash from that identity.

If the node will be part of a cluster, the user can either rely on the auto-configuration capability of the node, where the identity is generated when the first interface is attached, or set the identity explicitly, e.g., from the node's host name or a UUID. If a node will not be part of a cluster, its identity can remain at the default value, zero.

Neighbor discovery is performed by UDP multicast or L2 broadcast, when available. If broadcast/multicast support is missing in the infrastructure, discovery can be performed by explicitly configured IP addresses.

Inter Node Links

A cluster consists of nodes interconnected with one or two links. A link constitutes a reliable packet transport service, sometimes referred to as an "L2.5" data link layer.

- It guarantees delivery and sequentiality for all packets.

- It acts as a trunk for inter-node connections, and keeps track of those.

- When all contact to the peer node is lost, sockets with connections to that peer are notified so they can break the connections.

- Each endpoint keeps track of the peer node's address bindings in the local replica of the service binding table.

- When contact to the peer node is lost all bindings from that peer are purged and service tracking events issued to all matching subscribers.

- When there is no regular data packet traffic each link is actively supervised by probing/heartbeats.

- Failure detection tolerance is configurable from 50 ms to 30 seconds; the default setting is 1.5 seconds.

- For performance and redundancy reasons it is possible to establish two links per node pair, on separate network interfaces.

- A link pair can be configured for load sharing or active-standby.

- If a link fails there will be a disturbance-free failover to the remaining link, if any.

Cluster Scalability

Since Linux 4.7, TIPC comes with a unique, patent-pending, auto-adaptive hierarchical neighbor monitoring algorithm. This Overlapping Ring Monitoring algorithm, in reality a combination of ring monitoring and the Gossip protocol, makes it possible to establish full-mesh clusters of up to 1000 nodes with a failure discovery time of 1.5 seconds. In smaller clusters the discovery time can be made much shorter.
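A short calculation shows why monitoring every peer directly becomes costly in a large full mesh. The link counts below follow from simple arithmetic; the comparison figure assumes, purely for illustration, that each node actively probes only about the square root of the cluster size, which is not stated in this article and should not be read as a description of the actual algorithm.

```python
import math


def full_mesh_links(nodes: int) -> int:
    """Total number of node pairs (links) in a full mesh."""
    return nodes * (nodes - 1) // 2


def peers_per_node_full_mesh(nodes: int) -> int:
    """Peers each node must probe if it monitors every neighbor itself."""
    return nodes - 1


def peers_per_node_assumed_ring(nodes: int) -> int:
    """ASSUMPTION for illustration: each node probes about sqrt(N) peers."""
    return math.isqrt(nodes)


cluster = 1000
total_links = full_mesh_links(cluster)             # 499500
direct = peers_per_node_full_mesh(cluster)         # 999
assumed = peers_per_node_assumed_ring(cluster)     # 31
```

Under that assumption, a 1000-node cluster would need each node to probe a few dozen peers rather than 999, with the remaining failure information spread indirectly.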

Performance

TIPC performs well, especially with regard to round-trip latency. Inter-node latency is typically 33% lower than with TCP, while intra-node latency is 2 times lower for small messages and 7 times lower for large messages. Inter-node, TIPC provides a 10-30% lower maximal throughput than TCP, while its intra-node throughput is 25-30% higher. The TIPC team is currently studying how to add GSO/GRO support for intra-node messaging, in order to match TCP even here.

Transport Media

While designed to be able to use all kinds of transport media, as of May 2018 implementations support UDP, Ethernet and Infiniband. The VxWorks implementation also supports shared memory which can be accessed by multiple instances of the operating system, running simultaneously on the same hardware.

Security

Security must currently be provided by the transport media carrying TIPC. When running across UDP, IPsec can be used; on Ethernet, MACsec is the best option. The TIPC team is currently looking into how to support TLS or DTLS, either natively or by an addition to OpenSSL.

History

This protocol was originally developed by Jon Paul Maloy at Ericsson during 1996-2005 and was used by that company in cluster applications for several years, before subsequently being released to the open source community and integrated in the mainstream Linux kernel. It has since then undergone numerous improvements and upgrades, all performed by a dedicated TIPC project team with participants from various companies. The management tool for TIPC is part of the iproute2 tool package which comes as standard with all Linux distributions.
