Software

From HandWiki - Reading time: 16 min

Software is a set of computer programs and associated documentation and data.[1] This is in contrast to hardware, from which the system is built and which actually performs the work.

At the lowest programming level, executable code consists of machine language instructions supported by an individual processor, typically a central processing unit (CPU) or a graphics processing unit (GPU). Machine language consists of groups of binary values signifying processor instructions that change the state of the computer from its preceding state. For example, an instruction may change the value stored in a particular storage location in the computer, an effect that is not directly observable to the user. An instruction may also invoke one of many input or output operations, for example, displaying some text on a computer screen, causing state changes that should be visible to the user. The processor executes the instructions in the order they are provided, unless it is instructed to "jump" to a different instruction or is interrupted by the operating system. As of 2023, most personal computers, smartphone devices, and servers have processors with multiple execution units, or multiple processors performing computation together, so computing has become a much more concurrent activity than in the past.
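The in-order execution and "jump" behaviour described above can be illustrated with a toy machine. The following Python sketch models a hypothetical processor: the opcodes LOAD, ADD, OUT, and JNZ, the register count, and the tuple encoding are all invented for illustration and do not correspond to any real instruction set. Instructions run in order, change internal register state, produce visible output, and can jump to another instruction.

```python
from typing import List, Tuple

# Hypothetical instruction format: (opcode, operand_a, operand_b).
Instruction = Tuple[str, int, int]


def run(program: List[Instruction], num_registers: int = 4) -> Tuple[List[int], List[int]]:
    """Execute a toy program; return (final register values, output log)."""
    regs = [0] * num_registers  # internal state, not directly visible to the user
    output: List[int] = []      # stands in for an output device such as a screen
    pc = 0                      # program counter: index of the next instruction
    while pc < len(program):
        op, a, b = program[pc]
        pc += 1  # by default, continue with the next instruction in order
        if op == "LOAD":        # regs[a] = constant b
            regs[a] = b
        elif op == "ADD":       # regs[a] = regs[a] + regs[b]
            regs[a] += regs[b]
        elif op == "OUT":       # visible effect: append regs[a] to the output
            output.append(regs[a])
        elif op == "JNZ":       # jump to instruction b if regs[a] is not zero
            if regs[a] != 0:
                pc = b
        else:
            raise ValueError(f"unknown opcode {op!r}")
    return regs, output


# Count down from 3, emitting each value before decrementing it.
program: List[Instruction] = [
    ("LOAD", 0, 3),   # 0: r0 = 3 (counter)
    ("LOAD", 1, -1),  # 1: r1 = -1 (decrement)
    ("OUT", 0, 0),    # 2: output r0
    ("ADD", 0, 1),    # 3: r0 = r0 + r1
    ("JNZ", 0, 2),    # 4: if r0 != 0, jump back to instruction 2
]

registers, out = run(program)
print(out)  # [3, 2, 1]
```

The JNZ instruction sends execution back to instruction 2 until the counter reaches zero, after which execution runs past the last instruction and stops. Real processors encode instructions as binary values rather than tuples, and add interrupts and concurrency that this sketch ignores.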

The majority of software is written in high-level programming languages. They are easier and more efficient for programmers because they are closer to natural languages than machine languages.[2] High-level languages are translated into machine language using a compiler, an interpreter, or a combination of the two. Software may also be written in a low-level assembly language that has a strong correspondence to the computer's machine language instructions and is translated into machine language using an assembler.
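A real compiler is far more involved, but the basic idea of translating a high-level expression into a sequence of simpler instructions can be sketched briefly. This minimal Python sketch assumes a hypothetical stack-based target with invented PUSH and BINOP instructions; it uses the standard `ast` module to parse an arithmetic expression, translates it into a flat instruction list, and then runs that list the way a simple interpreter would.

```python
import ast
import operator
from typing import List, Tuple, Union

Number = Union[int, float]
# Hypothetical target instructions: ("PUSH", value) or ("BINOP", symbol).
Instr = Tuple[str, Union[Number, str]]

_BINOPS = {ast.Add: "+", ast.Sub: "-", ast.Mult: "*", ast.Div: "/"}
_APPLY = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}


def compile_expr(source: str) -> List[Instr]:
    """Translate an arithmetic expression into postfix stack instructions."""
    code: List[Instr] = []

    def emit(node: ast.AST) -> None:
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            code.append(("PUSH", node.value))
        elif isinstance(node, ast.BinOp) and type(node.op) in _BINOPS:
            emit(node.left)   # evaluate operands first...
            emit(node.right)
            code.append(("BINOP", _BINOPS[type(node.op)]))  # ...then apply the operator
        else:
            raise SyntaxError(f"unsupported construct: {ast.dump(node)}")

    emit(ast.parse(source, mode="eval").body)
    return code


def execute(code: List[Instr]) -> Number:
    """Run the instructions on a simple operand stack."""
    stack: List[Number] = []
    for op, arg in code:
        if op == "PUSH":
            stack.append(arg)
        else:
            right, left = stack.pop(), stack.pop()
            stack.append(_APPLY[arg](left, right))
    return stack.pop()


program = compile_expr("2 * (3 + 4)")
print(program)           # [('PUSH', 2), ('PUSH', 3), ('PUSH', 4), ('BINOP', '+'), ('BINOP', '*')]
print(execute(program))  # 14
```

Separating `compile_expr` (translation) from `execute` (running the result) mirrors, in miniature, the split between a compiler and the machine that runs its output; an interpreter would typically combine the two steps.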

History

An algorithm for what would have been the first piece of software was written by Ada Lovelace in the 19th century, for the planned Analytical Engine.[3] She created proofs to show how the engine would calculate Bernoulli numbers.[3] Because of the proofs and the algorithm, she is considered the first computer programmer.[4][5]

The first theory about software, prior to the creation of computers as we know them today, was proposed by Alan Turing in his 1936 essay, On Computable Numbers, with an Application to the Entscheidungsproblem (decision problem).[6] This eventually led to the creation of the academic fields of computer science and software engineering; both fields study software and its creation.[citation needed] Computer science is the theoretical study of computers and software (Turing's essay is an example of computer science), whereas software engineering is the application of engineering principles to the development of software.[7]

In 2000, Fred Shapiro, a librarian at the Yale Law School, published a letter revealing that John Wilder Tukey's 1958 paper "The Teaching of Concrete Mathematics"[8][9] contained the earliest known usage of the term "software" found in a search of JSTOR's electronic archives, predating the Oxford English Dictionary's citation by two years.[10] This led many to credit Tukey with coining the term, particularly in obituaries published that same year,[11] although Tukey never claimed credit for any such coinage. In 1995, Paul Niquette claimed he had originally coined the term in October 1953, although he could not find any documents supporting his claim.[12] The earliest known publication of the term "software" in an engineering context was in August 1953 by Richard R. Carhart, in a Rand Corporation Research Memorandum.[13]

Types

On virtually all computer platforms, software can be grouped into a few broad categories.

Purpose, or domain of use

Based on the goal, computer software can be divided into:

- Application software uses the computer system to perform special functions beyond the basic operation of the computer itself. There are many different types of application software because the range of tasks that can be performed with a modern computer is so large—see list of software.

- System software manages hardware behaviour so as to provide the basic functionality that users require and that other software needs in order to run properly, or at all. System software also provides a platform for running application software,[14] and includes the following:

- Operating systems are essential collections of software that manage resources and provide common services for other software that runs "on top" of them. Supervisory programs, boot loaders, shells and window systems are core parts of operating systems. In practice, an operating system comes bundled with additional software (including application software) so that a user can potentially do some work with a computer that only has one operating system.

- Device drivers operate or control a particular type of device that is attached to a computer. Each device needs at least one corresponding device driver; because a computer typically has at least one input device and at least one output device, it typically needs more than one device driver.

- Utilities are computer programs designed to assist users in the maintenance and care of their computers.

- Malicious software, or malware, is software that is developed to harm or disrupt computers. Malware is closely associated with computer-related crimes, though some malicious programs may have been designed as practical jokes.

Nature or domain of execution

- Desktop applications, such as web browsers, Microsoft Office, LibreOffice, and WordPerfect, as well as smartphone and tablet applications (called "apps").[citation needed]

- JavaScript scripts are pieces of software traditionally embedded in web pages that run directly inside the web browser when a web page is loaded, without the need for a web browser plugin. Software written in other programming languages can also run within the web browser if it is either translated into JavaScript or a web browser plugin that supports that language is installed; historically, the most common example of the latter was ActionScript, supported by the Adobe Flash plugin, which Adobe discontinued at the end of 2020.[citation needed]

- Server software, including:

- Web applications, which usually run on the web server and output dynamically generated web pages to web browsers, using e.g. PHP, Java, ASP.NET, or even JavaScript that runs on the server. In modern times these commonly include some JavaScript to be run in the web browser as well, in which case they typically run partly on the server, partly in the web browser.[citation needed]

- Plugins and extensions are software that extends or modifies the functionality of another piece of software, and require that software be used in order to function.[15]

- Embedded software resides as firmware within embedded systems, devices dedicated to a single use or a few uses such as cars and televisions (although some embedded devices such as wireless chipsets can themselves be part of an ordinary, non-embedded computer system such as a PC or smartphone).[16] In the embedded system context there is sometimes no clear distinction between the system software and the application software. However, some embedded systems run embedded operating systems, and these systems do retain the distinction between system software and application software (although typically there will only be one, fixed application which is always run).[citation needed]

- Microcode is a special, relatively obscure type of embedded software that tells the processor itself how to execute machine code, so it operates at a lower level than machine code.[citation needed] It is typically proprietary to the processor manufacturer, and any necessary corrective microcode updates are supplied by the manufacturer to users (which is much cheaper than shipping replacement processor hardware). An ordinary programmer would therefore not expect ever to deal with it directly.[citation needed]

Programming tools

Programming tools are also software in the form of programs or applications that developers use to create, debug, maintain, or otherwise support software.[17][better source needed]

Software is written in one or more programming languages. There are many programming languages in existence, and each has at least one implementation, each of which consists of its own set of programming tools. These tools may be relatively self-contained programs, such as compilers, debuggers, interpreters, linkers, and text editors, that can be combined to accomplish a task; or they may form an integrated development environment (IDE), which combines much or all of the functionality of such self-contained tools.[citation needed] IDEs may do this either by invoking the relevant individual tools or by re-implementing their functionality in a new way.[citation needed] An IDE can make specific tasks easier, such as searching the files of a particular project.[citation needed] Many programming language implementations offer the option of using either individual tools or an IDE.[citation needed]

Topics

Architecture

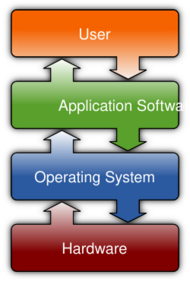

People who use modern general purpose computers (as opposed to embedded systems, analog computers and supercomputers) usually see three layers of software performing a variety of tasks: platform, application, and user software.[citation needed]

- Platform software: The platform includes the firmware, device drivers, an operating system, and typically a graphical user interface, which together allow a user to interact with the computer and its peripherals (associated equipment). Platform software often comes bundled with the computer. On a PC, the user can usually change the platform software.

- Application software: Application software is what most people think of when they think of software.[citation needed] Typical examples include office suites and video games. Application software is often purchased separately from computer hardware. Sometimes applications are bundled with the computer, but they still run as independent applications. Applications are usually programs separate from the operating system, though they are often tailored for specific platforms. Most users think of compilers, databases, and other "system software" as applications.[citation needed]

- User-written software: End-user development tailors systems to meet users' specific needs. User software includes spreadsheet templates and word processor templates.[citation needed] Even email filters are a kind of user software. Users create this software themselves and often overlook how important it is.[citation needed] Depending on how competently the user-written software has been integrated into default application packages, many users may not be aware of the distinction between the original packages, and what has been added by co-workers.[citation needed]

Execution

Computer software has to be "loaded" into the computer's storage (such as the hard drive or memory). Once the software has loaded, the computer is able to execute the software. This involves passing instructions from the application software, through the system software, to the hardware which ultimately receives the instruction as machine code. Each instruction causes the computer to carry out an operation—moving data, carrying out a computation, or altering the control flow of instructions.[citation needed]

Data movement is typically from one place in memory to another. Sometimes it involves moving data between memory and registers which enable high-speed data access in the CPU. Moving data, especially large amounts of it, can be costly; this is sometimes avoided by using "pointers" to data instead.[citation needed] Computations include simple operations such as incrementing the value of a variable data element. More complex computations may involve many operations and data elements together.[citation needed]
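Python has no raw pointers, but a rough analogue of "referring to data instead of moving it" is the difference between slicing a buffer, which copies the selected bytes, and slicing a `memoryview`, which refers to the existing bytes without copying them. A minimal sketch (the buffer sizes are arbitrary):

```python
# A large buffer: roughly 10 MB of zero bytes.
data = bytearray(10_000_000)

# Slicing a bytearray copies the selected bytes into a new object.
copied = data[:1_000_000]

# Slicing a memoryview creates a view onto the same memory; nothing is copied.
view = memoryview(data)[:1_000_000]

data[0] = 255  # modify the original buffer in place

print(copied[0])  # 0: the copy does not see the change
print(view[0])    # 255: the view shares the underlying memory
```

The view avoids the cost of copying a megabyte, at the price that changes to the original buffer are visible through it, the same trade-off that applies to pointers.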

Quality and reliability

Software quality is very important, especially for commercial and system software. Faulty software can delete a person's work, crash the computer, and do other unexpected things. Faults and errors are called "bugs" and are often discovered during alpha and beta testing.[citation needed] Software is also often subject to what is known as software aging, the progressive degradation of performance resulting from a combination of unseen bugs.[citation needed]

Many bugs are discovered and fixed through software testing. However, software testing rarely, if ever, eliminates every bug; some programmers say that "every program has at least one more bug" (Lubarsky's Law).[18] In the waterfall method of software development, separate testing teams are typically employed, but in newer approaches, collectively termed agile software development, developers often do all their own testing and demonstrate the software to users or clients regularly to obtain feedback.[citation needed] Software can be tested through unit testing, regression testing, and other methods, which are done manually or, most commonly, automatically, since the amount of code to be tested can be large.[citation needed] Programs containing command software enable hardware engineering and system operations to function together more easily.[19]
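Automated unit tests check small pieces of code in isolation and can be rerun after every change, which is also how regression tests guard against old bugs returning. The following minimal sketch uses Python's standard `unittest` module; the function `word_count` and the bug that the regression test guards against are hypothetical, invented for illustration.

```python
import unittest


def word_count(text: str) -> int:
    """Hypothetical function under test: count whitespace-separated words."""
    return len(text.split())


class WordCountTest(unittest.TestCase):
    def test_simple_sentence(self) -> None:
        self.assertEqual(word_count("software is eating the world"), 5)

    def test_empty_string(self) -> None:
        self.assertEqual(word_count(""), 0)

    def test_extra_whitespace(self) -> None:
        # Regression test: pins down behaviour for input that (hypothetically)
        # once caused a miscount, so the bug cannot silently return.
        self.assertEqual(word_count("  two   words  "), 2)
```

Saved as `test_word_count.py`, the tests can be run automatically with `python -m unittest test_word_count`, for example on every commit in a continuous-integration setup.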

License

A software license gives the user the right to use the software in the licensed environment; free software licenses also grant other rights, such as the right to make copies.[20]

Proprietary software can be divided into two types:

- freeware, which includes the category of "free trial" software or "freemium" software (in the past, the term shareware was often used for free trial/freemium software). As the name suggests, freeware can be used for free, although in the case of free trials or freemium software, this is sometimes only true for a limited period of time or with limited functionality.[21]

- software available for a fee, which can only be legally used on purchase of a license.[22]

Open-source software comes with a free software license, granting the recipient the rights to modify and redistribute the software.[23]

Patents

Software patents, like other types of patents, are theoretically supposed to give an inventor an exclusive, time-limited license for a detailed idea (e.g. an algorithm) on how to implement a piece of software, or a component of a piece of software. Ideas for useful things that software could do, and user requirements, are not supposed to be patentable, and concrete implementations (i.e. the actual software packages implementing the patent) are not supposed to be patentable either—the latter are already covered by copyright, generally automatically. So software patents are supposed to cover the middle area, between requirements and concrete implementation. In some countries, a requirement for the claimed invention to have an effect on the physical world may also be part of the requirements for a software patent to be held valid—although since all useful software has effects on the physical world, this requirement may be open to debate. Meanwhile, American copyright law was applied to various aspects of the writing of the software code.[24]

Software patents are controversial in the software industry, where people hold widely differing views about them. One source of controversy is that the aforementioned split between initial ideas and patent does not seem to be honored in practice by patent lawyers; an example is the patent for aspect-oriented programming (AOP), which purported to claim rights over any programming tool implementing the idea of AOP, however implemented.[citation needed] Another source of controversy is the effect on innovation: many distinguished experts and companies argue that software is such a fast-moving field that software patents merely create vast additional litigation costs and risks, and actually retard innovation.[citation needed] In debates about software patents outside the United States, the argument has been made that large American corporations and patent lawyers are likely to be the primary beneficiaries of allowing, or continuing to allow, software patents.[citation needed]

Design and implementation

Design and implementation of software vary depending on the complexity of the software. For instance, the design and creation of Microsoft Word took much more time than designing and developing Microsoft Notepad, because the former has much more functionality.[citation needed]

Software is usually developed in integrated development environments (IDEs) such as Eclipse, IntelliJ IDEA, and Microsoft Visual Studio, which can simplify the process and compile the software.[citation needed] Software is usually created on top of existing software and the application programming interface (API) that the underlying software provides, such as GTK+, JavaBeans, or Swing.[citation needed] Libraries (APIs) can be categorized by their purpose. For instance, the Spring Framework is used for implementing enterprise applications, the Windows Forms library is used for designing graphical user interface (GUI) applications like Microsoft Word, and Windows Communication Foundation is used for designing web services.[citation needed] When a program is designed, it relies upon the API. For instance, a Microsoft Windows desktop application might call API functions in the .NET Windows Forms library, such as Form1.Close() and Form1.Show(),[25] to close or open the application. Without these APIs, the programmer would need to implement this functionality themselves. Companies like Oracle and Microsoft provide their own APIs, so many applications are written using their software libraries, which usually contain numerous APIs.[citation needed]
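The Windows Forms calls mentioned above are specific to .NET. As a language-neutral illustration of relying on a library's API (a substitute example, not the .NET API), the following Python sketch uses the standard library's `json` module to save and reload application settings; the `settings` structure is hypothetical. Writing a correct JSON encoder and parser by hand, with escaping, nesting, and number formatting, would take considerably more code.

```python
import json

# Hypothetical application settings held in ordinary Python structures.
settings = {
    "window": {"title": "Untitled - Editor", "width": 800, "maximized": False},
    "recent_files": ["notes.txt", "report.docx"],
}

# The json module's API does the serialization work; the program only calls it.
text = json.dumps(settings, indent=2)

# The same API handles parsing when the data is read back.
restored = json.loads(text)

print(restored == settings)  # True
```

The program's own code stays short because the details live behind `json.dumps` and `json.loads`; the trade-off is a dependency on that API's behaviour and data model.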

Data structures such as hash tables, arrays, and binary trees, and algorithms such as quicksort, can be useful for creating software.
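As a brief illustration of two of the items named above, the following Python sketch implements quicksort and uses a hash table (Python's built-in `dict`, which CPython implements as a hash table) to count occurrences. This quicksort is a simplified, out-of-place variant that builds new lists around a middle pivot, not the classic in-place partitioning scheme.

```python
from typing import Dict, List


def quicksort(items: List[int]) -> List[int]:
    """Return a new sorted list using a simple out-of-place quicksort."""
    if len(items) <= 1:
        return items[:]
    pivot = items[len(items) // 2]
    smaller = [x for x in items if x < pivot]   # partition into three groups
    equal = [x for x in items if x == pivot]
    larger = [x for x in items if x > pivot]
    return quicksort(smaller) + equal + quicksort(larger)


def count_occurrences(items: List[int]) -> Dict[int, int]:
    """Count how often each value appears, using a hash table (dict)."""
    counts: Dict[int, int] = {}
    for x in items:
        counts[x] = counts.get(x, 0) + 1  # average O(1) lookup and update
    return counts


values = [5, 3, 8, 3, 1, 9, 5, 3]
print(quicksort(values))          # [1, 3, 3, 3, 5, 5, 8, 9]
print(count_occurrences(values))  # {5: 2, 3: 3, 8: 1, 1: 1, 9: 1}
```

Quicksort averages O(n log n) comparisons but degrades to O(n²) on unlucky pivot choices; the hash table gives average constant-time lookups, which is why it suits counting.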

Computer software has special economic characteristics that make its design, creation, and distribution different from most other economic goods.[specify][26][27]

A person who creates software is called a programmer, software engineer or software developer, terms that all have a similar meaning. More informal terms for programmer also exist such as "coder" and "hacker" – although use of the latter word may cause confusion, because it is more often used to mean someone who illegally breaks into computer systems.

See also

- Computer program

- Independent software vendor

- Open-source software

- Outline of software

- Software asset management

- Software release life cycle

References

- ↑ "ISO/IEC 2382:2015" (in en-US). 2020-09-03. https://www.iso.org/cms/render/live/en/sites/isoorg/contents/data/standard/06/35/63598.html. "[Software includes] all or part of the programs, procedures, rules, and associated documentation of an information processing system."

- ↑ "Compiler construction". http://www.cs.uu.nl/education/vak.php?vak=INFOMCCO.

- ↑ 3.0 3.1 Evans 2018, p. 21.

- ↑ Fuegi, J.; Francis, J. (2003). "Lovelace & Babbage and the creation of the 1843 'notes'". Annals of the History of Computing 25 (4): 16–26. doi:10.1109/MAHC.2003.1253887. https://pdfs.semanticscholar.org/81bb/f32d2642a7a8c6b0a867379a4e9e99d872bc.pdf.

- ↑ "Ada Lovelace honoured by Google doodle" (in en-US). The Guardian. December 10, 2012. https://www.theguardian.com/technology/2012/dec/10/ada-lovelace-honoured-google-doodle.

- ↑ Turing, Alan Mathison (1936). "On Computable Numbers, with an Application to the Entscheidungsproblem". Journal of Mathematics 58: 230–265. https://www.wolframscience.com/prizes/tm23/images/Turing.pdf. Retrieved 2022-08-28.

- ↑ Lorge Parnas, David (1984-11-01). "Software Engineering Principles". INFOR: Information Systems and Operational Research 22 (4): 303–316. doi:10.1080/03155986.1984.11731932. ISSN 0315-5986. https://doi.org/10.1080/03155986.1984.11731932.

- ↑ "The Teaching of Concrete Mathematics". American Mathematical Monthly (Taylor & Francis, Ltd. / Mathematical Association of America) 65 (1): 1–9, 2. January 1958. doi:10.2307/2310294. CODEN AMMYAE. ISSN 0002-9890. "[…] Today the "software" comprising the carefully planned interpretive routines, compilers, and other aspects of automative programming are at least as important to the modern electronic calculator as its "hardware" of tubes, transistors, wires, tapes, and the like. […]".

- ↑ "Chapter I - Integer arithmetic". The Mathematical-Function Computation Handbook - Programming Using the MathCW Portable Software Library (1 ed.). Salt Lake City, UT, US: Springer International Publishing AG. 2017-08-22. pp. 969, 1035. doi:10.1007/978-3-319-64110-2. ISBN 978-3-319-64109-6.

- ↑ "Origin of the Term Software: Evidence from the JSTOR Electronic Journal Archive". IEEE Annals of the History of Computing 22 (2): 69–71. 2000. doi:10.1109/mahc.2000.887997. http://computer.org/annals/an2000/pdf/a2069.pdf. Retrieved 2013-06-25.

- ↑ "John Tukey, 85, Statistician; Coined the Word 'Software'". The New York Times. 2000-07-28. https://www.nytimes.com/2000/07/28/us/john-tukey-85-statistician-coined-the-word-software.html.

- ↑ Softword: Provenance for the Word 'Software, 2006, ISBN 1-58922-233-4, http://www.niquette.com/books/softword/tocsoft.html, retrieved 2019-08-18

- ↑ A survey of the current status of the electronic reliability problem. Santa Monica, CA: Rand Corporation. 1953. p. 69. https://www.rand.org/content/dam/rand/pubs/research_memoranda/2013/RM1131.pdf#79. "[…] It will be recalled from Sec. 1.6 that the term personnel was defined to include people who come into direct contact with the hardware, from production to field use, i.e., people who assemble, inspect, pack, ship, handle, install, operate, and maintain electronic equipment. In any of these phases personnel failures may result in unoperational gear. As with the hardware factors, there is almost no quantitative data concerning these software or human factors in reliability: How many faults are caused by personnel, why they occur, and what can be done to remove the errors. […]"

- ↑ "System Software". The University of Mississippi. http://home.olemiss.edu/~misbook/sfsysfm.htm.

- ↑ Computer Hope. "What is a Plugin?" (in en-US). https://www.computerhope.com/jargon/p/plugin.htm.

- ↑ "Embedded Software—Technologies and Trends". IEEE Computer Society. May–June 2009. http://www.computer.org/csdl/mags/so/2009/03/mso2009030014.html.

- ↑ "What is a Programming Tool? - Definition from Techopedia" (in en-US). http://www.techopedia.com/definition/8996/programming-tool.

- ↑ "scripting intelligence book examples". 2018-05-09. https://github.com/mark-watson/scripting-intelligence-book-examples/blob/master/part1/wikipedia_text/software.txt.

- ↑ "What is System Software? – Definition from WhatIs.Com" (in en). https://www.techtarget.com/whatis/definition/system-software.

- ↑ "What is a Software License? Everything You Need to Know" (in en). https://www.techtarget.com/searchcio/definition/software-license.

- ↑ "Freeware vs Shareware - Difference and Comparison | Diffen" (in en). https://www.diffen.com/difference/Freeware_vs_Shareware.

- ↑ Morin, Andrew; Urban, Jennifer; Sliz, Piotr (2012-07-26). "A Quick Guide to Software Licensing for the Scientist-Programmer" (in en). PLOS Computational Biology 8 (7): e1002598. doi:10.1371/journal.pcbi.1002598. ISSN 1553-7358. PMID 22844236. Bibcode: 2012PLSCB...8E2598M.

- ↑ "Open source software explained" (in en-US). https://www.ionos.ca/digitalguide/server/know-how/what-is-open-source/.

- ↑ Gerardo Con Díaz, "The Text in the Machine: American Copyright Law and the Many Natures of Software, 1974–1978," Technology and Culture 57 (October 2016), 753–79.

- ↑ "MSDN Library". http://msdn.microsoft.com/en-us/library/default.aspx.

- ↑ v. Engelhardt, Sebastian (2008). "The Economic Properties of Software". Jena Economic Research Papers 2 (2008–045). https://ideas.repec.org/p/jrp/jrpwrp/2008-045.html.

- ↑ Kaminsky, Dan (1999-03-02). "Why Open Source Is The Optimum Economic Paradigm for Software" (in en-US). http://dankaminsky.com/1999/03/02/69/.

Sources

- Evans, Claire L. (2018). Broad Band: The Untold Story of the Women Who Made the Internet. New York: Portfolio/Penguin. ISBN 9780735211759. https://books.google.com/books?id=C8ouDwAAQBAJ&q=9780735211759&pg=PP1.
