Electrification

From Wikipedia - Reading time: 35 min

Electrification is the process of powering by electricity and, in many contexts, the introduction of such power by changing over from an earlier power source. In the context of history of technology and economic development, electrification refers to the build-out of the electricity generation and electric power distribution systems. In the context of sustainable energy, electrification refers to the build-out of super grids and smart grids with distributed energy resources (such as energy storage) to accommodate the energy transition to renewable energy and the switch of end-uses to electricity.[citation needed]

The electrification of particular sectors of the economy is referred to by more specific terms such as factory electrification, household electrification, rural electrification and railway electrification. In the context of sustainable energy, terms such as transport electrification (referring to electric vehicles) or heating electrification (referring to heat pumps) are used. The term may also apply to changing industrial processes such as smelting, melting, separating or refining from coal or coke heating,[clarification needed] or from chemical processes, to some type of electric process such as electric arc furnace, electric induction or resistance heating, or electrolysis or electrolytic separating.

Benefits of electrification

Electrification was called "the greatest engineering achievement of the 20th Century" by the National Academy of Engineering,[1] and it continues in both rich and poor countries.[2][3]

Benefits of electric lighting

Electric lighting is highly desirable. The light is much brighter than that of oil or gas lamps, and there is no soot. Although early electricity was very expensive compared to today, it was far cheaper and more convenient than oil or gas lighting. Electric lighting was so much safer than oil or gas that some companies were able to pay for the electricity with the insurance savings.[4]

Pre-electric power

In 1851, Charles Babbage stated:

One of the inventions most important to a class of highly skilled workers (engineers) would be a small motive power - ranging perhaps from the force of from half a man to that of two horses, which might commence as well as cease its action at a moment's notice, require no expense of time for its management and be of modest cost both in original cost and in daily expense.[5]

To be efficient, steam engines needed to be several hundred horsepower. Steam engines and boilers also required operators and maintenance. For these reasons the smallest commercial steam engines were about 2 horsepower. This was more than many small shops needed. Also, a small steam engine and boiler cost about $7,000 while an old blind horse that could develop 1/2 horsepower cost $20 or less.[6] Machinery to use horses for power cost $300 or less.[7]

Many power requirements were less than that of a horse. Shop machines, such as woodworking lathes, were often powered with a one- or two-man crank. Household sewing machines were powered with a foot treadle; however, factory sewing machines were steam-powered from a line shaft. Dogs were sometimes used on machines such as a treadmill, which could be adapted to churn butter.[8]

In the late 19th century, specially designed power buildings leased space to small shops. These buildings supplied power to the tenants from a steam engine through line shafts.[8]

Electric motors were several times more efficient than small steam engines because central station generation was more efficient than small steam engines and because line shafts and belts had high friction losses.[9][8]

Electric motors were more efficient than human or animal power. The conversion efficiency for animal feed to work is between 4 and 5% compared to over 30% for electricity generated using coal.[10][11]

Economic impact of electrification

Electrification and economic growth are highly correlated.[12] In economics, the efficiency of electrical generation has been shown to correlate with technological progress.[10][12]

In the U.S. from 1870 to 1880 each man-hour was provided with 0.55 hp. In 1950 each man-hour was provided with 5 hp, or a 2.8% annual increase, declining to 1.5% from 1930 to 1950.[13] The period of electrification of factories and households, from 1900 to 1940, was one of high productivity and economic growth.
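
As a rough check on the 2.8% figure, the compound annual growth rate can be recomputed from the two endpoints. The sketch below assumes the interval runs from 1870 to 1950 (80 years), which the text does not state explicitly; the function name `annual_growth_rate` is illustrative.

```python
def annual_growth_rate(start_value, end_value, years):
    """Compound annual growth rate between two values."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Figures from the text: 0.55 hp per man-hour (1870-1880) and 5 hp (1950).
# Assumption: the span is measured from 1870, i.e. 80 years.
rate = annual_growth_rate(0.55, 5.0, 1950 - 1870)
print(f"{rate:.1%}")  # close to the 2.8% quoted above
```

Measuring from 1880 instead would give a slightly higher rate, so the result is sensitive to the assumed start year.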

Most studies of electrification and electric grids focused on industrial core countries in Europe and the United States. Elsewhere, wired electricity was often carried on and through the circuits of colonial rule. Some historians and sociologists considered the interplay of colonial politics and the development of electric grids: in India, Rao[14] showed that linguistics-based regional politics—not techno-geographical considerations—led to the creation of two separate grids; in colonial Zimbabwe (Rhodesia), Chikowero[15] showed that electrification was racially based and served the white settler community while excluding Africans; and in Mandate Palestine, Shamir[16] claimed that British electric concessions to a Zionist-owned company deepened the economic disparities between Arabs and Jews.[citation needed]

Current extent of electrification

While electrification of cities and homes has existed since the late 19th century, about 840 million people (mostly in Africa) had no access to grid electricity in 2017, down from 1.2 billion in 2010.[18]

Vast gains in electrification were seen in the 1970s and 1980s—from 49% of the world's population in 1970 to 76% in 1990.[19][20] By the early 2010s, 81–83% of the world's population had access to electricity.[21]

Electrification for sustainable energy

Clean energy is mostly generated in the form of electricity, such as renewable energy or nuclear power. Switching to these energy sources requires that end uses, such as transport and heating, be electrified for the world's energy systems to be sustainable.

In the U.S. and Canada the use of heat pumps (HP) is economic if powered with solar photovoltaic (PV) devices to offset propane heating in rural areas[24] and natural gas heating in cities.[25] A 2023 study[26] investigated: (1) a residential natural gas-based heating system and grid electricity, (2) a residential natural gas-based heating system with PV to serve the electric load, (3) a residential HP system with grid electricity, and (4) a residential HP+PV system. It found that under typical inflation conditions, the lifecycle costs of natural gas heating and reversible air-source heat pumps are nearly identical, which in part explains why heat pump sales have surpassed gas furnace sales in the U.S. for the first time during a period of high inflation.[27] With higher rates of inflation or lower PV capital costs, PV becomes a hedge against rising prices and encourages the adoption of heat pumps by also locking in both electricity and heating cost growth. The study[26] concludes: "The real internal rate of return for such prosumer technologies is 20x greater than a long-term certificate of deposit, which demonstrates the additional value PV and HP technologies offer prosumers over comparably secure investment vehicles while making substantive reductions in carbon emissions." This approach can be improved by integrating a thermal battery into the heat pump+solar energy heating system.[28][29]

Transport electrification

It is easier to sustainably produce electricity than it is to sustainably produce liquid fuels. Therefore, adoption of electric vehicles is a way to make transport more sustainable.[30] Hydrogen vehicles may be an option for larger vehicles which have not yet been widely electrified, such as long distance lorries.[31] While electric vehicle technology is relatively mature in road transport, electric shipping and aviation are still early in their development, hence sustainable liquid fuels may have a larger role to play in these sectors.[32]

Heating electrification

A large fraction of the world population cannot afford sufficient cooling for their homes. In addition to air conditioning, which requires electrification and additional power demand, passive building design and urban planning will be needed to ensure cooling needs are met in a sustainable way.[33] Similarly, many households in the developing and developed world suffer from fuel poverty and cannot heat their houses enough.[34] Existing heating practices are often polluting.

A key sustainable solution to heating is electrification (heat pumps, or the less efficient electric heater). The IEA estimates that heat pumps currently provide only 5% of space and water heating requirements globally, but could provide over 90%.[35] Use of ground source heat pumps not only reduces total annual energy loads associated with heating and cooling, it also flattens the electric demand curve by eliminating the extreme summer peak electric supply requirements.[36] However, heat pumps and resistive heating alone will not be sufficient for the electrification of industrial heat. This is because several processes require higher temperatures than these types of equipment can achieve. For example, the production of ethylene via steam cracking requires temperatures as high as 900 °C. Hence, drastically new processes are required. Nevertheless, power-to-heat is expected to be the first step in the electrification of the chemical industry, with an expected large-scale implementation by 2025.[37]

Some cities in the United States have started prohibiting gas hookups for new houses, with state laws passed and under consideration to either require electrification or prohibit local requirements.[38] The UK government is experimenting with electrification for home heating to meet its climate goals.[39] Ceramic and induction heating for cooktops, as well as for industrial applications (for instance steam crackers), are examples of technologies that can be used to transition away from natural gas.[40]

Energy resilience

Electricity is a "sticky" form of energy, in that it tends to stay in the continent or island where it is produced. It is also multi-sourced; if one source suffers a shortage, electricity can be produced from other sources, including renewable sources. As a result, in the long term it is a relatively resilient means of energy transmission.[41] In the short term, because electricity must be supplied at the same moment it is consumed, it is somewhat unstable, compared to fuels that can be delivered and stored on-site. However, that can be mitigated by grid energy storage and distributed generation.

Managing variable energy sources

Solar and wind are variable renewable energy sources that supply electricity intermittently depending on the weather and the time of day.[42][43] Most electrical grids were constructed for non-intermittent energy sources such as coal-fired power plants.[44] As larger amounts of solar and wind energy are integrated into the grid, changes have to be made to the energy system to ensure that the supply of electricity is matched to demand.[45] In 2019, these sources generated 8.5% of worldwide electricity, a share that has grown rapidly.[46]

There are various ways to make the electricity system more flexible. In many places, wind and solar production are complementary on daily and seasonal scales: there is more wind during the night and in winter, when solar energy production is low.[45] Linking distant geographical regions through long-distance transmission lines allows for further cancelling out of variability.[47] Energy demand can be shifted in time through energy demand management and the use of smart grids, matching the times when variable energy production is highest. With storage, energy produced in excess can be released when needed.[45] Building additional capacity for wind and solar generation can help to ensure that enough electricity is produced even during poor weather; during optimal weather energy generation may have to be curtailed. The final mismatch may be covered by using dispatchable energy sources such as hydropower, bioenergy, or natural gas.[48]
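
The interplay of storage, curtailment and dispatchable backup described above can be illustrated with a toy energy balance. This is a minimal sketch, not a grid model: the function `balance`, the four-step supply and demand figures and the storage capacity are all hypothetical, and storage losses are ignored.

```python
def balance(supply, demand, capacity, stored=0.0):
    """Return (curtailed, backup) energy per time step after using storage.

    Surplus charges the store until it is full and the rest is curtailed;
    a deficit is met from the store first and then by dispatchable backup.
    """
    curtailed, backup = [], []
    for s, d in zip(supply, demand):
        surplus = s - d
        if surplus >= 0:
            charge = min(surplus, capacity - stored)
            stored += charge
            curtailed.append(surplus - charge)
            backup.append(0.0)
        else:
            discharge = min(-surplus, stored)
            stored -= discharge
            curtailed.append(0.0)
            backup.append(-surplus - discharge)
    return curtailed, backup


# Hypothetical variable supply and flat demand, in arbitrary energy units.
supply = [8, 6, 2, 0]
demand = [3, 3, 3, 3]

print(balance(supply, demand, capacity=0))  # no storage
print(balance(supply, demand, capacity=6))  # with storage
```

In this invented example, adding storage removes the need for backup entirely and reduces, but does not eliminate, curtailment.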

Energy storage

Energy storage helps overcome barriers for intermittent renewable energy, and is therefore an important aspect of a sustainable energy system.[49] The most commonly used storage method is pumped-storage hydroelectricity, which requires locations with large differences in height and access to water.[49] Batteries, and specifically lithium-ion batteries, are also deployed widely.[50] They contain cobalt, which is largely mined in Congo, a politically unstable region. More diverse geographical sourcing may ensure the stability of the supply chain, and the environmental impacts of batteries can be reduced by downcycling and recycling.[51][52] Batteries typically store electricity for short periods; research is ongoing into technology with sufficient capacity to last through seasons.[53] Pumped hydro storage and power-to-gas with capacity for multi-month usage have been implemented in some locations.[54][55]

As of 2018, thermal energy storage is typically not as convenient as burning fossil fuels. High upfront costs form a barrier for implementation. Seasonal thermal energy storage requires large capacity; it has been implemented in some high-latitude regions for household heat.[56]

History of electrification

The earliest commercial uses of electricity were electroplating and the telegraph.[57]

Development of magnetos, dynamos and generators

In the years 1831–1832, Michael Faraday discovered the operating principle of electromagnetic generators. The principle, later called Faraday's law, is based on an electromotive force generated in an electrical conductor that is subjected to a varying magnetic flux as, for example, a wire moving through a magnetic field. Faraday built the first electromagnetic generator, called the Faraday disk, a type of homopolar generator, using a copper disc rotating between the poles of a horseshoe magnet. Faraday's first electromagnetic generator produced a small DC voltage.

Around 1832, Hippolyte Pixii improved the magneto by using a wire wound horseshoe, with the extra coils of conductor generating more current, but it was AC. André-Marie Ampère suggested a means of converting current from Pixii's magneto to DC using a rocking switch. Later segmented commutators were used to produce direct current.[58]

Around 1838–40, William Fothergill Cooke and Charles Wheatstone developed a telegraph. In 1840 Wheatstone was using a magneto that he developed to power the telegraph. Wheatstone and Cooke made an important improvement in electrical generation by using a battery-powered electromagnet in place of a permanent magnet, which they patented in 1845.[59] The self-excited magnetic field dynamo did away with the battery to power electromagnets. This type of dynamo was made by several people in 1866.

The first practical generator, the Gramme machine, was made by Z.T. Gramme, who sold many of these machines in the 1870s. British engineer R.E.B. Crompton improved the generator to allow better air cooling and made other mechanical improvements. Compound winding, which gave more stable voltage with load, improved the operating characteristics of generators.[60]

The improvements in electrical generation technology in the 19th century increased its efficiency and reliability greatly. The first magnetos only converted a few percent of mechanical energy to electricity. By the end of the 19th century the highest efficiencies were over 90%.

Electric lighting

Arc lighting

Sir Humphry Davy invented the carbon arc lamp in 1802 upon discovering that electricity could produce a light arc with carbon electrodes. However, it was not used to any great extent until a practical means of generating electricity was developed.

Carbon arc lamps were started by making contact between two carbon electrodes, which were then separated to within a narrow gap. Because the carbon burned away, the gap had to be constantly readjusted. Several mechanisms were developed to regulate the arc. A common approach was to feed a carbon electrode by gravity and maintain the gap with a pair of electromagnets, one of which retracted the upper carbon after the arc was started and the second controlled a brake on the gravity feed.[8]

Arc lamps of the time had very intense light output – in the range of 4,000 candlepower (candelas) – and released a lot of heat, and they were a fire hazard, all of which made them inappropriate for lighting homes.[58]

In the 1850s, many of these problems were solved by the arc lamp invented by William Petrie and William Staite. The lamp used a magneto-electric generator and had a self-regulating mechanism to control the gap between the two carbon rods. Their light was used to light up the National Gallery in London and was a great novelty at the time. These arc lamps and designs similar to them, powered by large magnetos, were first installed on English lighthouses in the mid-1850s, but the technology suffered power limitations.[61]

The first successful arc lamp (the Yablochkov candle) was developed by Russian engineer Pavel Yablochkov using the Gramme generator. Its advantage lay in the fact that it did not require the use of a mechanical regulator like its predecessors. It was first exhibited at the Paris Exposition of 1878 and was heavily promoted by Gramme.[62] The arc light was installed along the half mile length of Avenue de l'Opéra, Place du Theatre Francais and around the Place de l'Opéra in 1878.[63]

R. E. B. Crompton developed a more sophisticated design in 1878 which gave a much brighter and steadier light than the Yablochkov candle. In 1878, he formed Crompton & Co. and began to manufacture, sell and install the Crompton lamp. His concern was one of the first electrical engineering firms in the world.

Incandescent light bulbs

Various forms of incandescent light bulbs had numerous inventors; however, the most successful early bulbs were those that used a carbon filament sealed in a high vacuum. These were invented by Joseph Swan in 1878 in Britain and by Thomas Edison in 1879 in the US. Edison’s lamp was more successful than Swan’s because Edison used a thinner filament, giving it higher resistance and thus conducting much less current. Edison began commercial production of carbon filament bulbs in 1880. Swan's light began commercial production in 1881.[64]

Swan's house, in Low Fell, Gateshead, was the world's first to have working light bulbs installed. The Lit & Phil Library in Newcastle was the first public room lit by electric light,[65][66] and the Savoy Theatre was the first public building in the world lit entirely by electricity.[67]

Central power stations and isolated systems

The first central station providing public power is believed to be one at Godalming, Surrey, UK, in autumn 1881. The system was proposed after the town failed to reach an agreement on the rate charged by the gas company, so the town council decided to use electricity. The system lit up arc lamps on the main streets and incandescent lamps on a few side streets with hydroelectric power. By 1882 between 8 and 10 households were connected, with a total of 57 lights. The system was not a commercial success, and the town reverted to gas.[68]

The first large scale central distribution supply plant was opened at Holborn Viaduct in London in 1882.[69] Equipped with 1000 incandescent lightbulbs that replaced the older gas lighting, the station lit up Holborn Circus including the offices of the General Post Office and the famous City Temple church. The supply was a direct current at 110 V; due to power loss in the copper wires, this amounted to 100 V for the customer.

Within weeks, a parliamentary committee recommended passage of the landmark 1882 Electric Lighting Act, which allowed the licensing of persons, companies or local authorities to supply electricity for any public or private purposes.

The first large scale central power station in America was Edison's Pearl Street Station in New York, which began operating in September 1882. The station had six 200 horsepower Edison dynamos, each powered by a separate steam engine. It was located in a business and commercial district and supplied 110 volt direct current to 85 customers with 400 lamps. By 1884 Pearl Street was supplying 508 customers with 10,164 lamps.[70]

By the mid-1880s, other electric companies were establishing central power stations and distributing electricity, including Crompton & Co. and the Swan Electric Light Company in the UK, Thomson-Houston Electric Company and Westinghouse in the US and Siemens in Germany. By 1890 there were 1000 central stations in operation.[8] The 1902 census listed 3,620 central stations. By 1925 half of power was provided by central stations.[71]

Load factor and isolated systems

One of the biggest problems facing the early power companies was the hourly variable demand. When lighting was practically the only use of electricity, demand was high during the first hours before the workday and peaked in the evening hours.[72] As a consequence, most early electric companies did not provide daytime service, with two-thirds providing no daytime service in 1897.[73]

The ratio of the average load to the peak load of a central station is called the load factor.[72] For electric companies to increase profitability and lower rates, it was necessary to increase the load factor. The way this was eventually accomplished was through motor load.[72] Motors are used more during daytime and many run continuously. Electric street railways were ideal for load balancing. Many electric railways generated their own power and also sold power and operated distribution systems.[4]
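
The definition above can be illustrated with a short calculation. The two 24-hour load profiles below are invented for illustration only and are not historical data; they simply show how adding daytime motor load raises the average without raising the evening peak, and so raises the load factor. The function name `load_factor` is hypothetical.

```python
def load_factor(loads_kw):
    """Ratio of average load to peak load over the period."""
    average = sum(loads_kw) / len(loads_kw)
    return average / max(loads_kw)


# Hypothetical hourly loads in kW, hour 0 to hour 23.
# Small morning demand, nothing during the day, an evening lighting peak.
lighting_only = [0] * 6 + [20] * 2 + [0] * 9 + [100] * 5 + [20] * 2

# Same profile with an assumed 60 kW of motor load from 08:00 to 17:00.
with_motors = [
    load + (60 if 8 <= hour < 17 else 0)
    for hour, load in enumerate(lighting_only)
]

print(f"lighting only: {load_factor(lighting_only):.1%}")
print(f"with motors:   {load_factor(with_motors):.1%}")
```

Because the station must be sized for the peak, a higher load factor means the same equipment sells more energy, which is why motor load improved profitability.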

The load factor adjusted upward by the turn of the 20th century—at Pearl Street the load factor increased from 19.3% in 1884 to 29.4% in 1908. By 1929, the load factor around the world was greater than 50%, mainly due to motor load.[74]

Before widespread power distribution from central stations, many factories, large hotels, apartment and office buildings had their own power generation. Often this was economically attractive because the exhaust steam could be used for building and industrial process heat, which today is known as cogeneration or combined heat and power (CHP). Most self-generated power became uneconomical as power prices fell. As late as the early 20th century, isolated power systems greatly outnumbered central stations.[8] Cogeneration is still commonly practiced in many industries that use large amounts of both steam and power, such as pulp and paper, chemicals and refining. The continued use of private electric generators is called microgeneration.

Direct current electric motors

The first commutator DC electric motor capable of turning machinery was invented by the British scientist William Sturgeon in 1832.[75] The crucial advance that this represented over the motor demonstrated by Michael Faraday was the incorporation of a commutator. This allowed Sturgeon's motor to be the first capable of providing continuous rotary motion.[76]

Frank J. Sprague improved on the DC motor in 1884 by solving the problem of maintaining a constant speed with varying load and reducing sparking from the brushes. Sprague sold his motor through Edison Co.[77] It is easy to vary speed with DC motors, which made them suited for a number of applications such as electric street railways, machine tools and certain other industrial applications where speed control was desirable.[8]

Manufacturing was transitioned from line shaft and belt drive using steam engines and water power to electric motors.[4][9]

Alternating current

Although the first power stations supplied direct current, the distribution of alternating current soon became the most favored option. The main advantages of AC were that it could be transformed to high voltage to reduce transmission losses and that AC motors could easily run at constant speeds.
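
The effect of voltage on transmission losses follows from resistive heating, where the loss in a line is the square of the current times the line resistance. The sketch below is a simplified illustration that treats the line as a pure resistance and ignores power factor; the 10 kW load, the 0.1 ohm line and the 20:1 voltage ratio are assumed values chosen for illustration, and `line_loss_watts` is a hypothetical helper.

```python
def line_loss_watts(power_w, voltage_v, resistance_ohm):
    """Resistive loss in a line carrying power_w at voltage_v."""
    current_a = power_w / voltage_v          # I = P / V
    return current_a ** 2 * resistance_ohm   # P_loss = I^2 * R


POWER_W = 10_000        # assumed load
LINE_OHM = 0.1          # assumed line resistance

low = line_loss_watts(POWER_W, 110, LINE_OHM)        # low-voltage distribution
high = line_loss_watts(POWER_W, 110 * 20, LINE_OHM)  # after a 20:1 step-up

print(f"loss at 110 V:  {low:.1f} W")
print(f"loss at 2200 V: {high:.1f} W")
print(f"reduction factor: {low / high:.0f}")
```

For the same power and the same line, raising the voltage by a factor of 20 cuts the current by 20 and the resistive loss by 20 squared, that is, by 400.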

Alternating current technology was rooted in Faraday's 1830–31 discovery that a changing magnetic field can induce an electric current in a circuit.[78]

The first person to conceive of a rotating magnetic field was Walter Baily, who gave a workable demonstration of his battery-operated polyphase motor aided by a commutator on June 28, 1879, to the Physical Society of London.[79] In 1880, French electrical engineer Marcel Deprez described an apparatus nearly identical to Baily's in a paper that identified the rotating magnetic field principle and a two-phase AC system of currents to produce it.[80] In 1886, English-born American engineer Elihu Thomson built an AC motor by expanding upon the induction-repulsion principle and his wattmeter.[81]

It was in the 1880s that the technology was commercially developed for large scale electricity generation and transmission. In 1882 the British inventor and electrical engineer Sebastian de Ferranti, working for the company Siemens, collaborated with the distinguished physicist Lord Kelvin to pioneer AC power technology including an early transformer.[82]

A power transformer developed by Lucien Gaulard and John Dixon Gibbs was demonstrated in London in 1881, and attracted the interest of Westinghouse. They also exhibited the invention in Turin in 1884, where it was adopted for an electric lighting system. Many of their designs were adapted to the particular laws governing electrical distribution in the UK.[citation needed]

Sebastian Ziani de Ferranti went into this business in 1882 when he set up a shop in London designing various electrical devices. Ferranti believed in the success of alternating current power distribution early on, and was one of the few experts in this system in the UK. With the help of Lord Kelvin, Ferranti pioneered the first AC power generator and transformer in 1882.[83] John Hopkinson, a British physicist, invented the three-wire system for the distribution of electrical power, for which he was granted a patent in 1882.[84]

The Italian inventor Galileo Ferraris invented a polyphase AC induction motor in 1885. The idea was that two out-of-phase, but synchronized, currents might be used to produce two magnetic fields that could be combined to produce a rotating field without any need for switching or for moving parts. Other inventors were the American engineers Charles S. Bradley and Nikola Tesla, and the German technician Friedrich August Haselwander.[85] They were able to overcome the problem of starting up the AC motor by using a rotating magnetic field produced by a poly-phase current.[86] Mikhail Dolivo-Dobrovolsky introduced the first three-phase induction motor in 1890, a much more capable design that became the prototype used in Europe and the U.S.[87] By 1895 GE and Westinghouse both had AC motors on the market.[88] With single phase current either a capacitor or coil (creating inductance) can be used on part of the circuit inside the motor to create a rotating magnetic field.[89] Multi-speed AC motors that have separately wired poles have long been available, the most common being two speed. Speed of these motors is changed by switching sets of poles on or off, which was done with a special motor starter for larger motors, or a simple multiple speed switch for fractional horsepower motors.
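
The two-phase idea described above can be checked numerically. The sketch below is an idealized illustration, assuming two identical coils at right angles whose currents are a quarter cycle apart; the function `field_vector`, the 50 Hz frequency and the unit peak field are assumptions for the example, not details from any historical motor.

```python
import math


def field_vector(t, freq_hz=50.0, b_peak=1.0):
    """Net field from two perpendicular coils with currents 90 degrees apart."""
    wt = 2 * math.pi * freq_hz * t
    bx = b_peak * math.cos(wt)  # coil aligned with the x axis
    by = b_peak * math.sin(wt)  # coil aligned with the y axis, a quarter cycle later
    return bx, by


# Sample the field at quarter-cycle intervals.
for step in range(4):
    t = step / (4 * 50.0)
    bx, by = field_vector(t)
    magnitude = math.hypot(bx, by)
    angle_deg = math.degrees(math.atan2(by, bx)) % 360
    print(f"t={t * 1000:4.1f} ms  |B|={magnitude:.3f}  angle={angle_deg:5.1f} deg")
```

The magnitude of the combined field stays constant while its direction advances steadily, which is the rotating field: neither coil moves and nothing is switched.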

AC power stations

The first AC power station was built by the English electrical engineer Sebastian de Ferranti. In 1887 the London Electric Supply Corporation hired Ferranti for the design of their power station at Deptford. He designed the building, the generating plant and the distribution system. It was built at the Stowage, a site to the west of the mouth of Deptford Creek once used by the East India Company. Built on an unprecedented scale and pioneering the use of high-voltage (10,000 V) AC, it generated 800 kilowatts and supplied central London. On its completion in 1891 it was the first truly modern power station, supplying high-voltage AC power that was then "stepped down" with transformers for consumer use on each street. This basic system remains in use today around the world.

In the U.S., George Westinghouse, who had become interested in the power transformer developed by Gaulard and Gibbs, began to develop his AC lighting system, using a transmission system with a 20:1 voltage step-up followed by a step-down. In 1890 Westinghouse and Stanley built a system to transmit power several miles to a mine in Colorado. A decision was taken to use AC for power transmission from the Niagara Power Project to Buffalo, New York. Proposals submitted by vendors in 1890 included DC and compressed air systems. A combination DC and compressed air system remained under consideration until late in the schedule. Despite the protestations of the Niagara commissioner William Thomson (Lord Kelvin), the decision was taken to build an AC system, which had been proposed by both Westinghouse and General Electric. In October 1893 Westinghouse was awarded the contract to provide the first three 5,000 hp, 250 rpm, 25 Hz, two-phase generators.[90] The hydro power plant went online in 1895,[91] and it was the largest one until that date.[92]

By the 1890s, single and poly-phase AC was undergoing rapid introduction.[93] In the U.S. by 1902, 61% of generating capacity was AC, increasing to 95% in 1917.[94] Despite the superiority of alternating current for most applications, a few existing DC systems continued to operate for several decades after AC became the standard for new systems.

Steam turbines

The efficiency of steam prime movers in converting the heat energy of fuel into mechanical work was a critical factor in the economic operation of steam central generating stations. Early projects used reciprocating steam engines, operating at relatively low speeds. The introduction of the steam turbine fundamentally changed the economics of central station operations. Steam turbines could be made in larger ratings than reciprocating engines, and generally had higher efficiency. The speed of steam turbines did not fluctuate cyclically during each revolution. This made parallel operation of AC generators feasible, and improved the stability of rotary converters for production of direct current for traction and industrial uses. Steam turbines ran at higher speed than reciprocating engines, not being limited by the allowable speed of a piston in a cylinder. This made them more compatible with AC generators with only two or four poles; no gearbox or belted speed increaser was needed between the engine and the generator. It was costly and ultimately impossible to provide a belt-drive between a low-speed engine and a high-speed generator in the very large ratings required for central station service.
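
The link between shaft speed and pole count follows from the standard synchronous-speed relation for AC machines: speed in rpm equals 120 times the frequency in hertz divided by the number of poles. The sketch below applies it to two- and four-pole machines and, as a cross-check, to the 250 rpm, 25 Hz Niagara generators mentioned earlier; the function names are illustrative.

```python
def synchronous_speed_rpm(frequency_hz, poles):
    """Shaft speed at which a generator with this many poles yields frequency_hz."""
    return 120.0 * frequency_hz / poles


def poles_for(frequency_hz, speed_rpm):
    """Number of poles needed to produce frequency_hz at speed_rpm."""
    return round(120.0 * frequency_hz / speed_rpm)


for freq in (50, 60):
    for poles in (2, 4):
        rpm = synchronous_speed_rpm(freq, poles)
        print(f"{freq} Hz, {poles} poles: {rpm:.0f} rpm")

# Slow water-turbine-driven machine from the text: 250 rpm at 25 Hz.
print("Niagara generator poles:", poles_for(25, 250))
```

A two-pole generator needs thousands of revolutions per minute, a speed that suits a directly coupled turbine, whereas a slow prime mover needs a many-pole machine or a speed increaser.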

The modern steam turbine was invented in 1884 by British engineer Sir Charles Parsons, whose first model was connected to a dynamo that generated 7.5 kW (10 hp) of electricity.[95] The invention of Parsons's steam turbine made cheap and plentiful electricity possible. Parsons turbines were widely introduced in English central stations by 1894; the first electric supply company in the world to generate electricity using turbo generators was Parsons's own electricity supply company Newcastle and District Electric Lighting Company, set up in 1894.[96] Within Parsons's lifetime, the generating capacity of a unit was scaled up by about 10,000 times.[97]

The first U.S. turbines were two De Laval units at Edison Co. in New York in 1895. The first U.S. Parsons turbine was at Westinghouse Air Brake Co. near Pittsburgh.[98]

Steam turbines also had capital cost and operating advantages over reciprocating engines. The condensate from steam engines was contaminated with oil and could not be reused, while condensate from a turbine is clean and typically reused. Steam turbines were a fraction of the size and weight of comparably rated reciprocating steam engines. Steam turbines can operate for years with almost no wear, whereas reciprocating steam engines required high maintenance. Steam turbines can be manufactured with capacities far larger than any steam engines ever made, giving important economies of scale.

Steam turbines could be built to operate on steam at higher pressures and temperatures. A fundamental principle of thermodynamics is that the higher the temperature of the steam entering an engine, the higher the efficiency that can be attained. The introduction of steam turbines motivated a series of improvements in temperatures and pressures. The resulting increased conversion efficiency lowered electricity prices.[99]
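The thermodynamic principle mentioned above is commonly expressed through the Carnot limit, 1 - T_cold / T_hot, which bounds the efficiency of any heat engine working between two absolute temperatures. The following Python sketch is a minimal illustration under stated assumptions: the function name and the example temperatures are hypothetical, and real steam plants, which run on practical steam cycles with losses, achieve considerably less than this upper bound.

```python
def carnot_efficiency(hot_kelvin: float, cold_kelvin: float) -> float:
    """Return the Carnot upper bound on heat-engine efficiency.

    Both temperatures are absolute (kelvin). The result is a theoretical
    ceiling, not the efficiency of any actual plant.
    """
    if cold_kelvin <= 0:
        raise ValueError("cold_kelvin must be positive")
    if hot_kelvin <= cold_kelvin:
        raise ValueError("hot_kelvin must exceed cold_kelvin")
    return 1.0 - cold_kelvin / hot_kelvin


if __name__ == "__main__":
    cold_kelvin = 300.0  # assumed condenser-side temperature, illustrative only
    for hot_kelvin in (450.0, 600.0, 800.0):  # assumed steam temperatures
        limit = carnot_efficiency(hot_kelvin, cold_kelvin)
        print(f"steam at {hot_kelvin:.0f} K: Carnot limit {limit:.1%}")
```

With the cold-side temperature held fixed, the bound rises as the steam temperature rises, which is why higher steam conditions were worth pursuing.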

The power density of boilers was increased by using forced combustion air and by using compressed air to feed pulverized coal. Also, coal handling was mechanized and automated.[100]

Electrical grid

With the realization of long-distance power transmission, it became possible to interconnect different central stations to balance loads and improve load factors. Interconnection became increasingly desirable as electrification grew rapidly in the early years of the 20th century.
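Load factor is conventionally defined as average load divided by peak load over a period. The Python sketch below illustrates why interconnecting stations whose peaks fall at different times can raise it. The two load profiles are hypothetical numbers invented for this example, not historical data, and the function name is likewise an assumption.

```python
from typing import Sequence


def load_factor(loads_mw: Sequence[float]) -> float:
    """Return average load divided by peak load for a series of readings."""
    if not loads_mw:
        raise ValueError("loads_mw must not be empty")
    peak = max(loads_mw)
    if peak <= 0:
        raise ValueError("peak load must be positive")
    return (sum(loads_mw) / len(loads_mw)) / peak


# Hypothetical loads (MW) in four equal periods of a day.
industrial_station = [2.0, 8.0, 8.0, 4.0]   # peaks during working hours
residential_station = [3.0, 2.0, 4.0, 8.0]  # peaks in the evening

# An interconnected system serves the sum of both loads in each period.
combined_system = [a + b for a, b in zip(industrial_station, residential_station)]

if __name__ == "__main__":
    for name, loads in (
        ("industrial", industrial_station),
        ("residential", residential_station),
        ("combined", combined_system),
    ):
        print(f"{name:12s} load factor: {load_factor(loads):.3f}")
```

Because the two peaks do not coincide, the combined peak is smaller than the sum of the individual peaks, so the same generating capacity is used more fully.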

Charles Merz, of the Merz & McLellan consulting partnership, built the Neptune Bank Power Station near Newcastle upon Tyne in 1901,[101] and by 1912 the system had developed into the largest integrated power system in Europe.[102] In 1905 he tried to influence Parliament to unify the variety of voltages and frequencies in the country's electricity supply industry, but it was not until World War I that Parliament began to take this idea seriously, appointing him head of a Parliamentary Committee to address the problem. In 1916 Merz pointed out that the UK could use its small size to its advantage by creating a dense distribution grid to feed its industries efficiently. His findings led to the Williamson Report of 1918, which in turn led to the Electricity Supply Bill of 1919. The bill was the first step towards an integrated electricity system in the UK.

The more significant Electricity (Supply) Act of 1926 led to the setting up of the National Grid.[103] The Central Electricity Board standardised the nation's electricity supply and established the first synchronised AC grid, running at 132 kilovolts and 50 hertz. This started operating as a national system, the National Grid, in 1938.

In the United States, consolidating supply became a national objective after the power crisis of the summer of 1918, in the midst of World War I. In 1935 the Public Utility Holding Company Act recognized electric utilities as public goods of importance, along with gas, water, and telephone companies, and subjected their operations to outlined restrictions and regulatory oversight.[104]

Household electrification

The electrification of households in Europe and North America began in the early 20th century in major cities and in areas served by electric railways. It increased rapidly until about 1930, when 70% of households in the U.S. were electrified.

Rural areas were electrified first in Europe. In the U.S., the Rural Electrification Administration, established in 1935, brought electrification to underserved rural areas.[105]

In the Soviet Union, as in the United States, rural electrification progressed more slowly than in urban areas. It was not until the Brezhnev era that electrification became widespread in rural regions, with the Soviet rural electrification drive largely completed by the early 1970s.[106]

In China, the turmoil of the Warlord Era, the Civil War and the Japanese invasion in the early 20th century delayed large-scale electrification for decades. It was only after the establishment of the People's Republic of China in 1949 that the country was positioned to pursue widespread electrification. During the Mao years, while electricity became commonplace in cities, rural areas were largely neglected.[107] At the time of Mao's death in 1976, 25% of Chinese households still lacked access to electricity.[108]

Deng Xiaoping, who became China's paramount leader in 1978, initiated a rural electrification drive as part of a broader modernization effort. By the late 1990s, electricity had become ubiquitous in regional areas.[109] The very last remote villages in China were connected to the grid in 2015.[110]

Historical cost of electricity

Central station electric power generation provided power more efficiently and at lower cost than small generators. The capital and operating costs per unit of power were also lower with central stations.[9] The cost of electricity fell dramatically in the first decades of the twentieth century due to the introduction of steam turbines and the improved load factor after the introduction of AC motors. As electricity prices fell, usage increased dramatically and central stations were scaled up to enormous sizes, creating significant economies of scale.[111] For the historical cost, see Ayres-Warr (2002) Fig. 7.[11]

See also

- GOELRO plan

- Mains electricity by country - Plugs, voltages and frequencies

- Renewable electricity

- Renewable energy development

References

Citations

- ^ Constable, George; Somerville, Bob (2003). A Century of Innovation. doi:10.17226/10726. ISBN 978-0-309-08908-1.[page needed]

- ^ Agutu, Churchill; Egli, Florian; Williams, Nathaniel J.; Schmidt, Tobias S.; Steffen, Bjarne (9 June 2022). "Accounting for finance in electrification models for sub-Saharan Africa". Nature Energy. 7 (7): 631–641. Bibcode:2022NatEn...7..631A. doi:10.1038/s41560-022-01041-6.

- ^ Hakimian, Rob (2022-06-10). "Procurement launched for largest rail electrification project in the world". New Civil Engineer. Retrieved 2022-06-10.

- ^ a b c Nye 1990, p. [page needed].

- ^ Cardwell, D. S. L. (1972). Technology Science and History. London: Heinemann. p. 163.

- ^ Unskilled labor made approximately $1.25 per 10- to 12-hour day. Hunter and Bryant cite a letter from Benjamin Latrobe to John Stevens ca. 1814 giving the cost of two old blind horses used to power a mill at $20 and $14. A good dray horse cost $165.

- ^ Hunter & Bryant 1991, pp. 29–30.

- ^ a b c d e f g Hunter & Bryant 1991, p. [page needed].

- ^ a b c Devine, Warren D. (June 1983). "From Shafts to Wires: Historical Perspective on Electrification". The Journal of Economic History. 43 (2): 347–372. doi:10.1017/S0022050700029673.

- ^ a b Ayres, R (March 2003). "Exergy, power and work in the US economy, 1900–1998". Energy. 28 (3): 219–273. Bibcode:2003Ene....28..219A. doi:10.1016/S0360-5442(02)00089-0.

- ^ a b Robert U. Ayres; Benjamin Warr. "Two Paradigms of Production and Growth" (PDF). Archived from the original (PDF) on 2013-05-02.

- ^ a b National Research Council (1986). Electricity in Economic Growth. pp. 16, 40. doi:10.17226/900. ISBN 978-0-309-03677-1.

- ^ Kendrick, John W. (1980). Productivity in the United States: Trends and Cycles. The Johns Hopkins University Press. p. 97. ISBN 978-0-8018-2289-6.

- ^ Rao, Yenda Srinivasa (2010). "Electricity, Politics and Regional Economic Imbalance in Madras Presidency, 1900-1947". Economic and Political Weekly. 45 (23): 59–66. JSTOR 27807107.

- ^ Chikowero, Moses (2007). "Subalternating Currents: Electrification and Power Politics in Bulawayo, Colonial Zimbabwe, 1894-1939". Journal of Southern African Studies. 33 (2): 287–306. doi:10.1080/03057070701292590. JSTOR 25065197.

- ^ Shamir, Ronen (2013). Current Flow: The Electrification of Palestine (1 ed.). Stanford University Press. doi:10.2307/j.ctvqr1d51. ISBN 978-0-8047-8706-2. JSTOR j.ctvqr1d51.[page needed]

- ^ "Access to electricity (% of population)". Data. The World Bank. Archived from the original on 16 September 2017. Retrieved 5 October 2019.

- ^ Odarno, Lily (14 August 2019). "Closing Sub-Saharan Africa's Electricity Access Gap: Why Cities Must Be Part of the Solution". World Resources Institute. Archived from the original on 2019-12-19. Retrieved 2019-11-26.

- ^ "IEA - Energy Access". worldenergyoutlook.org. Archived from the original on 2013-05-31. Retrieved 2013-05-30.

- ^ Zerriffi, Hisham (11 April 2008). "From açaí to access: distributed electrification in rural Brazil". International Journal of Energy Sector Management. 2 (1): 90–117. Bibcode:2008IJESM...2...90Z. doi:10.1108/17506220810859114.

- ^ "Population growth erodes sustainable energy gains - UN report". trust.org. Thomson Reuters Foundation. Archived from the original on 2014-11-10. Retrieved 2013-06-17.

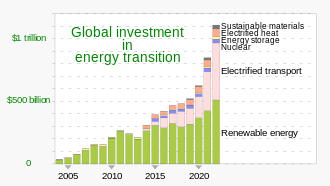

- ^ "Global Clean Energy Investment Jumps 17%, Hits $1.8 Trillion in 2023, According to BloombergNEF Report". BNEF.com. Bloomberg NEF. 30 January 2024. Archived from the original on June 28, 2024.

Start years differ by sector but all sectors are present from 2020 onwards.

- ^ 2024 data: "Energy Transition Investment Trends 2025 / Abridged report" (PDF). BloombergNEF. 30 January 2025. p. 9. Archived (PDF) from the original on 2 February 2025.

- ^ Padovani, Filippo; Sommerfeldt, Nelson; Longobardi, Francesca; Pearce, Joshua M. (2021-11-01). "Decarbonizing rural residential buildings in cold climates: A techno-economic analysis of heating electrification". Energy and Buildings. 250 111284. Bibcode:2021EneBu.25011284P. doi:10.1016/j.enbuild.2021.111284.

- ^ Pearce, Joshua M.; Sommerfeldt, Nelson (5 February 2021). "Economics of Grid-Tied Solar Photovoltaic Systems Coupled to Heat Pumps: The Case of Northern Climates of the U.S. and Canada". Energies. 14 (4): 834. doi:10.3390/en14040834.

- ^ a b Sommerfeldt, Nelson; Pearce, Joshua M. (2023-04-15). "Can grid-tied solar photovoltaics lead to residential heating electrification? A techno-economic case study in the midwestern U.S." Applied Energy. 336 120838. Bibcode:2023ApEn..33620838S. doi:10.1016/j.apenergy.2023.120838.

- ^ "Chart: Americans bought more heat pumps than gas furnaces last year". Canary Media. 10 February 2023. Retrieved 2023-03-01.

- ^ Li, Yuanyuan; Rosengarten, Gary; Stanley, Cameron; Mojiri, Ahmad (December 2022). "Electrification of residential heating, cooling and hot water: Load smoothing using onsite photovoltaics, heat pump and thermal batteries". Journal of Energy Storage. 56 105873. Bibcode:2022JEnSt..5605873L. doi:10.1016/j.est.2022.105873.

- ^ Ermel, Conrado; Bianchi, Marcus V.A.; Cardoso, Ana Paula; Schneider, Paulo S. (October 2022). "Thermal storage integrated into air-source heat pumps to leverage building electrification: A systematic literature review". Applied Thermal Engineering. 215 118975. Bibcode:2022AppTE.21518975E. doi:10.1016/j.applthermaleng.2022.118975.

- ^ Bogdanov, Dmitrii; Farfan, Javier; Sadovskaia, Kristina; Aghahosseini, Arman; Child, Michael; Gulagi, Ashish; Oyewo, Ayobami Solomon; de Souza Noel Simas Barbosa, Larissa; Breyer, Christian (6 March 2019). "Radical transformation pathway towards sustainable electricity via evolutionary steps". Nature Communications. 10 (1) 1077. Bibcode:2019NatCo..10.1077B. doi:10.1038/s41467-019-08855-1. PMC 6403340. PMID 30842423.

- ^ Miller, Joe (2020-09-09). "Hydrogen takes a back seat to electric for passenger vehicles". Financial Times. Archived from the original on 2020-09-20. Retrieved 2020-09-20.

- ^ International Energy Agency 2020, p. 139.

- ^ Mastrucci, Alessio; Byers, Edward; Pachauri, Shonali; Rao, Narasimha D. (March 2019). "Improving the SDG energy poverty targets: Residential cooling needs in the Global South". Energy and Buildings. 186: 405–415. Bibcode:2019EneBu.186..405M. doi:10.1016/j.enbuild.2019.01.015.

- ^ Bouzarovski, Stefan; Petrova, Saska (November 2015). "A global perspective on domestic energy deprivation: Overcoming the energy poverty–fuel poverty binary". Energy Research & Social Science. 10: 31–40. Bibcode:2015ERSS...10...31B. doi:10.1016/j.erss.2015.06.007.

- ^ Abergel, Thibaut (June 2020). "Heat Pumps". IEA. Archived from the original on 3 March 2021. Retrieved 12 April 2021.

- ^ Mueller, Mike (August 1, 2017). "5 Things You Should Know about Geothermal Heat Pumps". Office of Energy Efficiency & Renewable Energy. US Department of Energy. Archived from the original on 15 April 2021. Retrieved 17 April 2021.

- ^ "Dream or Reality? Electrification of the Chemical Process Industries". www.aiche-cep.com. Retrieved 2022-01-16.

- ^ "Dozens Of US Cities Are Banning Natural Gas Hookups In New Buildings — #CancelGas #ElectrifyEverything". 9 March 2021. Archived from the original on 2021-08-09. Retrieved 2021-08-09.

- ^ "Heat in Buildings". Archived from the original on 2021-08-18. Retrieved 2021-08-09.

- ^ "BASF, SABIC and Linde join forces to realize the world's first electrically heated steam cracker furnace". www.basf.com. Archived from the original on 2021-09-24. Retrieved 2021-09-24.

- ^ "Our Electric Future — The American, A Magazine of Ideas". American.com. 2009-06-15. Archived from the original on 2014-08-25. Retrieved 2009-06-19.

- ^ Jerez, Sonia; Tobin, Isabelle; Turco, Marco; María López-Romero, Jose; Montávez, Juan Pedro; Jiménez-Guerrero, Pedro; Vautard, Robert (2018). "Resilience of the combined wind-plus-solar power production in Europe to climate change: a focus on the supply intermittence". EGUGA: 15424. Bibcode:2018EGUGA..2015424J.

- ^ Lave, Matthew; Ellis, Abraham (2016). "Comparison of solar and wind power generation impact on net load across a utility balancing area". 2016 IEEE 43rd Photovoltaic Specialists Conference (PVSC). pp. 1837–1842. doi:10.1109/PVSC.2016.7749939. ISBN 978-1-5090-2724-8. OSTI 1368867.

- ^ "Introduction to System Integration of Renewables – Analysis". IEA. 11 March 2020. Archived from the original on 2020-05-15. Retrieved 2020-05-30.

- ^ a b c Blanco, Herib; Faaij, André (January 2018). "A review at the role of storage in energy systems with a focus on Power to Gas and long-term storage". Renewable and Sustainable Energy Reviews. 81: 1049–1086. Bibcode:2018RSERv..81.1049B. doi:10.1016/j.rser.2017.07.062.

- ^ "Wind & Solar Share in Electricity Production Data". Enerdata. Archived from the original on 2019-07-19. Retrieved 2021-05-21.

- ^ REN21 2020, p. 177.

- ^ International Energy Agency 2020, p. 109.

- ^ a b Koohi-Fayegh, S.; Rosen, M.A. (February 2020). "A review of energy storage types, applications and recent developments". Journal of Energy Storage. 27 101047. Bibcode:2020JEnSt..2701047K. doi:10.1016/j.est.2019.101047.

- ^ Katz, Cheryl (18 December 2020). "The batteries that could make fossil fuels obsolete". BBC. Archived from the original on 2021-01-11. Retrieved 2021-01-10.

- ^ Babbitt, Callie W. (August 2020). "Sustainability perspectives on lithium-ion batteries". Clean Technologies and Environmental Policy. 22 (6): 1213–1214. Bibcode:2020CTEP...22.1213B. doi:10.1007/s10098-020-01890-3.

- ^ Baumann-Pauly, Dorothée (16 September 2020). "Cobalt can be sourced responsibly, and it's time to act". SWI swissinfo.ch. Archived from the original on 2020-11-26. Retrieved 2021-04-10.

- ^ Blanco, Herib; Faaij, André (January 2018). "A review at the role of storage in energy systems with a focus on Power to Gas and long-term storage". Renewable and Sustainable Energy Reviews. 81: 1049–1086. Bibcode:2018RSERv..81.1049B. doi:10.1016/j.rser.2017.07.062.

- ^ Hunt, Julian D.; Byers, Edward; Wada, Yoshihide; Parkinson, Simon; Gernaat, David E. H. J.; Langan, Simon; van Vuuren, Detlef P.; Riahi, Keywan (19 February 2020). "Global resource potential of seasonal pumped hydropower storage for energy and water storage". Nature Communications. 11 (1) 947. Bibcode:2020NatCo..11..947H. doi:10.1038/s41467-020-14555-y. PMC 7031375. PMID 32075965.

- ^ Balaraman, Kavya (2020-10-12). "To batteries and beyond: With seasonal storage potential, hydrogen offers 'a different ballgame entirely'". Utility Dive. Archived from the original on 2021-01-18. Retrieved 2021-01-10.

- ^ Alva, Guruprasad; Lin, Yaxue; Fang, Guiyin (February 2018). "An overview of thermal energy storage systems". Energy. 144: 341–378. Bibcode:2018Ene...144..341A. doi:10.1016/j.energy.2017.12.037.

- ^ "Early Applications of Electricity". ETHW. 2015-09-14. Retrieved 2023-11-16.

- ^ a b McNeil 1990, p. [page needed].

- ^ McNeil 1990, p. 359.

- ^ McNeil 1990, p. 360.

- ^ McNeil 1990, pp. 360–365.

- ^ Woodbury, David Oakes (1949). A Measure for Greatness: A Short Biography of Edward Weston. McGraw-Hill. p. 83. Retrieved 2009-01-04.

- ^ Barrett, John Patrick (1894). Electricity at the Columbian Exposition. R. R. Donnelley & sons company. p. 1. Retrieved 2009-01-04.

- ^ McNeil 1990, pp. 366–368.

- ^ Glover, Andrew (8 February 2011). "Alexander Armstrong in appeal to save Lit and Phil". The Journal. Archived from the original on 15 February 2011. Retrieved 8 February 2011.

The society's lecture theatre was the first public room to be lit by electric light, during a lecture by Sir Joseph Swan on October 20, 1880.

- ^ History in pictures - The Lit & Phil Archived 2012-07-19 at archive.today BBC. Retrieved 8 August 2011

- ^ Burgess, Michael. "Richard D'Oyly Carte", The Savoyard, January 1975, pp. 7–11

- ^ McNeil 1990, p. 369.

- ^ "History of public supply in the UK". Archived from the original on 2010-12-01.

- ^ Hunter & Bryant 1991, p. 191.

- ^ Hunter & Bryant 1991, p. 242.

- ^ a b c Hunter & Bryant 1991, pp. 276–279.

- ^ Hunter & Bryant 1991, pp. 212, Note 53.

- ^ Hunter & Bryant 1991, pp. 283–284.

- ^ Gee, William (2004). "Sturgeon, William (1783–1850)". Oxford Dictionary of National Biography. Oxford Dictionary of National Biography (online ed.). Oxford University Press. doi:10.1093/ref:odnb/26748. (Subscription, Wikipedia Library access or UK public library membership required.)

- ^ "DC Motors". Archived from the original on 2013-05-16. Retrieved 2013-10-06.

- ^ Nye 1990, p. 195.

- ^ Historical Encyclopedia of Natural and Mathematical Sciences, Volume 1. Springer. 6 March 2009. ISBN 9783540688310. Archived from the original on 25 January 2021. Retrieved 25 October 2020.

- ^ Wizard: the life and times of Nikola Tesla : biography of a genius. Citadel Press. 1998. p. 24. ISBN 9780806519609. Archived from the original on 2021-08-16. Retrieved 2020-10-25.

- ^ Polyphase electric currents and alternate-current motors. Spon. 1895. p. 87.

- ^ Innovation as a Social Process. Cambridge University Press. 13 February 2003. p. 258. ISBN 9780521533126. Archived from the original on 14 August 2021. Retrieved 25 October 2020.

- ^ "Nikola Tesla The Electrical Genius". Archived from the original on 2015-09-09. Retrieved 2013-10-06.

- ^ "AC Power History and Timeline". Archived from the original on 2013-10-17. Retrieved 2013-10-06.

- ^ Oxford Dictionary of National Biography: Hopkinson, John by T. H. Beare

- ^ Hughes, Thomas Parke (March 1993). Networks of Power. JHU Press. ISBN 9780801846144. Archived from the original on 2020-10-30. Retrieved 2016-05-18.

- ^ Hunter & Bryant 1991, p. 248.

- ^ Arnold Heertje; Mark Perlman, eds. (1990). Evolving Technology and Market Structure: Studies in Schumpeterian Economics. University of Michigan Press. p. 138. ISBN 0472101927. Archived from the original on 2018-05-05. Retrieved 2016-05-18.

- ^ Hunter & Bryant 1991, p. 250.

- ^ McNeil 1990, p. 383.

- ^ Hunter & Bryant 1991, pp. 285–286.

- ^ A. Madrigal (Mar 6, 2010). "June 3, 1889: Power Flows Long-distance". wired.com. Archived from the original on 2017-07-01. Retrieved 2019-01-30.

- ^ "The History of Electrification: List of important early power stations". edisontechcenter.org. Archived from the original on 2018-08-25. Retrieved 2019-01-30.

- ^ Hunter & Bryant 1991, p. 221.

- ^ Hunter & Bryant 1991, pp. 253, Note 18.

- ^ "The Steam Turbine". Birr Castle Demesne. Archived from the original on May 13, 2010.

- ^ Forbes, Ross (17 April 1997). "A marriage took place last week that wedded two technologies possibly 120 years too late". wiki-north-east.co.uk/. The Journal. Retrieved 2009-01-02.[dead link]

- ^ Parsons, Charles A. "The Steam Turbine". Archived from the original on 2011-01-14.

- ^ Hunter & Bryant 1991, p. 336.

- ^ Steam-its generation and use. Babcock & Wilcox. 1913.

- ^ Jerome, Harry (1934). Mechanization in Industry, National Bureau of Economic Research (PDF). Archived (PDF) from the original on 2017-10-18. Retrieved 2018-03-09.

- ^ Shaw, Alan (29 September 2005). "Kelvin to Weir, and on to GB SYS 2005" (PDF). Royal Society of Edinburgh. Archived (PDF) from the original on 4 March 2009. Retrieved 6 October 2013.

- ^ "Survey of Belford 1995". North Northumberland Online. Archived from the original on 2016-04-12. Retrieved 2013-10-06.

- ^ "Lighting by electricity". The National Trust. Archived from the original on 2011-06-29.

- ^ Mazer, Arthur (2007). Electric Power Planning for Regulated and Deregulated Markets. doi:10.1002/9780470130575. ISBN 978-0-470-11882-5.[page needed]

- ^ Moore, Stephen; Simon, Julian (December 15, 1999). The Greatest Century That Ever Was: 25 Miraculous Trends of the last 100 Years (PDF). Policy Analysis (Report). The Cato Institute. p. 20 Fig. 16. No. 364. Archived (PDF) from the original on October 12, 2012. Retrieved June 16, 2011.

- ^ Fresne, Patrick (2024-07-25). "Two Billion Light Up: The South Asian Electric Wave". Gold and Revolution. Retrieved 2024-08-10.

- ^ Peng, W & Pan, J, 2006, Rural Electrification in China: History and Institution, China and World Economy, Vol 14, No. 1, p 77

- ^ Fresne, Patrick (2024-07-25). "Two Billion Light Up: The South Asian Electric Wave". Gold and Revolution. Retrieved 2024-08-10.

- ^ Fresne, Patrick (2024-07-25). "Two Billion Light Up: The South Asian Electric Wave". Gold and Revolution. Retrieved 2024-08-10.

- ^ "Three lessons from China's effort to bring electricity to 1.4 billion people". Dialogue Earth. 24 July 2017. Retrieved 10 August 2024.

- ^ Smil, Vaclav (2006). Transforming the Twentieth Century: Technical Innovations and Their Consequences. Oxford, New York: Oxford University Press. p. 33. ISBN 978-0-19-516875-4. (Maximum turbine size grew to about 200 MW in the 1920s and again to about 1000 MW in 1960. Significant increases in efficiency accompanied each increase in scale.)

General and cited references

- Hunter, Louis C.; Bryant, Lynwood (1991). A History of Industrial Power in the United States, 1730–1930, Vol. 3: The Transmission of Power. Cambridge, Massachusetts: MIT Press. ISBN 0-262-08198-9.

- Hills, Richard Leslie (1993). Power from Steam: A History of the Stationary Steam Engine (paperback ed.). Cambridge University Press. p. 244. ISBN 0-521-45834-X.

- International Energy Agency (2020). World Energy Outlook 2020. International Energy Agency. ISBN 978-92-64-44923-7. Archived from the original on 22 August 2021.

- McNeil, Ian (1990). An Encyclopedia of the History of Technology. London: Routledge. ISBN 0-415-14792-1.

- Nye, David E. (1990). Electrifying America: Social Meanings of a New Technology. Cambridge, MA, USA and London, England: The MIT Press.

- REN21 (2020). Renewables 2020: Global Status Report (PDF). REN21 Secretariat. ISBN 978-3-948393-00-7. Archived (PDF) from the original on 23 September 2020.

- Aalto, Pami; Haukkala, Teresa; Kilpeläinen, Sarah; Kojo, Matti (2021). "Introduction". Electrification. pp. 3–24. doi:10.1016/B978-0-12-822143-3.00006-8. ISBN 978-0-12-822143-3.

External links

The dictionary definition of electrification at Wiktionary

- 20190809 The Truth of Lightning 01 (English)2-An`s Safe Zone

- 20190809 The Truth of Lightning 01 (Japanese)2-An`s Safe Zone

- 20190809 The Truth of Lightning 02 (English)2-An`s Safe Zone

- Zambesi Rapids - Rural electrification with water power. (in French)
