History of science

From Wikipedia - Reading time: 84 min

The history of science covers the development of science from ancient times to the present. It encompasses all three major branches of science: natural, social, and formal.[1] Protoscience, early sciences, and natural philosophies such as alchemy and astrology that existed during the Bronze Age, Iron Age, classical antiquity and the Middle Ages, declined during the early modern period after the establishment of formal disciplines of science in the Age of Enlightenment.

The earliest roots of scientific thinking and practice can be traced to Ancient Egypt and Mesopotamia during the 3rd and 2nd millennia BCE.[2][3] These civilizations' contributions to mathematics, astronomy, and medicine influenced later Greek natural philosophy of classical antiquity, wherein formal attempts were made to provide explanations of events in the physical world based on natural causes.[2][3] After the fall of the Western Roman Empire, knowledge of Greek conceptions of the world deteriorated in Latin-speaking Western Europe during the early centuries (400 to 1000 CE) of the Middle Ages,[4] but continued to thrive in the Greek-speaking Byzantine Empire. Aided by translations of Greek texts, the Hellenistic worldview was preserved and absorbed into the Arabic-speaking Muslim world during the Islamic Golden Age.[5] The recovery and assimilation of Greek works and Islamic inquiries into Western Europe from the 10th to 13th century revived the learning of natural philosophy in the West.[4][6] Traditions of early science were also developed in ancient India and separately in ancient China, the Chinese model having influenced Vietnam, Korea and Japan before Western exploration.[7] Among the Pre-Columbian peoples of Mesoamerica, the Zapotec civilization established their first known traditions of astronomy and mathematics for producing calendars, followed by other civilizations such as the Maya.

Natural philosophy was transformed by the Scientific Revolution that transpired during the 16th and 17th centuries in Europe,[8][9][10] as new ideas and discoveries departed from previous Greek conceptions and traditions.[11][12][13][14] The New Science that emerged was more mechanistic in its worldview, more integrated with mathematics, and more reliable and open as its knowledge was based on a newly defined scientific method.[12][15][16] More "revolutions" in subsequent centuries soon followed. The chemical revolution of the 18th century, for instance, introduced new quantitative methods and measurements for chemistry.[17] In the 19th century, new perspectives regarding the conservation of energy, age of Earth, and evolution came into focus.[18][19][20][21][22][23] And in the 20th century, new discoveries in genetics and physics laid the foundations for new subdisciplines such as molecular biology and particle physics.[24][25] Moreover, industrial and military concerns as well as the increasing complexity of new research endeavors ushered in the era of "big science," particularly after World War II.[24][25][26]

Approaches to history of science

The nature of the history of science is a topic of debate (as is, by implication, the definition of science itself). The history of science is often seen as a linear story of progress,[27] but historians have come to see the story as more complex.[28][29][30] Alfred Edward Taylor has characterised lean periods in the advance of scientific discovery as "periodical bankruptcies of science".[31]

Science is a human activity, and scientific contributions have come from people from a wide range of different backgrounds and cultures. Historians of science increasingly see their field as part of a global history of exchange, conflict and collaboration.[32]

The relationship between science and religion has been variously characterized in terms of "conflict", "harmony", "complexity", and "mutual independence", among others. Events in Europe such as the Galileo affair of the early 17th century – associated with the scientific revolution and the Age of Enlightenment – led scholars such as John William Draper to postulate (c. 1874) a conflict thesis, suggesting that religion and science have been in conflict methodologically, factually and politically throughout history. The "conflict thesis" has since lost favor among the majority of contemporary scientists and historians of science.[33][34][35] However, some contemporary philosophers and scientists, such as Richard Dawkins,[36] still subscribe to this thesis.

Historians have emphasized[37] that trust is necessary for agreement on claims about nature. In this light, the 1660 establishment of the Royal Society and its code of experiment – trustworthy because witnessed by its members – has become an important chapter in the historiography of science.[38] Many people in modern history (typically women and persons of color) were excluded from elite scientific communities and characterized by the science establishment as inferior. Historians in the 1980s and 1990s described the structural barriers to participation and began to recover the contributions of overlooked individuals.[39][40] Historians have also investigated the mundane practices of science such as fieldwork and specimen collection,[41] correspondence,[42] drawing,[43] record-keeping,[44] and the use of laboratory and field equipment.[45]

Prehistory

In prehistoric times, knowledge and technique were passed from generation to generation in an oral tradition. For instance, the domestication of maize for agriculture has been dated to about 9,000 years ago in southern Mexico, before the development of writing systems.[46][47][48] Similarly, archaeological evidence indicates the development of astronomical knowledge in preliterate societies.[49][50]

The oral tradition of preliterate societies had several features, the first of which was its fluidity.[2] New information was constantly absorbed and adjusted to new circumstances or community needs. There were no archives or reports. This fluidity was closely related to the practical need to explain and justify a present state of affairs.[2] Another feature was the tendency to describe the universe as just sky and earth, with a potential underworld. These societies were also prone to identify causes with beginnings, so that explaining something meant giving its historical origin. There was also a reliance on a "medicine man" or "wise woman" for healing, for knowledge of divine or demonic causes of diseases, and in more extreme cases for rituals such as exorcism, divination, songs, and incantations.[2] Finally, there was an inclination to accept unquestioningly explanations that might seem implausible today, without any awareness that such credulity could pose problems.[2]

The development of writing enabled humans to store and communicate knowledge across generations with much greater accuracy. Its invention was a prerequisite for the development of philosophy and later science in ancient times.[2] Moreover, the extent to which philosophy and science would flourish in ancient times depended on the efficiency of a writing system (e.g., use of alphabets).[2]

Ancient Near East

The earliest roots of science can be traced to the Ancient Near East c. 3000–1200 BCE – in particular to Ancient Egypt and Mesopotamia.[2]

Ancient Egypt

Number system and geometry

Starting c. 3000 BCE, the ancient Egyptians developed a numbering system that was decimal in character and oriented their knowledge of geometry toward solving practical problems such as those of surveyors and builders.[2] Their geometry grew out of the need for surveying to preserve the layout and ownership of farmland, which was flooded annually by the Nile. The 3-4-5 right triangle and other geometric rules were used to build rectilinear structures, including the post-and-lintel architecture of Egypt.

Disease and healing

[edit]

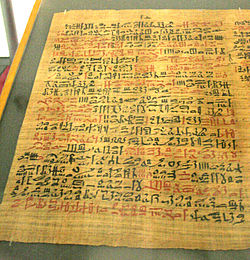

Egypt was also a center of alchemy research for much of the Mediterranean. According to the medical papyri (written c. 2500–1200 BCE), the ancient Egyptians believed that disease was mainly caused by the invasion of bodies by evil forces or spirits.[2] Thus, in addition to medicine, therapies included prayer, incantation, and ritual.[2] The Ebers Papyrus, written c. 1600 BCE, contains medical recipes for treating diseases related to the eyes, mouth, skin, internal organs, and extremities, as well as abscesses, wounds, burns, ulcers, swollen glands, tumors, headaches, and bad breath. The Edwin Smith Papyrus, written at about the same time, contains a surgical manual for treating wounds, fractures, and dislocations. The Egyptians believed that the effectiveness of their medicines depended on the preparation and administration under appropriate rituals.[2] Medical historians believe that ancient Egyptian pharmacology, for example, was largely ineffective.[51] Both the Ebers and Edwin Smith papyri applied the following components to the treatment of disease: examination, diagnosis, treatment, and prognosis,[52] which display strong parallels to the basic empirical method of science and, according to G. E. R. Lloyd,[53] played a significant role in the development of this methodology.

Calendar

The ancient Egyptians even developed an official calendar that contained twelve months of thirty days each, plus five days at the end of the year.[2] Unlike the Babylonian calendar or the ones used in Greek city-states at the time, the official Egyptian calendar was much simpler, as it was fixed and did not take lunar and solar cycles into consideration.[2]
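The arithmetic of this fixed calendar is 12 × 30 + 5 = 365 days. Because the seasonal year is roughly a quarter of a day longer, a calendar with no adjustment slowly drifts against the seasons. The Python sketch below illustrates that drift; it assumes the modern mean tropical year of about 365.2422 days, a figure used here only for comparison and not one the Egyptians used. The function name is hypothetical.

```python
# Sketch: how a fixed 365-day civil year drifts against the seasons.
# Assumption: a modern mean tropical (seasonal) year of about 365.2422 days.

CIVIL_YEAR_DAYS = 12 * 30 + 5      # twelve 30-day months plus five extra days
TROPICAL_YEAR_DAYS = 365.2422      # modern value, for comparison only


def seasonal_drift(years: int) -> float:
    """Days by which civil dates and the seasons have drifted apart after `years` years."""
    return years * (TROPICAL_YEAR_DAYS - CIVIL_YEAR_DAYS)


if __name__ == "__main__":
    print(CIVIL_YEAR_DAYS)
    for y in (4, 100, 1000):
        print(y, round(seasonal_drift(y), 1))
```

Roughly one day of drift accumulates every four years, so over centuries the civil months move noticeably through the seasons.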

Mesopotamia

The ancient Mesopotamians had extensive knowledge about the chemical properties of clay, sand, metal ore, bitumen, stone, and other natural materials, and applied this knowledge to practical use in manufacturing pottery, faience, glass, soap, metals, lime plaster, and waterproofing. Metallurgy required knowledge about the properties of metals. Nonetheless, the Mesopotamians seem to have had little interest in gathering information about the natural world for the mere sake of gathering information and were far more interested in studying the manner in which the gods had ordered the universe. Biology of non-human organisms was generally only written about in the context of mainstream academic disciplines. Animal physiology was studied extensively for the purpose of divination; the anatomy of the liver, which was seen as an important organ in haruspicy, was studied in particularly intensive detail. Animal behavior was also studied for divinatory purposes. Most information about the training and domestication of animals was probably transmitted orally without being written down, but one text dealing with the training of horses has survived.[54]

Mesopotamian medicine

The ancient Mesopotamians made no distinction between "rational science" and magic.[55][56][57] When a person became ill, doctors prescribed magical formulas to be recited as well as medicinal treatments.[55][56][57][54] The earliest medical prescriptions appear in Sumerian during the Third Dynasty of Ur (c. 2112 BCE – c. 2004 BCE).[58] The most extensive Babylonian medical text, however, is the Diagnostic Handbook written by the ummânū, or chief scholar, Esagil-kin-apli of Borsippa,[59] during the reign of the Babylonian king Adad-apla-iddina (1069–1046 BCE).[60] In East Semitic cultures, the main medicinal authority was a kind of exorcist-healer known as an āšipu.[55][56][57] The profession was generally passed down from father to son and was held in extremely high regard.[55] Of less frequent recourse was another kind of healer known as an asu, who corresponds more closely to a modern physician and treated physical symptoms using primarily folk remedies composed of various herbs, animal products, and minerals, as well as potions, enemas, and ointments or poultices. These physicians, who could be either male or female, also dressed wounds, set limbs, and performed simple surgeries. The ancient Mesopotamians also practiced prophylaxis and took measures to prevent the spread of disease.[54]

Astronomy and celestial divination

In Babylonian astronomy, records of the motions of the stars, planets, and the moon are preserved on thousands of clay tablets created by scribes. Astronomical periods identified by Mesopotamian proto-scientists, such as the solar year and the lunar month, are still widely used in Western calendars. Using this data, they developed mathematical methods to compute the changing length of daylight in the course of the year and to predict the appearances and disappearances of the Moon and planets as well as eclipses of the Sun and Moon. Only a few astronomers' names are known, such as that of Kidinnu, a Chaldean astronomer and mathematician. Kidinnu's value for the solar year is still used in today's calendars. Babylonian astronomy was "the first and highly successful attempt at giving a refined mathematical description of astronomical phenomena." According to the historian A. Aaboe, "all subsequent varieties of scientific astronomy, in the Hellenistic world, in India, in Islam, and in the West—if not indeed all subsequent endeavour in the exact sciences—depend upon Babylonian astronomy in decisive and fundamental ways."[61]

To the Babylonians and other Near Eastern cultures, messages from the gods or omens were concealed in all natural phenomena that could be deciphered and interpreted by those who are adept.[2] Hence, it was believed that the gods could speak through all terrestrial objects (e.g., animal entrails, dreams, malformed births, or even the color of a dog urinating on a person) and celestial phenomena.[2] Moreover, Babylonian astrology was inseparable from Babylonian astronomy.

Mathematics

The Mesopotamian cuneiform tablet Plimpton 322, dating to the 18th century BCE, records a number of Pythagorean triplets (3, 4, 5) and (5, 12, 13) ...,[62] hinting that the ancient Mesopotamians might have been aware of the Pythagorean theorem over a millennium before Pythagoras.[63][64][65]
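A Pythagorean triple (a, b, c) satisfies a² + b² = c², the same relation behind the Egyptian 3-4-5 triangle. The Python sketch below checks that property and, for comparison, generates primitive triples using Euclid's formula. Euclid's formula is a later Greek result chosen here only for illustration; it is not a claim about how the scribes of Plimpton 322 produced their table. The function names are hypothetical.

```python
import math


def is_pythagorean(a: int, b: int, c: int) -> bool:
    """True if a, b, c are the sides of a right triangle with hypotenuse c."""
    return a * a + b * b == c * c


def euclid_triples(limit: int) -> list[tuple[int, int, int]]:
    """Primitive triples (a, b, c) with c <= limit, via Euclid's formula.

    For coprime m > n > 0 of opposite parity:
    a = m^2 - n^2, b = 2mn, c = m^2 + n^2.
    """
    triples = []
    m = 2
    while m * m + 1 <= limit:          # smallest c for this m occurs at n = 1
        for n in range(1, m):
            if (m - n) % 2 == 1 and math.gcd(m, n) == 1:
                c = m * m + n * n
                if c <= limit:
                    a, b = m * m - n * n, 2 * m * n
                    triples.append((min(a, b), max(a, b), c))
        m += 1
    return sorted(triples, key=lambda t: t[2])


if __name__ == "__main__":
    print(is_pythagorean(3, 4, 5), is_pythagorean(5, 12, 13))
    print(euclid_triples(30))
```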

Ancient and medieval South Asia and East Asia

Mathematical achievements from Mesopotamia had some influence on the development of mathematics in India, and there were confirmed transmissions of mathematical ideas between India and China, which were bidirectional.[66] Nevertheless, the mathematical and scientific achievements in India and particularly in China occurred largely independently[67] from those of Europe and the confirmed early influences that these two civilizations had on the development of science in Europe in the pre-modern era were indirect, with Mesopotamia and later the Islamic World acting as intermediaries.[66] The arrival of modern science, which grew out of the Scientific Revolution, in India and China and the greater Asian region in general can be traced to the scientific activities of Jesuit missionaries who were interested in studying the region's flora and fauna during the 16th to 17th century.[68]

India

Mathematics

The earliest traces of mathematical knowledge in the Indian subcontinent appear with the Indus Valley Civilisation (c. 3300 – c. 1300 BCE). The people of this civilization made bricks whose dimensions were in the proportion 4:2:1, which is favorable for the stability of a brick structure.[69] They also tried to standardize measurement of length to a high degree of accuracy. They designed a ruler—the Mohenjo-daro ruler—whose length of approximately 1.32 in (34 mm) was divided into ten equal parts. Bricks manufactured in ancient Mohenjo-daro often had dimensions that were integral multiples of this unit of length.[70]

The Bakhshali manuscript contains problems involving arithmetic, algebra and geometry, including mensuration. The topics covered include fractions, square roots, arithmetic and geometric progressions, solutions of simple equations, simultaneous linear equations, quadratic equations and indeterminate equations of the second degree.[71] In the 3rd century BCE, Pingala presents the Pingala-sutras, the earliest known treatise on Sanskrit prosody.[72] He also presents a numerical system by adding one to the sum of place values.[73] Pingala's work also includes material related to the Fibonacci numbers, called mātrāmeru.[74]
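The link between prosody and the Fibonacci numbers is usually explained by counting how many metres of a given total length can be built from short syllables (one beat) and long syllables (two beats). This is the standard modern interpretation of mātrāmeru; the Python sketch below simply counts and lists such patterns, and its function names are hypothetical.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def meter_count(morae: int) -> int:
    """Number of ways to fill `morae` beats with short (1) and long (2) syllables."""
    if morae < 0:
        return 0
    if morae == 0:
        return 1          # the empty pattern
    # A pattern ends either in a short syllable or in a long one.
    return meter_count(morae - 1) + meter_count(morae - 2)


def meter_patterns(morae: int) -> list[str]:
    """List the patterns explicitly, using 'S' for short and 'L' for long."""
    if morae == 0:
        return [""]
    patterns = [p + "S" for p in meter_patterns(morae - 1)]
    if morae >= 2:
        patterns += [p + "L" for p in meter_patterns(morae - 2)]
    return patterns


if __name__ == "__main__":
    print([meter_count(n) for n in range(1, 9)])
    print(meter_patterns(4))
```

Each count is the sum of the two before it, which is exactly the Fibonacci recurrence.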

Indian astronomer and mathematician Aryabhata (476–550), in his Aryabhatiya (499) introduced the sine function in trigonometry and the number 0. In 628, Brahmagupta suggested that gravity was a force of attraction.[75][76] He also lucidly explained the use of zero as both a placeholder and a decimal digit, along with the Hindu–Arabic numeral system now used universally throughout the world. Arabic translations of the two astronomers' texts were soon available in the Islamic world, introducing what would become Arabic numerals to the Islamic world by the 9th century.[77][78]

Narayana Pandita (1340–1400[79]) was an Indian mathematician. Plofker writes that his texts were the most significant Sanskrit mathematics treatises after those of Bhaskara II, other than the Kerala school.[80]: 52 He wrote the Ganita Kaumudi (lit. "Moonlight of mathematics") in 1356 about mathematical operations.[81] The work anticipated many developments in combinatorics.

Between the 14th and 16th centuries, the Kerala school of astronomy and mathematics made significant advances in astronomy and especially mathematics, including fields such as trigonometry and analysis. In particular, Madhava of Sangamagrama led advances in analysis by providing infinite series expansions, including Taylor series, of some trigonometric functions, as well as approximations of pi.[82] Parameshvara (1380–1460) presents a case of the mean value theorem in his commentaries on Govindasvāmi and Bhāskara II.[83] The Yuktibhāṣā was written by Jyeshtadeva in 1530.[84]
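Two series for pi are usually attributed to Madhava: pi/4 = 1 - 1/3 + 1/5 - 1/7 + ... (also called the Madhava-Leibniz series) and the faster-converging pi = √12 × (1 - 1/(3·3) + 1/(5·3²) - 1/(7·3³) + ...). The Python sketch below compares how quickly their partial sums approach pi. It illustrates the series themselves, not the correction terms or hand-computation techniques of the Kerala school, and the function names are hypothetical.

```python
import math


def madhava_leibniz(terms: int) -> float:
    """pi from pi/4 = 1 - 1/3 + 1/5 - ... (converges slowly)."""
    return 4 * sum((-1) ** k / (2 * k + 1) for k in range(terms))


def madhava_sqrt12(terms: int) -> float:
    """pi from pi = sqrt(12) * sum((-1)^k / ((2k + 1) * 3^k)) (converges quickly)."""
    return math.sqrt(12) * sum((-1) ** k / ((2 * k + 1) * 3 ** k) for k in range(terms))


if __name__ == "__main__":
    for n in (10, 100, 10_000):
        print(n, madhava_leibniz(n))
    print(21, madhava_sqrt12(21))
    print(math.pi)
```

The first series needs thousands of terms for a few correct decimals, whereas each term of the second shrinks by roughly a factor of three, so a couple of dozen terms already give about ten correct decimals.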

Astronomy

The first textual mention of astronomical concepts comes from the Vedas, religious literature of India.[85] According to Sarma (2008): "One finds in the Rigveda intelligent speculations about the genesis of the universe from nonexistence, the configuration of the universe, the spherical self-supporting earth, and the year of 360 days divided into 12 equal parts of 30 days each with a periodical intercalary month."[85]

The first 12 chapters of the Siddhanta Shiromani, written by Bhāskara in the 12th century, cover topics such as: mean longitudes of the planets; true longitudes of the planets; the three problems of diurnal rotation; syzygies; lunar eclipses; solar eclipses; latitudes of the planets; risings and settings; the moon's crescent; conjunctions of the planets with each other; conjunctions of the planets with the fixed stars; and the patas of the sun and moon. The 13 chapters of the second part cover the nature of the sphere, as well as significant astronomical and trigonometric calculations based on it.

In the Tantrasangraha treatise, Nilakantha Somayaji updated the Aryabhatan model for the interior planets, Mercury and Venus, and the equation that he specified for the center of these planets was more accurate than those in European or Islamic astronomy until the time of Johannes Kepler in the 17th century.[86] Jai Singh II of Jaipur constructed five observatories called Jantar Mantars, in New Delhi, Jaipur, Ujjain, Mathura and Varanasi; they were completed between 1724 and 1735.[87]

Grammar

Some of the earliest linguistic activities can be found in Iron Age India (1st millennium BCE) with the analysis of Sanskrit for the purpose of the correct recitation and interpretation of Vedic texts. The most notable grammarian of Sanskrit was Pāṇini (c. 520–460 BCE), whose grammar formulates close to 4,000 rules for Sanskrit. Inherent in his analytic approach are the concepts of the phoneme, the morpheme and the root. The Tolkāppiyam text, composed in the early centuries of the common era,[88] is a comprehensive text on Tamil grammar, which includes sutras on orthography, phonology, etymology, morphology, semantics, prosody, sentence structure and the significance of context in language.

Medicine

Findings from Neolithic graveyards in what is now Pakistan show evidence of proto-dentistry among an early farming culture.[89] The ancient text Suśrutasamhitā of Suśruta describes procedures on various forms of surgery, including rhinoplasty, the repair of torn ear lobes, perineal lithotomy, cataract surgery, and several other excisions and other surgical procedures.[90][91] The Charaka Samhita of Charaka describes ancient theories on human body, etiology, symptomology and therapeutics for a wide range of diseases.[92] It also includes sections on the importance of diet, hygiene, prevention, medical education, and the teamwork of a physician, nurse and patient necessary for recovery to health.[93][94][95]

Politics and state

The Arthaśāstra is an ancient Indian treatise on statecraft, economic policy and military strategy attributed to Kautilya[96] and Viṣhṇugupta,[97] who are traditionally identified with Chāṇakya (c. 350–283 BCE). In this treatise, the behaviors and relationships of the people, the King, the State, the Government Superintendents, Courtiers, Enemies, Invaders, and Corporations are analyzed and documented. Roger Boesche describes the Arthaśāstra as "a book of political realism, a book analyzing how the political world does work and not very often stating how it ought to work, a book that frequently discloses to a king what calculating and sometimes brutal measures he must carry out to preserve the state and the common good."[98]

Logic

The development of Indian logic dates back to the Chandahsutra of Pingala and anviksiki of Medhatithi Gautama (c. 6th century BCE); the Sanskrit grammar rules of Pāṇini (c. 5th century BCE); the Vaisheshika school's analysis of atomism (c. 6th century BCE to 2nd century BCE); the analysis of inference by Gotama (c. 6th century BCE to 2nd century CE), founder of the Nyaya school of Hindu philosophy; and the tetralemma of Nagarjuna (c. 2nd century CE).

Indian logic stands as one of the three original traditions of logic, alongside the Greek and the Chinese logic. The Indian tradition continued to develop through early to modern times, in the form of the Navya-Nyāya school of logic.

In the 2nd century, the Buddhist philosopher Nagarjuna refined the Catuskoti form of logic. The Catuskoti is often glossed as Tetralemma (Greek), the name for a largely comparable, but not equivalent, 'four corner argument' within the tradition of Classical logic.

Navya-Nyāya developed a sophisticated language and conceptual scheme that allowed it to raise, analyse, and solve problems in logic and epistemology. It systematised all the Nyāya concepts into four main categories: sense or perception (pratyakṣa), inference (anumāna), comparison or similarity (upamāna), and testimony (sound or word; śabda).

China

Chinese mathematics

From the earliest times[citation needed] the Chinese used a positional decimal system on counting boards in order to calculate. To express 10, a single rod is placed in the second box from the right. The spoken language uses a system similar to English: e.g. four thousand two hundred and seven. No symbol was used for zero. By the 1st century BCE, negative numbers and decimal fractions were in use, and The Nine Chapters on the Mathematical Art included methods for extracting higher-order roots by Horner's method, for solving linear equations, and for solving problems with Pythagoras' theorem. Cubic equations were solved in the Tang dynasty, and solutions of equations of order higher than 3 appeared in print in 1245 CE by Ch'in Chiu-shao. Pascal's triangle for binomial coefficients was described around 1100 by Jia Xian.[99]
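Each row of the triangle described by Jia Xian lists the binomial coefficients of (a + b)ⁿ, and every interior entry is the sum of the two entries above it. The Python sketch below builds the rows with that additive rule; it illustrates the triangle itself rather than Jia Xian's own procedure, and the function name is hypothetical.

```python
def pascal_rows(n: int) -> list[list[int]]:
    """First n rows of the binomial-coefficient triangle, each built from the row above."""
    rows = [[1]]
    for _ in range(1, n):
        prev = rows[-1]
        # Each interior entry is the sum of the two entries above it.
        rows.append([1] + [prev[i] + prev[i + 1] for i in range(len(prev) - 1)] + [1])
    return rows[:n]


if __name__ == "__main__":
    for row in pascal_rows(6):
        print(row)
```

The row [1, 4, 6, 4, 1], for example, gives the coefficients of (a + b)⁴ = a⁴ + 4a³b + 6a²b² + 4ab³ + b⁴.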

The first attempts at an axiomatization of geometry appear in the Mohist canon in 330 BCE. Liu Hui developed algebraic methods in geometry in the 3rd century CE and also calculated pi to 5 significant figures. In 480, Zu Chongzhi improved on this by discovering the ratio 355/113, which remained the most accurate value for 1200 years.
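Liu Hui approximated the circle with inscribed regular polygons, repeatedly doubling the number of sides. The Python sketch below follows that doubling idea but, as a simplifying assumption, uses the half-perimeter of the inscribed polygon in a unit circle instead of his area-based calculation. It also compares the result with Zu Chongzhi's ratio 355/113. The function and constant names are hypothetical.

```python
import math


def inscribed_polygon_pi(doublings: int) -> tuple[int, float]:
    """Approximate pi by half the perimeter of a regular polygon inscribed in a unit circle.

    Starts from a hexagon (side = radius = 1) and doubles the number of sides
    `doublings` times. Returns (number of sides, approximation).
    """
    sides, s = 6, 1.0
    for _ in range(doublings):
        # Side of the 2n-gon from the side s of the n-gon (unit radius):
        # sqrt(2 - sqrt(4 - s^2)), rewritten to avoid subtracting nearly equal numbers.
        s = s / math.sqrt(2 + math.sqrt(4 - s * s))
        sides *= 2
    return sides, sides * s / 2


ZU_CHONGZHI_RATIO = 355 / 113

if __name__ == "__main__":
    for d in (0, 5, 9):
        print(inscribed_polygon_pi(d))
    print(ZU_CHONGZHI_RATIO, math.pi)
```

After nine doublings (a 3,072-sided polygon) the estimate agrees with pi to five significant figures, while 355/113 differs from pi by less than 3 × 10⁻⁷.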

Astronomical observations

Astronomical observations from China constitute the longest continuous sequence from any civilization and include records of sunspots (112 records from 364 BCE), supernovas (1054), and lunar and solar eclipses. By the 12th century, Chinese astronomers could predict eclipses with reasonable accuracy, but this knowledge was lost during the Ming dynasty, so that the Jesuit Matteo Ricci gained much favor in 1601 with his predictions.[101][incomplete short citation] By 635, Chinese astronomers had observed that the tails of comets always point away from the sun.

From antiquity, the Chinese used an equatorial system for describing the skies and a star map from 940 was drawn using a cylindrical (Mercator) projection. The use of an armillary sphere is recorded from the 4th century BCE and a sphere permanently mounted in equatorial axis from 52 BCE. In 125 CE Zhang Heng used water power to rotate the sphere in real time. This included rings for the meridian and ecliptic. By 1270 they had incorporated the principles of the Arab torquetum.

In the Song Empire (960–1279) of Imperial China, Chinese scholar-officials unearthed, studied, and cataloged ancient artifacts.

Inventions

To better prepare for calamities, Zhang Heng invented a seismometer in 132 CE which provided an instant alert to authorities in the capital Luoyang that an earthquake had occurred in a location indicated by a specific cardinal or ordinal direction.[102][103] Although no tremors could be felt in the capital when Zhang told the court that an earthquake had just occurred in the northwest, a message came soon afterwards that an earthquake had indeed struck 400 to 500 km (250 to 310 mi) northwest of Luoyang (in what is now modern Gansu).[104] Zhang called his device the 'instrument for measuring the seasonal winds and the movements of the Earth' (Houfeng didong yi 候风地动仪), so named because he and others thought that earthquakes were most likely caused by the enormous compression of trapped air.[105]

There are many notable contributors to early Chinese disciplines, inventions, and practices throughout the ages. One of the best examples is the medieval Song Chinese Shen Kuo (1031–1095), a polymath and statesman who was the first to describe the magnetic-needle compass used for navigation, discovered the concept of true north, improved the design of the astronomical gnomon, armillary sphere, sight tube, and clepsydra, and described the use of drydocks to repair boats. After observing the natural process of the inundation of silt and the discovery of marine fossils in the Taihang Mountains (hundreds of miles from the Pacific Ocean), Shen Kuo devised a theory of land formation, or geomorphology. He also adopted a theory of gradual climate change in regions over time, after observing petrified bamboo found underground at Yan'an, Shaanxi. Without Shen Kuo's writing,[106] the architectural works of Yu Hao would be little known, as would the inventor of movable type printing, Bi Sheng (990–1051). Shen's contemporary Su Song (1020–1101) was also a brilliant polymath: an astronomer who created a celestial atlas of star maps, wrote a treatise related to botany, zoology, mineralogy, and metallurgy, and erected a large astronomical clocktower in Kaifeng in 1088. To operate the crowning armillary sphere, his clocktower featured an escapement mechanism and the world's oldest known use of an endless power-transmitting chain drive.[107]

The Jesuit China missions of the 16th and 17th centuries "learned to appreciate the scientific achievements of this ancient culture and made them known in Europe. Through their correspondence European scientists first learned about the Chinese science and culture."[108] Western academic thought on the history of Chinese technology and science was galvanized by the work of Joseph Needham and the Needham Research Institute. Among the technological accomplishments of China were, according to the British scholar Needham, the water-powered celestial globe (Zhang Heng),[109] dry docks, sliding calipers, the double-action piston pump,[109] the blast furnace,[110] the multi-tube seed drill, the wheelbarrow,[110] the suspension bridge,[110] the winnowing machine,[109] gunpowder,[110] the raised-relief map, toilet paper,[110] the efficient harness,[109] along with contributions in logic, astronomy, medicine, and other fields.

However, cultural factors prevented these Chinese achievements from developing into "modern science". According to Needham, it may have been the religious and philosophical framework of Chinese intellectuals which made them unable to accept the ideas of laws of nature:

It was not that there was no order in nature for the Chinese, but rather that it was not an order ordained by a rational personal being, and hence there was no conviction that rational personal beings would be able to spell out in their lesser earthly languages the divine code of laws which he had decreed aforetime. The Taoists, indeed, would have scorned such an idea as being too naïve for the subtlety and complexity of the universe as they intuited it.[111]

Pre-Columbian Mesoamerica

During the Middle Formative Period (c. 900 BCE – c. 300 BCE) of Pre-Columbian Mesoamerica, the Zapotec civilization, heavily influenced by the Olmec civilization, established the first known full writing system of the region (possibly predated by the Olmec Cascajal Block),[112] as well as the first known astronomical calendar in Mesoamerica.[113][114] Following a period of initial urban development in the Preclassical period, the Classic Maya civilization (c. 250 CE – c. 900 CE) built on the shared heritage of the Olmecs by developing the most sophisticated systems of writing, astronomy, calendrical science, and mathematics among Mesoamerican peoples.[113] The Maya developed a positional numeral system with a base of 20 that included the use of zero for constructing their calendars.[115][116] Maya writing, which was developed by 200 BCE, widespread by 100 BCE, and rooted in Olmec and Zapotec scripts, contains easily discernible calendar dates in the form of logographs representing numbers, coefficients, and calendar periods amounting to 20 days and even 20 years for tracking social, religious, political, and economic events in 360-day years.[117]
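In a base-20 positional system each place is worth twenty times the place to its right, and a zero marks an empty place. The calendrical count described above departs from pure base 20 in one place so that a "year" of 360 days fits the scheme. The Python sketch below shows both conversions; the function names are hypothetical, and the period names used in the comments (kin, winal, tun, katun, baktun) are the conventional modern names for the periods of the Maya Long Count.

```python
def to_vigesimal(n: int) -> list[int]:
    """Digits of a non-negative integer in pure base 20, most significant first."""
    if n == 0:
        return [0]          # zero is an explicit digit in a positional system
    digits = []
    while n > 0:
        n, r = divmod(n, 20)
        digits.append(r)
    return digits[::-1]


# Long Count place values in days: baktun, katun, tun, winal, kin.
# One tun is 18 winal (18 x 20 = 360 days) rather than 20 x 20 = 400 days;
# every other step is a factor of 20.
LONG_COUNT_UNITS = (144_000, 7_200, 360, 20, 1)


def to_long_count(days: int) -> tuple[int, ...]:
    """Express a day count as Long Count digits (baktun.katun.tun.winal.kin)."""
    digits = []
    for unit in LONG_COUNT_UNITS:
        q, days = divmod(days, unit)
        digits.append(q)
    return tuple(digits)


if __name__ == "__main__":
    print(to_vigesimal(400), to_vigesimal(365))
    print(to_long_count(365), to_long_count(13 * 144_000))
```

The same day count therefore has different digits in the two schemes: 365 is 18.5 in pure base 20 but 0.0.1.0.5 in the calendrical count.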

Classical antiquity and Greco-Roman science

The contributions of the Ancient Egyptians and Mesopotamians in the areas of astronomy, mathematics, and medicine entered and shaped Greek natural philosophy of classical antiquity, whereby formal attempts were made to provide explanations of events in the physical world based on natural causes.[2][3] Inquiries were also aimed at practical goals such as establishing a reliable calendar or determining how to cure a variety of illnesses. The ancient people who were considered the first scientists may have thought of themselves as natural philosophers, as practitioners of a skilled profession (for example, physicians), or as followers of a religious tradition (for example, temple healers).

Pre-Socratics

The earliest Greek philosophers, known as the pre-Socratics,[118] provided competing answers to the question found in the myths of their neighbors: "How did the ordered cosmos in which we live come to be?"[119] The pre-Socratic philosopher Thales (640–546 BCE) of Miletus,[120] identified by later authors such as Aristotle as the first of the Ionian philosophers,[2] postulated non-supernatural explanations for natural phenomena. For example, he proposed that land floats on water and that earthquakes are caused by the agitation of the water upon which the land floats, rather than by the god Poseidon.[121] Thales' student Pythagoras of Samos founded the Pythagorean school, which investigated mathematics for its own sake and was the first to postulate that the Earth is spherical in shape.[122] Leucippus (5th century BCE) introduced atomism, the theory that all matter is made of indivisible, imperishable units called atoms. This theory was greatly expanded by his pupil Democritus and later by Epicurus.

Natural philosophy


Plato and Aristotle produced the first systematic discussions of natural philosophy, which did much to shape later investigations of nature. Their development of deductive reasoning was of particular importance and usefulness to later scientific inquiry. Plato founded the Platonic Academy in 387 BCE, whose motto was "Let none unversed in geometry enter here," and which produced many notable philosophers. Plato's student Aristotle introduced empiricism and the notion that universal truths can be arrived at via observation and induction, thereby laying the foundations of the scientific method.[123] Aristotle also produced many biological writings that were empirical in nature, focusing on biological causation and the diversity of life. He made countless observations of nature, especially of the habits and attributes of plants and animals on Lesbos, classified more than 540 animal species, and dissected at least 50.[124] Aristotle's writings profoundly influenced subsequent Islamic and European scholarship, though they were eventually superseded in the Scientific Revolution.[125][126]

Aristotle also contributed to theories of the elements and the cosmos. He held that the celestial bodies (such as the planets and the Sun) were set in motion by an unmoved mover. Aristotle tried to explain everything through mathematics and physics, but he sometimes accounted for phenomena such as the motion of celestial bodies by appeal to a higher power such as God, since he lacked the technological means that might have explained that motion.[127] Aristotle also held many views on the elements. He believed that everything was derived from the elements earth, water, air, fire, and, lastly, aether. Aether was a celestial element and therefore made up the matter of the celestial bodies.[128] Each of earth, water, air, and fire was derived from a combination of two of the qualities hot, wet, cold, and dry, and each had its own natural place and motion. In their natural order, earth lies innermost, closest to the center, followed by water, air, fire, and finally aether. Beyond the makeup of all things, Aristotle also theorized about why things did not return to their natural place. He understood that, in their natural state, water sits above earth, air above water, and fire above air. He explained that although all elements must return to their natural place, the human body and other living things constrain the elements that compose them, preventing those elements from returning to their natural state.[129]
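The scheme of paired qualities and ordered natural places described above can be encoded as a small lookup table. The Python below is a toy sketch for illustration only, not a reconstruction of Aristotle's own reasoning; it assumes the conventional pairing found in his On Generation and Corruption (fire hot and dry, air hot and wet, water cold and wet, earth cold and dry), which the paragraph above does not spell out, and the names `element_for` and `rests_above` are hypothetical.

```python
"""Toy model of Aristotle's four sublunary elements (illustrative only)."""

# Assumed conventional pairing: each element combines one of hot/cold
# with one of wet/dry.
ELEMENT_QUALITIES = {
    "earth": frozenset({"cold", "dry"}),
    "water": frozenset({"cold", "wet"}),
    "air": frozenset({"hot", "wet"}),
    "fire": frozenset({"hot", "dry"}),
}

# Natural places, from the center outward. Aether is the celestial
# element and has no pair of sublunary qualities.
NATURAL_ORDER = ["earth", "water", "air", "fire", "aether"]


def element_for(q1: str, q2: str) -> str:
    """Return the element made of the two given qualities."""
    wanted = frozenset({q1, q2})
    for name, qualities in ELEMENT_QUALITIES.items():
        if qualities == wanted:
            return name
    # Opposites such as hot and cold cannot combine into an element.
    raise ValueError(f"no element combines {q1!r} and {q2!r}")


def rests_above(a: str, b: str) -> bool:
    """True if element a's natural place lies above element b's."""
    return NATURAL_ORDER.index(a) > NATURAL_ORDER.index(b)


if __name__ == "__main__":
    print(element_for("dry", "hot"))    # fire
    print(rests_above("air", "water"))  # True
```

Using frozensets makes the lookup independent of the order in which the two qualities are given, matching the idea that an element is simply a combination of two qualities.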

The important legacy of this period included substantial advances in factual knowledge, especially in anatomy, zoology, botany, mineralogy, geography, mathematics and astronomy; an awareness of the importance of certain scientific problems, especially those related to the problem of change and its causes; and a recognition of the methodological importance of applying mathematics to natural phenomena and of undertaking empirical research.[130][120] In the Hellenistic age, scholars frequently employed the principles developed in earlier Greek thought, namely the application of mathematics and deliberate empirical research, in their scientific investigations.[131] Thus, clear unbroken lines of influence lead from ancient Greek and Hellenistic philosophers, to medieval Muslim philosophers and scientists, to the European Renaissance and Enlightenment, to the secular sciences of the modern day. Neither reason nor inquiry began with the ancient Greeks, but the Socratic method did, and it, along with the idea of Forms, led to great advances in geometry, logic, and the natural sciences. According to Benjamin Farrington, former professor of Classics at Swansea University:

- "Men were weighing for thousands of years before Archimedes worked out the laws of equilibrium; they must have had practical and intuitional knowledge of the principles involved. What Archimedes did was to sort out the theoretical implications of this practical knowledge and present the resulting body of knowledge as a logically coherent system."

and again:

- "With astonishment we find ourselves on the threshold of modern science. Nor should it be supposed that by some trick of translation the extracts have been given an air of modernity. Far from it. The vocabulary of these writings and their style are the source from which our own vocabulary and style have been derived."[132]

Greek astronomy


The astronomer Aristarchus of Samos was the first known person to propose a heliocentric model of the Solar System, while the geographer Eratosthenes accurately calculated the circumference of the Earth. Hipparchus (c. 190 – c. 120 BCE) produced the first systematic star catalog. The level of achievement in Hellenistic astronomy and engineering is impressively shown by the Antikythera mechanism (150–100 BCE), an analog computer for calculating the position of planets. Technological artifacts of similar complexity did not reappear until the 14th century, when mechanical astronomical clocks appeared in Europe.[133]

Hellenistic medicine

There was no defined societal structure for healthcare during the age of Hippocrates, when people still relied largely on religious explanations of illness.[134] Hippocrates introduced the first healthcare system based on science and clinical protocols.[135] His theories about physics and medicine helped pave the way for an organized medical structure in society.[135] In medicine, Hippocrates (c. 460–370 BCE) and his followers were the first to describe many diseases and medical conditions, and they developed the Hippocratic Oath for physicians, which is still relevant and in use today. Hippocrates' ideas are expressed in the Hippocratic Corpus, a collection that describes medical philosophies and how disease and lifestyle choices affect the physical body.[135] Hippocrates shaped the Western professional relationship between physician and patient.[136] He is also known as "the Father of Medicine".[135] Herophilos (335–280 BCE) was the first to base his conclusions on dissection of the human body and to describe the nervous system. Galen (129 – c. 200 CE) performed many audacious operations, including brain and eye surgeries, that were not tried again for almost two millennia.

Greek mathematics


In Hellenistic Egypt, the mathematician Euclid laid down the foundations of mathematical rigor and introduced the concepts of definition, axiom, theorem and proof still in use today in his Elements, considered the most influential textbook ever written.[138] Archimedes, considered one of the greatest mathematicians of all time,[139] is credited with using the method of exhaustion to calculate the area under the arc of a parabola with the summation of an infinite series, and with giving a remarkably accurate approximation of pi.[140] In physics, he is also known for laying the foundations of hydrostatics and statics and for explaining the principle of the lever.
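The infinite-series argument behind the parabola result can be written out in a few lines. Assuming the standard presentation of Archimedes' Quadrature of the Parabola, let T be the area of the triangle inscribed in a parabolic segment with the same base and vertex; each further stage of inscribed triangles adds one quarter of the area added by the stage before, so the area A of the segment is:

```latex
A = T\left(1 + \frac{1}{4} + \frac{1}{16} + \cdots\right)
  = T \sum_{n=0}^{\infty} \left(\frac{1}{4}\right)^{n}
  = T \cdot \frac{1}{1 - \frac{1}{4}}
  = \frac{4}{3}\,T

% Archimedes' finite form, which avoids an actual infinite sum:
\sum_{k=0}^{n} \left(\frac{1}{4}\right)^{k} + \frac{1}{3}\left(\frac{1}{4}\right)^{n} = \frac{4}{3}
```

The second line is the finite identity on which the method of exhaustion rests: by showing that the remainder can be made smaller than any given area, Archimedes ruled out every value for the segment other than 4T/3 without treating the infinite sum as a completed quantity.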

Other developments

Theophrastus wrote some of the earliest descriptions of plants and animals, establishing the first taxonomy, and looked at minerals in terms of their properties, such as hardness. Pliny the Elder, a successor to Theophrastus, produced one of the largest encyclopedias of the natural world in 77 CE. For example, he accurately described the octahedral shape of the diamond and noted that engravers used diamond dust to cut and polish other gems because of its great hardness. His recognition of the importance of crystal shape is a precursor to modern crystallography, while his notes on other minerals presage mineralogy. He recognized that other minerals have characteristic crystal shapes, but in one example he confused the crystal habit with the work of lapidaries. Pliny was the first to show that amber was a resin from pine trees, on the evidence of insects trapped within it.[141][142]

The development of archaeology has its roots in history and in those who were interested in the past, such as kings and queens who wanted to show the past glories of their respective nations. The 5th-century-BCE Greek historian Herodotus was the first scholar to systematically study the past and perhaps the first to examine artifacts.

Greek scholarship under Roman rule

During the rule of Rome, famous historians such as Polybius, Livy and Plutarch documented the rise of the Roman Republic, and the organization and histories of other nations, while statesmen like Julius Caesar, Cicero, and others provided examples of the politics of the republic and Rome's empire and wars. The study of politics during this age was oriented toward understanding history, understanding methods of governing, and describing the operation of governments.

The Roman conquest of Greece did not diminish learning and culture in the Greek provinces.[143] On the contrary, the appreciation of Greek achievements in literature, philosophy, politics, and the arts by Rome's upper class coincided with the increased prosperity of the Roman Empire. Greek settlements had existed in Italy for centuries and the ability to read and speak Greek was not uncommon in Italian cities such as Rome.[143] Moreover, the settlement of Greek scholars in Rome, whether voluntarily or as slaves, gave Romans access to teachers of Greek literature and philosophy. Conversely, young Roman scholars also studied abroad in Greece and, upon their return to Rome, were able to convey Greek achievements to their Latin leadership.[143] Despite the translation of a few Greek texts into Latin, Roman scholars who aspired to the highest level did so using the Greek language. The Roman statesman and philosopher Cicero (106 – 43 BCE) was a prime example. He had studied under Greek teachers in Rome and then in Athens and Rhodes. He mastered considerable portions of Greek philosophy, wrote Latin treatises on several topics, and even wrote Greek commentaries on Plato's Timaeus as well as a Latin translation of it, which has not survived.[143]

In the beginning, support for scholarship in Greek knowledge was almost entirely funded by the Roman upper class.[143] There were all sorts of arrangements, ranging from a talented scholar being attached to a wealthy household to owning educated Greek-speaking slaves.[143] In exchange, scholars who succeeded at the highest level had an obligation to provide advice or intellectual companionship to their Roman benefactors, or even to take care of their libraries. The less fortunate or accomplished ones would teach their children or perform menial tasks.[143] The level of detail and sophistication of Greek knowledge was adjusted to suit the interests of their Roman patrons. That meant popularizing Greek knowledge by presenting information that was of practical value, such as medicine or logic (for courts and politics), but excluding subtle details of Greek metaphysics and epistemology. Beyond the basics, the Romans did not value natural philosophy and considered it an amusement for leisure time.[143]

Commentaries and encyclopedias were the means by which Greek knowledge was popularized for Roman audiences.[143] The Greek scholar Posidonius (c. 135 – c. 51 BCE), a native of Syria, wrote prolifically on history, geography, moral philosophy, and natural philosophy. He greatly influenced Latin writers such as Marcus Terentius Varro (116–27 BCE), who wrote the encyclopedia Nine Books of Disciplines, which covered nine arts: grammar, rhetoric, logic, arithmetic, geometry, astronomy, musical theory, medicine, and architecture.[143] The Disciplines became a model for subsequent Roman encyclopedias and Varro's nine liberal arts were considered suitable education for a Roman gentleman. The first seven of Varro's nine arts would later define the seven liberal arts of medieval schools.[143] The pinnacle of the popularization movement was the Roman scholar Pliny the Elder (23/24–79 CE), a native of northern Italy, who wrote several books on the history of Rome and grammar. His most famous work was his voluminous Natural History.[143]

After the death of the Roman Emperor Marcus Aurelius in 180 CE, the favorable conditions for scholarship and learning in the Roman Empire were upended by political unrest, civil war, urban decay, and looming economic crisis.[143] Around 250 CE, barbarians began attacking and invading the Roman frontiers. These combined events led to a general decline in political and economic conditions. The living standards of the Roman upper class were severely impacted, and their loss of leisure diminished scholarly pursuits.[143] Moreover, during the 3rd and 4th centuries CE, the Roman Empire was administratively divided into two halves: Greek East and Latin West. These administrative divisions weakened the intellectual contact between the two regions.[143] Eventually, both halves went their separate ways, with the Greek East becoming the Byzantine Empire.[143] Christianity was also steadily expanding during this time and soon became a major patron of education in the Latin West. Initially, the Christian church adopted some of the reasoning tools of Greek philosophy in the 2nd and 3rd centuries CE to defend its faith against sophisticated opponents.[143] Nevertheless, Greek philosophy received a mixed reception from leaders and adherents of the Christian faith.[143] Some, such as Tertullian (c. 155 – c. 230 CE), were vehemently opposed to philosophy, denouncing it as heretical. Others, such as Augustine of Hippo (354–430 CE), were ambivalent and defended Greek philosophy and science as the best ways to understand the natural world, and therefore treated it as a handmaiden (or servant) of religion.[143] Education in the West began its gradual decline, along with the rest of the Western Roman Empire, due to invasions by Germanic tribes, civil unrest, and economic collapse. Contact with the classical tradition was lost in specific regions such as Roman Britain and northern Gaul but continued to exist in Rome, northern Italy, southern Gaul, Spain, and North Africa.[143]

Middle Ages

In the Middle Ages, classical learning continued in three major linguistic cultures and civilizations: Greek (the Byzantine Empire), Arabic (the Islamic world), and Latin (Western Europe).

Byzantine Empire


Preservation of Greek heritage

The fall of the Western Roman Empire led to a deterioration of the classical tradition in the western part (or Latin West) of Europe during the 5th century. In contrast, the Byzantine Empire resisted the barbarian attacks and preserved and improved upon classical learning.[144]

While the Byzantine Empire still held learning centers such as Constantinople, Alexandria and Antioch, Western Europe's knowledge was concentrated in monasteries until the development of medieval universities in the 12th century. The curriculum of monastic schools included the study of the few available ancient texts and of new works on practical subjects like medicine[145] and timekeeping.[146]

In the sixth century in the Byzantine Empire, Isidore of Miletus compiled Archimedes' mathematical works in the Archimedes Palimpsest, where all Archimedes' mathematical contributions were collected and studied.

John Philoponus, another Byzantine scholar, was the first to question Aristotle's teaching of physics, introducing the theory of impetus.[147][148] The theory of impetus was an auxiliary or secondary theory of Aristotelian dynamics, put forth initially to explain projectile motion against gravity. It is the intellectual precursor to the concepts of inertia, momentum and acceleration in classical mechanics.[149] The works of John Philoponus inspired Galileo Galilei ten centuries later.[150][151]

Collapse

During the Fall of Constantinople in 1453, a number of Greek scholars fled to northern Italy, where they helped fuel the era later commonly known as the "Renaissance", bringing with them a great deal of classical learning, including an understanding of botany, medicine, and zoology. Byzantium also gave the West important inputs: John Philoponus' criticism of Aristotelian physics, and the works of Dioscorides.[152]

Islamic world


The Islamic Golden Age (8th–14th century CE) was a period in which commerce thrived and new ideas and technologies emerged, such as papermaking imported from China, which made the copying of manuscripts inexpensive.

Translations and Hellenization

The eastward transmission of Greek heritage to Western Asia was a slow and gradual process that spanned over a thousand years, from the Asian conquests of Alexander the Great in 335 BCE to the founding of Islam in the 7th century CE.[5] The birth and expansion of Islam during the 7th century was quickly followed by its Hellenization. Knowledge of Greek conceptions of the world was preserved and absorbed into Islamic theology, law, culture, and commerce, aided by the translation of traditional Greek texts and some Syriac intermediary sources into Arabic during the 8th–9th century.

Education and scholarly pursuits


Madrasas were centers for many different religious and scientific studies and were the culmination of several institutions: mosques centered on religious studies, housing for out-of-town visitors, and, finally, educational institutions focused on the natural sciences.[153] Unlike students at Western universities, students at a madrasa would learn from one specific teacher, who would issue a certificate called an Ijazah at the completion of their studies. An Ijazah differs from a Western university degree in many ways: it is issued by a single person rather than an institution, and it is not an individual degree declaring adequate knowledge over broad subjects but rather a license to teach and pass on a very specific set of texts.[154] Women were also allowed to attend madrasas, as both students and teachers, something not seen in Western higher education until the 1800s.[154] Madrasas were more than just academic centers. The Suleymaniye Mosque, for example, one of the best-known madrasa complexes, was built by Suleiman the Magnificent in the 16th century.[155] It was home to a hospital and medical college, a kitchen, and a children's school, and it also served as a temporary home for travelers.[155]

Higher education at a madrasa (or college) was focused on Islamic law and religious science, and students had to engage in self-study for everything else.[5] Despite occasional theological backlash, many Islamic scholars of science were able to conduct their work in relatively tolerant urban centers (e.g., Baghdad and Cairo) and were protected by powerful patrons.[5] They could also travel freely and exchange ideas, as there were no political barriers within the unified Islamic state.[5] Islamic science during this time was primarily focused on the correction, extension, articulation, and application of Greek ideas to new problems.[5]

Advancements in mathematics

Most of the achievements by Islamic scholars during this period were in mathematics.[5] Arabic mathematics was a direct descendant of Greek and Indian mathematics.[5] For instance, what are now known as Arabic numerals originally came from India, but Muslim mathematicians made several key refinements to the number system, such as the introduction of decimal point notation. The mathematician Muhammad ibn Musa al-Khwarizmi (c. 780–850) gave his name to the concept of the algorithm, while the term algebra is derived from al-jabr, the beginning of the title of one of his publications.[156] Islamic trigonometry continued from the works of Ptolemy's Almagest and the Indian Siddhanta, adding trigonometric functions, drawing up tables, and applying trigonometry to spheres and planes. Many of their engineers, instrument makers, and surveyors contributed books in applied mathematics. It was in astronomy that Islamic mathematicians made their greatest contributions. Al-Battani (c. 858–929) improved the measurements of Hipparchus preserved in Ptolemy's Hē Megalē Syntaxis (The Great Treatise), known in translation as the Almagest. Al-Battani also improved the precision of the measurement of the precession of the Earth's axis. Corrections were made to Ptolemy's geocentric model by al-Battani, Ibn al-Haytham,[157] Averroes, and the Maragha astronomers such as Nasir al-Din al-Tusi, Mu'ayyad al-Din al-Urdi, and Ibn al-Shatir.[158][159]

Scholars with geometric skills made significant improvements to the earlier classical texts on light and sight by Euclid, Aristotle, and Ptolemy.[5] The earliest surviving Arabic treatises were written in the 9th century by Abū Ishāq al-Kindī, Qustā ibn Lūqā, and (in fragmentary form) Ahmad ibn Isā. Later, in the 11th century, Ibn al-Haytham (known as Alhazen in the West), a mathematician and astronomer, synthesized a new theory of vision based on the works of his predecessors.[5] His new theory included a complete system of geometrical optics, which was set out in great detail in his Book of Optics.[5][160] His book was translated into Latin and was relied upon as a principal source on the science of optics in Europe until the 17th century.[5]

Institutionalization of medicine

The medical sciences were prominently cultivated in the Islamic world.[5] Greek medical works, especially those of Galen, were translated into Arabic, and there was an outpouring of medical texts by Islamic physicians aimed at organizing, elaborating, and disseminating classical medical knowledge.[5] Medical specialties started to emerge, such as the treatment of eye diseases like cataracts. Ibn Sina (known as Avicenna in the West, c. 980–1037) was a prolific Persian medical encyclopedist[161] who wrote extensively on medicine;[162][163] his two most notable works in medicine were the Kitāb al-shifāʾ ("Book of Healing") and The Canon of Medicine, both of which were used as standard medical texts in both the Muslim world and Europe well into the 17th century. Among his many contributions are the discovery of the contagious nature of infectious diseases[162] and the introduction of clinical pharmacology.[164] Institutionalization of medicine was another important achievement in the Islamic world. Although hospitals as institutions for the sick emerged in the Byzantine Empire, the model of institutionalized medicine for all social classes was widespread throughout the Islamic empire. In addition to treating patients, physicians could teach apprentice physicians, as well as write and conduct research. The discovery of the pulmonary transit of blood in the human body by Ibn al-Nafis occurred in a hospital setting.[5]

Decline

Islamic science began its decline in the 12th–13th century, before the Renaissance in Europe, due in part to the Christian reconquest of Spain and the Mongol conquests in the East in the 11th–13th century. The Mongols sacked Baghdad, capital of the Abbasid Caliphate, in 1258, which ended the Abbasid empire.[5][165] Nevertheless, many of the conquerors became patrons of the sciences. Hulagu Khan, for example, who led the siege of Baghdad, became a patron of the Maragheh observatory.[5] Islamic astronomy continued to flourish into the 16th century.[5]

Western Europe


By the eleventh century, most of Europe had become Christian; stronger monarchies emerged; borders were restored; technological developments and agricultural innovations were made, increasing the food supply and population. Classical Greek texts were translated from Arabic and Greek into Latin, stimulating scientific discussion in Western Europe.[166]

In classical antiquity, Greek and Roman taboos had meant that dissection was usually banned, but in the Middle Ages medical teachers and students at Bologna began to open human bodies, and Mondino de Luzzi (c. 1275–1326) produced the first known anatomy textbook based on human dissection.[167][168]

As a result of the Pax Mongolica, Europeans, such as Marco Polo, began to venture further and further east. The written accounts of Polo and his fellow travelers inspired other Western European maritime explorers to search for a direct sea route to Asia, ultimately leading to the Age of Discovery.[169]

Technological advances were also made, such as the early flight of Eilmer of Malmesbury (who had studied mathematics in 11th-century England),[170] and the metallurgical achievements of the Cistercian blast furnace at Laskill.[171][172]

Medieval universities

An intellectual revitalization of Western Europe started with the birth of medieval universities in the 12th century. These urban institutions grew from the informal scholarly activities of learned friars who visited monasteries, consulted libraries, and conversed with other fellow scholars.[173] A friar who became well-known would attract a following of disciples, giving rise to a brotherhood of scholars (or collegium in Latin). A collegium might travel to a town or request a monastery to host them. However, if the number of scholars within a collegium grew too large, they would opt to settle in a town instead.[173] As the number of collegia within a town grew, the collegia might request that their king grant them a charter that would convert them into a universitas.[173] Many universities were chartered during this period, with the first in Bologna in 1088, followed by Paris in 1150, Oxford in 1167, and Cambridge in 1231.[173] The granting of a charter meant that the medieval universities were partially sovereign and independent from local authorities.[173] Their independence allowed them to conduct themselves and judge their own members based on their own rules. Furthermore, as initially religious institutions, their faculties and students were protected from capital punishment (e.g., the gallows).[173] Such independence was a matter of custom, which could, in principle, be revoked by their respective rulers if they felt threatened. Discussions of various subjects or claims at these medieval institutions, no matter how controversial, were conducted in a formalized way that declared them to be within the bounds of a university and therefore protected by the privileges of that institution's sovereignty.[173] A claim could be described as ex cathedra (literally "from the chair", used within the context of teaching) or ex hypothesi (by hypothesis). This meant that the discussions were presented as purely an intellectual exercise that did not require those involved to commit themselves to the truth of a claim or to proselytize. Modern academic concepts and practices such as academic freedom or freedom of inquiry are remnants of these medieval privileges that were tolerated in the past.[173]

The curriculum of these medieval institutions centered on the seven liberal arts, which were aimed at providing beginning students with the skills for reasoning and scholarly language.[173] Students would begin their studies with the first three liberal arts, or Trivium (grammar, rhetoric, and logic), followed by the next four liberal arts, or Quadrivium (arithmetic, geometry, astronomy, and music).[173][143] Those who completed these requirements and received their baccalaureate (or Bachelor of Arts) had the option to join the higher faculty (law, medicine, or theology), which would confer an LLD for a lawyer, an MD for a physician, or a ThD for a theologian.[173] Students who chose to remain in the lower faculty (arts) could work towards a Magister (or Master's) degree and would study three philosophies: metaphysics, ethics, and natural philosophy.[173] Latin translations of Aristotle's works such as De Anima (On the Soul) and the commentaries on them were required readings. As time passed, the lower faculty was allowed to confer its own doctoral degree, called the PhD.[173] Many of the Masters were drawn to encyclopedias and used them as textbooks. But these scholars yearned for the complete original texts of the Ancient Greek philosophers, mathematicians, and physicians such as Aristotle, Euclid, and Galen, which were not available to them at the time. These Ancient Greek texts were to be found in the Byzantine Empire and the Islamic World.[173]

Translations of Greek and Arabic sources

Contact with the Byzantine Empire,[150] and with the Islamic world during the Reconquista and the Crusades, allowed Latin Europe access to scientific Greek and Arabic texts, including the works of Aristotle, Ptolemy, Isidore of Miletus, John Philoponus, Jābir ibn Hayyān, al-Khwarizmi, Alhazen, Avicenna, and Averroes. European scholars had access to the translation programs of Raymond of Toledo, who sponsored the 12th-century Toledo School of Translators, which rendered Arabic texts into Latin. Later translators such as Michael Scotus learned Arabic in order to study these texts directly. The European universities aided materially in the translation and propagation of these texts and started a new infrastructure that scientific communities needed. In fact, the European university put many works about the natural world and the study of nature at the center of its curriculum,[174] with the result that the "medieval university laid far greater emphasis on science than does its modern counterpart and descendent."[175]

At the beginning of the 13th century, there were reasonably accurate Latin translations of the main works of almost all the intellectually crucial ancient authors, allowing a sound transfer of scientific ideas via both the universities and the monasteries. By then, the natural philosophy in these texts began to be extended by scholastics such as Robert Grosseteste, Roger Bacon, Albertus Magnus and Duns Scotus. Precursors of the modern scientific method, influenced by earlier contributions of the Islamic world, can be seen already in Grosseteste's emphasis on mathematics as a way to understand nature, and in the empirical approach admired by Bacon, particularly in his Opus Majus. Pierre Duhem's thesis about the Condemnation of 1277, issued by Stephen Tempier, the Bishop of Paris, led to the study of medieval science as a serious discipline, "but no one in the field any longer endorses his view that modern science started in 1277".[176] However, many scholars agree with Duhem's view that the mid-to-late Middle Ages saw important scientific developments.[177][178][179]

Medieval science

The first half of the 14th century saw much important scientific work, largely within the framework of scholastic commentaries on Aristotle's scientific writings.[180] William of Ockham emphasized the principle of parsimony: natural philosophers should not postulate unnecessary entities, so that motion is not a distinct thing but is only the moving object[181] and an intermediary "sensible species" is not needed to transmit an image of an object to the eye.[182] Scholars such as Jean Buridan and Nicole Oresme started to reinterpret elements of Aristotle's mechanics. In particular, Buridan developed the theory that impetus was the cause of the motion of projectiles, which was a first step towards the modern concept of inertia.[183] The Oxford Calculators began to analyze the kinematics of motion mathematically, without considering the causes of motion.[184]

In 1348, the Black Death and other disasters brought a sudden end to philosophic and scientific development. Yet the rediscovery of ancient texts was stimulated by the Fall of Constantinople in 1453, when many Byzantine scholars sought refuge in the West. Meanwhile, the introduction of printing was to have a great effect on European society: the easier dissemination of the printed word democratized learning and allowed ideas such as algebra to propagate more rapidly. These developments paved the way for the Scientific Revolution, in which scientific inquiry, halted at the start of the Black Death, resumed.[185][186]

Renaissance

Revival of learning

The renewal of learning in Europe began with 12th-century Scholasticism. The Northern Renaissance showed a decisive shift in focus from Aristotelian natural philosophy to chemistry and the biological sciences (botany, anatomy, and medicine).[187] Modern science in Europe thus resumed in a period of great upheaval: the Protestant Reformation and Catholic Counter-Reformation, the discovery of the Americas by Christopher Columbus, and the Fall of Constantinople; the rediscovery of Aristotle during the Scholastic period had also presaged large social and political changes. This created an environment in which it became possible to question scientific doctrine, in much the same way that Martin Luther and John Calvin questioned religious doctrine. The works of Ptolemy (astronomy) and Galen (medicine) were found not always to match everyday observations. Work by Vesalius on human cadavers found problems with the Galenic view of anatomy.[188]

The development of cristallo, a clear glass that appeared in Venice around 1450, also contributed to the advancement of science in this period. The new glass allowed for better spectacles and eventually led to the invention of the telescope and microscope.

Theophrastus' work on rocks, Peri lithōn, remained authoritative for millennia: its interpretation of fossils was not overturned until after the Scientific Revolution.

During the Italian Renaissance, Niccolò Machiavelli established the emphasis of modern political science on direct empirical observation of political institutions and actors. Later, the expansion of the scientific paradigm during the Enlightenment further pushed the study of politics beyond normative determinations.[189] In particular, statistics, developed to study the subjects of the state, has been applied to polling and voting.

In archaeology, the 15th and 16th centuries saw the rise of antiquarians in Renaissance Europe who were interested in the collection of artifacts.

Scientific Revolution and birth of New Science

[edit]

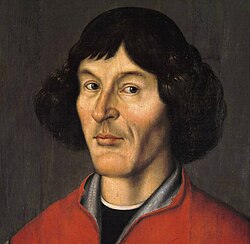

The early modern period is seen as a flowering of the European Renaissance. There was a willingness to question previously held truths and search for new answers. This resulted in a period of major scientific advancements, now known as the Scientific Revolution, which led to the emergence of a New Science that was more mechanistic in its worldview, more integrated with mathematics, and more reliable and open as its knowledge was based on a newly defined scientific method.[12][15][16][190] The Scientific Revolution is a convenient boundary between ancient thought and classical physics, and is traditionally held to have begun in 1543, when the books De humani corporis fabrica (On the Workings of the Human Body) by Andreas Vesalius, and also De Revolutionibus, by the astronomer Nicolaus Copernicus, were first printed. The period culminated with the publication of the Philosophiæ Naturalis Principia Mathematica in 1687 by Isaac Newton, representative of the unprecedented growth of scientific publications throughout Europe.

Other significant scientific advances were made during this time by Galileo Galilei, Johannes Kepler, Edmond Halley, William Harvey, Pierre Fermat, Robert Hooke, Christiaan Huygens, Tycho Brahe, Marin Mersenne, Gottfried Leibniz, Isaac Newton, and Blaise Pascal.[191] In philosophy, major contributions were made by Francis Bacon, Sir Thomas Browne, René Descartes, Baruch Spinoza, Pierre Gassendi, Robert Boyle, and Thomas Hobbes.[191] Christiaan Huygens derived the centripetal and centrifugal forces and was the first to transfer mathematical inquiry to describe unobservable physical phenomena. William Gilbert did some of the earliest experiments with electricity and magnetism, establishing that the Earth itself is magnetic.

Heliocentrism

The heliocentric astronomical model of the universe was refined by Nicolaus Copernicus. Copernicus proposed that the Earth and all heavenly spheres, containing the planets and other objects in the cosmos, revolved around the Sun.[192] His heliocentric model also proposed that all stars were fixed: they neither rotated on an axis nor moved in any other way.[193] His theory proposed the yearly revolution of the Earth and the other heavenly spheres around the Sun, and it could calculate the distances of the planets using deferents and epicycles. Although these calculations were not completely accurate, Copernicus was able to determine the order of the heavenly spheres by distance. The Copernican heliocentric system was a revival of the hypotheses of Aristarchus of Samos and Seleucus of Seleucia.[194] Aristarchus of Samos did propose that the Earth revolved around the Sun but did not mention anything about the order, motion, or rotation of the other heavenly spheres.[195] Seleucus of Seleucia also proposed that the Earth revolved around the Sun but did not mention anything about the other heavenly spheres. In addition, Seleucus understood that the Moon revolved around the Earth and could be used to explain the tides of the oceans, further demonstrating his understanding of the heliocentric idea.[196]

Age of Enlightenment

Continuation of Scientific Revolution

The Scientific Revolution continued into the Age of Enlightenment, which accelerated the development of modern science.

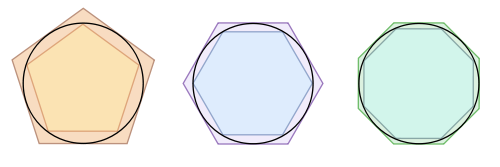

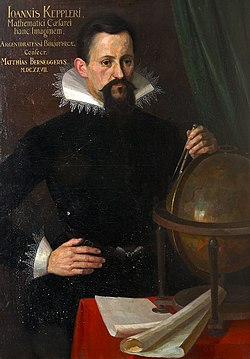

Planets and orbits

The heliocentric model revived by Nicolaus Copernicus was followed by the model of planetary motion given by Johannes Kepler in the early 17th century, which proposed that the planets follow elliptical orbits, with the Sun at one focus of the ellipse. In Astronomia Nova (A New Astronomy), the first two of the laws of planetary motion were shown by the analysis of the orbit of Mars. Kepler introduced the revolutionary concept of planetary orbit. Because of his work, astronomical phenomena came to be seen as being governed by physical laws.[200]

Emergence of chemistry

A decisive moment came when "chemistry" was distinguished from alchemy by Robert Boyle in his work The Sceptical Chymist, in 1661, although the alchemical tradition continued for some time after his work. Other important steps included the gravimetric experimental practices of medical chemists like William Cullen, Joseph Black, Torbern Bergman and Pierre Macquer, and the work of Antoine Lavoisier ("father of modern chemistry") on oxygen and the law of conservation of mass, which refuted phlogiston theory. Modern chemistry emerged from the sixteenth through the eighteenth centuries through the material practices and theories promoted by alchemy, medicine, manufacturing and mining.[201][202][203]

Calculus and Newtonian mechanics

In 1687, Isaac Newton published the Principia Mathematica, detailing two comprehensive and successful physical theories: Newton's laws of motion, which led to classical mechanics; and Newton's law of universal gravitation, which describes the fundamental force of gravity.

Circulatory system

William Harvey published De Motu Cordis in 1628, which revealed his conclusions based on his extensive studies of vertebrate circulatory systems.[191] He identified the central role of the heart, arteries, and veins in producing blood movement in a circuit, and failed to find any confirmation of Galen's pre-existing notions of heating and cooling functions.[204] The history of early modern biology and medicine is often told through the search for the seat of the soul.[205] Galen in his descriptions of his foundational work in medicine presents the distinctions between arteries, veins, and nerves using the vocabulary of the soul.[206]

Scientific societies and journals

A critical innovation was the creation of permanent scientific societies and their scholarly journals, which dramatically sped the diffusion of new ideas. Typical was the founding of the Royal Society in London in 1660 and of its journal, the Philosophical Transactions of the Royal Society, in 1665, the first scientific journal in English.[207] The same year also saw the first journal in French, the Journal des sçavans. Drawing on the works[208] of Newton, Descartes, Pascal and Leibniz, science was on a path to modern mathematics, physics and technology by the time of the generation of Benjamin Franklin (1706–1790), Leonhard Euler (1707–1783), Mikhail Lomonosov (1711–1765) and Jean le Rond d'Alembert (1717–1783). Denis Diderot's Encyclopédie, published between 1751 and 1772, brought this new understanding to a wider audience. The impact of this process was not limited to science and technology, but affected philosophy (Immanuel Kant, David Hume), religion (the increasingly significant impact of science upon religion), and society and politics in general (Adam Smith, Voltaire).

Developments in geology

Geology did not undergo systematic restructuring during the Scientific Revolution but instead existed as a cloud of isolated, disconnected ideas about rocks, minerals, and landforms long before it became a coherent science. Robert Hooke formulated a theory of earthquakes, and Nicholas Steno developed the theory of superposition and argued that fossils were the remains of once-living creatures. Beginning with Thomas Burnet's Sacred Theory of the Earth in 1681, natural philosophers began to explore the idea that the Earth had changed over time. Burnet and his contemporaries interpreted Earth's past in terms of events described in the Bible, but their work laid the intellectual foundations for secular interpretations of Earth history.

Post-Scientific Revolution

Bioelectricity

During the late 18th century, researchers such as Hugh Williamson[209] and John Walsh experimented on the effects of electricity on the human body. Further studies by Luigi Galvani and Alessandro Volta established the electrical nature of what Volta called galvanism.[210][211]

Developments in geology

Modern geology, like modern chemistry, gradually evolved during the 18th and early 19th centuries. Benoît de Maillet and the Comte de Buffon saw the Earth as much older than the 6,000 years envisioned by biblical scholars. Jean-Étienne Guettard and Nicolas Desmarest hiked central France and recorded their observations on some of the first geological maps. Aided by chemical experimentation, naturalists such as Scotland's John Walker,[212] Sweden's Torbern Bergman, and Germany's Abraham Werner created comprehensive classification systems for rocks and minerals, a collective achievement that transformed geology into a cutting-edge field by the end of the eighteenth century. These early geologists also proposed generalized interpretations of Earth history that led James Hutton, Georges Cuvier and Alexandre Brongniart, following in the steps of Steno, to argue that layers of rock could be dated by the fossils they contained: a principle first applied to the geology of the Paris Basin. The use of index fossils became a powerful tool for making geological maps, because it allowed geologists to correlate the rocks in one locality with those of similar age in other, distant localities.

Birth of modern economics

Adam Smith's An Inquiry into the Nature and Causes of the Wealth of Nations, published in 1776, forms the basis for classical economics. Smith criticized mercantilism, advocating a system of free trade with division of labour. He postulated an "invisible hand" that regulated economic systems made up of actors guided only by self-interest. Although the "invisible hand" is mentioned on a single, easily overlooked page in the middle of a chapter in the middle of the Wealth of Nations, it has come to be presented as Smith's central message.

Social science

Anthropology can best be understood as an outgrowth of the Age of Enlightenment. It was during this period that Europeans attempted systematically to study human behavior. Traditions of jurisprudence, history, philology and sociology developed during this time and informed the development of the social sciences of which anthropology was a part.

19th century

The 19th century saw the birth of science as a profession. William Whewell had coined the term scientist in 1833,[213] which soon replaced the older term natural philosopher.

Developments in physics

In physics, the behavior of electricity and magnetism was studied by Giovanni Aldini, Alessandro Volta, Michael Faraday, Georg Ohm, and others. The experiments, theories and discoveries of Michael Faraday, André-Marie Ampère, James Clerk Maxwell, and their contemporaries led to the unification of the two phenomena into a single theory of electromagnetism as described by Maxwell's equations. Thermodynamics led to an understanding of heat and to the definition of the notion of energy.

Discovery of Neptune

In astronomy, advances in observation and in optical systems during the 19th century resulted in the first observation of an asteroid (1 Ceres) in 1801 and the discovery of the planet Neptune in 1846.

Developments in mathematics

In mathematics, the notion of complex numbers finally matured and led to a subsequent analytical theory; mathematicians also began to use hypercomplex numbers. Karl Weierstrass and others carried out the arithmetization of analysis for functions of real and complex variables. The century also saw new progress in geometry beyond the classical theories of Euclid, after a period of nearly two thousand years. The mathematical science of logic likewise had revolutionary breakthroughs after a similarly long period of stagnation. The most important scientific step of the period, however, came from the ideas formulated by the creators of electrical science. Their work changed the face of physics and made possible new technologies such as electric power, electrical telegraphy, the telephone, and radio.

Developments in chemistry
