Programming paradigm

From Wikipedia - Reading time: 18 min

A programming paradigm is a relatively high-level way to conceptualize and structure the implementation of a computer program. A programming language can be classified as supporting one or more paradigms.[1]

Paradigms are separated along and described by different dimensions of programming. Some paradigms are about implications of the execution model, such as allowing side effects, or whether the sequence of operations is defined by the execution model. Other paradigms are about the way code is organized, such as grouping into units that include both state and behavior. Yet others are about syntax and grammar.

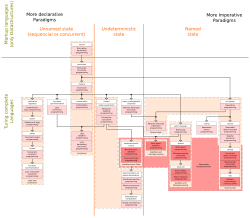

Some common programming paradigms include (shown in hierarchical relationship):[2][3][4]

- Imperative – code directly controls execution flow and state change through explicit statements that change the program state

  - procedural – organized as procedures that call each other

  - object-oriented – organized as objects that contain both data structure and associated behavior; programs are designed around data fields, methods, and the interactions between objects

    - Class-based – object-oriented programming in which inheritance is achieved by defining classes of objects, versus the objects themselves

    - Prototype-based – object-oriented programming that avoids classes and implements inheritance via cloning of instances

- Declarative – code declares properties of the desired result, but not how to compute it; it describes what a computation should perform without specifying detailed state changes

  - functional – a desired result is declared as the value of a series of function evaluations; uses evaluation of mathematical functions and avoids state and mutable data

  - logic – a desired result is declared as the answer to a question about a system of facts and rules; uses explicit mathematical logic for programming

  - reactive – a desired result is declared with data streams and the propagation of change

- Concurrent programming – has language constructs for concurrency; these may involve multi-threading, support for distributed computing, message passing, shared resources (including shared memory), or futures

  - Actor programming – concurrent computation with actors that make local decisions in response to the environment (capable of selfish or competitive behaviour)

- Constraint programming – relations between variables are expressed as constraints (or constraint networks), directing allowable solutions (uses constraint satisfaction or simplex algorithm)

- Dataflow programming – forced recalculation of formulas when data values change (e.g. spreadsheets)

- Distributed programming – has support for multiple autonomous computers that communicate via computer networks

- Generic programming – uses algorithms written in terms of to-be-specified-later types that are then instantiated as needed for specific types provided as parameters

- Metaprogramming – writing programs that write or manipulate other programs (or themselves) as their data, or that do part of the work at compile time that would otherwise be done at runtime

  - Template metaprogramming – metaprogramming methods in which a compiler uses templates to generate temporary source code, which is merged by the compiler with the rest of the source code and then compiled

  - Reflective programming – metaprogramming methods in which a program modifies or extends itself

- Pipeline programming – a simple syntax change that adds notation for nesting function calls to a language originally designed without one

- Rule-based programming – a network of rules of thumb that comprise a knowledge base and can be used for expert systems and problem deduction & resolution

- Visual programming – manipulating program elements graphically rather than by specifying them textually (e.g. Simulink); also termed diagrammatic programming

Overview

Programming paradigms come from computer science research into existing practices of software development. The findings allow for describing and comparing programming practices and the languages used to code programs. For perspective, other fields of research study software engineering processes and describe various methodologies to describe and compare them.

A programming language can be described in terms of paradigms. Some languages support only one paradigm. For example, Smalltalk supports object-oriented programming and Haskell supports functional programming. Most languages support multiple paradigms. For example, a program written in C++, Object Pascal, or PHP can be purely procedural, purely object-oriented, or can contain aspects of both paradigms, or others.

When using a language that supports multiple paradigms, the developer chooses which paradigm elements to use. But this choice may not involve considering paradigms per se. The developer often uses the features of a language as the language provides them and to the extent that the developer knows them. Categorizing the resulting code by paradigm is often an academic activity done in retrospect.

Languages categorized under the imperative paradigm have two main features: they state the order in which operations occur, with constructs that explicitly control that order, and they allow side effects, in which state can be modified at one point in time, within one unit of code, and then later read at a different point in time inside a different unit of code. The communication between the units of code is not explicit.

In contrast, languages in the declarative paradigm do not state the order in which to execute operations. Instead, they supply a number of available operations in the system, along with the conditions under which each is allowed to execute.[7] The implementation of the language's execution model tracks which operations are free to execute and chooses the order independently. More at Comparison of multi-paradigm programming languages.
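The contrast can be sketched in Python. This is an illustrative sketch only: the task names and the `PREREQUISITES` table are hypothetical, and the standard-library `graphlib.TopologicalSorter` (Python 3.9+) merely stands in for a declarative execution model that picks an order on its own.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def run_imperative():
    """Imperative style: the programmer fixes the order of operations."""
    log = []
    log.append("fetch")   # step 1
    log.append("parse")   # step 2
    log.append("report")  # step 3
    return log


# Declarative style: only the conditions are stated. Each operation may
# run once every operation in its prerequisite set has finished.
# (Hypothetical task names, chosen for illustration.)
PREREQUISITES = {
    "report": {"parse"},
    "parse": {"fetch"},
    "fetch": set(),
}


def run_declarative(prerequisites):
    """The sorter, not the programmer, chooses a valid execution order."""
    return list(TopologicalSorter(prerequisites).static_order())
```

In the declarative version the entries of `PREREQUISITES` can be written in any order; the resulting schedule depends only on the stated conditions.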

In object-oriented programming, code is organized into objects that contain state that is owned by and (usually) controlled by the code of the object. Most object-oriented languages are also imperative languages.

In object-oriented programming, programs are treated as a set of interacting objects. In functional programming, programs are treated as a sequence of stateless function evaluations. When programming computers or systems with many processors, in process-oriented programming, programs are treated as sets of concurrent processes that act on logically shared data structures.

Many programming paradigms are as well known for the techniques they forbid as for those they support. For instance, pure functional programming disallows side-effects, while structured programming disallows the goto construct. Partly for this reason, new paradigms are often regarded as doctrinaire or overly rigid by those accustomed to older ones.[8] Yet, avoiding certain techniques can make it easier to understand program behavior, and to prove theorems about program correctness.
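As a small illustration of why forbidding side effects can make behavior easier to reason about, the following Python sketch contrasts a function that mutates shared state with a pure one. The names `running_total`, `add_to_total`, and `add` are hypothetical, chosen only for this example.

```python
running_total = 0  # shared mutable state, visible to every caller


def add_to_total(x):
    """Impure: the result depends on, and changes, state outside the function."""
    global running_total
    running_total += x
    return running_total


def add(total, x):
    """Pure: the result depends only on the arguments, and nothing is modified."""
    return total + x
```

Calling `add(1, 2)` always yields the same value, so it can be reasoned about in isolation. Two identical calls to `add_to_total(5)` return different values, because each call observes the effect of the previous one.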

Programming paradigms can also be compared with programming models, which allow invoking an execution model by using only an API. Programming models can also be classified into paradigms based on features of the execution model.

For parallel computing, using a programming model instead of a language is common. The reason is that details of the parallel hardware leak into the abstractions used to program the hardware. This causes the programmer to have to map patterns in the algorithm onto patterns in the execution model (which have been inserted due to leakage of hardware into the abstraction). As a consequence, no one parallel programming language maps well to all computation problems. Thus, it is more convenient to use a base sequential language and insert API calls to parallel execution models via a programming model. Such parallel programming models can be classified according to abstractions that reflect the hardware, such as shared memory, distributed memory with message passing, notions of place visible in the code, and so forth. These can be considered flavors of programming paradigm that apply to only parallel languages and programming models.
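The idea of keeping a sequential base language and reaching a parallel execution model through API calls can be sketched with Python's standard `concurrent.futures` module. This is a minimal sketch, not a performance recipe: `square` is a placeholder workload, and in CPython a thread pool does not speed up CPU-bound work, so the example shows only the shape of the API.

```python
from concurrent.futures import ThreadPoolExecutor


def square(n):
    """Placeholder workload; any function of one argument would do."""
    return n * n


def squares_sequential(values):
    """Plain sequential code in the base language."""
    return [square(v) for v in values]


def squares_parallel(values, workers=4):
    """Same computation, handed to a pool of workers through an API call.

    Executor.map returns results in input order, so the caller sees the
    same list as in the sequential version.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(square, values))
```

The algorithm itself is unchanged; only the call that distributes the work refers to the parallel execution model.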

Criticism

Some programming language researchers criticise the notion of paradigms as a classification of programming languages, e.g. Harper,[9] and Krishnamurthi.[10] They argue that many programming languages cannot be strictly classified into one paradigm, but rather include features from several paradigms. See Comparison of multi-paradigm programming languages.

History

Different approaches to programming have developed over time. Each approach was sometimes classified at the time it was first developed, but often not until some time later, retrospectively. An early approach consciously identified as such is structured programming, advocated since the mid-1960s. The concept of a programming paradigm as such dates at least to 1978, in the Turing Award lecture of Robert W. Floyd, entitled The Paradigms of Programming, which cites the notion of paradigm as used by Thomas Kuhn in his The Structure of Scientific Revolutions (1962).[11]

Early programming languages did not have clearly defined programming paradigms, and programs sometimes made extensive use of goto statements. Liberal use of goto leads to spaghetti code, which is difficult to understand and maintain. This led to the development of structured programming paradigms that disallowed the use of goto statements, allowing only more structured programming constructs.[12]

Languages and paradigms

Machine code

Machine code is the lowest level of computer programming, as it consists of machine instructions that define behavior at the lowest level of abstraction possible for a computer. As it is the most prescriptive way to code, it is classified as imperative.

It is sometimes called the first-generation programming language.

Assembly

Assembly language introduced mnemonics for machine instructions and memory addresses. Assembly is classified as imperative and is sometimes called the second-generation programming language.

In the 1960s, assembly languages were developed to support library COPY and quite sophisticated conditional macro generation and preprocessing abilities, CALL to subroutine, external variables and common sections (globals), enabling significant code re-use and isolation from hardware specifics via the use of logical operators such as READ/WRITE/GET/PUT. Assembly was, and still is, used for time-critical systems and often in embedded systems as it gives the most control of what the machine does.

Procedural languages

Procedural languages, also called third-generation programming languages, are the first described as high-level languages. They support vocabulary related to the problem being solved. For example:

- COmmon Business Oriented Language (COBOL) – uses terms like file, move and copy.

- FORmula TRANslation (FORTRAN) – using mathematical language terminology, it was developed mainly for scientific and engineering problems.

- ALGOrithmic Language (ALGOL) – focused on being an appropriate language to define algorithms, while using mathematical language terminology, targeting scientific and engineering problems, just like FORTRAN.

- Programming Language One (PL/I) – a hybrid commercial-scientific general purpose language supporting pointers.

- Beginner's All-purpose Symbolic Instruction Code (BASIC) – it was developed to enable more people to write programs.

- C – a general-purpose programming language, initially developed by Dennis Ritchie between 1969 and 1973 at AT&T Bell Labs.

These languages are classified under the procedural paradigm. They directly control the step-by-step process that a computer program follows. The efficacy and efficiency of such a program are therefore highly dependent on the programmer's skill.

Object-oriented programming

In an attempt to improve on procedural languages, object-oriented programming (OOP) languages were created, such as Simula, Smalltalk, C++, Eiffel, Python, PHP, Java, and C#. In these languages, data and the methods that manipulate the data are in the same code unit, called an object. This encapsulation ensures that the data can be accessed only through the methods of the object that contains it. Thus, an object's inner workings may be changed without affecting code that uses the object.
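A minimal Python sketch of encapsulation follows. The `Stack` class is a hypothetical example, and Python marks private state only by convention (a leading underscore) rather than enforcing it, so this shows the intent of the idea, not a guarantee.

```python
class Stack:
    """Callers use push, pop, and len(); the storage behind them is hidden."""

    def __init__(self):
        # Internal state. The leading underscore signals "private" by
        # convention; Python does not enforce it.
        self._items = []

    def push(self, item):
        self._items.append(item)

    def pop(self):
        if not self._items:
            raise IndexError("pop from empty stack")
        return self._items.pop()

    def __len__(self):
        return len(self._items)
```

Because calling code touches only the methods, the list in `_items` could be replaced by another structure, such as a linked list, without changing any code that uses `Stack`.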

There is controversy raised by Alexander Stepanov, Richard Stallman[13] and other programmers, concerning the efficacy of the OOP paradigm versus the procedural paradigm. The need for every object to have associated methods leads some skeptics to associate OOP with software bloat; an attempt to resolve this dilemma came through polymorphism.

Although most OOP languages are third-generation, it is possible to create an object-oriented assembly language. High Level Assembly (HLA) is an example of this that fully supports advanced data types and object-oriented assembly language programming, despite its early origins. Thus, differing programming paradigms can be seen more as motivational memes of their advocates than as necessarily representing progress from one level to the next.[citation needed] Precise comparisons of competing paradigms' efficacy are frequently made more difficult by new and differing terminology applied to similar entities and processes, together with numerous implementation distinctions across languages.

Declarative languages

A declarative program describes what the problem is, not how to solve it. The program is structured as a set of properties to find in the expected result, not as a procedure to follow. Given a database or a set of rules, the computer tries to find a solution matching all the desired properties. Archetypes of declarative languages are the fourth-generation language SQL and the families of functional and logic programming languages.

Functional programming is a subset of declarative programming. Programs written using this paradigm use functions, blocks of code intended to behave like mathematical functions. Functional languages discourage changes in the value of variables through assignment, making a great deal of use of recursion instead.
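The preference for recursion over reassignment can be sketched in Python, although Python is not a functional language and limits recursion depth, so this is an illustration of the style and not an endorsement of it for long lists. All three function names are hypothetical.

```python
from functools import reduce


def total_imperative(values):
    """Imperative: a variable is repeatedly reassigned inside a loop."""
    total = 0
    for v in values:
        total += v
    return total


def total_recursive(values):
    """Functional style: no reassignment; the sum is defined in terms of
    the first element and the sum of the rest."""
    if not values:
        return 0
    head, *tail = values
    return head + total_recursive(tail)


def total_fold(values):
    """The same recursion pattern packaged as a fold (functools.reduce)."""
    return reduce(lambda acc, v: acc + v, values, 0)
```

All three compute the same value; the second and third never change a variable after it is bound.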

The logic programming paradigm views computation as automated reasoning over a body of knowledge. Facts about the problem domain are expressed as logic formulas, and programs are executed by applying inference rules over them until an answer to the problem is found, or the set of formulas is proved inconsistent.
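The process of applying inference rules to facts until an answer is found can be sketched as naive forward chaining in Python. This is only a toy: the `parent` and `ancestor` relations and the sample facts are hypothetical, and real logic languages such as Prolog use different, goal-directed strategies.

```python
# Facts are tuples of the form (relation, subject, object).
FACTS = {
    ("parent", "alice", "bob"),
    ("parent", "bob", "carol"),
}


def infer_ancestors(facts):
    """Apply two rules repeatedly until no new fact appears (a fixed point):

        ancestor(X, Y) if parent(X, Y)
        ancestor(X, Z) if parent(X, Y) and ancestor(Y, Z)
    """
    known = set(facts)
    while True:
        derived = set()
        for rel, x, y in known:
            if rel != "parent":
                continue
            derived.add(("ancestor", x, y))
            for rel2, y2, z in known:
                if rel2 == "ancestor" and y2 == y:
                    derived.add(("ancestor", x, z))
        if derived <= known:  # nothing new was derived: stop
            return known
        known |= derived


def is_ancestor(facts, x, y):
    """Answer a query by checking it against everything derivable."""
    return ("ancestor", x, y) in infer_ancestors(facts)
```

The program states only facts and rules; the question `is_ancestor(FACTS, "alice", "carol")` is answered by inference, not by a hand-written search procedure for that specific question.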

Other paradigms

Symbolic programming is a paradigm that describes programs able to manipulate formulas and program components as data.[4] Programs can thus effectively modify themselves, and appear to "learn", making them suited for applications such as artificial intelligence, expert systems, natural-language processing and computer games. Languages that support this paradigm include Lisp and Prolog.[14]
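Treating formulas as data can be sketched in Python with nested tuples standing in for Lisp-style expressions. The representation (`("+", a, b)` and `("*", a, b)`, strings for variables) and the function names are assumptions made for this example.

```python
def diff(expr, var):
    """Return the derivative of expr with respect to var, as a new formula."""
    if isinstance(expr, (int, float)):
        return 0                      # d/dx of a constant
    if isinstance(expr, str):
        return 1 if expr == var else 0
    op, left, right = expr
    if op == "+":                     # sum rule
        return ("+", diff(left, var), diff(right, var))
    if op == "*":                     # product rule
        return ("+",
                ("*", diff(left, var), right),
                ("*", left, diff(right, var)))
    raise ValueError(f"unknown operator: {op!r}")


def evaluate(expr, env):
    """Compute the numeric value of a formula given variable bindings."""
    if isinstance(expr, (int, float)):
        return expr
    if isinstance(expr, str):
        return env[expr]
    op, left, right = expr
    a, b = evaluate(left, env), evaluate(right, env)
    if op == "+":
        return a + b
    if op == "*":
        return a * b
    raise ValueError(f"unknown operator: {op!r}")
```

Here `diff` takes a formula and returns another formula, which `evaluate` can then run. For instance, `("+", ("*", "x", "x"), ("*", 3, "x"))` represents x·x + 3x, and its derivative is produced as a tuple of the same kind.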

Differentiable programming structures programs so that they can be differentiated throughout, usually via automatic differentiation.[15][16]
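One form of automatic differentiation, forward mode with dual numbers, can be sketched in a few lines of Python. This is an assumption-laden illustration: the `Dual` class and `derivative` helper are hypothetical, only addition and multiplication are supported, and machine-learning frameworks typically rely on reverse-mode differentiation instead.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dual:
    """A value paired with the derivative of that value."""
    value: float
    deriv: float = 0.0

    def __add__(self, other):
        other = _lift(other)
        return Dual(self.value + other.value, self.deriv + other.deriv)

    __radd__ = __add__

    def __mul__(self, other):
        other = _lift(other)
        # Product rule: (uv)' = u'v + uv'
        return Dual(self.value * other.value,
                    self.deriv * other.value + self.value * other.deriv)

    __rmul__ = __mul__


def _lift(x):
    """Treat plain numbers as constants, whose derivative is zero."""
    return x if isinstance(x, Dual) else Dual(float(x))


def derivative(f, x):
    """Differentiate an ordinary Python function built from + and *."""
    return f(Dual(float(x), 1.0)).deriv
```

The function being differentiated is written as normal code, for example `lambda x: x * x * x + 2 * x`; the derivative is obtained by running that same code on `Dual` values.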

Literate programming, as a form of imperative programming, structures programs as a human-centered web, as in a hypertext essay: documentation is integral to the program, and the program is structured following the logic of prose exposition, rather than compiler convenience.

Symbolic programming techniques such as reflective programming (reflection), which allow a program to refer to itself, might also be considered as a programming paradigm. However, this is compatible with the major paradigms and thus is not a real paradigm in its own right.
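Reflection can be sketched with Python's built-in `dir`, `getattr`, and `setattr`. The `Greeter` class and the helper names are hypothetical; the point is only that the program inspects and extends its own definitions at run time.

```python
class Greeter:
    def hello(self):
        return "hello"


def public_methods(obj):
    """Inspect an object at run time and list its public callable attributes."""
    return sorted(
        name for name in dir(obj)
        if not name.startswith("_") and callable(getattr(obj, name))
    )


def call_by_name(obj, name, *args):
    """Look a method up from a string and invoke it."""
    return getattr(obj, name)(*args)


def add_method(cls, name, func):
    """Extend an existing class with a new method while the program runs."""
    setattr(cls, name, func)
```

After `add_method(Greeter, "bye", lambda self: "bye")`, existing `Greeter` instances also respond to `bye`, which illustrates a program extending itself.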

References

- "Multi-Paradigm Programming Language". Mozilla Developer Network. Mozilla Foundation. Jun 21, 2013. Archived from the original on 21 August 2013.

- Nørmark, Kurt. Overview of the four main programming paradigms. Aalborg University, 9 May 2011. Retrieved 22 September 2012.

- Frans Coenen (1999-10-11). "Characteristics of declarative programming languages". cgi.csc.liv.ac.uk. Archived from the original on 2014-02-27. Retrieved 2014-02-20.

- Michael A. Covington (2010-08-23). "CSCI/ARTI 4540/6540: First Lecture on Symbolic Programming and LISP" (PDF). University of Georgia. Archived from the original (PDF) on 2012-03-07. Retrieved 2013-11-20.

- Peter Van Roy (2009-05-12). "Programming Paradigms: What Every Programmer Should Know" (PDF). info.ucl.ac.be. Retrieved 2014-01-27.

- Peter Van-Roy; Seif Haridi (2004). Concepts, Techniques, and Models of Computer Programming. MIT Press. ISBN 978-0-262-22069-9.

- "Programming paradigms: What are the principles of programming?". IONOS Digitalguide. 20 April 2020. Archived from the original on Jun 29, 2022. Retrieved 2022-05-03.

- Frank Rubin (March 1987). "'GOTO Considered Harmful' Considered Harmful" (PDF). Communications of the ACM. 30 (3): 195–196. doi:10.1145/214748.315722. S2CID 6853038. Archived from the original (PDF) on March 20, 2009.

- Harper, Robert (1 May 2017). "What, if anything, is a programming-paradigm?". FifteenEightyFour. Cambridge University Press.

- Krishnamurthi, Shriram (November 2008). "Teaching programming languages in a post-linnaean age". ACM SIGPLAN Notices. 43 (11). ACM: 81–83. doi:10.1145/1480828.1480846. S2CID 35714982.

- Floyd, R. W. (1979). "The paradigms of programming". Communications of the ACM. 22 (8): 455–460. doi:10.1145/359138.359140.

- Soroka, Barry I. (2006). Java 5: Objects First. Jones & Bartlett Learning. ISBN 9780763737207.

- "Mode inheritance, cloning, hooks & OOP (Google Groups Discussion)".

- "Business glossary: Symbolic programming definition". allbusiness.com. Retrieved 2014-07-30.

- Wang, Fei; Decker, James; Wu, Xilun; Essertel, Gregory; Rompf, Tiark (2018), Bengio, S.; Wallach, H.; Larochelle, H.; Grauman, K. (eds.), "Backpropagation with Callbacks: Foundations for Efficient and Expressive Differentiable Programming" (PDF), Advances in Neural Information Processing Systems 31, Curran Associates, Inc., pp. 10201–10212, retrieved 2019-02-13

- Innes, Mike (2018). "On Machine Learning and Programming Languages" (PDF). SysML Conference 2018. Archived from the original (PDF) on 2018-09-20. Retrieved 2019-02-13.