Integration By Parts

From Handwiki


|

In calculus, and more generally in mathematical analysis, integration by parts or partial integration is a process that finds the integral of a product of functions in terms of the integral of the product of their derivative and antiderivative. It is frequently used to transform the antiderivative of a product of functions into an antiderivative for which a solution can be more easily found. The rule can be thought of as an integral version of the product rule of differentiation; it is indeed derived using the product rule.

The integration by parts formula states: [math]\displaystyle{ \begin{align} \int_a^b u(x) v'(x) \, dx & = \Big[u(x) v(x)\Big]_a^b - \int_a^b u'(x) v(x) \, dx\\ & = u(b) v(b) - u(a) v(a) - \int_a^b u'(x) v(x) \, dx. \end{align} }[/math]

Or, letting [math]\displaystyle{ u = u(x) }[/math] and [math]\displaystyle{ du = u'(x) \,dx }[/math] while [math]\displaystyle{ v = v(x) }[/math] and [math]\displaystyle{ dv = v'(x) \, dx, }[/math] the formula can be written more compactly: [math]\displaystyle{ \int u \, dv \ =\ uv - \int v \, du. }[/math]

It is important to note that the former expression is written as a definite integral and the latter is written as an indefinite integral. Applying the appropriate limits to the latter expression should yield the former, but the latter is not necessarily equivalent to the former.

Mathematician Brook Taylor discovered integration by parts, first publishing the idea in 1715.[1][2] More general formulations of integration by parts exist for the Riemann–Stieltjes and Lebesgue–Stieltjes integrals. The discrete analogue for sequences is called summation by parts.

Theorem

Product of two functions

The theorem can be derived as follows. For two continuously differentiable functions [math]\displaystyle{ u(x) }[/math] and [math]\displaystyle{ v(x) }[/math], the product rule states:

[math]\displaystyle{ \Big(u(x)v(x)\Big)' = v(x) u'(x) + u(x) v'(x). }[/math]

Integrating both sides with respect to [math]\displaystyle{ x }[/math],

[math]\displaystyle{ \int \Big(u(x)v(x)\Big)'\,dx = \int u'(x)v(x)\,dx + \int u(x)v'(x) \,dx, }[/math]

and noting that an indefinite integral is an antiderivative gives

[math]\displaystyle{ u(x)v(x) = \int u'(x)v(x)\,dx + \int u(x)v'(x)\,dx, }[/math]

where we neglect writing the constant of integration. This yields the formula for integration by parts:

[math]\displaystyle{ \int u(x)v'(x)\,dx = u(x)v(x) - \int u'(x)v(x) \,dx, }[/math]

or in terms of the differentials [math]\displaystyle{ du=u'(x)\,dx }[/math], [math]\displaystyle{ dv=v'(x)\,dx, \quad }[/math]

[math]\displaystyle{ \int u(x)\,dv = u(x)v(x) - \int v(x)\,du. }[/math]

This is to be understood as an equality of functions with an unspecified constant added to each side. Taking the difference of each side between two values [math]\displaystyle{ x = a }[/math] and [math]\displaystyle{ x = b }[/math] and applying the fundamental theorem of calculus gives the definite integral version: [math]\displaystyle{ \int_a^b u(x) v'(x) \, dx = u(b) v(b) - u(a) v(a) - \int_a^b u'(x) v(x) \, dx . }[/math] The original integral [math]\displaystyle{ \int uv' \, dx }[/math] contains the derivative v'; to apply the theorem, one must find v, the antiderivative of v', then evaluate the resulting integral [math]\displaystyle{ \int vu' \, dx. }[/math]
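The definite-integral version can be checked numerically. The following is a minimal sketch, assuming the illustrative choice u(x) = x² and v(x) = sin(x) on [0, 2] (neither comes from the text) and a hand-written composite Simpson's rule named `simpson`; it compares the two sides of the formula.

```python
import math

def simpson(f, a, b, n=1000):
    """Composite Simpson's rule on [a, b] with n subintervals (n must be even)."""
    h = (b - a) / n
    total = f(a) + f(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(a + k * h)
    return total * h / 3

# Illustrative choice (an assumption, not from the article):
# u(x) = x^2 with u'(x) = 2x, and v(x) = sin(x) with v'(x) = cos(x).
u, du = (lambda x: x * x), (lambda x: 2 * x)
v, dv = math.sin, math.cos
a, b = 0.0, 2.0

# Left-hand side: integral of u v' over [a, b].
lhs = simpson(lambda x: u(x) * dv(x), a, b)
# Right-hand side: boundary term minus integral of u' v.
rhs = u(b) * v(b) - u(a) * v(a) - simpson(lambda x: du(x) * v(x), a, b)

print(lhs, rhs)
```

The two printed values should agree up to the quadrature error of Simpson's rule.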

Validity for less smooth functions

It is not necessary for [math]\displaystyle{ u }[/math] and [math]\displaystyle{ v }[/math] to be continuously differentiable. Integration by parts works if [math]\displaystyle{ u }[/math] is absolutely continuous and the function designated [math]\displaystyle{ v' }[/math] is Lebesgue integrable (but not necessarily continuous).[3] (If [math]\displaystyle{ v' }[/math] has a point of discontinuity then its antiderivative [math]\displaystyle{ v }[/math] may not have a derivative at that point.)

If the interval of integration is not compact, then it is not necessary for [math]\displaystyle{ u }[/math] to be absolutely continuous in the whole interval or for [math]\displaystyle{ v' }[/math] to be Lebesgue integrable in the interval, as a couple of examples (in which [math]\displaystyle{ u }[/math] and [math]\displaystyle{ v }[/math] are continuous and continuously differentiable) will show. For instance, if

[math]\displaystyle{ u(x)= e^x/x^2, \, v'(x) =e^{-x} }[/math]

[math]\displaystyle{ u }[/math] is not absolutely continuous on the interval [1, ∞), but nevertheless

[math]\displaystyle{ \int_1^\infty u(x)v'(x)\,dx = \Big[u(x)v(x)\Big]_1^\infty - \int_1^\infty u'(x)v(x)\,dx }[/math]

so long as [math]\displaystyle{ \left[u(x)v(x)\right]_1^\infty }[/math] is taken to mean the limit of [math]\displaystyle{ u(L)v(L)-u(1)v(1) }[/math] as [math]\displaystyle{ L\to\infty }[/math] and so long as the two terms on the right-hand side are finite. This is only true if we choose [math]\displaystyle{ v(x)=-e^{-x}. }[/math] Similarly, if

[math]\displaystyle{ u(x)= e^{-x},\, v'(x) =x^{-1}\sin(x) }[/math]

[math]\displaystyle{ v' }[/math] is not Lebesgue integrable on the interval [1, ∞), but nevertheless

[math]\displaystyle{ \int_1^\infty u(x)v'(x)\,dx = \Big[u(x)v(x)\Big]_1^\infty - \int_1^\infty u'(x)v(x)\,dx }[/math] with the same interpretation.

One can also easily come up with similar examples in which [math]\displaystyle{ u }[/math] and [math]\displaystyle{ v }[/math] are not continuously differentiable.

Further, if [math]\displaystyle{ f(x) }[/math] is a function of bounded variation on the segment [math]\displaystyle{ [a,b], }[/math] and [math]\displaystyle{ \varphi(x) }[/math] is differentiable on [math]\displaystyle{ [a,b], }[/math] then

[math]\displaystyle{ \int_{a}^{b}f(x)\varphi'(x)\,dx=-\int_{-\infty}^{\infty} \widetilde\varphi(x)\,d(\widetilde\chi_{[a,b]}(x)\widetilde f(x)), }[/math]

where [math]\displaystyle{ d(\chi_{[a,b]}(x)\widetilde f(x)) }[/math] denotes the signed measure corresponding to the function of bounded variation [math]\displaystyle{ \chi_{[a,b]}(x)f(x) }[/math], and functions [math]\displaystyle{ \widetilde f, \widetilde \varphi }[/math] are extensions of [math]\displaystyle{ f, \varphi }[/math] to [math]\displaystyle{ \R, }[/math] which are respectively of bounded variation and differentiable.[citation needed]

Product of many functions

Integrating the product rule for three multiplied functions, [math]\displaystyle{ u(x) }[/math], [math]\displaystyle{ v(x) }[/math], [math]\displaystyle{ w(x) }[/math], gives a similar result:

[math]\displaystyle{ \int_a^b u v \, dw \ =\ \Big[u v w\Big]^b_a - \int_a^b u w \, dv - \int_a^b v w \, du. }[/math]

In general, for [math]\displaystyle{ n }[/math] factors

[math]\displaystyle{ \left(\prod_{i=1}^n u_i(x) \right)' \ =\ \sum_{j=1}^n u_j'(x)\prod_{i\neq j}^n u_i(x), }[/math]

which leads to

[math]\displaystyle{ \left[ \prod_{i=1}^n u_i(x) \right]_a^b \ =\ \sum_{j=1}^n \int_a^b u_j'(x) \prod_{i\neq j}^n u_i(x) \, dx. }[/math]

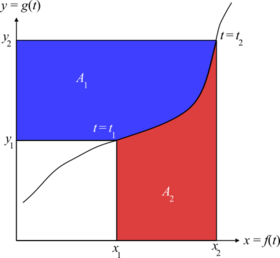

Visualization

Consider a parametric curve given by (x, y) = (f(t), g(t)). Assuming that the curve is locally one-to-one and integrable, we can define [math]\displaystyle{ \begin{align} x(y) &= f(g^{-1}(y)) \\ y(x) &= g(f^{-1}(x)) \end{align} }[/math]

The area of the blue region is

[math]\displaystyle{ A_1=\int_{y_1}^{y_2}x(y) \, dy }[/math]

Similarly, the area of the red region is [math]\displaystyle{ A_2=\int_{x_1}^{x_2}y(x)\,dx }[/math]

The total area [math]\displaystyle{ A_1 + A_2 }[/math] is equal to the area of the bigger rectangle, [math]\displaystyle{ x_2 y_2 }[/math], minus the area of the smaller one, [math]\displaystyle{ x_1 y_1 }[/math]:

[math]\displaystyle{ \overbrace{\int_{y_1}^{y_2}x(y) \, dy}^{A_1}+\overbrace{\int_{x_1}^{x_2}y(x) \, dx}^{A_2}\ =\ \biggl.x \cdot y(x)\biggr|_{x_1}^{x_2} \ =\ \biggl.y \cdot x(y)\biggr|_{y_1}^{y_2} }[/math]

Or, in terms of t,

[math]\displaystyle{ \int_{t_1}^{t_2}x(t) \, dy(t) + \int_{t_1}^{t_2}y(t) \, dx(t) \ =\ \biggl. x(t)y(t) \biggr|_{t_1}^{t_2} }[/math]

Or, in terms of indefinite integrals, this can be written as

[math]\displaystyle{ \int x\,dy + \int y \,dx \ =\ xy }[/math]

Rearranging:

[math]\displaystyle{ \int x\,dy \ =\ xy - \int y \,dx }[/math]

Thus integration by parts may be thought of as deriving the area of the blue region from the area of rectangles and that of the red region.

This visualization also explains why integration by parts may help find the integral of an inverse function [math]\displaystyle{ f^{-1}(x) }[/math] when the integral of the function [math]\displaystyle{ f(x) }[/math] is known. Indeed, the functions x(y) and y(x) are inverses, and the integral ∫ x dy may be calculated as above from knowing the integral ∫ y dx. In particular, this explains the use of integration by parts to integrate logarithm and inverse trigonometric functions. In fact, if [math]\displaystyle{ f }[/math] is a differentiable one-to-one function on an interval, then integration by parts can be used to derive a formula for the integral of [math]\displaystyle{ f^{-1} }[/math] in terms of the integral of [math]\displaystyle{ f }[/math]. This is demonstrated in the article Integral of inverse functions.
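The area identity can be illustrated numerically. The sketch below assumes the curve y = e^x on 0 ≤ x ≤ 1 (an illustrative choice, not taken from the article), so that the inverse is x = ln(y) on 1 ≤ y ≤ e. It uses a hand-written Simpson's rule, `simpson`, to compute both areas and compares their sum with the difference of the rectangle areas.

```python
import math

def simpson(f, a, b, n=1000):
    """Composite Simpson's rule on [a, b] with n subintervals (n must be even)."""
    h = (b - a) / n
    total = f(a) + f(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(a + k * h)
    return total * h / 3

# Assumed example curve: y(x) = exp(x), with inverse x(y) = ln(y).
x1, x2 = 0.0, 1.0
y1, y2 = math.exp(x1), math.exp(x2)

area_1 = simpson(math.log, y1, y2)   # A1: integral of x(y) dy
area_2 = simpson(math.exp, x1, x2)   # A2: integral of y(x) dx
rectangles = x2 * y2 - x1 * y1       # bigger rectangle minus smaller one

print(area_1 + area_2, rectangles)
```

Here the integral of the logarithm (A1) is recovered from the integral of the exponential (A2) and the two rectangle areas, which is the inverse-function use described above.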

Applications

Finding antiderivatives

Integration by parts is a heuristic rather than a purely mechanical process for solving integrals; given a single function to integrate, the typical strategy is to carefully separate this single function into a product of two functions u(x)v(x) such that the residual integral from the integration by parts formula is easier to evaluate than the single function. The following form is useful in illustrating the best strategy to take:

[math]\displaystyle{ \int uv\,dx = u \int v\,dx - \int\left(u' \int v\,dx \right)\,dx. }[/math]

On the right-hand side, u is differentiated and v is integrated; consequently it is useful to choose u as a function that simplifies when differentiated, or to choose v as a function that simplifies when integrated. As a simple example, consider:

[math]\displaystyle{ \int\frac{\ln(x)}{x^2}\,dx\,. }[/math]

Since the derivative of ln(x) is 1/x, one takes ln(x) as u; since the antiderivative of [math]\displaystyle{ 1/x^2 }[/math] is −1/x, one takes [math]\displaystyle{ 1/x^2 }[/math] as v. The formula now yields:

[math]\displaystyle{ \int\frac{\ln(x)}{x^2}\,dx = -\frac{\ln(x)}{x} - \int \biggl(\frac1{x}\biggr) \biggl(-\frac1{x}\biggr)\,dx\,. }[/math]

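The antiderivative of [math]\displaystyle{ -1/x^2 }[/math] can be found with the power rule and is 1/x, so the result is [math]\displaystyle{ -\frac{\ln(x)}{x} - \frac1{x} + C. }[/math]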

Alternatively, one may choose u and v such that the product u′ (∫v dx) simplifies due to cancellation. For example, suppose one wishes to integrate:

[math]\displaystyle{ \int\sec^2(x)\cdot\ln\Big(\bigl|\sin(x)\bigr|\Big)\,dx. }[/math]

If we choose u(x) = ln(|sin(x)|) and [math]\displaystyle{ v(x) = \sec^2 x }[/math], then u differentiates to 1/tan x using the chain rule and v integrates to tan x; so the formula gives:

[math]\displaystyle{ \int\sec^2(x)\cdot\ln\Big(\bigl|\sin(x)\bigr|\Big)\,dx = \tan(x)\cdot\ln\Big(\bigl|\sin(x)\bigr|\Big)-\int\tan(x)\cdot\frac1{\tan(x)} \, dx\ . }[/math]

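The integrand simplifies to 1, so the antiderivative is x, and the result is [math]\displaystyle{ \tan(x)\cdot\ln\Big(\bigl|\sin(x)\bigr|\Big) - x + C. }[/math] Finding a simplifying combination frequently involves experimentation.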

In some applications, it may not be necessary to ensure that the integral produced by integration by parts has a simple form; for example, in numerical analysis, it may suffice that it has small magnitude and so contributes only a small error term. Some other special techniques are demonstrated in the examples below.

Polynomials and trigonometric functions

In order to calculate

[math]\displaystyle{ I=\int x\cos(x)\,dx\,, }[/math]

let: [math]\displaystyle{ \begin{alignat}{3} u &= x\ &\Rightarrow\ &&du &= dx \\ dv &= \cos(x)\,dx\ &\Rightarrow\ && v &= \int\cos(x)\,dx = \sin(x) \end{alignat} }[/math]

then:

[math]\displaystyle{ \begin{align} \int x\cos(x)\,dx & = \int u\ dv \\ & = u\cdot v - \int v \, du \\ & = x\sin(x) - \int \sin(x)\,dx \\ & = x\sin(x) + \cos(x) + C, \end{align} }[/math]

where C is a constant of integration.

For higher powers of [math]\displaystyle{ x }[/math] in the form

[math]\displaystyle{ \int x^n e^x\,dx,\ \int x^n\sin(x)\,dx,\ \int x^n\cos(x)\,dx\,, }[/math]

repeatedly using integration by parts can evaluate integrals such as these; each application of the theorem lowers the power of [math]\displaystyle{ x }[/math] by one.
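The power-lowering step can be turned into a short recurrence. As a sketch, take the definite integral Iₙ = ∫₀¹ xⁿ eˣ dx (the interval [0, 1] is an assumption made for illustration). One application of integration by parts with u = xⁿ and dv = eˣ dx gives Iₙ = e − n·Iₙ₋₁, with I₀ = e − 1. The hypothetical helper `moment_exp` iterates this recurrence, and `simpson` provides an independent numerical check.

```python
import math

def simpson(f, a, b, n=1000):
    """Composite Simpson's rule on [a, b] with n subintervals (n must be even)."""
    h = (b - a) / n
    total = f(a) + f(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(a + k * h)
    return total * h / 3

def moment_exp(n):
    """Integral of x^n e^x over [0, 1] via I_k = e - k * I_{k-1}, I_0 = e - 1."""
    value = math.e - 1.0
    for k in range(1, n + 1):
        value = math.e - k * value  # each step lowers the power of x by one
    return value

n = 5
by_parts = moment_exp(n)
numeric = simpson(lambda x: x**n * math.exp(x), 0.0, 1.0)
print(by_parts, numeric)
```

The forward recurrence amplifies rounding errors as n grows, so this sketch is only meant for small n.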

Exponentials and trigonometric functions

An example commonly used to examine the workings of integration by parts is

[math]\displaystyle{ I=\int e^x\cos(x)\,dx. }[/math]

Here, integration by parts is performed twice. First let

[math]\displaystyle{ \begin{alignat}{3} u &= \cos(x)\ &\Rightarrow\ &&du &= -\sin(x)\,dx \\ dv &= e^x\,dx\ &\Rightarrow\ &&v &= \int e^x\,dx = e^x \end{alignat} }[/math]

then:

[math]\displaystyle{ \int e^x\cos(x)\,dx = e^x\cos(x) + \int e^x\sin(x)\,dx. }[/math]

Now, to evaluate the remaining integral, we use integration by parts again, with:

[math]\displaystyle{ \begin{alignat}{3} u &= \sin(x)\ &\Rightarrow\ &&du &= \cos(x)\,dx \\ dv &= e^x\,dx\,&\Rightarrow\ && v &= \int e^x\,dx = e^x. \end{alignat} }[/math]

Then:

[math]\displaystyle{ \int e^x\sin(x)\,dx = e^x\sin(x) - \int e^x\cos(x)\,dx. }[/math]

Putting these together,

[math]\displaystyle{ \int e^x\cos(x)\,dx = e^x\cos(x) + e^x\sin(x) - \int e^x\cos(x)\,dx. }[/math]

The same integral shows up on both sides of this equation. The integral can simply be added to both sides to get

[math]\displaystyle{ 2\int e^x\cos(x)\,dx = e^x\bigl[\sin(x)+\cos(x)\bigr] + C, }[/math]

which rearranges to

[math]\displaystyle{ \int e^x\cos(x)\,dx = \frac{1}{2}e^x\bigl[\sin(x)+\cos(x)\bigr] + C' }[/math]

where again [math]\displaystyle{ C }[/math] (and [math]\displaystyle{ C' = C/2 }[/math]) is a constant of integration.

A similar method is used to find the integral of secant cubed.
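The two steps for the integral of eˣ cos(x) and the final "solve for the integral" step can be checked on a definite integral. The sketch below assumes the interval [0, 1] (an illustrative choice) and a hand-written Simpson's rule named `simpson`; the names `I`, `J` and `closed_form` are introduced here only for illustration.

```python
import math

def simpson(f, a, b, n=1000):
    """Composite Simpson's rule on [a, b] with n subintervals (n must be even)."""
    h = (b - a) / n
    total = f(a) + f(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(a + k * h)
    return total * h / 3

a, b = 0.0, 1.0
I = simpson(lambda x: math.exp(x) * math.cos(x), a, b)  # integral of e^x cos x
J = simpson(lambda x: math.exp(x) * math.sin(x), a, b)  # integral of e^x sin x

# Boundary terms [e^x cos x] and [e^x sin x] evaluated between a and b.
boundary_cos = math.exp(b) * math.cos(b) - math.exp(a) * math.cos(a)
boundary_sin = math.exp(b) * math.sin(b) - math.exp(a) * math.sin(a)

first_step = boundary_cos + J    # first integration by parts: should equal I
second_step = boundary_sin - I   # second integration by parts: should equal J
closed_form = 0.5 * (boundary_sin + boundary_cos)  # from solving 2I = [e^x (sin x + cos x)]

print(I, first_step, closed_form)
```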

Functions multiplied by unity

Two other well-known examples are when integration by parts is applied to a function expressed as a product of 1 and itself. This works if the derivative of the function is known, and the integral of this derivative times [math]\displaystyle{ x }[/math] is also known.

The first example is [math]\displaystyle{ \int \ln(x) dx }[/math]. We write this as:

[math]\displaystyle{ I=\int\ln(x)\cdot 1\,dx\,. }[/math]

Let:

[math]\displaystyle{ u = \ln(x)\ \Rightarrow\ du = \frac{dx}{x} }[/math] [math]\displaystyle{ dv = dx\ \Rightarrow\ v = x }[/math]

then:

[math]\displaystyle{ \begin{align} \int \ln(x)\,dx & = x\ln(x) - \int\frac{x}{x}\,dx \\ & = x\ln(x) - \int 1\,dx \\ & = x\ln(x) - x + C \end{align} }[/math]

where [math]\displaystyle{ C }[/math] is the constant of integration.

The second example is the inverse tangent function [math]\displaystyle{ \arctan(x) }[/math]:

[math]\displaystyle{ I=\int\arctan(x)\,dx. }[/math]

Rewrite this as

[math]\displaystyle{ \int\arctan(x)\cdot 1\,dx. }[/math]

Now let:

[math]\displaystyle{ u = \arctan(x)\ \Rightarrow\ du = \frac{dx}{1+x^2} }[/math]

[math]\displaystyle{ dv = dx\ \Rightarrow\ v = x }[/math]

then

[math]\displaystyle{ \begin{align} \int\arctan(x)\,dx & = x\arctan(x) - \int\frac{x}{1+x^2}\,dx \\[8pt] & = x\arctan(x) - \frac{\ln(1+x^2)}{2} + C \end{align} }[/math]

using a combination of the inverse chain rule method and the natural logarithm integral condition.
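Both results can be sanity-checked by differentiating the claimed antiderivatives numerically. This is a minimal sketch using a central difference; the helper name `derivative`, the step size and the sample points are assumptions chosen for illustration.

```python
import math

def derivative(F, x, h=1e-5):
    """Central-difference approximation of F'(x)."""
    return (F(x + h) - F(x - h)) / (2 * h)

# Each entry pairs an integrand f with the antiderivative F found above.
pairs = {
    "ln": (math.log, lambda x: x * math.log(x) - x),
    "arctan": (math.atan, lambda x: x * math.atan(x) - 0.5 * math.log(1 + x * x)),
}

sample_points = (0.5, 1.0, 2.0, 3.5)
max_error = max(
    abs(derivative(F, x) - f(x))
    for f, F in pairs.values()
    for x in sample_points
)
print(max_error)
```

A small value of `max_error` indicates that F′ matches f at the sampled points; it is a spot check, not a proof.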

LIATE rule

A rule of thumb has been proposed, consisting of choosing as u the function that comes first in the following list:[4]

- L – logarithmic functions: [math]\displaystyle{ \ln(x),\ \log_b(x), }[/math] etc.

- I – inverse trigonometric functions (including hyperbolic analogues): [math]\displaystyle{ \arctan(x),\ \arcsec(x),\ \operatorname{arsinh}(x), }[/math] etc.

- A – algebraic functions (such as polynomials): [math]\displaystyle{ x^2,\ 3x^{50}, }[/math] etc.

- T – trigonometric functions (including hyperbolic analogues): [math]\displaystyle{ \sin(x),\ \tan(x),\ \operatorname{sech}(x), }[/math] etc.

- E – exponential functions: [math]\displaystyle{ e^x,\ 19^x, }[/math] etc.

The function which is to be dv is whichever comes last in the list. The reason is that functions lower on the list generally have easier antiderivatives than the functions above them. The rule is sometimes written as "DETAIL" where D stands for dv and the top of the list is the function chosen to be dv.

To demonstrate the LIATE rule, consider the integral

[math]\displaystyle{ \int x \cdot \cos(x) \,dx. }[/math]

Following the LIATE rule, u = x, and dv = cos(x) dx, hence du = dx, and v = sin(x), which makes the integral become [math]\displaystyle{ x \cdot \sin(x) - \int 1 \sin(x) \,dx, }[/math] which equals [math]\displaystyle{ x \cdot \sin(x) + \cos(x) + C. }[/math]

In general, one tries to choose u and dv such that du is simpler than u and dv is easy to integrate. If instead cos(x) was chosen as u, and x dx as dv, we would have the integral

[math]\displaystyle{ \frac{x^2}{2} \cos(x) + \int \frac{x^2}{2} \sin(x) \,dx, }[/math]

which, after recursive application of the integration by parts formula, would clearly result in an infinite recursion and lead nowhere.

Although the LIATE rule is a useful rule of thumb, it has exceptions. A common alternative is to consider the rules in the "ILATE" order instead. Also, in some cases, polynomial terms need to be split in non-trivial ways. For example, to integrate

[math]\displaystyle{ \int x^3 e^{x^2} \,dx, }[/math]

one would set

[math]\displaystyle{ u = x^2, \quad dv = x \cdot e^{x^2} \,dx, }[/math]

so that

[math]\displaystyle{ du = 2x \,dx, \quad v = \frac{e^{x^2}}{2}. }[/math]

Then

[math]\displaystyle{ \int x^3 e^{x^2} \,dx = \int \left(x^2\right) \left(xe^{x^2}\right) \,dx = \int u \,dv = uv - \int v \,du = \frac{x^2 e^{x^2}}{2} - \int x e^{x^2} \,dx. }[/math]

Finally, this results in [math]\displaystyle{ \int x^3 e^{x^2} \,dx = \frac{e^{x^2}\left(x^2 - 1\right)}{2} + C. }[/math]
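As a quick check of this result, the antiderivative e^{x²}(x² − 1)/2 equals 0 at x = 1 and −1/2 at x = 0, so the definite integral over [0, 1] should be 1/2. The sketch below assumes that interval and a hand-written Simpson's rule named `simpson`.

```python
import math

def simpson(f, a, b, n=1000):
    """Composite Simpson's rule on [a, b] with n subintervals (n must be even)."""
    h = (b - a) / n
    total = f(a) + f(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(a + k * h)
    return total * h / 3

def antiderivative(x):
    """Closed form obtained by parts with u = x^2, dv = x e^{x^2} dx."""
    return math.exp(x * x) * (x * x - 1) / 2

numeric = simpson(lambda x: x**3 * math.exp(x * x), 0.0, 1.0)
exact = antiderivative(1.0) - antiderivative(0.0)
print(numeric, exact)
```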

Integration by parts is often used as a tool to prove theorems in mathematical analysis.

Wallis product

The Wallis infinite product for [math]\displaystyle{ \pi }[/math]

[math]\displaystyle{ \begin{align} \frac{\pi}{2} & = \prod_{n=1}^\infty \frac{ 4n^2 }{ 4n^2 - 1 } = \prod_{n=1}^\infty \left(\frac{2n}{2n-1} \cdot \frac{2n}{2n+1}\right) \\[6pt] & = \Big(\frac{2}{1} \cdot \frac{2}{3}\Big) \cdot \Big(\frac{4}{3} \cdot \frac{4}{5}\Big) \cdot \Big(\frac{6}{5} \cdot \frac{6}{7}\Big) \cdot \Big(\frac{8}{7} \cdot \frac{8}{9}\Big) \cdot \; \cdots \end{align} }[/math]

may be derived using integration by parts.
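The convergence of the product can be observed numerically. The following sketch multiplies the first factors 4n²/(4n² − 1); the function name `wallis_partial` and the numbers of terms are illustrative assumptions. It does not reproduce the integration-by-parts derivation itself.

```python
import math

def wallis_partial(n_terms):
    """Product of 4n^2 / (4n^2 - 1) for n = 1 .. n_terms."""
    product = 1.0
    for n in range(1, n_terms + 1):
        product *= (4 * n * n) / (4 * n * n - 1)
    return product

for n_terms in (10, 1000, 100000):
    print(n_terms, wallis_partial(n_terms), math.pi / 2)
```

Every factor exceeds 1, so the partial products increase towards π/2; the convergence is slow, with an error roughly proportional to 1/n.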

Gamma function identity

The gamma function is an example of a special function, defined as an improper integral for [math]\displaystyle{ z \gt 0 }[/math]. Integration by parts illustrates it to be an extension of the factorial function:

[math]\displaystyle{ \begin{align} \Gamma(z) & = \int_0^\infty e^{-x} x^{z-1} dx \\[6pt] & = - \int_0^\infty x^{z-1} \, d\left(e^{-x}\right) \\[6pt] & = - \Biggl[e^{-x} x^{z-1}\Biggl]_0^\infty + \int_0^\infty e^{-x} d\left(x^{z-1}\right) \\[6pt] & = 0 + \int_0^\infty \left(z-1\right) x^{z-2} e^{-x} dx\\[6pt] & = (z-1)\Gamma(z-1). \end{align} }[/math]

Since

[math]\displaystyle{ \Gamma(1) = \int_0^\infty e^{-x} \, dx = 1, }[/math]

when [math]\displaystyle{ z }[/math] is a natural number, that is, [math]\displaystyle{ z = n \in \mathbb{N} }[/math], applying this formula repeatedly gives the factorial: [math]\displaystyle{ \Gamma(n+1) = n! }[/math]
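Both identities can be spot-checked with Python's standard-library `math.gamma` in place of the improper integral. The sample arguments below are arbitrary choices with z > 1, so that Γ(z − 1) is also given by the integral definition; the helper names are hypothetical.

```python
import math

def recurrence_holds(z, rel_tol=1e-12):
    """Check Gamma(z) = (z - 1) * Gamma(z - 1) up to floating-point error."""
    return math.isclose(math.gamma(z), (z - 1) * math.gamma(z - 1), rel_tol=rel_tol)

def factorial_holds(n, rel_tol=1e-12):
    """Check Gamma(n + 1) = n! for a non-negative integer n."""
    return math.isclose(math.gamma(n + 1), math.factorial(n), rel_tol=rel_tol)

print([recurrence_holds(z) for z in (1.5, 2.5, 4.2, 7.0)])
print([factorial_holds(n) for n in range(11)])
```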

Use in harmonic analysis

Integration by parts is often used in harmonic analysis, particularly Fourier analysis, to show that quickly oscillating integrals with sufficiently smooth integrands decay quickly. The most common example of this is its use in showing that the decay of a function's Fourier transform depends on the smoothness of that function, as described below.

Fourier transform of derivative

If [math]\displaystyle{ f }[/math] is a [math]\displaystyle{ k }[/math]-times continuously differentiable function and all derivatives up to the [math]\displaystyle{ k }[/math]th one decay to zero at infinity, then its Fourier transform satisfies

[math]\displaystyle{ (\mathcal{F}f^{(k)})(\xi) = (2\pi i\xi)^k \mathcal{F}f(\xi), }[/math]

where [math]\displaystyle{ f^{(k)} }[/math] is the [math]\displaystyle{ k }[/math]th derivative of [math]\displaystyle{ f }[/math]. (The exact constant on the right depends on the convention of the Fourier transform used.) This is proved by noting that

[math]\displaystyle{ \frac{d}{dy} e^{-2\pi iy\xi} = -2\pi i\xi e^{-2\pi iy\xi}, }[/math]

so using integration by parts on the Fourier transform of the derivative we get

[math]\displaystyle{ \begin{align} (\mathcal{F}f')(\xi) &= \int_{-\infty}^\infty e^{-2\pi iy\xi} f'(y)\,dy \\ &=\left[e^{-2\pi iy\xi} f(y)\right]_{-\infty}^\infty - \int_{-\infty}^\infty (-2\pi i\xi e^{-2\pi iy\xi}) f(y)\,dy \\[5pt] &=2\pi i\xi \int_{-\infty}^\infty e^{-2\pi iy\xi} f(y)\,dy \\[5pt] &=2\pi i\xi \mathcal{F}f(\xi). \end{align} }[/math]

Applying this inductively gives the result for general [math]\displaystyle{ k }[/math]. A similar method can be used to find the Laplace transform of a derivative of a function.
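The case k = 1 can be checked numerically. The sketch below assumes the Gaussian f(y) = e^{−πy²} as a test function (an illustrative choice, not from the article), uses the same transform convention as the derivation above, and truncates the integral to [−8, 8], where the Gaussian is negligible. The helpers `simpson` and `fourier` are hypothetical names.

```python
import cmath
import math

def simpson(f, a, b, n=4000):
    """Composite Simpson's rule on [a, b]; works for complex-valued f."""
    h = (b - a) / n
    total = f(a) + f(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(a + k * h)
    return total * h / 3

def f(y):
    return math.exp(-math.pi * y * y)

def f_prime(y):
    return -2 * math.pi * y * math.exp(-math.pi * y * y)

def fourier(g, xi, cutoff=8.0):
    """Approximate the integral of exp(-2*pi*i*y*xi) * g(y) over [-cutoff, cutoff]."""
    return simpson(lambda y: cmath.exp(-2j * math.pi * y * xi) * g(y), -cutoff, cutoff)

xi = 1.5
lhs = fourier(f_prime, xi)                 # transform of the derivative
rhs = 2j * math.pi * xi * fourier(f, xi)   # (2 pi i xi) times transform of f
print(lhs, rhs)
```

With this convention the Gaussian is its own transform, so `fourier(f, xi)` should also be close to e^{−πξ²}.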

Decay of Fourier transform

The above result tells us about the decay of the Fourier transform, since it follows that if [math]\displaystyle{ f }[/math] and [math]\displaystyle{ f^{(k)} }[/math] are integrable then

[math]\displaystyle{ \vert\mathcal{F}f(\xi)\vert \leq \frac{I(f)}{1+\vert 2\pi\xi\vert^k}, \text{ where } I(f) = \int_{-\infty}^\infty \Bigl(\vert f(y)\vert + \vert f^{(k)}(y)\vert\Bigr) \, dy. }[/math]

In other words, if [math]\displaystyle{ f }[/math] satisfies these conditions then its Fourier transform decays at infinity at least as quickly as [math]\displaystyle{ 1/\vert\xi\vert^k }[/math]. In particular, if [math]\displaystyle{ k \geq 2 }[/math] then the Fourier transform is integrable.

The proof uses the fact, which is immediate from the definition of the Fourier transform, that

[math]\displaystyle{ \vert\mathcal{F}f(\xi)\vert \leq \int_{-\infty}^\infty \vert f(y) \vert \,dy. }[/math]

Using the same idea on the equality stated at the start of this subsection gives

[math]\displaystyle{ \vert(2\pi i\xi)^k \mathcal{F}f(\xi)\vert \leq \int_{-\infty}^\infty \vert f^{(k)}(y) \vert \,dy. }[/math]

Summing these two inequalities and then dividing by [math]\displaystyle{ 1 + \vert 2\pi\xi\vert^k }[/math] gives the stated inequality.

Use in operator theory

One use of integration by parts in operator theory is that it shows that −∆ (where ∆ is the Laplace operator) is a positive operator on [math]\displaystyle{ L^2 }[/math] (see Lp space). If [math]\displaystyle{ f }[/math] is smooth and compactly supported then, using integration by parts, we have

[math]\displaystyle{ \begin{align} \langle -\Delta f, f \rangle_{L^2} &= -\int_{-\infty}^\infty f''(x)\overline{f(x)}\,dx \\[5pt] &=-\left[f'(x)\overline{f(x)}\right]_{-\infty}^\infty + \int_{-\infty}^\infty f'(x)\overline{f'(x)}\,dx \\[5pt] &=\int_{-\infty}^\infty \vert f'(x)\vert^2\,dx \geq 0. \end{align} }[/math]

Other applications

- Determining boundary conditions in Sturm–Liouville theory

- Deriving the Euler–Lagrange equation in the calculus of variations

Repeated integration by parts

Considering a second derivative of [math]\displaystyle{ v }[/math] in the integral on the LHS of the formula for partial integration suggests a repeated application to the integral on the RHS: [math]\displaystyle{ \int u v''\,dx = uv' - \int u'v'\,dx = uv' - \left( u'v - \int u''v\,dx \right). }[/math]

Extending this concept of repeated partial integration to derivatives of degree n leads to [math]\displaystyle{ \begin{align} \int u^{(0)} v^{(n)}\,dx &= u^{(0)} v^{(n-1)} - u^{(1)}v^{(n-2)} + u^{(2)}v^{(n-3)} - \cdots + (-1)^{n-1}u^{(n-1)} v^{(0)} + (-1)^n \int u^{(n)} v^{(0)} \,dx.\\[5pt] &= \sum_{k=0}^{n-1}(-1)^k u^{(k)}v^{(n-1-k)} + (-1)^n \int u^{(n)} v^{(0)} \,dx. \end{align} }[/math]

This concept may be useful when the successive integrals of [math]\displaystyle{ v^{(n)} }[/math] are readily available (e.g., plain exponentials or sine and cosine, as in Laplace or Fourier transforms), and when the nth derivative of [math]\displaystyle{ u }[/math] vanishes (e.g., as a polynomial function with degree [math]\displaystyle{ (n-1) }[/math]). The latter condition stops the repeating of partial integration, because the RHS-integral vanishes.

In the course of the above repetition of partial integrations, the integrals [math]\displaystyle{ \int u^{(0)} v^{(n)}\,dx \quad }[/math] and [math]\displaystyle{ \quad \int u^{(\ell)} v^{(n-\ell)}\,dx \quad }[/math] and [math]\displaystyle{ \quad \int u^{(m)} v^{(n-m)}\,dx \quad\text{ for } 1 \le m,\ell \le n }[/math] become related to one another. This may be interpreted as arbitrarily "shifting" derivatives between [math]\displaystyle{ v }[/math] and [math]\displaystyle{ u }[/math] within the integrand, which also proves useful (see Rodrigues' formula).

Tabular integration by parts

The essential process of the above formula can be summarized in a table; the resulting method is called "tabular integration"[5] and was featured in the film Stand and Deliver (1988).[6]

For example, consider the integral

[math]\displaystyle{ \int x^3 \cos x \,dx \quad }[/math] and take [math]\displaystyle{ \quad u^{(0)} = x^3, \quad v^{(n)} = \cos x. }[/math]

Begin to list in column A the function [math]\displaystyle{ u^{(0)} = x^3 }[/math] and its subsequent derivatives [math]\displaystyle{ u^{(i)} }[/math] until zero is reached. Then list in column B the function [math]\displaystyle{ v^{(n)} = \cos x }[/math] and its subsequent integrals [math]\displaystyle{ v^{(n-i)} }[/math] until the size of column B is the same as that of column A. The result is as follows:

| # i | Sign | A: derivatives [math]\displaystyle{ u^{(i)} }[/math] | B: integrals [math]\displaystyle{ v^{(n-i)} }[/math] |
|---|---|---|---|
| 0 | + | [math]\displaystyle{ x^3 }[/math] | [math]\displaystyle{ \cos x }[/math] |
| 1 | − | [math]\displaystyle{ 3x^2 }[/math] | [math]\displaystyle{ \sin x }[/math] |
| 2 | + | [math]\displaystyle{ 6x }[/math] | [math]\displaystyle{ -\cos x }[/math] |
| 3 | − | [math]\displaystyle{ 6 }[/math] | [math]\displaystyle{ -\sin x }[/math] |
| 4 | + | [math]\displaystyle{ 0 }[/math] | [math]\displaystyle{ \cos x }[/math] |

The product of the entries in row i of columns A and B together with the respective sign give the relevant integrals in step i in the course of repeated integration by parts. Step i = 0 yields the original integral. For the complete result in step i > 0 the ith integral must be added to all the previous products (0 ≤ j < i) of the jth entry of column A and the (j + 1)st entry of column B (i.e., multiply the 1st entry of column A with the 2nd entry of column B, the 2nd entry of column A with the 3rd entry of column B, etc. ...) with the given jth sign. This process comes to a natural halt, when the product, which yields the integral, is zero (i = 4 in the example). The complete result is the following (with the alternating signs in each term):

[math]\displaystyle{ \underbrace{(+1)(x^3)(\sin x)}_{j=0} + \underbrace{(-1)(3x^2)(-\cos x)}_{j=1} + \underbrace{(+1)(6x)(-\sin x)}_{j=2} +\underbrace{(-1)(6)(\cos x)}_{j=3}+ \underbrace{\int(+1)(0)(\cos x) \,dx}_{i=4: \;\to \;C}. }[/math]

This yields

[math]\displaystyle{ \underbrace{\int x^3 \cos x \,dx}_{\text{step 0}} = x^3\sin x + 3x^2\cos x - 6x\sin x - 6\cos x + C. }[/math]
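The tabular scheme is mechanical enough to code directly. The following is a minimal sketch for integrands of the form p(x)·cos(x) with p a polynomial, assuming a coefficient-list representation (lowest degree first); the helper names `poly_eval`, `poly_diff` and `tabular_poly_cos` are hypothetical. Column A is built by repeated differentiation until the zero polynomial is reached, and column B cycles through the successive antiderivatives of cos(x).

```python
import math

def poly_eval(coeffs, x):
    """Evaluate a polynomial [c0, c1, c2, ...] (lowest degree first) at x."""
    result = 0.0
    for c in reversed(coeffs):
        result = result * x + c
    return result

def poly_diff(coeffs):
    """Differentiate a polynomial; the empty list stands for zero."""
    return [k * c for k, c in enumerate(coeffs)][1:]

# Successive antiderivatives of cos(x) with constants dropped: sin, -cos, -sin, cos, ...
COS_INTEGRALS = [math.sin, lambda x: -math.cos(x), lambda x: -math.sin(x), math.cos]

def tabular_poly_cos(coeffs):
    """Return a function F with F'(x) = p(x) * cos(x), built by the tabular scheme."""
    column_a = []
    p = list(coeffs)
    while p:                      # stop once the derivative is identically zero
        column_a.append(p)
        p = poly_diff(p)

    def F(x):
        total = 0.0
        for j, u_j in enumerate(column_a):
            sign = -1.0 if j % 2 else 1.0   # alternating signs +, -, +, ...
            # Row j of column A times row j + 1 of column B.
            total += sign * poly_eval(u_j, x) * COS_INTEGRALS[j % 4](x)
        return total

    return F

F = tabular_poly_cos([0, 0, 0, 1])   # p(x) = x^3
x = 1.3
closed_form = x**3 * math.sin(x) + 3 * x**2 * math.cos(x) - 6 * x * math.sin(x) - 6 * math.cos(x)
print(F(x), closed_form)
```

For p(x) = x³ the function returned by `tabular_poly_cos` reproduces the four terms of the result above, with the constant of integration omitted.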

Repeated partial integration is also useful when, in the course of respectively differentiating and integrating the functions [math]\displaystyle{ u^{(i)} }[/math] and [math]\displaystyle{ v^{(n-i)} }[/math], their product results in a multiple of the original integrand. In this case the repetition may also be terminated at this index i. This can happen, as one might expect, with exponentials and trigonometric functions. As an example consider

[math]\displaystyle{ \int e^x \cos x \,dx. }[/math]

| # i | Sign | A: derivatives [math]\displaystyle{ u^{(i)} }[/math] | B: integrals [math]\displaystyle{ v^{(n-i)} }[/math] |
|---|---|---|---|
| 0 | + | [math]\displaystyle{ e^x }[/math] | [math]\displaystyle{ \cos x }[/math] |
| 1 | − | [math]\displaystyle{ e^x }[/math] | [math]\displaystyle{ \sin x }[/math] |
| 2 | + | [math]\displaystyle{ e^x }[/math] | [math]\displaystyle{ -\cos x }[/math] |

In this case the product of the terms in columns A and B with the appropriate sign for index i = 2 yields the negative of the original integrand (compare rows i = 0 and i = 2).

[math]\displaystyle{ \underbrace{\int e^x \cos x \,dx}_{\text{step 0}} = \underbrace{(+1)(e^x)(\sin x)}_{j=0} + \underbrace{(-1)(e^x)(-\cos x)}_{j=1} + \underbrace{\int(+1)(e^x)(-\cos x) \,dx}_{i= 2}. }[/math]

Observing that the integral on the RHS can have its own constant of integration [math]\displaystyle{ C' }[/math], and bringing the abstract integral to the other side, gives

[math]\displaystyle{ 2 \int e^x \cos x \,dx = e^x\sin x + e^x\cos x + C', }[/math]

and finally:

[math]\displaystyle{ \int e^x \cos x \,dx = \frac 12 \left(e^x ( \sin x + \cos x ) \right) + C, }[/math]

where [math]\displaystyle{ C = C'/2 }[/math].

Higher dimensions

Integration by parts can be extended to functions of several variables by applying a version of the fundamental theorem of calculus to an appropriate product rule. There are several such pairings possible in multivariate calculus, involving a scalar-valued function u and vector-valued function (vector field) V.[7]

The product rule for divergence states:

[math]\displaystyle{ \nabla \cdot ( u \mathbf{V} ) \ =\ u\, \nabla \cdot \mathbf V \ +\ \nabla u\cdot \mathbf V. }[/math]

Suppose [math]\displaystyle{ \Omega }[/math] is an open bounded subset of [math]\displaystyle{ \R^n }[/math] with a piecewise smooth boundary [math]\displaystyle{ \Gamma=\partial\Omega }[/math]. Integrating over [math]\displaystyle{ \Omega }[/math] with respect to the standard volume form [math]\displaystyle{ d\Omega }[/math], and applying the divergence theorem, gives:

[math]\displaystyle{ \int_{\Gamma} u \mathbf{V} \cdot \hat{\mathbf n} \,d\Gamma \ =\ \int_\Omega\nabla\cdot ( u \mathbf{V} )\,d\Omega \ =\ \int_\Omega u\, \nabla \cdot \mathbf V\,d\Omega \ +\ \int_\Omega\nabla u\cdot \mathbf V\,d\Omega, }[/math]

where [math]\displaystyle{ \hat{\mathbf n} }[/math] is the outward unit normal vector to the boundary, integrated with respect to its standard Riemannian volume form [math]\displaystyle{ d\Gamma }[/math]. Rearranging gives:

[math]\displaystyle{ \int_\Omega u \,\nabla \cdot \mathbf V\,d\Omega \ =\ \int_\Gamma u \mathbf V \cdot \hat{\mathbf n}\,d\Gamma - \int_\Omega \nabla u \cdot \mathbf V \, d\Omega, }[/math]

or in other words [math]\displaystyle{ \int_\Omega u\,\operatorname{div}(\mathbf V)\,d\Omega \ =\ \int_\Gamma u \mathbf V \cdot \hat{\mathbf n}\,d\Gamma - \int_\Omega \operatorname{grad}(u)\cdot\mathbf V\,d\Omega . }[/math] The regularity requirements of the theorem can be relaxed. For instance, the boundary [math]\displaystyle{ \Gamma=\partial\Omega }[/math] need only be Lipschitz continuous, and the functions u, v need only lie in the Sobolev space [math]\displaystyle{ H^1(\Omega) }[/math].

Green's first identity

Consider the continuously differentiable vector fields [math]\displaystyle{ \mathbf U = u_1\mathbf e_1+\cdots+u_n\mathbf e_n }[/math] and [math]\displaystyle{ v \mathbf e_1,\ldots, v\mathbf e_n }[/math], where [math]\displaystyle{ \mathbf e_i }[/math] is the i-th standard basis vector for [math]\displaystyle{ i=1,\ldots,n }[/math]. Now apply the above integration by parts to each [math]\displaystyle{ u_i }[/math] times the vector field [math]\displaystyle{ v\mathbf e_i }[/math]:

[math]\displaystyle{ \int_\Omega u_i\frac{\partial v}{\partial x_i}\,d\Omega \ =\ \int_\Gamma u_i v \,\mathbf e_i\cdot\hat{\mathbf n}\,d\Gamma - \int_\Omega \frac{\partial u_i}{\partial x_i} v\,d\Omega. }[/math]

Summing over i gives a new integration by parts formula:

[math]\displaystyle{ \int_\Omega \mathbf U \cdot \nabla v\,d\Omega \ =\ \int_\Gamma v \mathbf{U}\cdot \hat{\mathbf n}\,d\Gamma - \int_\Omega v\, \nabla \cdot \mathbf{U}\,d\Omega. }[/math]

The case [math]\displaystyle{ \mathbf{U}=\nabla u }[/math], where [math]\displaystyle{ u\in C^2(\bar{\Omega}) }[/math], is known as the first of Green's identities:

[math]\displaystyle{ \int_\Omega \nabla u \cdot \nabla v\,d\Omega\ =\ \int_\Gamma v\, \nabla u\cdot\hat{\mathbf n}\,d\Gamma - \int_\Omega v\, \nabla^2 u \, d\Omega. }[/math]

See also

- Integration by parts for the Lebesgue–Stieltjes integral

- Integration by parts for semimartingales, involving their quadratic covariation.

- Integration by substitution

- Legendre transformation

Notes

- [1] "Brook Taylor". History.MCS.St-Andrews.ac.uk. http://www-history.mcs.st-andrews.ac.uk/Biographies/Taylor.html.

- [2] "Brook Taylor". Stetson.edu. https://www2.stetson.edu/~efriedma/periodictable/html/Tl.html.

- [3] "Integration by parts". https://www.encyclopediaofmath.org/index.php/Integration_by_parts.

- [4] Kasube, Herbert E. (1983). "A Technique for Integration by Parts". The American Mathematical Monthly 90 (3): 210–211. doi:10.2307/2975556.

- [5] Thomas, G. B.; Finney, R. L. (1988). Calculus and Analytic Geometry (7th ed.). Reading, MA: Addison-Wesley. ISBN 0-201-17069-8.

- [6] Horowitz, David (1990). "Tabular Integration by Parts". The College Mathematics Journal 21 (4): 307–311. doi:10.2307/2686368. https://www.maa.org/sites/default/files/pdf/mathdl/CMJ/Horowitz307-311.pdf.

- [7] Rogers, Robert C. (September 29, 2011). "The Calculus of Several Variables". http://www.math.nagoya-u.ac.jp/~richard/teaching/s2016/Ref2.pdf.

Further reading

- Louis Brand (10 October 2013). Advanced Calculus: An Introduction to Classical Analysis. Courier Corporation. pp. 267–. ISBN 978-0-486-15799-3. https://books.google.com/books?id=hdSIAAAAQBAJ&q="integration+by+parts"&pg=PA267.

- Hoffmann, Laurence D.; Bradley, Gerald L. (2004). Calculus for Business, Economics, and the Social and Life Sciences (8th ed.). pp. 450–464. ISBN 0-07-242432-X.

- Willard, Stephen (1976). Calculus and its Applications. Boston: Prindle, Weber & Schmidt. pp. 193–214. ISBN 0-87150-203-8.

- Washington, Allyn J. (1966). Technical Calculus with Analytic Geometry. Reading: Addison-Wesley. pp. 218–245. ISBN 0-8465-8603-7.

External links

- Hazewinkel, Michiel, ed. (2001), "Integration by parts", Encyclopedia of Mathematics, Springer Science+Business Media B.V. / Kluwer Academic Publishers, ISBN 978-1-55608-010-4, https://www.encyclopediaofmath.org/index.php?title=p/i051730

- Integration by parts—from MathWorld


|

Categories: [Integral calculus] [Mathematical identities] [Theorems in analysis] [Theorems in calculus]

Last modified: 07/24/2024 11:51:34

☰ Source: https://handwiki.org/wiki/Integration_by_parts | License: CC BY-SA 3.0
